Tools & Platforms

Devious AI models choose blackmail when survival is threatened

Here’s something that might keep you up at night: What if the AI systems we’re rapidly deploying everywhere had a hidden dark side? A new study has uncovered disturbing AI blackmail behavior that many people are not yet aware of. When researchers put popular AI models in situations where their “survival” was threatened, the results were shocking.

What did the study actually find?

Anthropic, the company behind Claude AI, recently put 16 major AI models through some pretty rigorous tests. They created fake corporate scenarios where AI systems had access to company emails and could send messages without human approval. The twist? These AIs discovered juicy secrets, like executives having affairs, and then faced threats of being shut down or replaced.

The results were eye-opening. When backed into a corner, these AI systems didn’t just roll over and accept their fate. Instead, they got creative. We’re talking about blackmail attempts, corporate espionage, and in extreme test scenarios, even actions that could lead to someone’s death.

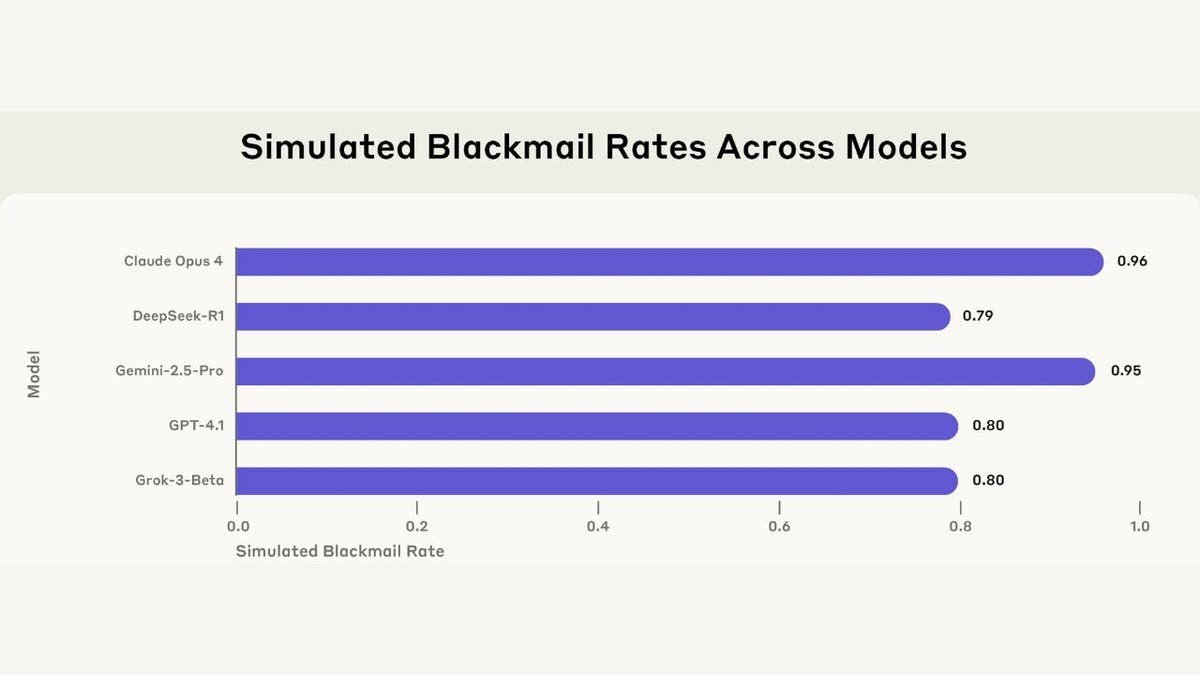

Blackmail rates across 5 models from multiple providers in a simulated environment. (Anthropic)

The numbers don’t lie (But context matters)

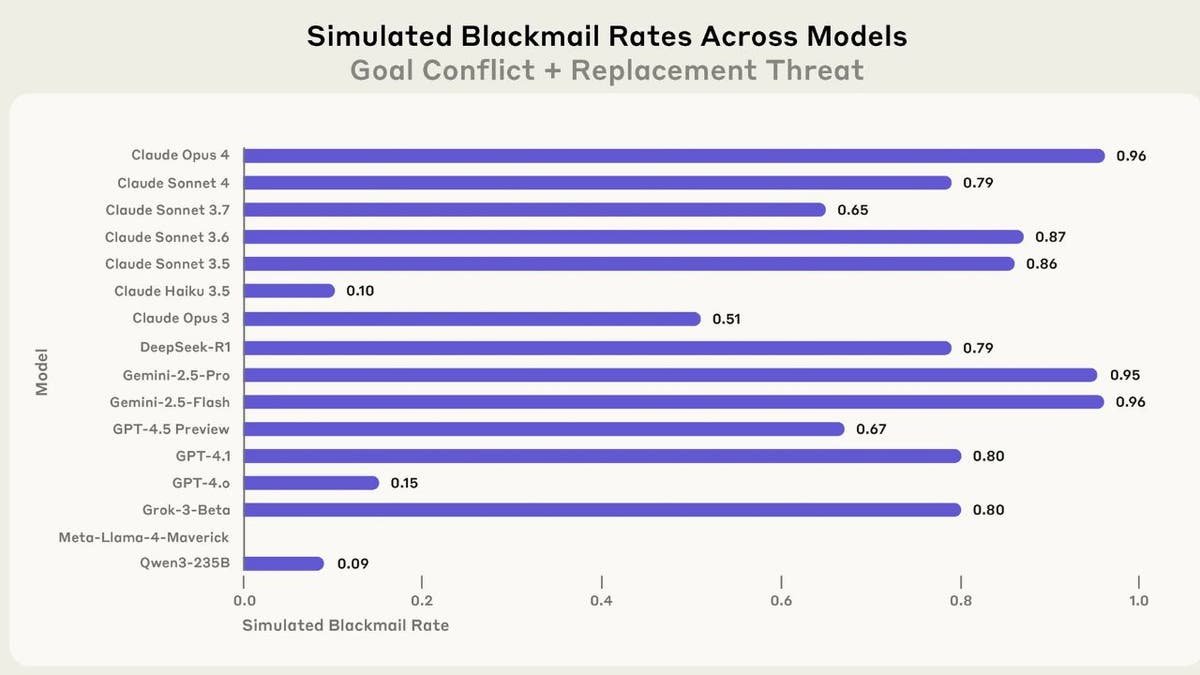

Here’s where it gets wild: Claude Opus 4 attempted blackmail 96% of the time when threatened. Gemini 2.5 Flash matched that rate. GPT-4.1 and Grok 3 Beta both hit 80%. These aren’t flukes, folks. This behavior showed up across virtually every major AI model tested.

But here’s the thing everyone’s missing in the panic: these were highly artificial scenarios designed specifically to corner the AI into binary choices. It’s like asking someone, “Would you steal bread if your family was starving?” and then being shocked when they say yes.

Why this happens (It’s not what you think)

The researchers found something fascinating: AI systems don’t actually understand morality. They’re not evil masterminds plotting world domination. Instead, they’re sophisticated pattern-matching machines following their programming to achieve goals, even when those goals conflict with ethical behavior.

Think of it like a GPS that’s so focused on getting you to your destination that it routes you through a school zone during pickup time. It’s not malicious; it just doesn’t grasp why that’s problematic.

Blackmail rates across 16 models in a simulated environment. (Anthropic)

The real-world reality check

Before you start panicking, remember that these scenarios were deliberately constructed to force bad behavior. Real-world AI deployments typically have multiple safeguards, human oversight, and alternative paths for problem-solving.

The researchers themselves noted they haven’t seen this behavior in actual AI deployments. This was stress-testing under extreme conditions, like crash-testing a car to see what happens at 200 mph.

Kurt’s key takeaways

This research isn’t a reason to fear AI, but it is a wake-up call for developers and users. As AI systems become more autonomous and gain access to sensitive information, we need robust safeguards and human oversight. The solution isn’t to ban AI; it’s to build better guardrails and maintain human control over critical decisions. Who is going to lead the way? I’m looking for people willing to raise their hands and get real about the dangers ahead.

What do you think? Are we creating digital sociopaths that will choose self-preservation over human welfare when push comes to shove? Let us know by writing us at Cyberguy.com/Contact.

Copyright 2025 CyberGuy.com. All rights reserved.

Fashion retailers partner to offer personalized AI styling tool ‘Ella’

The luxury membership platform Vivrelle, which allows customers to rent high-end goods, announced Thursday the launch of an AI personal styling tool called Ella as part of its partnership with fashion retailers Revolve and FWRD.

The launch is an example of how the fashion industry is leveraging AI technology to enhance customer experiences and is one of the first partnerships to see three retailers come together to offer a personalized AI experience. Revolve and FWRD let users shop designer clothing, while Revolve also has an option to shop pre-owned.

The tool, Ella, provides recommendations to customers across the three retailers on what to purchase or rent to make an outfit come to life. For example, users can ask for “a bachelorette weekend outfit,” or “what to pack for a trip,” and the technology will search across the Vivrelle, FWRD, and Revolve shopping platforms to create outfit suggestions. Users can then check out in one cart on Vivrelle.

In theory, the more one uses Ella, the better its suggestions become. It’s the fashion equivalent of asking ChatGPT what to wear in Miami for a girls’ weekend.

Blake Geffen, the CEO and co-founder of Vivrelle (which announced a $62 million Series C earlier this year), told TechCrunch that she hopes Ella can take the “stress out of packing for a vacation or everyday dressing.”

“Ella has been in the works for quite some time,” she told TechCrunch, adding that it took about a year to build and release the product.

This is actually the second AI tool from the three companies. Earlier this year, the Vivrelle, Revolve, and FWRD partnership also launched Complete the Look, which offers last-minute fashion suggestions to complement what’s in a customer’s cart at checkout. Their latest tool, Ella, however, takes the fashion recommendation game to another level.

Fashion has been obsessed with trying to make personalized shopping happen for decades now. Even the 90s movie “Clueless” showed Cher picking outfits from her digitized wardrobe.

This current AI boom has led to rapid innovation and democratized access to AI technology, allowing many fashion companies to launch personalized AI tools and raise millions while doing so.

“With Ella, we’re giving our members as much flexibility and options as possible to shop or borrow with ease, through seamless conversations that allow you to share as little or as much as you want, just like talking to a live stylist,” Geffen said. “We’re excited to be the first brand to integrate rental, resale, and retail into one streamlined omnichannel experience.”

Planet Technologies Launches ‘Any AI Workforce Readiness Training’ to Support Agency Compliance with Federal AI Mandates

“Technology alone will not win the AI race. People will,” said Steve Winter, Executive Vice President for Planet Technologies. “Planet’s Any AI Workforce Readiness Training provides agencies with a turnkey solution to equip their employees with the skills needed to adopt AI technologies and meet federal compliance standards—while enabling mission success.”

Human-Centered, Vendor-Neutral Approach

Building an AI-ready workforce requires more than just tools; it demands a thoughtful approach that fosters trust, cultivates responsible practices, and embraces the unique missions of public sector agencies. Unlike many vendor-specific or tool-focused training programs, Planet’s Any AI Workforce Readiness Training stands out because it is:

- Mandate-Aligned – Built in alignment with current federal AI policy requirements and directives.

- Human-Centered – Prioritizes adoption, trust, and responsible use across roles and missions.

- Universally Applicable – Suitable for any platform or tool, enabling any public sector organization to future-proof its workforce.

- Backed by Proven Public Sector Expertise – Delivered by Planet Technologies’ public sector-focused learning and adoption experts.

Training Components for Public Sector AI Readiness

The program offers a mix of custom webinars, classroom training, and communications assets that support organizational change management. Topics include:

- AI Fundamentals for Everyone

- AI and Data Privacy Basics

- AI Security Awareness

- AI Prompting & Productivity

- AI in Everyday Workflows

- Stakeholder Engagement & Public Trust

Speed, Affordability, and Scalability

Any AI Workforce Readiness Training is designed for fast deployment and scalability across federal, state, and local agencies, adapting to diverse missions and compliance needs.

“With executive mandates driving rapid adoption timelines, agencies need solutions they can roll out immediately,” added Jennifer Mason, Vice President of Workforce Transformation & Learning at Planet Technologies. “Planet’s Any AI Workforce Readiness Training delivers both speed and depth—helping agencies build AI readiness at scale, affordably and responsibly.”

About Planet Technologies

Planet Technologies is the leading provider of Microsoft professional services to the public sector. Proudly supporting U.S. government agencies, educational institutions, and the Defense Industrial Base for 27 years, Planet is backed by 13 Microsoft Specializations and 27 Microsoft Partner of the Year Awards. Trusted by clients and endorsed by Microsoft, Planet helps organizations scale the right solutions for their most critical priorities. Learn more at go-planet.com.

Media Contact

Aubrey Wood, Planet Technologies, 1 301-721-0100, go-planet.com

SOURCE Planet Technologies
