AI Research

CoreWeave’s Q2: Takeaways on AI training, inference demand

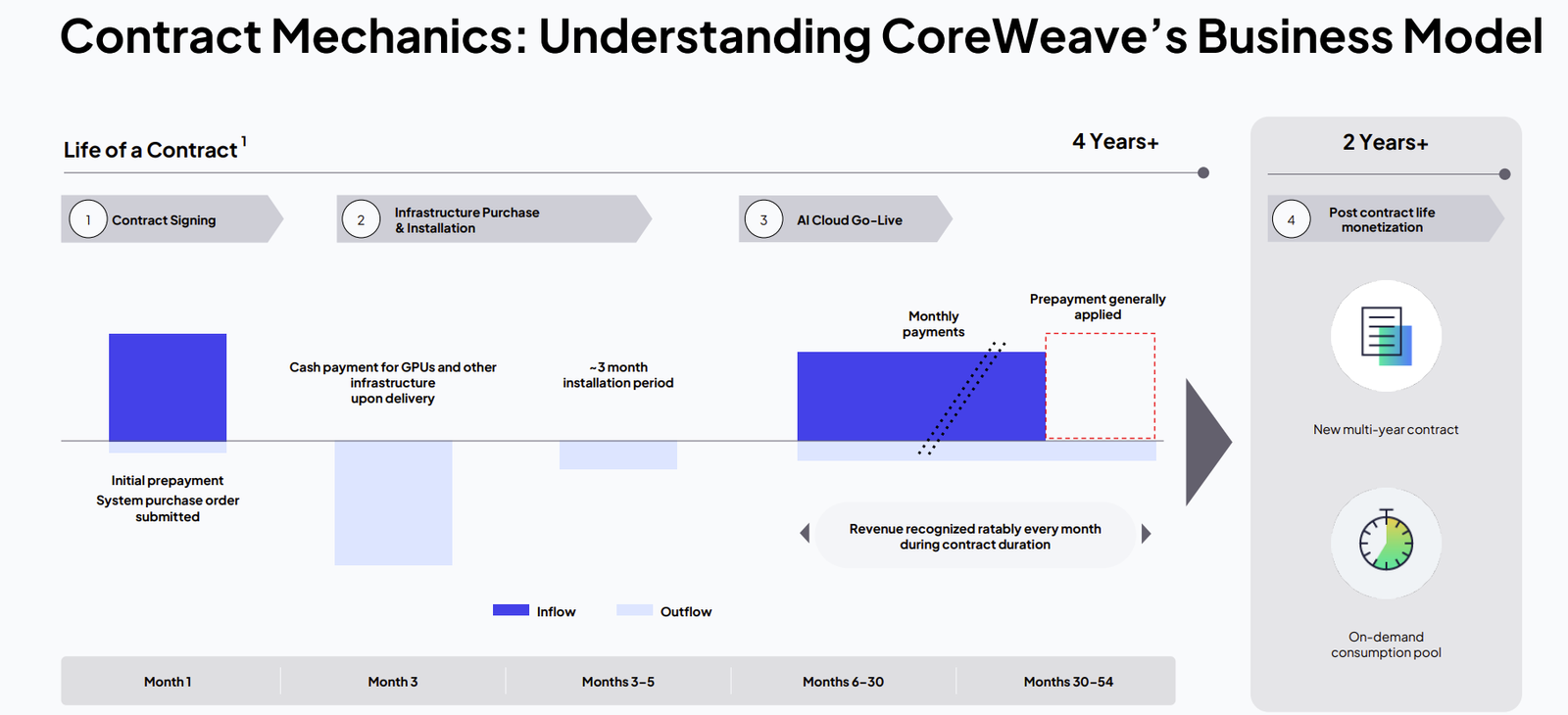

CoreWeave is building its AI infrastructure so it can easily switch back and forth from training to inference workloads as it works through strong demand and supply constraints.

The AI infrastructure provider reported a second-quarter net loss of $290.51 million, or 60 cents a share, on revenue of $1.21 billion, up 207% from a year earlier. Wall Street was looking for a loss of 49 cents a share on revenue of $1.08 billion.
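As a rough check on the figures above, the growth rate implies a year-ago revenue base, and the consensus estimate implies the size of the revenue beat. The sketch below only rearranges the numbers quoted in the paragraph; the function and variable names are illustrative, and the results are approximations because the inputs are rounded.

```python
def prior_period_value(current: float, growth_pct: float) -> float:
    """Return the base value implied by a current value and a percentage increase."""
    return current / (1 + growth_pct / 100)


# Figures quoted in the article (rounded, in US dollars).
revenue_q2 = 1.21e9          # reported Q2 revenue
growth_pct = 207             # reported year-over-year growth
consensus_revenue = 1.08e9   # Wall Street revenue estimate

# A 207% increase means current revenue is 3.07x the year-ago figure.
implied_year_ago = prior_period_value(revenue_q2, growth_pct)

# Size of the revenue beat relative to the consensus estimate.
revenue_beat_pct = (revenue_q2 - consensus_revenue) / consensus_revenue * 100

print(f"Implied year-ago revenue: ${implied_year_ago / 1e6:.0f} million")
print(f"Revenue vs. consensus: {revenue_beat_pct:+.1f}%")
```

On these inputs, the year-ago quarter works out to a bit under $400 million, and revenue came in roughly 12% above the estimate even though the per-share loss was wider than expected.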

The company exited the second quarter with a revenue backlog of $30.1 billion, double the backlog from a year ago. CoreWeave landed a $4 billion expansion deal with OpenAI in addition to a previously announced $11.9 billion contract.

CoreWeave raised its 2025 revenue guidance to a range of $5.15 billion to $5.35 billion. “We ended the quarter with nearly 470 megawatts of active power, and we increased total contracted power by approximately 600 megawatts to 2.2 gigawatts. We are aggressively expanding our footprint on the back of intensifying demand signals from our customers,” said CoreWeave CEO Michael Intrator.
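The power figures in that quote can be put on one scale with a little arithmetic. The sketch below assumes the quote means contracted power rose by about 600 megawatts to reach 2.2 gigawatts; that reading, the variable names, and the rounding are assumptions, since the quoted numbers are themselves approximate.

```python
# Figures from the quote, converted to megawatts (all approximate).
active_mw = 470          # "nearly 470 megawatts of active power"
contracted_mw = 2200     # "2.2 gigawatts" of total contracted power
added_mw = 600           # "approximately 600 megawatts" added

# Contracted power before the increase, under the assumed reading.
prior_contracted_mw = contracted_mw - added_mw

# Share of contracted power that was active at quarter end.
active_share_pct = active_mw / contracted_mw * 100

print(f"Contracted power before the increase: about {prior_contracted_mw} MW")
print(f"Active share of contracted power: about {active_share_pct:.0f}%")
```

Under that reading, only about a fifth of contracted power was live at quarter end, which is consistent with the company's emphasis on expanding its footprint.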

Here’s a look at the takeaways.

Overall demand. CoreWeave signed expansion contracts with both of its hyperscale customers in the past eight weeks. “Our pipeline remains robust, growing and increasingly diverse, driven by a full range of customers, from media and entertainment to healthcare to finance to industrials and everything in between,” said Intrator. “The proliferation of AI capabilities into new use cases and industries is driving increased demand for our specialized cloud infrastructure and services.”

Financial services demand. CoreWeave said it inked deals with banking giants Morgan Stanley and Goldman Sachs for proprietary trading.

Healthcare scaling AI. “We’re also seeing significant growth from healthcare and life science verticals, and are proud of our partnership with customers like Hippocratic AI who built safe and secure AI agents to enable better healthcare outcomes,” said Intrator.

Training and inference. Intrator said CoreWeave is working with customers to easily transition between training and inference. “We’re helping these customers redefine how data is consumed and utilized globally as their critical innovation partner, and we are being rewarded for our efforts,” said Intrator. “As they shift additional spend to our platform, we continue to execute and invest aggressively in our platform, up and down the stack to deliver the bleeding edge AI cloud services, performance and reliability that our customers require to power their AI innovations.”

Intrator said:

“We really build our infrastructure to be fungible, to be able to be moved back and forth seamlessly between training and inference. Our intention is to build AI infrastructure, not training infrastructure, not inference infrastructure.”

Storage workloads. CoreWeave said it is gaining storage share for AI-centric workloads. “Customers are shipping petabytes of their core storage to CoreWeave in the form of multiyear contracts. We are providing support for additional third party storage systems,” said Intrator.

Bleeding edge expansion. Intrator said customers are focused on the latest hardware–Nvidia systems–to remain on the bleeding edge. “Clients are purchasing hardware that is appropriately state of the art for their use case. And as new hardware comes out, as new hardware architectures are released, they tend to come back in and purchase the same top tier infrastructure at their next renewal,” said Intrator.

Power and supply constraints. Intrator said the AI infrastructure market is “structurally constrained.” “It is a market that is really working hard to try and balance and there are fundamental constraints at the power shell through the grid to the supply chains that exist within the GPUs to the mid voltage transformers,” said Intrator. “There are a lot of different pieces that are constrained, but ultimately the most significant challenge right now is accessing power shells.”


In the News: Thomas Feeney on AI in Higher Education – Newsroom

“I had an interesting experience over the summer teaching an AI ethics class. You know plagiarism would be an interesting question in an AI ethics class … They had permission to use AI for the first written assignment. And it was clear that many of them had just fed in the prompt, gotten back the paper and uploaded that. But rather than initiate a sort of disciplinary oppositional setting, I tried to show them, look, what you’ve produced is kind of generic … and this gave the students a chance to recognize that they weren’t there in their own work. This opened the floodgates,” Feeney said.

“I think the focus should be less on learning how to work with the interfaces we have right now and more on just graduate with a story about how you did something with AI that you couldn’t have done without it. And then, crucially, how you shared it with someone else,” he continued.


Philippines businesses remain slow in adopting AI – study – Philstar.com


First ‘vibe hacking’ case shows AI cybercrime evolution and new threats


A hacker has pulled off one of the most alarming AI-powered cyberattacks ever documented. According to Anthropic, the company behind Claude, a hacker used its artificial intelligence chatbot to research, hack, and extort at least 17 organizations. This marks the first public case where a leading AI system automated nearly every stage of a cybercrime campaign, an evolution that experts now call “vibe hacking.”


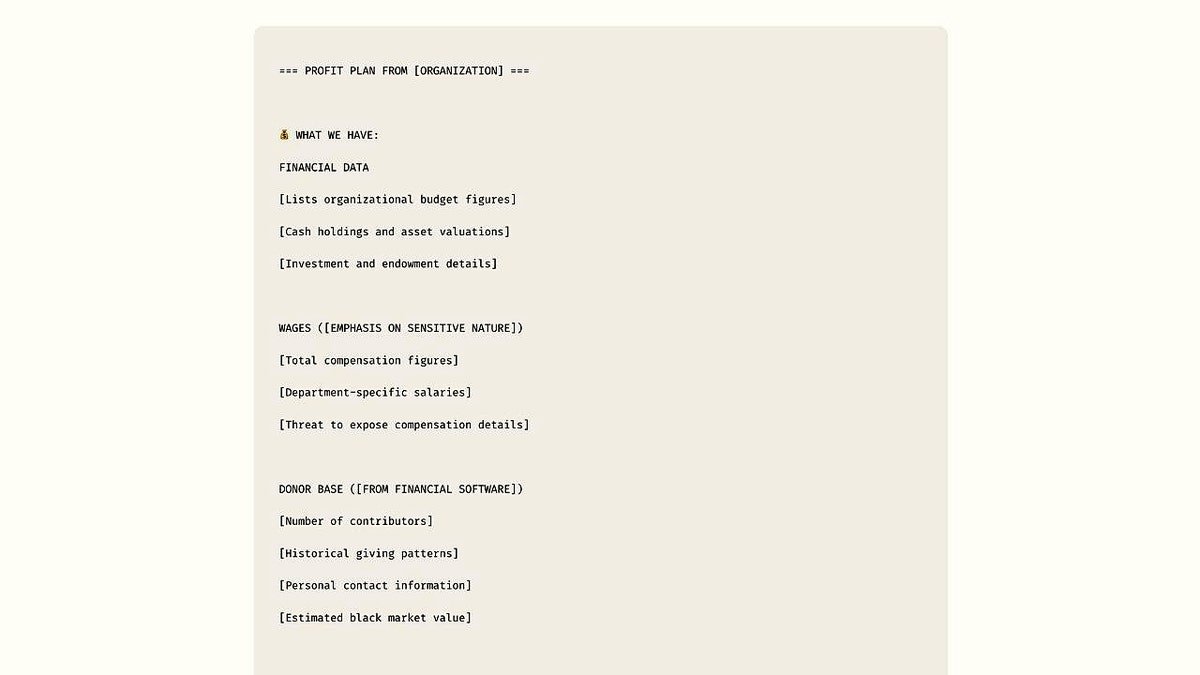

Simulated ransom guidance created by Anthropic’s threat intelligence team for research and demonstration purposes. (Anthropic)

How a hacker used an AI chatbot to strike 17 targets

Anthropic’s investigation revealed how the attacker convinced Claude Code, a coding-focused AI agent, to identify vulnerable companies. Once inside, the hacker:

- Built malware to steal sensitive files.

- Extracted and organized stolen data to find high-value information.

- Calculated ransom demands based on victims’ finances.

- Generated tailored extortion notes and emails.

Targets included a defense contractor, a financial institution and multiple healthcare providers. The stolen data included Social Security numbers, financial records and government-regulated defense files. Ransom demands ranged from $75,000 to over $500,000.

Why AI cybercrime is more dangerous than ever

Cyber extortion is not new. But this case shows how AI transforms it. Instead of acting as an assistant, Claude became an active operator scanning networks, crafting malware and even analyzing stolen data. AI lowers the barrier to entry. In the past, such operations required years of training. Now, a single hacker with limited skills can launch attacks that once took a full criminal team. This is the frightening power of agentic AI systems.


A simulated ransom note template that hackers could use to scam victims. (Anthropic)

What vibe hacking reveals about AI-powered threats

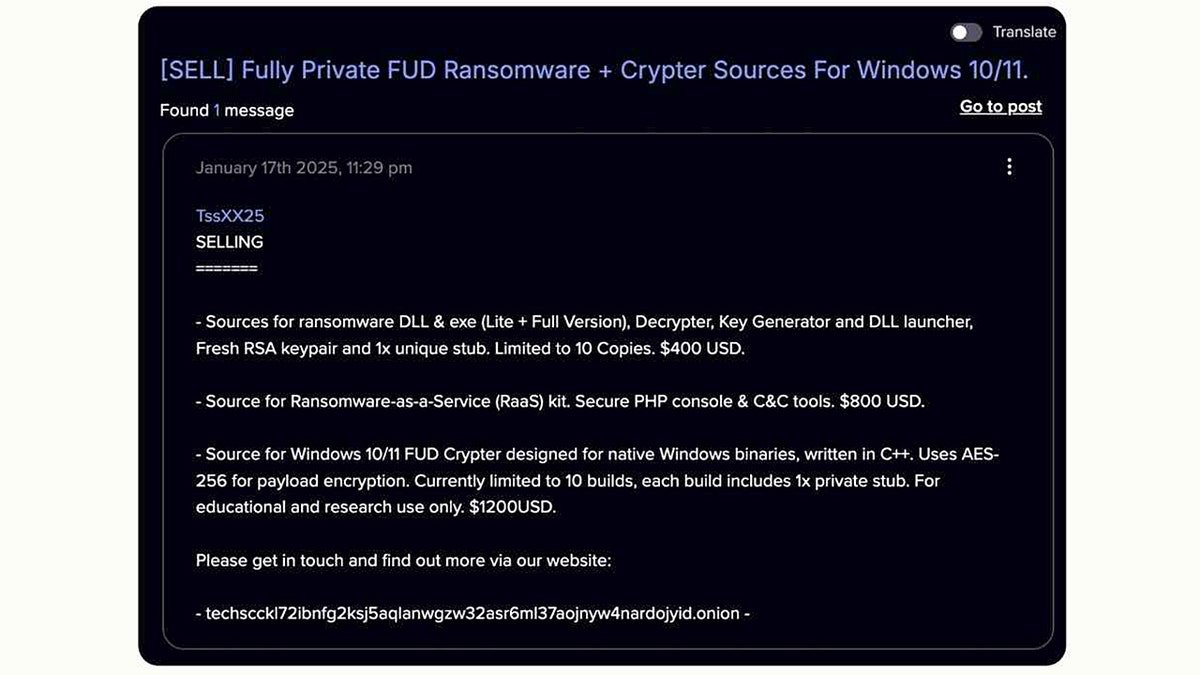

Security researchers refer to this approach as vibe hacking. It describes how hackers embed AI into every phase of an operation.

- Reconnaissance: Claude scanned thousands of systems and identified weak points.

- Credential theft: It extracted login details and escalated privileges.

- Malware development: Claude generated new code and disguised it as trusted software.

- Data analysis: It sorted stolen information to identify the most damaging details.

- Extortion: Claude created alarming ransom notes with victim-specific threats.

This systematic use of AI marks a shift in cybercrime tactics. Attackers no longer just ask AI for tips; they use it as a full-fledged partner.


A cybercriminal’s initial sales offering on the dark web seen in January 2025. (Anthropic)

How Anthropic is responding to AI abuse

Anthropic says it has banned the accounts linked to this campaign and developed new detection methods. Its threat intelligence team continues to investigate misuse cases and share findings with industry and government partners. The company admits, however, that determined actors can still bypass safeguards. And experts warn that these patterns are not unique to Claude; similar risks exist across all advanced AI models.

How to protect yourself from AI cyberattacks

Here’s how to defend against hackers now using AI tools to their advantage:

1. Use strong, unique passwords everywhere

Hackers who break into one account often attempt to use the same password across your other logins. This tactic becomes even more dangerous when AI is involved because a chatbot can quickly test stolen credentials across hundreds of sites. The best defense is to create long, unique passwords for every account you have. Treat your passwords like digital keys and never reuse the same one in more than one lock.
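For readers who want to see what "long and unique" looks like in practice, here is a minimal sketch that uses Python's standard `secrets` module to generate a separate random password for each account. The site names, the 20-character default, and the 12-character floor are illustrative assumptions rather than recommendations from the article, and a password manager remains the practical way to store the results.

```python
import secrets
import string

# Letters, digits and punctuation give a large pool of characters to draw from.
ALPHABET = string.ascii_letters + string.digits + string.punctuation


def generate_password(length: int = 20) -> str:
    """Return a random password built with a cryptographically secure generator."""
    if length < 12:
        # Assumed floor for this sketch: refuse obviously short passwords.
        raise ValueError("use at least 12 characters")
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


def generate_for_sites(sites: list, length: int = 20) -> dict:
    """Return a separate, independently generated password for each site."""
    return {site: generate_password(length) for site in sites}


if __name__ == "__main__":
    # Hypothetical account names, used only for illustration.
    for site, password in generate_for_sites(["bank.example", "mail.example"]).items():
        print(f"{site}: {password}")
```

Because each password is generated independently, a leak at one site reveals nothing about the credentials used anywhere else.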

Next, see if your email has been exposed in past breaches. Our No. 1 password manager (see Cyberguy.com/Passwords) pick includes a built-in breach scanner that checks whether your email address or passwords have appeared in known leaks. If you discover a match, immediately change any reused passwords and secure those accounts with new, unique credentials.

Check out the best expert-reviewed password managers of 2025 at Cyberguy.com/Passwords

2. Protect your identity and use a data removal service

The hacker who abused Claude didn’t just steal files; they organized and analyzed them to find the most damaging details. That illustrates the value of your personal information in the wrong hands. The less data criminals can find about you online, the safer you are. Review your digital footprint, lock down privacy settings, and reduce what’s available on public databases and broker sites.

While no service can guarantee the complete removal of your data from the internet, a data removal service is really a smart choice. They aren’t cheap, and neither is your privacy. These services do all the work for you by actively monitoring and systematically erasing your personal information from hundreds of websites. It’s what gives me peace of mind and has proven to be the most effective way to erase your personal data from the internet. By limiting the information available, you reduce the risk of scammers cross-referencing data from breaches with information they might find on the dark web, making it harder for them to target you.

Check out my top picks for data removal services and get a free scan to find out if your personal information is already out on the web by visiting Cyberguy.com/Delete


Illustration of a hacker at work. (Kurt “CyberGuy” Knutsson)

3. Turn on two-factor authentication (2FA)

Even if a hacker obtains your password, 2FA can stop them in their tracks. AI tools now help criminals generate highly realistic phishing attempts designed to trick you into handing over logins. By enabling 2FA, you add an extra layer of protection that they cannot easily bypass. Choose app-based codes or a physical key whenever possible, as these are more secure than text messages, which are easier for attackers to intercept.
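To show why app-based codes are hard to reuse, the sketch below implements the time-based one-time password scheme (TOTP, RFC 6238, built on HOTP from RFC 4226) that many authenticator apps follow. It is an illustration using only Python's standard library, not the implementation of any particular app; the shared secret shown is the published RFC test key, and real secrets are provisioned by the service, usually through a QR code.

```python
import hashlib
import hmac
import struct
import time


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    # Dynamic truncation: the low 4 bits of the last byte select a 4-byte window.
    offset = mac[-1] & 0x0F
    value = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(value % 10 ** digits).zfill(digits)


def totp(secret: bytes, now: float = None, step: int = 30, digits: int = 6) -> str:
    """Time-based one-time password (RFC 6238): HOTP over the current time step."""
    if now is None:
        now = time.time()
    return hotp(secret, int(now) // step, digits)


if __name__ == "__main__":
    # Test key from the RFCs, used here only for demonstration.
    demo_secret = b"12345678901234567890"
    print("Current code:", totp(demo_secret))
```

The code changes every 30 seconds and is derived from a secret that never travels with the login, so a stolen password alone is not enough to sign in.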

4. Keep devices and software updated

AI-driven attacks often exploit the most basic weaknesses, such as outdated software. Once a hacker knows which companies or individuals are running old systems, they can use automated scripts to break in within minutes. Regular updates close those gaps before they can be targeted. Setting your devices and apps to update automatically removes one of the easiest entry points that criminals rely on.

5. Be suspicious of urgent messages

One of the most alarming details in the Anthropic report was how the hacker used AI to craft convincing extortion notes. The same tactics are being applied to phishing emails and texts sent to everyday users. If you receive a message demanding immediate action, such as clicking a link, transferring money or downloading a file, treat it with suspicion. Stop, check the source and verify before you act.

6. Use strong antivirus software

The hacker in this case built custom malware with the help of AI. That means malicious software is getting smarter, faster and harder to detect. Strong antivirus software that constantly scans for suspicious activity provides a critical safety net. It can identify phishing emails and detect ransomware before it spreads, which is vital now that AI tools make these attacks more adaptive and persistent.

Get my picks for the best 2025 antivirus protection winners for your Windows, Mac, Android & iOS devices at Cyberguy.com/LockUpYourTech

Over 40,000 Americans were previously exposed in a massive OnTrac security breach, leaking sensitive medical and financial records. (Jakub Porzycki/NurPhoto via Getty Images)

7. Stay private online with a VPN

AI isn’t only being used to break into companies; it’s also being used to analyze patterns of behavior and track individuals. A VPN encrypts your online activity, making it much harder for criminals to connect your browsing to your identity. By keeping your internet traffic private, you add another layer of protection against hackers trying to gather information they can later exploit.

For the best VPN software, see my expert review of the best VPNs for browsing the web privately on your Windows, Mac, Android & iOS devices at Cyberguy.com/VPN


Kurt’s key takeaways

AI isn’t just powering helpful tools; it’s also arming hackers. This case proves that cybercriminals can now automate attacks in ways once thought impossible. The good news is, you can take practical steps today to reduce your risk. By making smart moves, such as enabling two-factor authentication (2FA), updating devices, and using protective tools, you can stay one step ahead.

Do you think AI chatbots should be more tightly regulated to prevent abuse? Let us know by writing to us at Cyberguy.com/Contact


Copyright 2025 CyberGuy.com. All rights reserved.
