AI Insights

Compiling the Future of U.S. Artificial Intelligence Regulation

Experts examine the benefits and pitfalls of AI regulation.

Recently, the U.S. House of Representatives, voting along party lines, passed H.R. 1, colloquially known as the “One Big Beautiful Bill Act.” If enacted, H.R. 1 would pause any state or local regulations affecting artificial intelligence (AI) models or research for ten years.

Over the past several years, AI tools—from chatbots like ChatGPT and DeepSeek to sophisticated video-generating software such as Alphabet Inc.’s Veo 3—have gained widespread consumer acceptance. Approximately 40 percent of Americans use AI tools daily. These tools continue to improve rapidly, becoming more usable and useful for average consumers and corporate users alike.

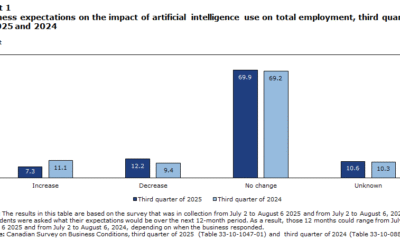

Optimistic projections suggest that the continued adoption of AI could lead to trillions of dollars of economic growth. Unlocking the benefits of AI, however, undoubtedly requires meaningful social and economic adjustments in the face of new employment, cybersecurity, and information-consumption patterns. Experts estimate that widespread AI implementation could displace or transform approximately 40 percent of existing jobs. Some analysts warn that without robust safety nets or reskilling programs, this displacement could exacerbate existing inequalities, both within countries, particularly for low-income workers and communities of color, and between more and less developed nations.

Given the potential for dramatic and widespread economic displacement, national and state governments, human rights watchdog groups, and labor unions increasingly support greater regulatory oversight of the emerging AI sector.

The data center infrastructure required to support current AI tools already consumes as much electricity as the eleventh-largest national market—rivaling that of France. Continued growth in the AI sector necessitates ever-greater electricity generation and storage capacity, creating significant potential for environmental impact. In addition to electricity use, AI development consumes large amounts of water for cooling, raising further sustainability concerns in water-scarce regions.

Industry insiders and critics alike note that overly broad training parameters and flawed or unrepresentative data can lead models to embed harmful stereotypes and mimic human biases. These biases lead critics to call for strict regulation of AI implementation in policing, national security, and other policy contexts.

Polling shows that American voters desire more regulation of AI companies, including limiting the training data AI models can employ, imposing environmental-impact taxes on AI companies, and outright banning AI implementation in some sectors of the economy.

Nonetheless, there is little consensus among academics, industry insiders, and legislators as to whether—much less how—the emerging AI sector should be regulated.

In this week’s Saturday Seminar, scholars discuss the need for AI regulation and the benefits and drawbacks of centralized federal oversight.

- In an article in the Stanford Emerging Technology Review 2025, Fei-Fei Li, Christopher Manning, and Anka Reuel of Stanford University argue that federal regulation of AI may undermine U.S. leadership in the field by locking in rigid rules before key technologies have matured. Li, Manning, and Reuel caution that centralized regulation, especially of general-purpose AI models, risks discouraging competition, entrenching dominant firms, and shutting out third-party researchers. Instead, Li, Manning, and Reuel call for flexible regulatory models drawing on existing sectoral rules and voluntary governance to address use-specific risks. Such an approach, Li, Manning, and Reuel suggest, would better preserve the benefits of regulatory flexibility while maintaining targeted oversight of areas of greatest risk.

- In a paper in the Common Market Law Review, Philipp Hacker, a professor at the European University Viadrina, argues that AI regulation must weigh the significant climate impacts of machine learning technologies. Hacker highlights the substantial energy and water consumption needed to train large generative models such as GPT-4. Critiquing current European Union regulatory frameworks, including the General Data Protection Regulation and the then-proposed EU AI Act, Hacker urges policy reforms that move beyond transparency toward incorporating sustainability in design and consumption caps tied to emissions trading schemes. Finally, Hacker proposes these sustainable AI regulatory strategies as a broader blueprint for the environmentally conscious development of emerging technologies, such as blockchain and the Metaverse.

- The Cato Institute’s David Inserra warns that government-led efforts to regulate AI could undermine free expression. In a recent briefing paper, Inserra explains that regulatory schemes often target content labeled as misinformation or hate speech, efforts that can lead to AI systems reflecting narrow ideological norms. Inserra cautions that such rules may entrench dominant companies and crowd out AI products designed to reflect a wider range of views. Inserra calls for a flexible approach grounded in soft law, such as voluntary codes of conduct and third-party standards, to allow for the development of AI tools that support diverse expressions of speech.

- In an article in the North Carolina Law Review, Erwin Chemerinsky, the Dean of UC Berkeley Law, and practitioner Alex Chemerinsky argue that state regulation of a closely related field—internet content moderation more broadly—is constitutionally problematic and bad policy. Drawing on precedents including Miami Herald v. Tornillo and Hurley v. Irish-American Gay Group, Chemerinsky and Chemerinsky contend that many state laws restricting or requiring content moderation violate First Amendment editorial discretion protections. Chemerinsky and Chemerinsky further argue that federal law preempts most state content moderation regulations. The Chemerinskys warn that allowing multiple state regulatory schemes would create a “lowest-common-denominator” problem where the most restrictive states effectively control nationwide internet speech, undermining the editorial rights of platforms and the free expression of their users.

- In a forthcoming chapter, John Yun, of Antonin Scalia Law School at George Mason University, cautions against premature regulation of AI. Yun argues that overly restrictive AI regulations risk stifling innovation and could lead to long-term social costs outweighing any short-term benefits gained from mitigating immediate harms. Drawing parallels with the early days of internet regulation, Yun emphasizes that premature interventions could entrench market incumbents, limit competition, and crowd out potentially superior market-driven solutions to emerging risks. Instead, Yun advocates applying existing laws of general applicability to AI and maintaining a regulatory restraint similar to the approach adopted during the formative early years of the internet.

- In a forthcoming article in the Journal of Learning Analytics, Rogers Kaliisa of the University of Oslo and several coauthors examine how the diversity of AI regulations across different countries creates an “uneven storm” for learning analytics research. Kaliisa and his coauthors analyze how comprehensive EU regulations such as the AI Act, U.S. sector-specific approaches, and China’s algorithm disclosure requirements impose different restrictions on the use of educational data in AI research. Kaliisa and his team warn that strict rules, particularly the EU’s ban on emotion recognition and biometric sensors, may limit innovative AI applications, widening global inequalities in educational AI development. The Kaliisa team proposes that experts engage with policymakers to develop frameworks that balance innovation with ethical safeguards across borders.

The Saturday Seminar is a weekly feature that aims to put into written form the kind of content that would be conveyed in a live seminar involving regulatory experts. Each week, The Regulatory Review publishes a brief overview of a selected regulatory topic and then distills recent research and scholarly writing on that topic.

Commanders vs. Packers props, SportsLine Machine Learning Model AI picks, bets: Jordan Love Over 223.5 yards

The NFL Week 2 schedule gets underway with a Thursday Night Football matchup between NFC playoff teams from a year ago. The Washington Commanders battle the Green Bay Packers beginning at 8:15 p.m. ET from Lambeau Field. Second-year quarterback Jayden Daniels led the Commanders to a 21-6 opening-day win over the New York Giants, completing 19 of 30 passes for 233 yards and one touchdown. Jordan Love, meanwhile, helped propel the Packers to a dominating 27-13 win over the Detroit Lions in Week 1. He completed 16 of 22 passes for 188 yards and two touchdowns.

NFL prop bettors will likely target the two young quarterbacks with NFL prop picks, in addition to proven playmakers like Deebo Samuel, Romeo Doubs and Zach Ertz. Green Bay’s Jayden Reed has been dealing with a foot injury, but still managed to haul in a touchdown pass in the opener, while Austin Ekeler (shoulder) does not carry an injury designation for TNF. The Packers enter as a 3-point favorite with Green Bay at -172 on the money line, while the over/under is 49 points. Before betting any Commanders vs. Packers props for Thursday Night Football, you need to see the Commanders vs. Packers prop predictions powered by SportsLine’s Machine Learning Model AI.

Built using cutting-edge artificial intelligence and machine learning techniques by SportsLine’s Data Science team, AI Predictions and AI Ratings are generated for each player prop.

For Commanders vs. Packers NFL betting on Thursday Night Football, the Machine Learning Model has evaluated the NFL player prop odds and provided Commanders vs. Packers prop picks. You can only see the Machine Learning Model player prop predictions for Washington vs. Green Bay here.

Top NFL player prop bets for Commanders vs. Packers

After analyzing the Commanders vs. Packers props and examining the dozens of NFL player prop markets, the SportsLine Machine Learning Model says Packers quarterback Love goes Over 223.5 passing yards (-112 at FanDuel). Love passed for 224 or more yards in eight games a year ago, despite an injury-filled season. In 15 regular-season games in 2024, he completed 63.1% of his passes for 3,389 yards and 25 touchdowns with 11 interceptions. Additionally, Washington allowed an average of 240.3 passing yards per game on the road last season.

In a 30-13 win over the Seattle Seahawks on Dec. 15, Love completed 20 of 27 passes for 229 yards and two touchdowns. He completed 21 of 28 passes for 274 yards and two scores in a 30-17 victory over the Miami Dolphins on Nov. 28. The model projects Love to pass for 259.5 yards, giving this prop bet a 4.5 rating out of 5. See more NFL props here. New users can also target the FanDuel promo code, which offers $300 in bonus bets if their first $5 bet wins.
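To put the quoted prices in context, American odds can be converted into the break-even win probability a bettor needs. The sketch below is a generic conversion and is not part of SportsLine's model; the function name `implied_probability` is hypothetical, and the result still includes the sportsbook's margin, so it is not a true probability.

```python
def implied_probability(american_odds: int) -> float:
    """Break-even win probability implied by American odds.

    The sportsbook's margin is NOT removed, so this overstates the
    market's real estimate of the outcome.
    """
    if american_odds == 0:
        raise ValueError("American odds cannot be zero")
    if american_odds < 0:
        # Negative odds: risk |odds| to win 100.
        risk = -american_odds
        return risk / (risk + 100)
    # Positive odds: risk 100 to win `odds`.
    return 100 / (american_odds + 100)


# Prices quoted in the article.
love_over = implied_probability(-112)      # Love Over 223.5 passing yards
packers_moneyline = implied_probability(-172)

print(f"Love Over 223.5: {love_over:.1%}")
print(f"Packers money line: {packers_moneyline:.1%}")
```

Under this conversion, the -112 price on Love's Over needs to win about 53% of the time to break even, and the -172 money line on Green Bay implies about 63%.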

How to make NFL player prop bets for Washington vs. Green Bay

In addition, the SportsLine Machine Learning Model says another star sails past his total and has nine additional NFL props that are rated four stars or better. You need to see the Machine Learning Model analysis before making any Commanders vs. Packers prop bets for Thursday Night Football.

Which Commanders vs. Packers prop bets should you target for Thursday Night Football? Visit SportsLine now to see the top Commanders vs. Packers props, all from the SportsLine Machine Learning Model.

Adobe Says Its AI Sales Are Coming in Strong. But Will It Lift the Stock?

Adobe (ADBE) just reported record quarterly revenue driven by artificial intelligence gains. Will it revive confidence in the stock?

The creative software giant late Thursday posted adjusted earnings per share of $5.31 on revenue that jumped 11% year-over-year to a record $5.99 billion in the fiscal third quarter, above analysts’ estimates compiled by Visible Alpha, as AI revenues topped company targets.

CEO Shantanu Narayen said that with third-quarter revenue driven by AI, Adobe has already surpassed its “AI-first” revenue goals for the year, leading the company to boost its outlook. The company said it now anticipates full-year adjusted earnings of $20.80 to $20.85 per share and revenue of $23.65 billion to $23.7 billion, up from adjusted earnings of $20.50 to $20.70 on revenue of $23.50 billion to $23.6 billion previously.

Shares of Adobe were recently rising in late trading. But they’ve had a tough year so far, with the stock down more than 20% for 2025 through Thursday’s close amid worries about the company’s AI progress and growing competition.

Wall Street is optimistic. The shares finished Thursday a bit below $351, and the mean price target as tracked by Visible Alpha, above $461, represents a more than 30% premium. Most of the analysts tracking the stock have “buy” ratings.
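The premium in that comparison is simple arithmetic. The sketch below is a minimal illustration that assumes a close of exactly $351 and a mean target of exactly $461. The article gives only "a bit below" and "above" those figures, so the true premium is somewhat larger. The function name `premium_pct` is illustrative.

```python
def premium_pct(price: float, target: float) -> float:
    """Percentage by which `target` exceeds `price`."""
    if price <= 0:
        raise ValueError("price must be positive")
    return (target - price) / price * 100


close_price = 351.0  # assumption: the article says "a bit below $351"
mean_target = 461.0  # assumption: the article says "above $461"

print(f"Implied premium: {premium_pct(close_price, mean_target):.1f}%")
```

With these rounded inputs the premium comes out at about 31%, consistent with the "more than 30%" figure.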

But even that target represents a degree of caution in the context of recent highs. The shares were above $600 in February 2024.

First Trust Nasdaq Artificial Intelligence and Robotics ETF (ROBT): How to Invest

The First Trust Nasdaq Artificial Intelligence and Robotics ETF (ROBT) offers a sophisticated way to track AI companies, with an emphasis on fundamental analysis.

It does so through an advanced index methodology designed to target companies that meet specific artificial intelligence and robotics criteria, rather than simply buying the largest names in the space.

However, with this added complexity comes higher costs. ROBT may not be the most beginner-friendly ETF. Here’s what you need to know to decide if the First Trust Nasdaq Artificial Intelligence and Robotics ETF is worth choosing over its AI-focused competitors.

Overview

What is First Trust Nasdaq Artificial Intelligence and Robotics ETF?

The First Trust Nasdaq Artificial Intelligence and Robotics ETF is a thematic ETF that tracks the Nasdaq CTA Artificial Intelligence and Robotics index. It is not a mutual fund.

This benchmark takes a more nuanced approach than most AI ETFs by grouping companies into three categories:

- Enablers, which develop the hardware, software, and infrastructure that form the building blocks of artificial intelligence.

- Engagers, which design, integrate, or deliver AI-driven products and services.

- Enhancers, which offer value-added AI capabilities as part of a broader business model; AI and robotics are not their primary focus.

Companies are scored across the three categories based on their level of AI involvement, with the top 30 in each category selected. However, the index is not equally split between these groups.

Engagers receive the highest weighting at 60% of the portfolio because they have the most direct AI exposure. Enablers make up 25%, while Enhancers account for 15% to provide balance and diversification. Within each group, holdings are equally weighted.

The portfolio is rebalanced quarterly to reset weights and capture relative performance changes, while a semiannual reconstitution ensures the index remains current with evolving AI and robotics developments.
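The weighting rules described above imply a target weight for each holding at rebalance. The sketch below works through that arithmetic. It assumes exactly 30 names per category, as the text states (the fund's actual holdings count may differ), and ignores any drift between quarterly rebalances. The variable and function names are illustrative and are not taken from the index provider.

```python
# Group weights as described in the article.
GROUP_WEIGHTS = {"Engagers": 0.60, "Enablers": 0.25, "Enhancers": 0.15}

# Assumption: 30 names per group, per "the top 30 in each category".
NAMES_PER_GROUP = 30


def per_holding_weight(group_weight: float, names_in_group: int) -> float:
    """Equal weight of one holding within its group at rebalance."""
    if names_in_group <= 0:
        raise ValueError("a group needs at least one holding")
    return group_weight / names_in_group


target_weights = {
    group: per_holding_weight(weight, NAMES_PER_GROUP)
    for group, weight in GROUP_WEIGHTS.items()
}

for group, weight in target_weights.items():
    print(f"{group}: {weight:.2%} per holding")
```

On these assumptions, each Engager starts a quarter at about 2% of the portfolio, each Enabler at under 1%, and each Enhancer at 0.5%. Price moves between rebalances would push individual positions above or below those targets.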

How to invest

- Open your brokerage app: Log in to your brokerage account where you handle your investments.

- Search for the ETF: Enter the ticker or ETF name into the search bar to bring up the ETF’s trading page.

- Decide how many shares to buy: Consider your investment goals and how much of your portfolio you want to allocate to this ETF.

- Select order type: Choose between a market order to buy at the current price or a limit order to specify the maximum price you’re willing to pay.

- Submit your order: Confirm the details and submit your buy order.

- Review your purchase: Check your portfolio to ensure your order was filled as expected and adjust your investment strategy accordingly.

Holdings

First Trust Nasdaq Artificial Intelligence and Robotics ETF has a heavy U.S. weighting at 64%, followed by Japan at 9.2%, then the U.K., Israel, South Korea, France, and Taiwan.

The fund holds 100 companies, with 50% classified as technology sector, 22% as industrials, and 9% as healthcare. The remaining holdings are primarily spread across the consumer discretionary and communications sectors, with small allocations to financials, consumer staples, and energy.

The First Trust Nasdaq Artificial Intelligence and Robotics ETF’s largest holdings as of late August 2025 are:

- Symbotic (SYM): 2.92%

- Upstart Holdings (UPST): 2.28%

- AeroVironment (AVAV): 2.25%

- Ocado Group (OCDO): 2.22%

- Palantir Technologies (PLTR): 2.14%

- Synopsys (SNPS): 2.07%

- Recursion Pharmaceuticals (RXRX): 2.00%

- Cadence Design Systems (CDNS): 1.91%

- Gentex (GNTX): 1.91%

- Ambarella (AMBA): 1.85%

The portfolio has a large-cap tilt, with an average market cap of $28 billion. Valuations are elevated, with shares trading around 29x price-to-earnings, 2.75x price-to-sales, and 17x price-to-cash-flow.

Should I invest?

Only consider First Trust Nasdaq Artificial Intelligence and Robotics ETF if you specifically believe in its index methodology and the “engagers, enablers, enhancers” classification system, along with the resulting weighting across these groups.

There is no single “right” way to invest in AI. This is simply how ROBT’s benchmark index approaches selection and weighting compared to competing ETFs. It may underperform or outperform similar funds at various times.

If you appreciate a more complex, rules-based approach, you may find the fund appealing. But if you’re seeking simplicity, this ETF probably isn’t a fit.

Note that the ETF has historically lagged the broader market and has shown greater volatility than diversified index ETFs, like S&P 500 funds. Its relatively narrow portfolio means individual stock positions can have an outsized impact on performance, for better or worse.

Moreover, investing in AI and robotics carries idiosyncratic risk. These companies are often priced for growth, with high valuations that may be vulnerable to pullbacks if earnings don’t keep pace. The sector also tends to lack exposure to defensive, non-cyclical industries, which can leave long-term investors more exposed during market downturns.

Dividends

Does the ETF pay a dividend?

The First Trust Nasdaq Artificial Intelligence and Robotics ETF has a 30-day SEC yield of 0.27% as of August 2025. The ETF pays dividends semiannually in December and June. The yield is low because many AI-focused companies reinvest earnings into growth rather than paying dividends.

Expense ratio

What is the ETF’s expense ratio?

The expense ratio for First Trust Nasdaq Artificial Intelligence and Robotics ETF is 0.65%, or $65 per $10,000 invested annually. This is higher than both sector and broad market ETFs, and even on the pricey side for a thematic ETF, approaching the cost of some actively managed funds due to its more specialized index methodology.

An expense ratio is the percentage of mutual fund or ETF assets deducted annually to cover management, operational, and administrative costs.
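The dollar figure follows directly from the ratio. The sketch below is a minimal illustration that assumes the fee is applied to a constant balance for one full year. In practice, fund expenses accrue gradually against a fluctuating net asset value. `annual_fee` is an illustrative name.

```python
def annual_fee(balance: float, expense_ratio: float) -> float:
    """Approximate yearly cost of holding `balance` at a given expense ratio.

    `expense_ratio` is a decimal fraction, e.g. 0.0065 for 0.65%.
    """
    if balance < 0 or expense_ratio < 0:
        raise ValueError("balance and expense_ratio must be non-negative")
    return balance * expense_ratio


# Figures from the article: 0.65% on $10,000.
print(f"${annual_fee(10_000, 0.0065):.2f} per year")
```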

Historical performance

Since its inception, First Trust Nasdaq Artificial Intelligence and Robotics ETF has generally tracked its benchmark, the Nasdaq CTA Artificial Intelligence and Robotics Index, but has delivered slightly lower returns across most periods due to fee drag.

For example, over the past five years, the fund returned 6.4% annually versus 6.9% for the index.

Where the gap really shows is against the broader market: The S&P 500 has compounded at nearly 16% annually over the same period, far ahead of the ETF.

This underperformance highlights two challenges with thematic funds like this: higher volatility and sector concentration.

While the ETF has at times outpaced the S&P 500 over short stretches, it has struggled to keep up over longer horizons, reflecting the risks of a narrower, more specialized portfolio.
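To see how these annualized returns compound, the sketch below grows a hypothetical $10,000 over five years at each rate quoted above. It assumes constant annual returns with no additional contributions, and it uses 16% for the S&P 500 even though the text says "nearly 16%". `future_value` is an illustrative helper.

```python
def future_value(principal: float, annual_return: float, years: int) -> float:
    """Value of `principal` after compounding once per year."""
    return principal * (1 + annual_return) ** years


# Annualized five-year returns quoted in the article.
rates = {
    "ROBT": 0.064,
    "Benchmark index": 0.069,
    "S&P 500 (approximate)": 0.16,  # assumption: text says "nearly 16%"
}

for name, rate in rates.items():
    print(f"{name}: ${future_value(10_000, rate, 5):,.0f}")
```

On these assumptions, $10,000 grows to roughly $13,600 in the fund, roughly $14,000 in the index, and roughly $21,000 in the S&P 500.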

| | 1-Year | 3-Year | 5-Year |
|---|---|---|---|
| Net Asset Value | 14.38% | 9.54% | 6.35% |
| Market Price | 14.56% | 9.58% | 6.37% |

The bottom line

First Trust Nasdaq Artificial Intelligence and Robotics ETF takes a very involved approach to index construction, breaking the AI and robotics universe into three categories and then assigning different portfolio weights to each group.

While this adds a layer of precision that some investors may appreciate, it also introduces complexity that can make the strategy harder to evaluate and follow compared to simpler, market-cap-weighted thematic ETFs.

The 0.65% expense ratio is on the higher side for a passive ETF and approaches the cost of certain actively managed funds in this space, which could make some investors question whether the additional complexity justifies the fee.

Over the long term, higher costs combined with a specialized weighting methodology may influence performance, so this fund may be best-suited for those who specifically want this unique structure rather than a broader, more conventional approach.

FAQ

Investing in First Trust Nasdaq Artificial Intelligence and Robotics ETF FAQ

What is the best way to invest in AI and robotics?

The best way to invest in AI and robotics depends on your investment style. Some investors are more comfortable with diversified thematic ETFs like the First Trust Nasdaq Artificial Intelligence and Robotics ETF, while others prefer to build their own portfolios of individual stocks.

Is ROBT a good investment?

First Trust Nasdaq Artificial Intelligence and Robotics ETF may appeal if you like its complex index approach, but its high fees and convoluted weighting can be drawbacks.

What is the best AI and robotics ETF?

The best AI and robotics ETF varies by investor goals, costs, and desired exposure, so compare options carefully. Some of the top AI and robotics ETFs by market cap include:

- Global X Robotics & Artificial Intelligence ETF (NASDAQ:BOTZ)

- Global X Artificial Intelligence & Technology ETF (NASDAQ:AIQ)

- iShares Future AI & Tech ETF (NYSEARCA:ARTY)

- Roundhill Generative AI & Technology ETF (NYSEARCA:CHAT)
