AI Research

Cherokee Nation signs first AI policy

Cherokee Nation Principal Chief Chuck Hoskin Jr. has signed the tribe’s first policy on artificial intelligence.

The tribe says the new policy sets guidelines for the ethical use of AI and establishes requirements for review.

It says the policy also outlines specific approved uses for AI, such as summarizing public information and brainstorming.

The tribe says that any AI use involving the Cherokee language must be reviewed and approved by fluent speakers.

“As Cherokee people, we were not meant to fall behind but were meant to be the people who surged ahead,” says Chief Chuck Hoskin Jr. “AI is the future and Cherokee Nation is working to embrace that future, but not at the expense of our language and culture.”

The policy also creates a committee to approve or reject proposed AI tools and set limitations on their use.

AI Research

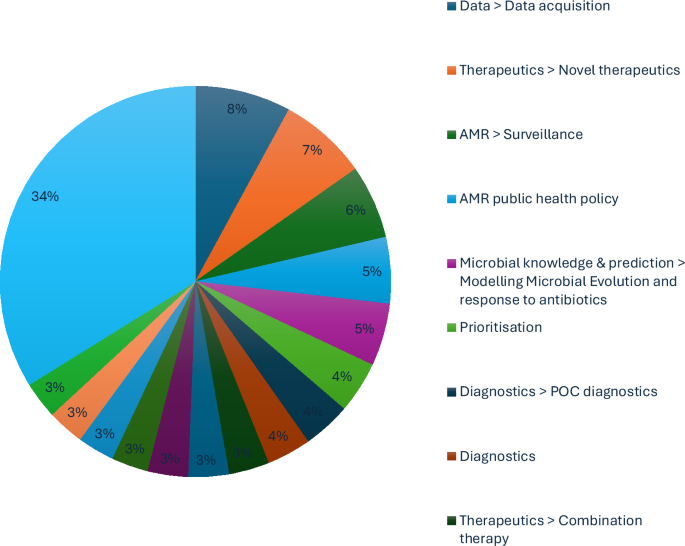

Mitigating antimicrobial resistance by innovative solutions in AI (MARISA): a modified James Lind Alliance analysis

These results have set out the direction of expert opinion for AMR policy, particularly in how to optimise research and development efforts. Nevertheless, there remain obstacles to explore in implementing this expert vision.

Brokered data-sharing was proposed in the expert interviews as a way to unify the divided efforts against AMR. A data broker acts as both regulator and diplomat: a referee of how data is collected and used, and an unbiased arbitrator between commercially competitive organisations. Precedents exist in other industrial contexts, such as the UK Information Commissioner’s Office11 and European Data Protection Regulation12. Data was the most consistently raised issue throughout the interviews. The interviewees were aware that machine-learning algorithms often require large amounts of data, and their concerns centred on access to datasets that already exist, particularly pharmacological rather than microbial data. One possible solution is a federated pharmacological dataset, coordinated through a data broker, that does not compromise participating commercial research programmes. This would require standardised data collection, or at least a common data-cleaning methodology. Moreover, Wan et al. demonstrated that AI models can be trained with less data than previously thought; APEX was trained on standardised experimental data collected for about 1000 compounds tested against an array of target bacteria, yielding a matrix of about 15,000 data points13.
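To make the brokered data-sharing idea more concrete, here is a minimal Python sketch under stated assumptions. A hypothetical `DataBroker` accepts minimum inhibitory concentration (MIC) records from several organisations, maps each organisation's own field names and units onto one common schema (the standardisation step described above), and releases only pooled per-organism summaries, suppressing any organism backed by fewer than two contributors so that no single commercial programme's data can be read off. The schemas, field names, contributor labels and the two-contributor rule are all illustrative assumptions, not part of the MARISA study.

```python
from collections import defaultdict
from dataclasses import dataclass, field
from statistics import median

# Hypothetical per-contributor schemas: which source field holds each common field,
# and the factor converting that contributor's MIC unit to mg/L.
SCHEMAS = {
    "lab_a": {"compound": "cmpd_id", "organism": "species", "mic": "mic_mg_per_l", "to_mg_per_l": 1.0},
    "lab_b": {"compound": "molecule", "organism": "bug", "mic": "mic_ug_per_ml", "to_mg_per_l": 1.0},
    "lab_c": {"compound": "id", "organism": "taxon", "mic": "mic_ng_per_ml", "to_mg_per_l": 0.001},
}


@dataclass
class DataBroker:
    min_contributors: int = 2  # release a summary only if this many organisations back it
    _records: list = field(default_factory=list)

    def submit(self, contributor: str, rows: list[dict]) -> None:
        """Standardise a contributor's rows onto the common schema and store them."""
        schema = SCHEMAS[contributor]
        for row in rows:
            self._records.append({
                "contributor": contributor,
                "compound": row[schema["compound"]],
                "organism": row[schema["organism"]],
                "mic_mg_per_l": row[schema["mic"]] * schema["to_mg_per_l"],
            })

    def release_summary(self) -> dict[str, dict]:
        """Per-organism pooled summary; raw rows and compound IDs never leave the broker."""
        by_organism = defaultdict(list)
        for rec in self._records:
            by_organism[rec["organism"]].append(rec)
        summary = {}
        for organism, recs in by_organism.items():
            contributors = {r["contributor"] for r in recs}
            if len(contributors) < self.min_contributors:
                continue  # suppressed: too few organisations to protect individual programmes
            summary[organism] = {
                "n_records": len(recs),
                "n_contributors": len(contributors),
                "median_mic_mg_per_l": median(r["mic_mg_per_l"] for r in recs),
            }
        return summary


# Usage: two organisations with different schemas and units.
broker = DataBroker()
broker.submit("lab_a", [{"cmpd_id": "A1", "species": "E. coli", "mic_mg_per_l": 4.0}])
broker.submit("lab_c", [
    {"id": "C7", "taxon": "E. coli", "mic_ng_per_ml": 2000.0},
    {"id": "C8", "taxon": "K. pneumoniae", "mic_ng_per_ml": 500.0},
])
summary = broker.release_summary()
print(summary)
```

In this example, E. coli is released (two organisations, median MIC 3.0 mg/L after unit conversion), while K. pneumoniae is withheld because only one organisation contributed it. A real broker would also need access control, audit and legal agreements, which this sketch does not attempt.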

AI-driven modelling is essential for the development of novel therapeutic options. Interviewees saw creating novel therapeutics as a very high priority, though most stressed the importance of pursuing multiple avenues, given significant uncertainty about where the future lies. Safety must be a central concern throughout. Beyond identifying drug candidates, AI methods could also be applied to modelling pharmacokinetics and pharmacodynamics. Peptides, immunologics and small molecules were all seen as reasonable targets14,15,16. The paucity of antifungal therapy was highlighted as a pressing issue. Phage therapy was viewed favourably as a second-line or personalised treatment, but concerns were raised about its longevity as a therapeutic option and the potential for unintended consequences (e.g. phages acting as vectors for AMR spread).

Rapid diagnostics emerged as a key pillar in the fight against AMR. Interviewees emphasised the need for more accessible, affordable and rapid diagnostic tools that could be implemented at various levels of healthcare, including in low-resource settings. Advances in AI and molecular diagnostics can enable real-time pathogen detection, reducing delays in treatment and limiting unnecessary antimicrobial use. Improved diagnostics would strengthen antimicrobial stewardship, enable more precise epidemiological tracking and improve patient outcomes by ensuring the right treatment is administered early. The acquisition of this data needs to be standardised to optimise international efforts, an issue that was also intensely scrutinised during the AMR Data Symposium at The London School of Hygiene and Tropical Medicine in October 202417. Data is currently stored in silos corresponding to traditional disciplines, so datasets are incomplete, and heterogeneous data collection methods across labs and disciplines create further integration problems. This is corroborated by a review of antimicrobial learning systems (ALSs), which noted the obstacles of incomplete data capture, dwindling pipelines of new antimicrobials, one-size-fits-all antimicrobial treatment formularies, resource pressures and poorly implemented diagnostic innovations18. Moreover, gaps in the currently available data require further research (e.g. pharmacological studies in humans), and data is being missed due to poor testing capability, resulting in skewed datasets. The specific priority of applying genomic data in the fight against AMR was also highlighted by the Wellcome conference on AMR in March 202419. Comprehensive datasets are needed to fully realise the opportunities AI presents in combating AMR20.

Drug discovery is essential to deliver regional biosecurity. Vaccines were highly rated by interviewees, although they acknowledged the difficulty of creating antibacterial rather than antiviral vaccines. Nevertheless, it is appealing to target organisms that drive high antimicrobial use. Most interviewees considered therapeutic approaches a priority, though this view came mainly from those currently working in the field, who acknowledged a bias arising from their specialisation. Automating preclinical drug discovery was seen as very appealing. High-throughput screening was described as one way AI could speed up the screening of vaccine candidates and highlight new potential mechanisms for drug targets. AI was also described as well suited to the large-scale screening required to find effective combination therapies. However, interviewees raised challenges, mostly centred on pharmacokinetic and pharmacodynamic concerns: although a drug may work synergistically with another in theory, the concentrations required for antimicrobial drugs are often an order of magnitude greater than those for non-antimicrobials, which presents safety concerns. AI was proposed as a way to model the factors that affect drug penetration, the effect of the immune system and the effect of the gut microbiome.
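The interviews do not specify how a combination screen would score synergy. As one common, well-established choice (swapped in here purely for illustration), Bliss independence predicts the fractional inhibition of two independently acting drugs as E_A + E_B - E_A·E_B; a pair that inhibits more than this is scored as synergistic. The minimal Python sketch below ranks drug pairs by this "Bliss excess", the kind of scoring an AI-driven screen could apply across many pairs. The drug names and inhibition values are invented.

```python
from itertools import combinations


def bliss_expected(e_a: float, e_b: float) -> float:
    """Expected fractional inhibition (0..1) if the two drugs act independently (Bliss)."""
    return e_a + e_b - e_a * e_b


def bliss_excess(e_a: float, e_b: float, e_ab: float) -> float:
    """Observed minus expected inhibition: > 0 suggests synergy, < 0 antagonism."""
    return e_ab - bliss_expected(e_a, e_b)


# Hypothetical single-agent inhibition at a fixed test concentration.
single = {"drug_x": 0.30, "drug_y": 0.20, "drug_z": 0.50}
# Hypothetical measured inhibition for each pair at the same concentrations.
pairs = {("drug_x", "drug_y"): 0.70, ("drug_x", "drug_z"): 0.60, ("drug_y", "drug_z"): 0.62}


def rank_pairs(single: dict[str, float], pairs: dict[tuple[str, str], float]):
    """Score every pair and sort from most to least synergistic."""
    scores = {}
    for a, b in combinations(sorted(single), 2):
        scores[(a, b)] = bliss_excess(single[a], single[b], pairs[(a, b)])
    return sorted(scores.items(), key=lambda kv: kv[1], reverse=True)


ranking = rank_pairs(single, pairs)
for (a, b), score in ranking:
    print(f"{a} + {b}: Bliss excess {score:+.2f}")
```

Here drug_x + drug_y ranks first (observed 0.70 against an expected 0.44). The interviewees' caveat still applies: a pair that scores well in vitro may need antimicrobial concentrations far above safe levels, so a screen like this can only shortlist candidates for pharmacokinetic and pharmacodynamic modelling.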

Integrated Economic Prevention refers to strategic investment in preventative public health measures that mitigate the financial and societal costs of AMR before they escalate into more severe economic and healthcare burdens. It underscores the importance of cost-effective interventions, such as improved stewardship, surveillance and public awareness campaigns, that not only reduce long-term healthcare expenditure but also enhance societal engagement by demonstrating tangible financial savings. Economic prevention is therefore an essential component of framing the public narrative: quantifying the cost of AMR and the savings to the public purse from pre-emptive action is needed to improve societal engagement. Despite ongoing research and professional concern, AMR does not have the public profile of other diseases, such as cancer.

This BARDI framework for directing AMR policy is corroborated by leading reviews in this space. Rabaan et al.21 discuss how AI can address AMR by optimising antibiotic prescriptions, predicting resistance patterns and enhancing diagnostics; they emphasise the potential of machine learning to improve clinical decisions and reduce the misuse of antibiotics. Howard et al.18 propose a framework for implementing AI-driven ALSs, highlighting AI’s role in optimising antimicrobial use, addressing the complexity of AMR data and promoting adaptive, sustainable interventions within healthcare systems; ALSs are especially important for modelling in surveillance efforts and for understanding the drivers of AMR22. Ali et al.23 outline AI’s potential in antimicrobial stewardship, emphasising its application in predicting bacterial resistance, optimising treatment pathways and identifying emerging threats through large-scale data analysis. In alignment with the BARDI framework, Rabaan et al.21 also focus on AI’s application in clinical settings, particularly in improving antibiotic stewardship, and Ali et al.23 further support these views by detailing AI’s role in data-driven antimicrobial stewardship, reinforcing the importance of large-scale data integration and machine learning for proactive AMR management. Inappropriate antibiotic prescribing leads to increased AMR, patient morbidity and mortality. Clinical decision support systems that integrate AI with widely used clinical screening systems can aid clinicians by providing accurate, evidence-based recommendations, enabling more objective antibiotic prescribing that complies with the guidelines for each diagnosis. Howard et al.18 expand on this by proposing a systemic framework for integrating AI within healthcare, stressing the importance of continuous learning systems. 
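The guideline-compliance role of clinical decision support described above can be sketched as a simple check in Python. This is a hypothetical illustration, not a clinical tool and not any cited group's system: the guideline table uses placeholder drug names, `predicted_resistance` stands in for the output of an assumed AI resistance model, and the 0.2 threshold is an arbitrary assumption.

```python
from dataclasses import dataclass

# Hypothetical local guideline: diagnosis -> recommended antibiotics in order of preference.
# Drug names are placeholders, not clinical recommendations.
GUIDELINE = {
    "uncomplicated_uti": ["abx_a", "abx_b"],
    "community_acquired_pneumonia": ["abx_c", "abx_d"],
}


@dataclass
class Advice:
    compliant: bool
    message: str


def review_prescription(diagnosis: str, drug: str, predicted_resistance: dict[str, float],
                        max_resistance: float = 0.2) -> Advice:
    """Check a prescription against the guideline and an assumed model's resistance estimates.

    predicted_resistance maps drug -> estimated probability that the patient's pathogen
    is resistant. A drug with no estimate is treated conservatively as unusable.
    """
    options = GUIDELINE.get(diagnosis)
    if options is None:
        return Advice(False, f"No guideline entry for {diagnosis!r}; refer for specialist review.")
    # Guideline options the model does not flag as likely to fail, kept in guideline order.
    usable = [d for d in options if predicted_resistance.get(d, 1.0) <= max_resistance]
    if drug in usable:
        return Advice(True, f"{drug} matches the guideline for {diagnosis}.")
    if usable:
        return Advice(False, f"Consider {usable[0]} instead of {drug} for {diagnosis}.")
    return Advice(False, "All guideline options are likely resisted; seek microbiology advice.")


advice = review_prescription("uncomplicated_uti", "abx_b", {"abx_a": 0.10, "abx_b": 0.35})
print(advice)
```

The design keeps the clinician in control: the function only flags a mismatch and suggests an alternative, with each suggestion traceable to a guideline entry and a stated resistance estimate.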
The obstacles to progress in AI use for AMR include incomplete or biased data, especially from primary care, which hinders accurate AI predictions. Sparse data and fragmented health systems complicate AI’s effectiveness18,20; the BARDI framework provides a basis for international cooperation against AMR.

Moreover, this BARDI framework aligns with Chindelevitch et al.24, who describe AMR advancements in surveillance, prevention, diagnosis and treatment; this research group developed the first WHO-endorsed catalogue of mutations in the Mycobacterium tuberculosis complex associated with drug resistance, as well as INGOT-DR, an interpretable machine-learning approach to predicting drug resistance in bacterial genomic datasets25. The molecular mechanisms of AMR have been reviewed to give an overview of intervention and modelling opportunities, particularly using genomic sequencing and functional genomic approaches, including CRISPR26. Machine-learning applications to whole-genome studies27 present opportunities for both AMR surveillance and downstream drug discovery. Whilst applying AI to small molecules may generate recommendations that are not chemically synthesisable28, future models may be able to incorporate pharmacology and toxicity to deliver promising molecules such as Guavanin-2, which exhibited the novel and non-coded mechanism of hyperpolarising the bacterial membrane29. Meanwhile, with growing demand for microbial resistance data collection, irregular reporting standards and practices worldwide compromise confidence in the field’s advancement30. Modelling AMR transmission may enable personalised antimicrobial stewardship interventions, particularly for carbapenem-resistant Klebsiella pneumoniae31. This necessitates a whole-system approach32, with collaborations such as the Surveillance Partnership to Improve Data for Action on Antimicrobial Resistance (SPIDAAR), funded by Pfizer and the Wellcome Trust33, supporting national plans to upscale surveillance and prevention programmes in collaboration with private-sector resources, particularly for deriving mechanistic insights on drug activity from population-scale experimental data34.
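Catalogue-based resistance prediction can be sketched as a lookup from (gene, mutation) pairs to drugs, returning the triggering mutation as evidence so each call stays interpretable. The minimal Python sketch below is neither the WHO catalogue nor INGOT-DR (which learns interpretable rules from genomic data); its three entries are well-known M. tuberculosis resistance mutations included only as illustrative examples, and the isolate is invented.

```python
# Illustrative entries only: well-known M. tuberculosis resistance mutations.
# A real catalogue holds many more entries, each with a confidence grading.
CATALOGUE = {
    ("rpoB", "S450L"): "rifampicin",
    ("katG", "S315T"): "isoniazid",
    ("embB", "M306V"): "ethambutol",
}


def predict_resistance(variants: list[tuple[str, str]], drugs: list[str]) -> dict[str, dict]:
    """Call each drug 'R' if any catalogued variant for it is present, else 'S'.

    'S' here only means no catalogued variant was found, not proven susceptibility.
    Each 'R' records the mutation that triggered it, keeping the call interpretable.
    """
    calls = {drug: {"call": "S", "evidence": []} for drug in drugs}
    for gene, mutation in variants:
        drug = CATALOGUE.get((gene, mutation))  # None for variants absent from this toy catalogue
        if drug in calls:
            calls[drug]["call"] = "R"
            calls[drug]["evidence"].append(f"{gene} {mutation}")
    return calls


# Hypothetical isolate; gyrA A90V is not in this toy catalogue, so it is ignored.
isolate = [("rpoB", "S450L"), ("gyrA", "A90V"), ("katG", "S315T")]
result = predict_resistance(isolate, ["rifampicin", "isoniazid", "ethambutol"])
for drug, info in result.items():
    print(drug, info["call"], info["evidence"])
```

A lookup like this cannot detect novel resistance mechanisms, which is one reason machine-learning approaches over whole genomes are of interest.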

This BARDI framework is an urgent strategy to fight AMR. In a study of 41.6 million US hospital admissions (over 20% of national hospitalisations annually) between 2012 and 2017, the incidence of extended-spectrum beta-lactamase (ESBL) infections increased by 53.3% (from 37.55 to 57.12 cases per 10,000 hospitalisations), demonstrating a rapid rise in resistant infections among hospitalised patients10. If this trajectory does not stop, even the wealthiest nations will be overwhelmed.
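A quick Python check of the quoted rates helps put them in proportion. The crude ratio of the two endpoint rates gives an increase of about 52%, close to the reported 53.3%; the small gap presumably reflects how the underlying study estimated its trend, which is not described here. The 2017 rate corresponds to well under 1% of hospitalisations, so the concern is the steepness of the rise rather than the current share.

```python
# ESBL infection incidence per 10,000 hospitalisations, as quoted in the text.
rate_2012 = 37.55
rate_2017 = 57.12

crude_increase = (rate_2017 - rate_2012) / rate_2012  # relative change between the endpoints
share_2017 = rate_2017 / 10_000                        # fraction of hospitalisations affected in 2017

print(f"crude relative increase: {crude_increase:.1%}")       # 52.1%
print(f"share of hospitalisations in 2017: {share_2017:.2%}")  # 0.57%
```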

There are at least four ongoing considerations to the implementation of this BARDI framework. Data privacy and security are important when dealing with sensitive patient information. Global standardisation and interoperability of AI systems are necessary to ensure that AI-driven AMR detection can be effectively integrated for biosecurity across different healthcare environments35. Now that 460 academic, commercial and public data consumers in the UK National Health Service have been mapped, and the challenges of multistage data flow chains have been shown to include noncompliance with best practice, there remain concerns about delivering the full potential of UK health data36, including the establishment of a data broker.

Subtle differences in the definition of a particular clinical event can have a dramatic impact on prediction performance. Cohen et al.37, applying tree-based, deep learning and survival analysis methods to the MIMIC-III intensive care admissions database, demonstrated a 0-6% variation in the area under the receiver operating characteristic curve (AUROC) depending on the onset time used in different definitions of sepsis. This is an essential observation when using AI to forecast AMR, because resistance may be expressed in different patterns across different bacteria, and each resistant strain may harm the host differently. A more precise definition than ‘when microbes no longer respond to antimicrobial medicines’38 is needed to minimise the variation in AUROC of AMR predictive diagnostics; the error of the Sepsis-III definition, which did not define the clinical event onset time, must not be repeated39,40. Moreover, it is important to differentiate suspicion of infection from organ dysfunction when interpreting the clinical scenario41; verifiable methods with a precise definition of AMR are needed to direct a valid clinical response. Li et al.42 may have begun to address this problem by cataloguing some of the exact alleles associated with specific resistance patterns, but this would need to be extended to real-time analysis to meaningfully improve clinical decision-making.
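The sensitivity of AUROC to the event definition can be shown in miniature. The Python sketch below is not a reproduction of Cohen et al.'s analysis: the patients, risk scores and onset times are invented. The same model scores are evaluated against two labelling rules that differ only in the onset window, and AUROC is computed directly as the probability that a randomly chosen positive outscores a randomly chosen negative.

```python
from typing import Optional


def auroc(scores: list[float], labels: list[int]) -> float:
    """AUROC as the probability a random positive outscores a random negative (ties count 0.5)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def label(onsets: list[Optional[float]], window_h: float) -> list[int]:
    """Positive if the event begins within window_h hours of the prediction time."""
    return [1 if h is not None and h <= window_h else 0 for h in onsets]


# Hypothetical patients: one model's risk scores and hours until event onset (None = no event).
risk = [0.9, 0.8, 0.7, 0.6, 0.5, 0.4, 0.3, 0.2]
onset_hours = [2, 10, None, 5, 30, None, 20, None]

for window in (6, 24):
    print(f"onset window {window:>2} h: AUROC = {auroc(risk, label(onset_hours, window)):.3f}")
# onset window  6 h: AUROC = 0.833
# onset window 24 h: AUROC = 0.750
```

The model and its scores are identical in both runs; only the definition of "the event" changed, which is the point made above about needing a precise, time-anchored definition of AMR.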

Both ‘One Health’ and ‘Global Health’ are interdisciplinary concepts based on the interdependence of human and animal health, and integrating biological, environmental and socioeconomic factors, but they address AMR at different levels. Hospitals, patients and infections are all sometimes described as ‘resistant’43, but technically only the bacteria become resistant to an antibiotic38. However, when interrogating genomic and EHR data to develop a forecast, one is inclined to define AMR by a broader criterion of observed phenomena as the infection takes effect in the host patient and hospital. Gene capture strategies44 and real-time PCR procedures45 may improve the detection of antibiotic-resistant bacteria, regardless of their environment. This matters for a ‘Global Health’ perspective since novel resistance arises in one place and then disseminates worldwide46.

Modern vaccine platforms such as mRNA, protein subunit and vector-based technologies offer new opportunities for developing targeted vaccines against resistant pathogens, alleviating the AMR pressure on healthcare systems worldwide47. Vaccines offer a complementary approach to antimicrobials by decreasing antibiotic demand; vaccines against Streptococcus pneumoniae and Haemophilus influenzae type b have reduced rates of pneumonia and meningitis48. This reduces demand for broad-spectrum antibiotics, slowing the selection of resistant strains by antibiotic regimens49. Moreover, vaccination against viral pathogens can help reduce the incidence of secondary bacterial infections and reduce symptomatic viral presentations that may be mistaken for bacterial infection50. In populations with high influenza vaccination coverage, there is a consequent reduction in antibiotic prescriptions, particularly during flu seasons51. mRNA vaccines, vector-based vaccines and protein subunit vaccines have expanded the potential for targeting AMR directly52. mRNA vaccines use messenger RNA to induce cells to produce specific antigens, thereby triggering an immune response to AMR pathogens53. This technology allows for the rapid development and testing of vaccines against AMR bacteria with rapid turnover, such as Pseudomonas aeruginosa and Mycobacterium tuberculosis54. Protein subunit vaccines enable targeted responses against resistant bacterial strains, such as MRSA55. Conjugate vaccines, which combine a protein with a polysaccharide to enhance immunogenicity, can prevent infections that commonly exhibit antibiotic resistance; the pneumococcal conjugate vaccine has not only reduced pneumococcal disease prevalence but also curbed the spread of drug-resistant S. pneumoniae strains56. However, caution is required, as some drug-resistant strains have evaded vaccination57 and general resistance has been reported to bounce back to pre-vaccine levels58. 
Vector-based vaccines can be engineered to target specific resistant pathogens, especially those with complex life cycles59. Escherichia coli, Klebsiella pneumoniae and Acinetobacter baumannii are emerging targets for pharmaceutical research60 since these infections are difficult to treat due to multidrug resistance.

Moreover, these developments complement combination machine-learning methodologies, which leverage data-driven models in synthetic gene circuit engineering61; the introduction of RhoFold+62, an RNA language model-based deep learning method that accurately predicts 3D structures of single-chain RNAs from sequences, enables a fully automated end-to-end pipeline for RNA 3D structure prediction. Vaccines could significantly impact AMR by preventing infections that are notoriously hard to manage with available antibiotics. Whilst vaccines are being developed, resistant microorganisms will need to be scrutinised for druggable vulnerabilities, for example by inducing collateral sensitivity through manipulation of bacterial physiology63. These intermediate efforts can be supported by antimicrobial resistance gene databases64,65,66,67,68,69, particularly those that offer spatiotemporal and abundance data70.

The full James Lind Alliance (JLA) protocol process was modified; each Priority-Setting Partnership (PSP) would normally consist of patients, carers and their representatives, and clinicians, and is led by a Steering Group. The Steering Group oversees the activities of the PSP and has responsibility for the activity and the outcomes of the PSP. Since the role of the PSP is to identify questions that have not been answered by research to date, this study followed a modified path with the experts giving answers within a thematic framework. While the study excluded direct public involvement in its priority setting, it acknowledges that public awareness and engagement are critical in combating AMR. Instead of broad public surveys and workshops, the study used targeted expert interviews to identify research priorities in applying AI to AMR. Despite this abridged process, the extensive subsequent thematic analysis maintained the principles set out by the National Institute for Health and Care Research, which coordinates the infrastructure of the JLA to oversee the processes for PSPs, based at the NIHR Coordinating Centre, University of Southampton.

The BARDI framework advances previous antimicrobial-resistance proposals by transforming disconnected objectives into a unified, action-oriented ecosystem. Whereas previous action plans simply urged stronger surveillance, more research, better diagnostics, expanded R&D and the development of an economic case for investment, BARDI weaves these goals into five interlocking pillars that actively drive progress. First, instead of leaving data systems in siloed national or sectoral hands, BARDI establishes a neutral data broker. This broker enforces common standards, mediates access and protects proprietary interests, ensuring that human health, veterinary, agricultural and environmental datasets flow together under fair governance. Second, BARDI elevates AI from an aspirational research tool to the central engine of the response: AI-driven modelling continuously ingests brokered data to forecast resistance trends, optimise pharmacokinetic/pharmacodynamic regimens and even propose novel therapeutic compounds. Third, rapid diagnostics are promoted from a side objective to a full pillar. Decentralised point-of-care (POC) tests feed real-time results into the data broker, triggering AI-informed stewardship interventions and improving surveillance with minimal delay. Fourth, the drug-discovery pillar couples AI-identified bacterial targets with a prioritisation schema linked directly to public health impact, ensuring that investment goes toward the most critical vaccines and antimicrobial compounds rather than dispersing funding across undifferentiated R&D. Finally, integrated economic prevention converts the longstanding call to ‘develop the economic case’ into a dynamic funding mechanism. Real-time data on resistance burden and projected economic losses guide the allocation of resources to stewardship programmes, awareness campaigns, incentive prizes and cross-sector collaborations. 
In this way, economics is not merely a justification for action but an operational tool that continually sustains and adapts the entire BARDI ecosystem.

The top ten expert research themes for the application of AI to AMR provide valuable clarity on allocating resources to inform research developments in biosecurity and pandemic preparation. We propose a BARDI framework for directing AMR policy (Brokered data-sharing, AI-driven modelling, Rapid diagnostics, Drug discovery and Integrated economic prevention). Data was the most consistently raised issue throughout the interviews and needs to be neutrally coordinated to avoid obstacles, including automation bias, fear of job displacement and scepticism about AI’s decision-making abilities. Developing AI for AMR faces complex challenges, particularly in ensuring that AI tools comply with medical device regulations and data protection laws across different countries. The BARDI framework is a coherent strategy to fight AMR with AI to help realise the aspiration of the GRAM Project to save up to 92 million lives by 20503.

Rethinking AI Innovation: Is Artificial Intelligence Advancing or Just Recycling Old Ideas?

Artificial Intelligence (AI) is often seen as the most important technology of our time. It is transforming industries, tackling global problems, and changing the way people work. The potential is enormous. But an important question remains: is AI truly creating new ideas, or just reusing old ones with faster computers and more data?

Generative AI systems, such as GPT-4, seem to produce original content. But often, they may only rearrange existing information in new ways. This question is not just about technology. It also affects where investors spend money, how companies use AI, and how societies handle changes in jobs, privacy, and ethics. To understand AI’s real progress, we need to look at its history, study patterns of development, and see whether it is making real breakthroughs or repeating what has been done before.

Looking Back: Lessons from AI’s Past

AI has evolved over more than seven decades, following a recurring pattern in which periods of genuine innovation are often interwoven with the revival of earlier concepts.

In the 1950s, symbolic AI emerged as an ambitious attempt to replicate human reasoning through explicit, rule-based programming. While this approach generated significant enthusiasm, it soon revealed its limitations. These systems struggled to interpret ambiguity, lacked adaptability, and failed when confronted with real-world problems that deviated from their rigidly defined structures.

The 1980s saw the emergence of expert systems, which aimed to replicate human decision-making by encoding domain knowledge into structured rule sets. These systems were initially seen as a breakthrough. However, they struggled when faced with complex and unpredictable situations, revealing the limitations of relying only on predefined logic for intelligence.

In the 2010s, deep learning became the focus of AI research and application. Neural networks had been introduced decades earlier, with the perceptron dating to the late 1950s. However, their true potential was realized only when advances in computing hardware, the availability of large datasets, and improved algorithms came together to overcome earlier limitations.

This history shows a repeating pattern in AI: earlier concepts often return and gain prominence when the necessary technological conditions are in place. It also raises the question of whether today’s AI advances are entirely new developments or improved versions of long-standing ideas made possible by modern computational power.

How Perception Frames the Story of AI Progress

Modern AI attracts attention because of its impressive capabilities. These include systems that can produce realistic images, respond to voice commands with natural fluency, and generate text that reads as if written by a person. Such applications influence the way people work, communicate, and create. For many, they seem to represent a sudden step into a new technological era.

However, this sense of novelty can be misleading. What appears to be a revolution is often the visible result of many years of gradual progress that remained outside public awareness. The reason AI feels new is less related to the invention of entirely unknown methods and more related to the recent combination of computing power, access to data, and practical engineering that has allowed these systems to operate at a large scale. This distinction is essential. If innovation is judged only by what feels different to users, there is a risk of overlooking the continuity in how the field develops.

This gap in perception affects public discussions. Industry leaders often describe AI as a series of transformative breakthroughs. Critics argue that much of the progress stems from refining existing techniques rather than developing entirely new ones. Both views can be correct. Yet without a clear understanding of what counts as innovation, debates about the future of the field may be influenced more by promotional claims than by technical facts.

The key challenge is to distinguish the feeling of novelty from the reality of innovation. AI may seem unfamiliar because its results now reach people quickly and are embedded in everyday tools. However, this should not be taken as evidence that the field has entered a completely new stage of thinking. Questioning this assumption allows for a more accurate evaluation of where the field is making real advances and where the progress may be more a matter of appearance.

True Innovation and the Illusion of Progress

Many advances considered breakthroughs in AI are, on closer examination, refinements of existing methods rather than foundational transformations. The industry often equates larger models, expanded datasets, and greater computational capacity with innovation. This expansion does yield measurable performance gains, yet it does not alter the underlying architecture or conceptual basis of the systems.

A clear example is the progression from earlier language models to GPT-4. While its scale and capabilities have increased significantly, its core mechanism remains statistical prediction of text sequences. Such developments represent optimization within established boundaries, not the creation of systems that reason or comprehend in a human-like sense.

Even techniques framed as transformative, such as reinforcement learning from human feedback, emerge from decades-old theoretical work. Their novelty lies more in the implementation context than in the conceptual origin. This raises an uncomfortable question: is the field witnessing genuine paradigm shifts, or are marketing narratives turning incremental engineering achievements into the appearance of revolution?

Without a critical distinction between genuine innovation and iterative enhancement, the discourse risks mistaking volume for vision and speed for direction.

Examples of Recycling in AI

Many AI developments are reapplications of older concepts in new contexts. Some examples follow:

Neural Networks

First explored in the mid-20th century, they became practical only after computing resources caught up.

Computer Vision

Early pattern recognition systems inspired today’s convolutional neural networks.

Chatbots

Rule-based systems from the 1960s, such as ELIZA, laid the groundwork for today’s conversational AI, though the scale and realism are vastly improved.

Optimization Techniques

Gradient descent, a standard training method, has been a part of mathematics for over a century.

These examples demonstrate that significant AI progress often stems from recombining, scaling, and optimizing established techniques, rather than from discovering entirely new foundations.
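To show how old the core training idea is in practice, here is plain gradient descent, the century-old method listed above, fitting a one-parameter model by minimising mean squared error in Python. The data and learning rate are illustrative assumptions; modern training loops layer refinements such as mini-batches, momentum and adaptive step sizes on top of this same update rule.

```python
# Fit y ≈ w * x by minimising mean squared error with plain gradient descent.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated with w = 2, so the optimum is w = 2


def loss_grad(w: float) -> float:
    """Derivative of mean((w*x - y)^2) with respect to w."""
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


w = 0.0
learning_rate = 0.05
for _ in range(200):
    w -= learning_rate * loss_grad(w)  # the update rule: step against the gradient

print(f"learned w = {w:.4f}")  # learned w = 2.0000
```

With these values each step shrinks the error in w by a factor of four, so the loop converges well within 200 iterations.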

The Role of Data, Compute, and Algorithms

Modern AI relies on three interconnected factors, namely, data, computing power, and algorithmic design. The expansion of the Internet and digital ecosystems has produced vast amounts of structured and unstructured data, enabling models to learn from billions of real-world examples. Advances in hardware, particularly GPUs and TPUs, have provided the capability to train increasingly large models with billions of parameters. Improvements in algorithms, including refined activation functions, more efficient optimization methods, and better architectures, have allowed researchers to extract greater performance from the same foundational concepts.

While these developments have resulted in significant progress, they also introduce challenges. The current trajectory often depends on exponential growth in data and computing resources, which raises concerns about cost, accessibility, and environmental sustainability. If further innovations require disproportionately larger datasets and hardware capabilities, the pace of innovation may slow once these resources become scarce or prohibitively expensive.

Market Hype vs. Actual Capability

AI is often promoted as being far more capable than it actually is. Headlines can exaggerate progress, and companies sometimes make bold claims to attract funding and public attention. For example, AI is described as understanding language, but in reality, current models do not truly comprehend meaning. They work by predicting the next word based on patterns in large amounts of data. Similarly, image generators can create impressive and realistic visuals, but they do not actually “know” what the objects in those images are.
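A drastically simplified illustration of "predicting the next word based on patterns" is a bigram model: count which word follows which in a corpus, then predict the most frequent successor. Large language models use neural networks over long contexts rather than bigram counts, so this Python sketch is an analogy for the statistical principle only, not a description of how GPT-4 works. The corpus is invented.

```python
from collections import Counter, defaultdict
from typing import Optional


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, how often each other word follows it."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(model: dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent successor seen in training, or None if the word is unseen."""
    successors = model.get(word.lower())  # .get does not trigger defaultdict's factory
    if not successors:
        return None
    return successors.most_common(1)[0][0]


corpus = "the cat sat on the mat the cat ate the fish"
model = train_bigrams(corpus)
print(predict_next(model, "the"))  # cat  ("cat" follows "the" twice, more than any other word)
print(predict_next(model, "dog"))  # None (never seen, so the model has nothing to offer)
```

Nothing in the model represents what a cat or a mat is; it only reproduces co-occurrence statistics, which is the gap between fluent output and comprehension that the paragraph above describes.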

This gap between perception and reality fuels both excitement and disappointment. It can lead to inflated expectations, which in turn increase the risk of another AI winter, a period when funding and interest decline because the technology fails to meet the promises made about it.

Where True AI Innovation Could Come From

If AI is to advance beyond recycling, several areas might lead the way:

Neuromorphic Computing

Hardware designed to work more like the human brain, potentially enabling energy-efficient and adaptive AI.

Hybrid Models

Systems that combine symbolic reasoning with neural networks, giving models both pattern recognition and logical reasoning abilities.

AI for Scientific Discovery

Tools that help researchers create new theories or materials, rather than only analyzing existing data.

General AI Research

Efforts to move from narrow AI, which is task-specific, to more flexible intelligence that can adapt to unfamiliar challenges.

These directions require collaboration between fields such as neuroscience, robotics, and quantum computing.

Balancing Progress with Realism

While AI has achieved remarkable outcomes in specific domains, it is essential to approach these developments with measured expectations. Current systems excel in clearly defined tasks but often struggle when faced with unfamiliar or complex situations that require adaptability and reasoning. This difference between specialized performance and broader human-like intelligence remains substantial.

Maintaining a balanced perspective ensures that excitement over immediate successes does not overshadow the need for deeper research. Efforts should extend beyond refining existing tools to include exploration of new approaches that support adaptability, independent reasoning, and learning in diverse contexts. Such a balance between celebrating achievements and confronting limitations can guide AI toward advances that are both sustainable and transformative.

The Bottom Line

AI has reached a stage where its progress is evident, yet its future direction requires careful consideration. The field has scaled up its systems, improved efficiency, and produced widely used applications. However, these achievements do not guarantee the arrival of entirely new capabilities. Treating incremental progress as fundamental change can encourage a short-term focus at the expense of long-term growth. Moving forward requires valuing present tools while also supporting research that goes beyond current limits.

Real progress may depend on rethinking system design, combining knowledge from different fields, and improving adaptability and reasoning. By avoiding exaggerated expectations and maintaining a balanced view, AI can advance in a way that is not only extensive but also meaningful, creating lasting and genuine innovation.


Meta Pursues AI Advancements by Collaborating with Google and OpenAI, ETCIO

Meta Platforms is considering partnerships with rivals Google or OpenAI to enhance artificial intelligence features in its applications, The Information reported on Friday, citing people familiar with the conversations.

Leaders at Meta’s new AI organization, Meta Superintelligence Labs, have explored integrating Google’s Gemini model to deliver conversational, text-based responses for queries submitted to Meta AI, the company’s primary chatbot, the report said.

Discussions have included leveraging OpenAI's models to power Meta AI and other AI features in Meta's social media apps, The Information added. Any deals with external model providers such as Google or OpenAI are likely temporary steps to enhance Meta's AI products until its own models advance, the report said. A priority for the lab is ensuring its next-generation model, Llama 5, can compete with rivals, it added.

Inside Meta, the company has already integrated external AI models into some internal tools for staff, The Information reported. For instance, employees can use Anthropic models to code via the company's internal coding assistant, the report added, citing three people familiar with the matter.

“We are taking an all-of-the-above approach to building the best AI products; and that includes building world-leading models ourselves, partnering with companies, as well as open sourcing technology,” a Meta spokesperson said in a statement. OpenAI, Google and Microsoft, which backs OpenAI, did not immediately respond to Reuters’ requests for comment. Reuters could not immediately verify the report.

Earlier this year, Meta committed billions of dollars to bring on former Scale AI CEO Alexandr Wang and former GitHub CEO Nat Friedman to co-lead Meta Superintelligence Labs, while offering generous compensation packages to attract dozens of leading AI researchers to the initiative.
