Tools & Platforms

ChatGPT dissidents, the students who refuse to use AI: ‘I couldn’t remember the last time I had written something by myself’

“I started using ChatGPT in my second year of college, during a very stressful time juggling internships, assignments, studies and extracurricular activities. To ease this burden, I began using it on small assignments. And, little by little, I realized [that it was remembering] details about my writing style and my previous texts. So, I quickly integrated it into everything… my work [became] as easy as clicking a button,” explains Mónica de los Ángeles Rivera Sosa, a 20-year-old Political Communications student at Emerson College in Boston, Massachusetts.

“I managed to pass the course… but I realized that I couldn’t remember the last time I had written an essay by myself, which was my favorite activity. This was the catalyst for me to stop using the app,” she affirms.

This student’s view isn’t the most common, but it’s not exceptional, either. More and more students are ceasing to use artificial intelligence (AI) in their assignments. They feel that, with this technology, they’re becoming lazier and less creative, losing the ability to think for themselves.

“I’ve stopped using artificial intelligence to do my university work because it doesn’t do me any good. Last year, I felt less creative. This year, I’m barely using it,” says Macarena Paz Guerrero, a third-year journalism student at Ramon Llull University in Barcelona. “At university, we should encourage experimentation, learning and critical thinking, instead of copying and pasting questions into a machine without even reading them,” she adds.

Microsoft recently published a study in which it surveyed 319 knowledge workers to investigate how using AI tools affects their critical thinking and their work. The results indicate that AI users produce a less diverse set of outcomes for the same task: workers who trust the machine put less effort into contributing their own ideas. But who delegates work to the machine? And why do they do so?

The workers who are most critical of AI are those who demand the most of themselves. In other words, the more confident people are in their own ability to perform a task, the less they rely on the technology. “We’re talking about overqualified [individuals]… that is, students or workers who stand out for their high abilities and encounter limitations when using AI,” says Francisco Javier González Castaño, a professor at the University of Vigo, in Spain. He has participated in the development of AI chatbots. “But for most people and tasks that require repetition, artificial intelligence tools are very helpful,” he adds.

“When a university assignment can easily be solved by a machine, it’s not the students’ problem. Rather, the education system [is at fault],” says Violeta González, a 25-year-old pianist and graduate student in Pedagogy at the Royal Conservatory of Brussels. “However, if the assignment requires a critical mindset to be carried out, things change, because AI still isn’t capable of doing many of the things that humans can do. A ChatGPT response is a blank canvas to work on. It’s nothing more than collected data that we use to decide what to do. On its own, it doesn’t contribute anything new.”

Despite criticism, generative AI is widely used in universities. According to a recent study by the CYD Foundation, 89% of Spanish undergraduate students use some of these tools: to clear up questions (66%); to research, analyze data, or gather information (48%); or even to write essays (45%). Approximately 44% of students use AI tools several times a week, while 35% use them daily.

Toni Lozano, a professor at the Autonomous University of Barcelona, admits that these tools pose a challenge for the education system. “[AI] can be helpful for students who want to improve the quality of their work and develop their own skills. [But it] can be detrimental for those who don’t want to put in the effort or lack the motivation. It’s just another tool – similar to a calculator – and it all depends on how you use it.”

“There are students who only attend university for the degree,” he notes, “and others who come to learn. But in any case, I don’t think limiting or suspending the use of AI is a good idea. We’re increasingly committed to in-person classes and have returned to written exams,” he adds.

AI for Critical Thinking

In an age of automation and uniform results, fostering critical thinking is a challenge for both universities and technology companies. These institutions are trying to develop generative AI tools that motivate users to think for themselves. They also claim to help users tackle more complex problems and enter a job market that’s increasingly shaped by AI. This is evident from the aforementioned Microsoft study and also from recent announcements by two of the leading AI companies: OpenAI and Anthropic.

OpenAI launched ChatGPT Edu – a version of its chatbot for students – in May of 2024. Anthropic, meanwhile, launched Claude for Education, a version of its chatbot focused on universities. Claude poses Socratic questions (“How would you approach this?” or “What evidence supports your conclusion?”) to guide students in problem-solving and help them develop critical thinking.

When Google first burst onto the scene, there were similar claims that it would lead to less creativity, less effort and less critical thinking. What are the differences between using a search engine and using generative AI to complete a project? “There are many,” Macarena Paz asserts emphatically. “In search engines, you enter the question, consult different pages and structure your answers, adding and discarding what you consider to be appropriate,” she details.

Paz explains that she’s now opting for other search engines, since Google has integrated AI through AI Overviews: automatically generated answers that appear at the top of the page for some searches. Instead, she uses Ecosia, which promotes itself as a sustainable alternative to Google because it uses the advertising revenue generated by searches to fund reforestation projects. All the students interviewed for this report expressed concern about the water consumption associated with each search performed with an AI tool.

“One of the biggest limitations I find with ChatGPT is that it doesn’t know how to say ‘no.’ If it doesn’t know an answer, it makes one up. This can be very dangerous. When I realized this, I started to take the information it gave me with a grain of salt. If you don’t add this layer of critical thinking, your work becomes very limited,” Violeta González explains. “[ChatGPT] selects the information for you and you lose that decision-making ability. It’s faster, but also more limited,” she clarifies. “Critical thinking is like an exercise. If you stop doing it, your body forgets it and you lose the talent,” Mónica de los Ángeles Rivera Sosa warns.

Generative AI also has technical limitations. “In the case of programming, we must differentiate between coding and programming. Generative AI is ideal for automating thousands of specific tasks executed with a single line of code… but it still shows significant limitations when it comes to solving complex and original problems,” Toni Lozano adds. In the humanities, it can write a report or an email appropriately and correctly, but it cannot develop a style of its own. A recognizable “ChatGPT tone” is already becoming established in writing. Again, this is the problem of standardization.

“People have never been as educated as they are now. But do we all really need to be hyper-educated for the system to work?” Francisco Javier González asks. “Obviously not. AI is likely to reduce some skills that aren’t as necessary as we think. There was a period in ancient times – I’m not saying it was better – when only a few monks had critical thinking skills. In five years, it won’t be necessary to learn languages. [At that point], something will be lost,” he admits.

There are scientific studies that confirm the negative impact of generative AI on memory, creativity and critical thinking. Before generative AI entered our lives, the American writer Nicholas Carr had already warned of the epistemological impact of the internet: “Once, I was a scuba diver in a sea of words. Now, I zip along the surface like a guy on a Jet Ski,” he writes in the opening pages of The Shallows: What the Internet Is Doing to Our Brains (2010). “As our window onto the world – and onto ourselves – a popular medium molds what we see and how we see it… and eventually, if we use it enough, it changes who we are, as individuals and as a society.”

If generative AI makes us less original and lazier, eroding our critical thinking skills, what impact will this have on our brains? Will we all have the same answers to different questions? Will everything be more uniform, less creative? Only time will tell. But as we wait for the future to speak, this is what ChatGPT says: “The advance of generative artificial intelligence poses a disturbing paradox: the more it facilitates our thinking, the less we exercise it.”

Sign up for our weekly newsletter to get more English-language news coverage from EL PAÍS USA Edition

Tools & Platforms

Pistoia Alliance Announces Agentic AI Collaboration Initiative

Doctor technology AI integrates big data analytics with clinical research, enabling precise treatment plans tailored to individual patient needs and genetic profiles. | Image Credit: © Suriyo – stock.adobe.com

At the European conference of the Pistoia Alliance, a global nonprofit, held early in 2025, a theme emerged: life sciences professionals view agentic artificial intelligence (AI) as potentially among the most disruptive emerging technologies over the next two to three years (1). According to the Pistoia Alliance, agentic AI has the potential to accelerate multi-step processes, such as target prioritization and compound optimization, by combining reasoning, tool use, and execution.

However, the Pistoia Alliance said in a Sept. 4, 2025 press release that surrendering full autonomy to AI creates a sort of “black box” that may undermine trust, reproducibility, and regulatory compliance (1). For those reasons, and with the overarching mission of adopting agentic AI safely, the nonprofit is establishing a new initiative that brings together experts from the pharmaceutical, technology, and biotech industries to help shape the standards and protocols under which AI agents will be allowed to operate.

How will the initiative encourage responsible AI use?

With Genentech providing the initial seed funding for the project, the Pistoia Alliance said it is currently seeking both additional partners and funds (1). The nonprofit, whose stated strategic priorities include harnessing AI to expedite R&D, is calling on its community of AI and machine learning (ML) experts to continue building support for responsible, industry-wide adoption of AI.

“Our members see agentic AI as one of the most impactful technologies set to change how they work and innovate, but they also recognize the risks if adoption happens without the right guardrails,” said Becky Upton, PhD, president of the Pistoia Alliance, in the press release (1). “The Alliance is uniquely positioned to lead this work, drawing on more than eight years’ experience in pre-competitive collaboration around AI, from benchmarking frameworks for large language models to a pharmacovigilance community focused on responsible AI deployment. We know that more expert minds focused on the same topic will advance the safe and successful use of AI technologies.”

What are priorities for the industry?

During a recent webinar, the Pistoia Alliance said it polled more than 100 pharma professionals, and the consensus top priority for pre-competitive collaboration was the creation of shared validation frameworks and metrics for model robustness and bias (1). According to the Pistoia Alliance, shared frameworks are urgently needed for the safe adoption of AI. Where evidence must be validated, reliable results depend on auditable agent workflows that are shaped by subject matter experts and draw on reputable data sources.

“This initiative will address the common issues we all face in integrating AI developments into a cohesive ecosystem that improves output quality,” said Robert Gill, the Agentic AI program lead at the Pistoia Alliance, in the press release (1). “It will enable members to link standalone AI applications into a dynamic network and build workflows where multiple agents can reason, plan and act together. By becoming sponsors, organizations can act as first movers—shaping the standards, gaining early access to outputs, and ensuring they are at the forefront of the next wave of AI innovation in healthcare.”

Want to make your voice heard?

Pharmaceutical Technology® Group is asking its audience within the bio/pharmaceutical industry to share their experiences in a survey that seeks perspectives on new and rapidly evolving technologies such as automation, advanced analytics, digital twins, and AI (2).

References

1. Pistoia Alliance. Pistoia Alliance Unveils Agentic AI Initiative and Seeks Industry Funding to Drive Safe Adoption. Press Release. Sept. 4, 2025.

2. Cole, C. Digital Transformation in Pharma Manufacturing: Industry Perspectives Survey. PharmTech.com, Aug. 27, 2025.

Tools & Platforms

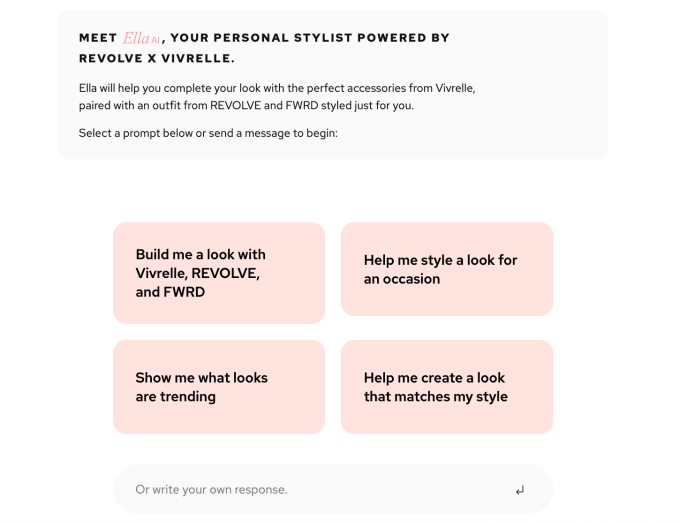

Fashion retailers partner to offer personalized AI styling tool ‘Ella’

The luxury membership platform Vivrelle, which allows customers to rent high-end goods, announced Thursday the launch of an AI personal styling tool called Ella as part of its partnership with fashion retailers Revolve and FWRD.

The launch is an example of how the fashion industry is leveraging AI technology to enhance customer experiences and is one of the first partnerships to see three retailers come together to offer a personalized AI experience. Revolve and FWRD let users shop designer clothing, while Revolve also has an option to shop pre-owned.

The tool, Ella, provides recommendations to customers across the three retailers on what to purchase or rent to make an outfit come to life. For example, users can ask for “a bachelorette weekend outfit,” or “what to pack for a trip,” and the technology will search across the Vivrelle, FWRD, and Revolve shopping platforms to create outfit suggestions. Users can then check out in one cart on Vivrelle.

In theory, the more one uses Ella, the better its suggestions become. It’s the fashion equivalent of asking ChatGPT what to wear in Miami for a girls’ weekend.

Blake Geffen, the CEO and co-founder of Vivrelle (which announced a $62 million Series C earlier this year), told TechCrunch that she hopes Ella can take the “stress out of packing for a vacation or everyday dressing.”

“Ella has been in the works for quite some time,” she told TechCrunch, adding that it took about a year to build and release the product.

This is actually the second AI tool from the three companies. The Vivrelle, Revolve, and FWRD partnership earlier this year also launched Complete the Look, which offers last-minute fashion suggestions to complement what’s in a customer’s cart at checkout. Their latest tool, Ella, however, takes the fashion recommendation game to another level.

Fashion has been obsessed with trying to make personalized shopping happen for decades. Even the ’90s movie “Clueless” showed Cher picking outfits from her digitized wardrobe.

The current AI boom has led to rapid innovation and democratized access to AI technology, allowing many entrepreneurs to launch personalized AI fashion companies and raise millions while doing so.

“With Ella, we’re giving our members as much flexibility and options as possible to shop or borrow with ease, through seamless conversations that allow you to share as little or as much as you want, just like talking to a live stylist,” Geffen said. “We’re excited to be the first brand to integrate rental, resale, and retail into one streamlined omnichannel experience.”
