Books, Courses & Certifications

Building AI-Resistant Technical Debt – O’Reilly

Anyone who’s used AI to generate code has seen it make mistakes. But the real danger isn’t the occasional wrong answer; it’s in what happens when those errors pile up across a codebase. Issues that seem small at first can compound quickly, making code harder to understand, maintain, and evolve. To really see that danger, you have to look at how AI is used in practice—which for many developers starts with vibe coding.

Vibe coding is an exploratory, prompt-first approach to software development where developers rapidly prompt, get code, and iterate. When the code seems close but not quite right, the developer describes what’s wrong and lets the AI try again. When it doesn’t compile or tests fail, they copy the error messages back to the AI. The cycle continues—prompt, run, error, paste, prompt again—often without reading or understanding the generated code. It feels productive because you’re making visible progress: errors disappear, tests start passing, features seem to work. You’re treating the AI like a coding partner who handles the implementation details while you steer at a high level.

Developers use vibe coding to explore and refine ideas, and it lets them generate large amounts of code quickly. It’s the natural first step for many developers using AI tools because it feels so intuitive and productive. Vibe coding offloads detail to the AI, making exploration and ideation fast and effective, which is exactly why it’s so popular.

The AI generates a lot of code, and it’s not practical to review every line every time it regenerates. Trying to read it all can lead to cognitive overload—mental exhaustion from wading through too much code—and makes it harder to throw away code that isn’t working just because you already invested time in reading it.

Vibe coding is a normal and useful way to explore with AI, but on its own it presents a significant risk. LLMs can hallucinate and produce made-up answers, for example by generating code that calls APIs or methods that don’t even exist. Preventing those AI-generated mistakes from compromising your codebase starts with understanding the capabilities and limitations of these tools, and taking an approach to AI-assisted development that takes those limitations into account.

Here’s a simple example of how these issues compound. When I ask AI to generate a class that handles user interaction, it often creates methods that directly read from and write to the console. When I then ask it to make the code more testable, if I don’t very specifically prompt for a simple fix like having methods take input as parameters and return output as values, the AI frequently suggests wrapping the entire I/O mechanism in an abstraction layer. Now I have an interface, an implementation, mock objects for testing, and dependency injection throughout. What started as a straightforward class has become a miniature framework. The AI isn’t wrong, exactly—the abstraction approach is a valid pattern—but it’s overengineered for the problem at hand. Each iteration adds more complexity, and if you’re not paying attention, you’ll end up with layers upon layers of unnecessary code. This is a good example of how vibe coding can balloon into unnecessary complexity if you don’t stop to verify what’s happening.
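The simpler fix mentioned above can be sketched in a few lines of Python. This is a hypothetical illustration (the `ConsoleGreeter` and `make_greeting` names are invented for this example, not taken from a real project): the first version mixes logic with console I/O, while the second takes input as a parameter and returns output as a value, so it can be tested without an interface, mocks, or dependency injection.

```python
class ConsoleGreeter:
    """Hard to test: the logic is tangled up with console reads and writes."""

    def run(self) -> None:
        name = input("What is your name? ")
        print(f"Hello, {name.strip().title()}!")


def make_greeting(name: str) -> str:
    """Easy to test: input arrives as a parameter, output leaves as a value."""
    return f"Hello, {name.strip().title()}!"


def main() -> None:
    # The only place that touches the console; everything else is plain logic.
    print(make_greeting(input("What is your name? ")))
```

A unit test for `make_greeting` is a single assertion, which is usually a sign that the extra abstraction layer was not needed for this problem.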

Novice Developers Face a New Kind of Technical Debt Challenge with AI

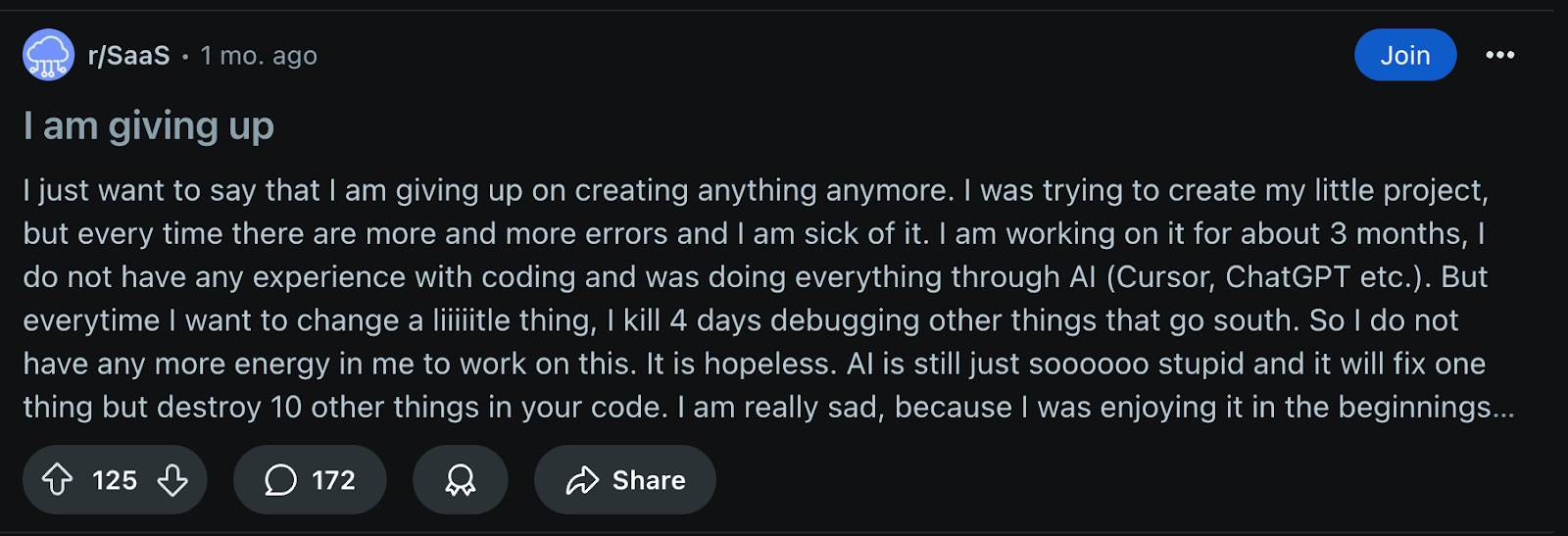

Three months after writing their first line of code, a Reddit user going by SpacetimeSorcerer posted a frustrated update: Their AI-assisted project had reached the point where making any change meant editing dozens of files. The design had hardened around early mistakes, and every change brought a wave of debugging. They’d hit the wall known in software design as “shotgun surgery,” where a single change ripples through so much code that it’s risky and slow to work on—a classic sign of technical debt, the hidden cost of early shortcuts that make future changes harder and more expensive.

AI didn’t cause the problem directly; the code worked (until it didn’t). But the speed of AI-assisted development let this new developer skip the design thinking that prevents these patterns from forming. The same thing happens to experienced developers when deadlines push delivery over maintainability. The difference is, an experienced developer often knows they’re taking on debt. They can spot antipatterns early because they’ve seen them repeatedly, and take steps to “pay off” the debt before it gets much more expensive to fix. Someone new to coding may not even realize it’s happening until it’s too late—and they haven’t yet built the tools or habits to prevent it.

Part of the reason new developers are especially vulnerable to this problem goes back to the Cognitive Shortcut Paradox (Radar, October 8). Without enough hands-on experience debugging, refactoring, and working through ambiguous requirements, they don’t have the instincts built up through experience to spot structural problems in AI-generated code. The AI can hand them a clean, working solution. But if they can’t see the design flaws hiding inside it, those flaws grow unchecked until they’re locked into the project, built into the foundations of the code so changing them requires extensive, frustrating work.

The signals of AI-accelerated technical debt show up quickly: highly coupled code where modules depend on each other’s internal details; “God objects” with too many responsibilities; overly structured solutions where a simple problem gets buried under extra layers. These are the same problems that typically reflect technical debt in human-built code; they emerge faster in AI-generated code because the code is produced much more quickly, often without oversight or deliberate design and architectural decisions. AI can generate these patterns convincingly, making them look deliberate even when they emerged by accident. Because the output compiles, passes tests, and works as expected, it’s easy to accept as “done” without thinking about how it will hold up when requirements change.

When adding or updating a unit test feels unreasonably difficult, that’s often the first sign the design is too rigid. The test is telling you something about the structure—maybe the code is too intertwined, maybe the boundaries are unclear. This feedback loop works whether the code was AI-generated or handwritten, but with AI the friction often shows up later, after the code has already been merged.

That’s where the “trust but verify” habit comes in. Trust the AI to give you a starting point, but verify that the design supports change, testability, and clarity. Ask yourself whether the code will still make sense to you—or anyone else—months from now. In practice, this can mean quick design reviews even for AI-generated code, refactoring when coupling or duplication starts to creep in, and taking a deliberate pass at naming so variables and functions read clearly. These aren’t optional touches; they’re what keep a codebase from locking in its worst early decisions.

AI can help with this too: It can suggest refactorings, point out duplicated logic, or help extract messy code into cleaner abstractions. But it’s up to you to direct it to make those changes, which means you have to spot them first—which is much easier for experienced developers who have seen these problems over the course of many projects.

Left to its defaults, AI-assisted development is biased toward adding new code, not revisiting old decisions. The discipline to avoid technical debt comes from building design checks into your workflow so AI’s speed works in service of maintainability instead of against it.


TII Falcon-H1 models now available on Amazon Bedrock Marketplace and Amazon SageMaker JumpStart

This post was co-authored with Jingwei Zuo from TII.

We are excited to announce the availability of the Technology Innovation Institute (TII)’s Falcon-H1 models on Amazon Bedrock Marketplace and Amazon SageMaker JumpStart. With this launch, developers and data scientists can now use six instruction-tuned Falcon-H1 models (0.5B, 1.5B, 1.5B-Deep, 3B, 7B, and 34B) on AWS, and have access to a comprehensive suite of hybrid architecture models that combine traditional attention mechanisms with State Space Models (SSMs) to deliver exceptional performance with unprecedented efficiency.

In this post, we present an overview of Falcon-H1 capabilities and show how to get started with TII’s Falcon-H1 models on both Amazon Bedrock Marketplace and SageMaker JumpStart.

Overview of TII and AWS collaboration

TII is a leading research institute based in Abu Dhabi. As part of UAE’s Advanced Technology Research Council (ATRC), TII focuses on advanced technology research and development across AI, quantum computing, autonomous robotics, cryptography, and more. TII employs international teams of scientists, researchers, and engineers in an open and agile environment, aiming to drive technological innovation and position Abu Dhabi and the UAE as a global research and development hub in alignment with the UAE National Strategy for Artificial Intelligence 2031.

TII and Amazon Web Services (AWS) are collaborating to expand access to made-in-the-UAE AI models across the globe. By combining TII’s technical expertise in building large language models (LLMs) with AWS Cloud-based AI and machine learning (ML) services, professionals worldwide can now build and scale generative AI applications using the Falcon-H1 series of models.

About Falcon-H1 models

The Falcon-H1 architecture implements a parallel hybrid design that draws on both the Mamba and Transformer architectures. It combines the faster inference and lower memory footprint of SSMs like Mamba with the strength of the Transformer attention mechanism in understanding context and generalizing. The Falcon-H1 architecture scales across multiple configurations ranging from 0.5–34 billion parameters and provides native support for 18 languages. According to TII, the Falcon-H1 family demonstrates notable efficiency, with published metrics indicating that smaller model variants achieve performance parity with larger models. Some of the benefits of the Falcon-H1 series include:

- Performance – The hybrid attention-SSM model has optimized parameters with adjustable ratios between attention and SSM heads, leading to faster inference, lower memory usage, and strong generalization capabilities. According to TII benchmarks published in Falcon-H1’s technical blog post and technical report, Falcon-H1 models demonstrate superior performance across multiple scales against other leading Transformer models of similar or larger scales. For example, Falcon-H1-0.5B delivers performance similar to typical 7B models from 2024, and Falcon-H1-1.5B-Deep rivals many of the current leading 7B-10B models.

- Wide range of model sizes – The Falcon-H1 series includes six sizes: 0.5B, 1.5B, 1.5B-Deep, 3B, 7B, and 34B, with both base and instruction-tuned variants. The Instruct models are now available in Amazon Bedrock Marketplace and SageMaker JumpStart.

- Multilingual by design – The models support 18 languages natively (Arabic, Czech, German, English, Spanish, French, Hindi, Italian, Japanese, Korean, Dutch, Polish, Portuguese, Romanian, Russian, Swedish, Urdu, and Chinese) and can scale to over 100 languages according to TII, thanks to a multilingual tokenizer trained on diverse language datasets.

- Up to 256,000-token context length – The Falcon-H1 series enables applications in long-document processing, multi-turn dialogue, and long-range reasoning, showing a distinct advantage over competitors in practical long-context applications like Retrieval Augmented Generation (RAG).

- Robust data and training strategy – Training of Falcon-H1 models employs an innovative approach that introduces complex data early on, contrary to traditional curriculum learning. It also implements strategic data reuse based on careful memorization window assessment. Additionally, the training process scales smoothly across model sizes through a customized Maximal Update Parametrization (µP) recipe, specifically adapted for this novel architecture.

- Balanced performance in science and knowledge-intensive domains – Through a carefully designed data mixture and regular evaluations during training, the model achieves strong general capabilities and broad world knowledge while minimizing unintended specialization or domain-specific biases.

In line with their mission to foster AI accessibility and collaboration, TII have released Falcon-H1 models under the Falcon LLM license. It offers the following benefits:

- Open source nature and accessibility

- Multi-language capabilities

- Cost-effectiveness compared to proprietary models

- Energy-efficiency

About Amazon Bedrock Marketplace and SageMaker JumpStart

Amazon Bedrock Marketplace offers access to over 100 popular, emerging, specialized, and domain-specific models, so you can find the best proprietary and publicly available models for your use case based on factors such as accuracy, flexibility, and cost. On Amazon Bedrock Marketplace you can discover models in a single place and access them through unified and secure Amazon Bedrock APIs. You can also select your desired number of instances and the instance type to meet the demands of your workload and optimize your costs.

SageMaker JumpStart helps you quickly get started with machine learning. It provides access to state-of-the-art model architectures, such as language models, computer vision models, and more, without having to build them from scratch. With SageMaker JumpStart you can deploy models in a secure environment by provisioning them on SageMaker inference instances and isolating them within your virtual private cloud (VPC). You can also use Amazon SageMaker AI to further customize and fine-tune the models and streamline the entire model deployment process.

Solution overview

This post demonstrates how to deploy a Falcon-H1 model using both Amazon Bedrock Marketplace and SageMaker JumpStart. Although we use Falcon-H1-0.5B as an example, you can apply these steps to other models in the Falcon-H1 series. For help determining which deployment option—Amazon Bedrock Marketplace or SageMaker JumpStart—best suits your specific requirements, see Amazon Bedrock or Amazon SageMaker AI?

Deploy Falcon-H1-0.5B-Instruct with Amazon Bedrock Marketplace

In this section, we show how to deploy the Falcon-H1-0.5B-Instruct model in Amazon Bedrock Marketplace.

Prerequisites

To try the Falcon-H1-0.5B-Instruct model in Amazon Bedrock Marketplace, you must have access to an AWS account that will contain your AWS resources.

Prior to deploying Falcon-H1-0.5B-Instruct, verify that your AWS account has sufficient quota allocation for ml.g6.xlarge instances. The default quota for endpoints using several instance types and sizes is 0, so attempting to deploy the model without a higher quota will trigger a deployment failure.

To request a quota increase, open the AWS Service Quotas console and search for Amazon SageMaker. Locate ml.g6.xlarge for endpoint usage and choose Request quota increase, then specify your required limit value. After the request is approved, you can proceed with the deployment.
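The same check can be scripted. The following is a minimal sketch, assuming boto3 is installed and that the quota appears in the Service Quotas API under the name `ml.g6.xlarge for endpoint usage` (the name is taken from the console step above; confirm it in your account). The `ensure_quota` helper and its return strings are illustrative, not part of any AWS SDK.

```python
from typing import Iterable, Optional

# Assumed quota name, copied from the console instructions above.
QUOTA_NAME = "ml.g6.xlarge for endpoint usage"


def find_quota(pages: Iterable[dict], quota_name: str = QUOTA_NAME) -> Optional[dict]:
    """Return the first quota entry whose QuotaName matches, or None."""
    for page in pages:
        for quota in page.get("Quotas", []):
            if quota.get("QuotaName") == quota_name:
                return quota
    return None


def ensure_quota(client, desired: float = 1.0) -> str:
    """Request an increase only when the applied quota is below `desired`."""
    paginator = client.get_paginator("list_service_quotas")
    quota = find_quota(paginator.paginate(ServiceCode="sagemaker"))
    if quota is None:
        return "quota not found"
    if quota["Value"] >= desired:
        return "quota sufficient"
    client.request_service_quota_increase(
        ServiceCode="sagemaker",
        QuotaCode=quota["QuotaCode"],
        DesiredValue=desired,
    )
    return "increase requested"
```

To run it against a real account, pass a Service Quotas client, for example `ensure_quota(boto3.client("service-quotas"))`. The request still has to be approved before the deployment can proceed.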

Deploy the model using the Amazon Bedrock Marketplace UI

To deploy the model using Amazon Bedrock Marketplace, complete the following steps:

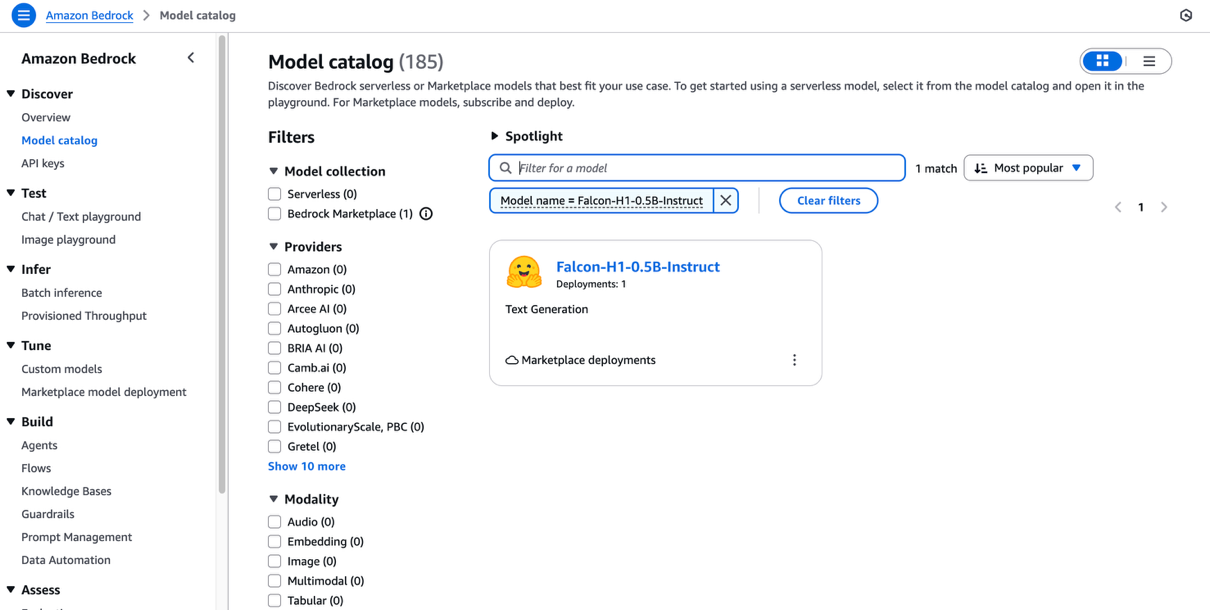

- On the Amazon Bedrock console, under Discover in the navigation pane, choose Model catalog.

- Filter for Falcon-H1 as the model name and choose Falcon-H1-0.5B-Instruct.

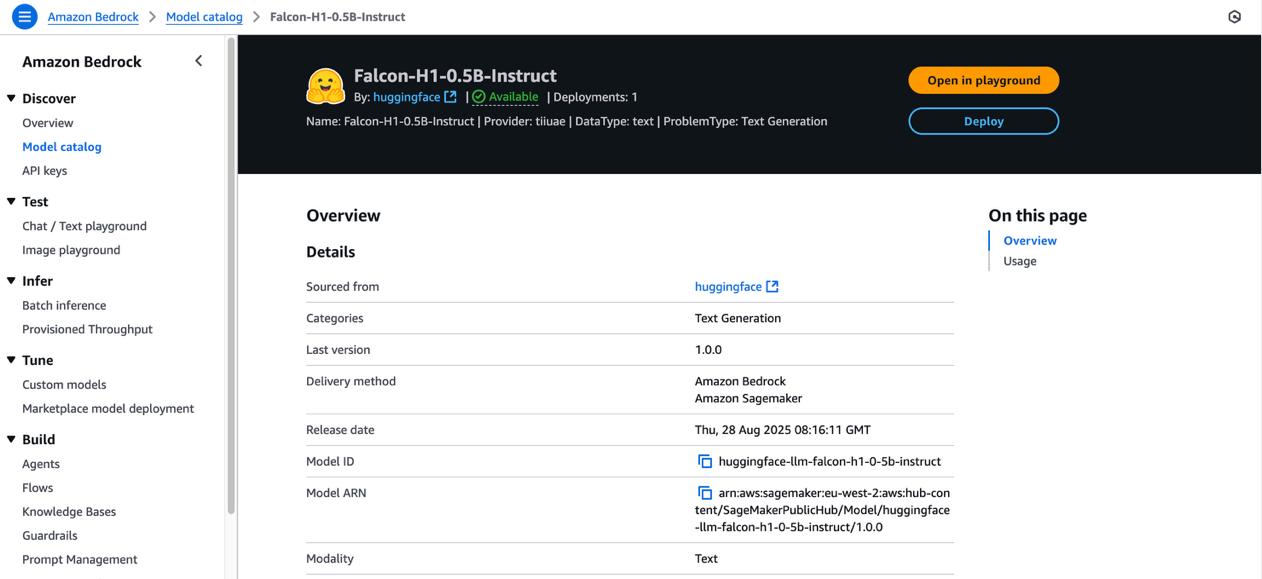

The model overview page includes information about the model’s license terms, features, setup instructions, and links to further resources.

- Review the model license terms, and if you agree with the terms, choose Deploy.

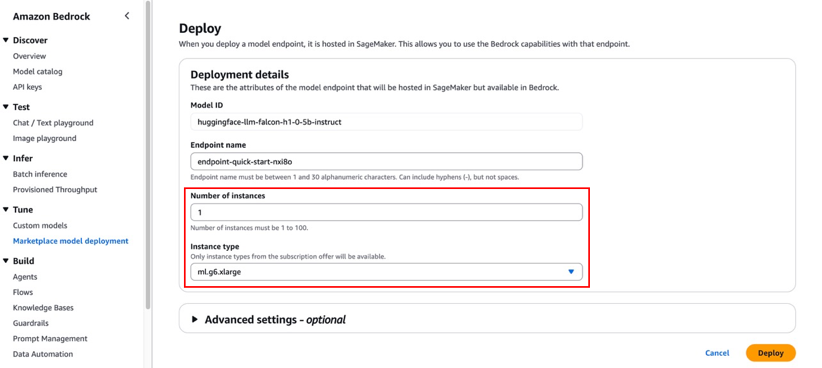

- For Endpoint name, enter an endpoint name or leave it as the default pre-populated name.

- To minimize costs while experimenting, set the Number of instances to 1.

- For Instance type, choose from the list of compatible instance types. Falcon-H1-0.5B-Instruct is an efficient model, so ml.g6.xlarge is sufficient for this exercise.

Although the default configurations are typically sufficient for basic needs, you can customize advanced settings like VPC, service access permissions, encryption keys, and resource tags. These advanced settings might require adjustment for production environments to maintain compliance with your organization’s security protocols.

- Choose Deploy.

- A prompt asks you to stay on the page while the AWS Identity and Access Management (IAM) role is being created. If your AWS account lacks sufficient quota for the selected instance type, you’ll receive an error message. In this case, refer to the preceding prerequisite section to increase your quota, then try the deployment again.

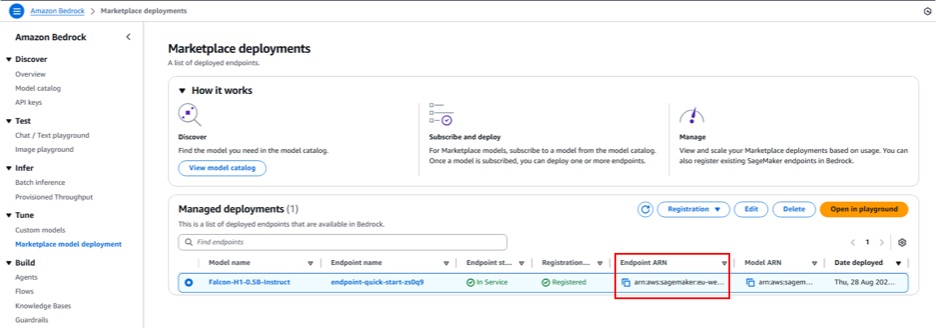

While deployment is in progress, you can choose Marketplace model deployments in the navigation pane to monitor the deployment progress in the Managed deployments section. When the deployment is complete, the endpoint status will change from Creating to In Service.

Interact with the model in the Amazon Bedrock Marketplace playground

You can now test Falcon-H1 capabilities directly in the Amazon Bedrock Marketplace playground. Select the managed deployment and choose Open in playground to interact with Falcon-H1-0.5B-Instruct.

Invoke the model using code

In this section, we demonstrate how to invoke the model using the Amazon Bedrock Converse API.
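A minimal sketch of such a call follows, assuming boto3 is installed and credentials are configured. The `ask` helper, the prompt, and the inference settings are illustrative choices, and `ENDPOINT_ARN` is a placeholder rather than a real endpoint.

```python
# Placeholder: substitute the ARN of your own managed deployment.
ENDPOINT_ARN = "arn:aws:sagemaker:<region>:<account-id>:endpoint/<endpoint-name>"


def ask(client, endpoint_arn: str, prompt: str, max_tokens: int = 256) -> str:
    """Send one user message through the Converse API and return the reply text."""
    response = client.converse(
        modelId=endpoint_arn,  # Marketplace deployments are addressed by endpoint ARN
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        inferenceConfig={"maxTokens": max_tokens, "temperature": 0.7},
    )
    parts = response["output"]["message"]["content"]
    # Join the text parts of the reply; non-text parts are skipped.
    return "".join(part.get("text", "") for part in parts)


def main() -> None:
    import boto3  # imported here so the helper above can be tested offline

    client = boto3.client("bedrock-runtime")
    print(ask(client, ENDPOINT_ARN, "Summarize what a state space model is."))
```

Calling `main()` sends a single prompt; for multi-turn dialogue, append the earlier user and assistant messages to the `messages` list.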

Replace the placeholder code with the endpoint’s Amazon Resource Name (ARN), which begins with arn:aws:sagemaker. You can find this ARN on the endpoint details page in the Managed deployments section.

To learn more about the detailed steps and example code for invoking the model using Amazon Bedrock APIs, refer to Submit prompts and generate response using the API.

Deploy Falcon-H1-0.5B-Instruct with SageMaker JumpStart

You can access FMs in SageMaker JumpStart through Amazon SageMaker Studio, the SageMaker SDK, and the AWS Management Console. In this walkthrough, we demonstrate how to deploy Falcon-H1-0.5B-Instruct using the SageMaker Python SDK. Refer to Deploy a model in Studio to learn how to deploy the model through SageMaker Studio.

Prerequisites

To deploy Falcon-H1-0.5B-Instruct with SageMaker JumpStart, you must have the following prerequisites:

- An AWS account that will contain your AWS resources.

- An IAM role to access SageMaker AI. To learn more about how IAM works with SageMaker AI, see Identity and Access Management for Amazon SageMaker AI.

- Access to SageMaker Studio with a JupyterLab space, or an interactive development environment (IDE) such as Visual Studio Code or PyCharm.

Deploy the model programmatically using the SageMaker Python SDK

Before deploying Falcon-H1-0.5B-Instruct using the SageMaker Python SDK, make sure you have installed the SDK and configured your AWS credentials and permissions.

The following code example demonstrates how to deploy the model:
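A minimal sketch of such a deployment, assuming the `sagemaker` package is installed and an execution role is available in your environment. `MODEL_ID` is a placeholder: look up the exact JumpStart model ID for Falcon-H1-0.5B-Instruct in the model catalog. The `deploy_falcon` wrapper and its `model_cls` parameter are illustrative additions that make the function testable without AWS access.

```python
# Placeholder: replace with the JumpStart model ID shown in the model catalog.
MODEL_ID = "<falcon-h1-0.5b-instruct-jumpstart-model-id>"
INSTANCE_TYPE = "ml.g6.xlarge"  # instance type named in the prerequisites


def deploy_falcon(model_id: str = MODEL_ID,
                  instance_type: str = INSTANCE_TYPE,
                  model_cls=None) -> str:
    """Deploy a JumpStart model and return the name of the new endpoint."""
    if model_cls is None:
        # Imported lazily so this module loads even without the SDK installed.
        from sagemaker.jumpstart.model import JumpStartModel
        model_cls = JumpStartModel
    model = model_cls(model_id=model_id, instance_type=instance_type)
    # accept_eula=True records that you reviewed and accepted the model license.
    predictor = model.deploy(accept_eula=True)
    return predictor.endpoint_name
```

Running `print(deploy_falcon())` with a real model ID creates a billable endpoint, so delete it when you are finished.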

When the previous code segment completes successfully, the Falcon-H1-0.5B-Instruct model deployment is complete and available on a SageMaker endpoint. Note the endpoint name shown in the output; you will replace the placeholder in the following code segment with this value.

The following code demonstrates how to prepare the input data, make the inference API call, and process the model’s response:
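A minimal sketch of these three steps, assuming boto3 is installed. The chat-style payload (`messages`, `max_tokens`, `temperature`) is an assumption about the request schema the serving container accepts, so check the model card before relying on it. `ENDPOINT_NAME` is a placeholder, and the response is returned as parsed JSON without assuming its structure.

```python
import json

# Placeholder: replace with the endpoint name printed by the deployment step.
ENDPOINT_NAME = "<your-endpoint-name>"


def build_payload(prompt: str, max_tokens: int = 256) -> dict:
    """Prepare the input data (assumed chat-style schema; verify on the model card)."""
    return {
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
        "temperature": 0.7,
    }


def invoke(client, endpoint_name: str, prompt: str) -> dict:
    """Call the endpoint and return the parsed JSON response."""
    response = client.invoke_endpoint(
        EndpointName=endpoint_name,
        ContentType="application/json",
        Body=json.dumps(build_payload(prompt)),
    )
    # The response body is a stream; read it fully before decoding.
    return json.loads(response["Body"].read())


def main() -> None:
    import boto3  # imported here so the helpers above can be tested offline

    client = boto3.client("sagemaker-runtime")
    print(json.dumps(invoke(client, ENDPOINT_NAME, "What is a hybrid SSM model?"), indent=2))
```

Printing the whole response first is a simple way to learn its shape before writing code that extracts the generated text.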

Clean up

To avoid ongoing charges for AWS resources used while experimenting with Falcon-H1 models, make sure to delete all deployed endpoints and their associated resources when you’re finished. To do so, complete the following steps:

- Delete Amazon Bedrock Marketplace resources:

  - On the Amazon Bedrock console, choose Marketplace model deployments in the navigation pane.

  - Under Managed deployments, choose the Falcon-H1 model endpoint you deployed earlier.

  - Choose Delete and confirm the deletion if you no longer need to use this endpoint in Amazon Bedrock Marketplace.

- Delete SageMaker endpoints:

  - On the SageMaker AI console, in the navigation pane, choose Endpoints under Inference.

  - Select the endpoint associated with the Falcon-H1 models.

  - Choose Delete and confirm the deletion. This stops the endpoint and avoids further compute charges.

- Delete SageMaker models:

  - On the SageMaker AI console, choose Models under Inference.

  - Select the model associated with your endpoint and choose Delete.

Always verify that all endpoints are deleted after experimentation to optimize costs. Refer to the Amazon SageMaker documentation for additional guidance on managing resources.
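The SageMaker part of this cleanup can also be scripted. The following is a minimal sketch, assuming boto3 and an endpoint you created through SageMaker JumpStart; the `delete_endpoint_resources` helper is illustrative. It does not cover Amazon Bedrock Marketplace deployments, which are removed as described in the console steps above.

```python
def delete_endpoint_resources(sm, endpoint_name: str) -> list:
    """Delete an endpoint, its endpoint config, and the models behind it.

    Returns the names of the models that were deleted.
    """
    # Look up the related resources before deleting anything.
    config_name = sm.describe_endpoint(EndpointName=endpoint_name)["EndpointConfigName"]
    variants = sm.describe_endpoint_config(EndpointConfigName=config_name)["ProductionVariants"]
    model_names = [v["ModelName"] for v in variants if "ModelName" in v]

    sm.delete_endpoint(EndpointName=endpoint_name)  # stops compute charges
    sm.delete_endpoint_config(EndpointConfigName=config_name)
    for name in model_names:
        sm.delete_model(ModelName=name)
    return model_names
```

To use it, pass a SageMaker client, for example `delete_endpoint_resources(boto3.client("sagemaker"), "<your-endpoint-name>")`, then confirm in the console that nothing is left running.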

Conclusion

The availability of Falcon-H1 models in Amazon Bedrock Marketplace and SageMaker JumpStart helps developers, researchers, and businesses build cutting-edge generative AI applications with ease. Falcon-H1 models offer multilingual support (18 languages) across various model sizes (from 0.5B to 34B parameters) and support up to 256K context length, thanks to their efficient hybrid attention-SSM architecture.

By using the seamless discovery and deployment capabilities of Amazon Bedrock Marketplace and SageMaker JumpStart, you can accelerate your AI innovation while benefiting from the secure, scalable, and cost-effective AWS Cloud infrastructure.

We encourage you to explore the Falcon-H1 models in Amazon Bedrock Marketplace or SageMaker JumpStart. You can use these models in AWS Regions where Amazon Bedrock or SageMaker JumpStart and the required instance types are available.

For further learning, explore the AWS Machine Learning Blog, SageMaker JumpStart GitHub repository, and Amazon Bedrock User Guide. Start building your next generative AI application with Falcon-H1 models and unlock new possibilities with AWS!

Special thanks to everyone who contributed to the launch: Evan Kravitz, Varun Morishetty, and Yotam Moss.

About the authors

Mehran Nikoo leads the Go-to-Market strategy for Amazon Bedrock and agentic AI in EMEA at AWS, where he has been driving the development of AI systems and cloud-native solutions over the last four years. Prior to joining AWS, Mehran held leadership and technical positions at Trainline, McLaren, and Microsoft. He holds an MBA from Warwick Business School and an MRes in Computer Science from Birkbeck, University of London.

Mustapha Tawbi is a Senior Partner Solutions Architect at AWS, specializing in generative AI and ML, with 25 years of enterprise technology experience across AWS, IBM, Sopra Group, and Capgemini. He has a PhD in Computer Science from Sorbonne and a Master’s degree in Data Science from Heriot-Watt University Dubai. Mustapha leads generative AI technical collaborations with AWS partners throughout the MENAT region.

Jingwei Zuo is a Lead Researcher at the Technology Innovation Institute (TII) in the UAE, where he leads the Falcon Foundational Models team. He received his PhD in 2022 from University of Paris-Saclay, where he was awarded the Plateau de Saclay Doctoral Prize. He holds an MSc (2018) from the University of Paris-Saclay, an Engineer degree (2017) from Sorbonne Université, and a BSc from Huazhong University of Science & Technology.

John Liu is a Principal Product Manager for Amazon Bedrock at AWS. Previously, he served as the Head of Product for AWS Web3/Blockchain. Prior to joining AWS, John held various product leadership roles at public blockchain protocols and financial technology (fintech) companies for 14 years. He also has nine years of portfolio management experience at several hedge funds.

Hamza MIMI is a Solutions Architect for partners and strategic deals in the MENAT region at AWS, where he bridges cutting-edge technology with impactful business outcomes. With expertise in AI and a passion for sustainability, he helps organizations architect innovative solutions that drive both digital transformation and environmental responsibility, transforming complex challenges into opportunities for growth and positive change.


Oldcastle accelerates document processing with Amazon Bedrock

This post was written with Avdhesh Paliwal of Oldcastle APG.

Oldcastle APG, one of the largest global networks of manufacturers in the architectural products industry, was grappling with an inefficient and labor-intensive process for handling proof of delivery (POD) documents, known as ship tickets. The company was processing 100,000–300,000 ship tickets per month across more than 200 facilities. Their existing optical character recognition (OCR) system was unreliable, requiring constant maintenance and manual intervention. It could only accurately read 30–40% of the documents, leading to significant time and resource expenditure.

This post explores how Oldcastle partnered with AWS to transform their document processing workflow using Amazon Bedrock with Amazon Textract. We discuss how Oldcastle overcame the limitations of their previous OCR solution to automate the processing of hundreds of thousands of POD documents each month, dramatically improving accuracy while reducing manual effort. This solution demonstrates a practical, scalable approach that can be adapted to your specific needs, such as similar challenges addressing document processing or using generative AI for business process optimization.

Challenges with document processing

The primary challenge for Oldcastle was to find a solution that could accomplish the following:

- Accurately process a high volume of ship tickets (PODs) with minimal human intervention

- Scale to handle 200,000–300,000 documents per month

- Handle inconsistent inputs like rotated pages and variable formatting

- Improve the accuracy of data extraction from the current 30–40% to a much higher rate

- Add new capabilities like signature validation on PODs

- Provide real-time visibility into outstanding PODs and deliveries

Additionally, Oldcastle needed a solution for processing supplier invoices and matching them against purchase orders, which presented similar challenges due to varying document formats.

The existing process required dispatchers at more than 200 facilities to spend 4–5 hours daily manually processing ship tickets. This consumed valuable human resources and led to delays in processing and potential errors in data entry. The IT team was burdened with constant maintenance and development efforts to keep the unreliable OCR system functioning.

Solution overview

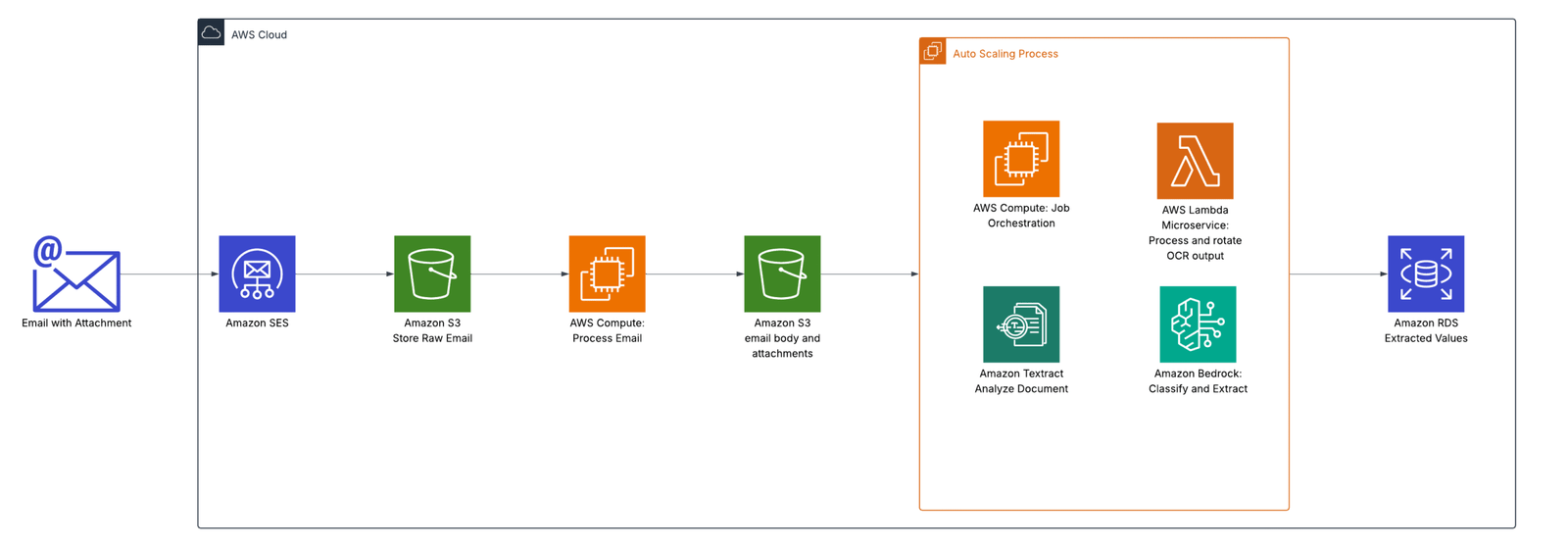

AWS Solutions Architects worked closely with Oldcastle engineers to build a solution addressing these challenges. The end-to-end workflow uses Amazon Simple Email Service (Amazon SES) to receive ship tickets, which are sent directly from drivers in the field. The system processes emails at scale using an event-based architecture centered on Amazon S3 Event Notifications. The workflow sends ship ticket documents to an automatic scaling compute job orchestrator. Documents are processed with the following steps:

- The system sends PDF files to Amazon Textract using the Start Document Analysis API with Layout and Signature features.

- Amazon Textract results are processed by an AWS Lambda microservice. This microservice resolves rotation issues with page text and generates a markdown representation of the text for each page.

- The markdown is passed to Amazon Bedrock, which efficiently extracts key values from the markdown text.

- The orchestrator saves the results to their Amazon Relational Database Service (Amazon RDS) for PostgreSQL database.

The following diagram illustrates the solution architecture.

In this architecture, Amazon Textract is an effective solution to handle large PDF files at scale. The output of Amazon Textract contains the necessary geometries used to calculate rotation and fix layout issues before generating markdown. Quality markdown layouts are critical for Amazon Bedrock in identifying the right key-value pairs from the content. We further optimized cost by extracting only the data needed to limit output tokens and by using Amazon Bedrock batch processing to get the lowest token cost. Amazon Bedrock was chosen for its cost-effectiveness and its ability to process shipping tickets that share a common format, where the fields that need to be extracted are the same.
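The rotation step can be illustrated with a short sketch. This is not Oldcastle’s implementation; it is one plausible approach under stated assumptions: that each Amazon Textract `LINE` block carries a `Geometry.Polygon` whose first two points trace the top edge of the text in reading direction, and that pages are rotated in multiples of 90 degrees. The helper names are hypothetical. The request builder shows the Layout and Signature features named in the steps above.

```python
import math


def textract_request(bucket: str, key: str) -> dict:
    """Arguments for Textract's StartDocumentAnalysis call on a PDF stored in S3."""
    return {
        "DocumentLocation": {"S3Object": {"Bucket": bucket, "Name": key}},
        "FeatureTypes": ["LAYOUT", "SIGNATURES"],
    }


def line_angle_degrees(polygon: list) -> float:
    """Angle of the polygon's first edge, measured in image coordinates."""
    (x0, y0), (x1, y1) = [(p["X"], p["Y"]) for p in polygon[:2]]
    return math.degrees(math.atan2(y1 - y0, x1 - x0))


def page_rotation(blocks: list) -> int:
    """Snap the median LINE angle to the nearest multiple of 90 degrees (0-270)."""
    angles = sorted(
        line_angle_degrees(b["Geometry"]["Polygon"])
        for b in blocks
        if b.get("BlockType") == "LINE"
    )
    if not angles:
        return 0  # no text found; assume the page is upright
    median = angles[len(angles) // 2]
    return int(round(median / 90.0)) * 90 % 360
```

With boto3, the request would be sent as `textract.start_document_analysis(**textract_request(bucket, key))`, and the blocks retrieved later with `get_document_analysis`. Using the median keeps a few skewed lines, such as stamps or handwriting, from changing the result.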

Results

The implementation using this architecture on AWS brought numerous benefits to Oldcastle:

- Business process improvement – The solution accomplished the following:

  - Alleviated the need for manual processing of ship tickets at each facility

  - Automated document processing with minimal human intervention

  - Improved accuracy and reliability of data extraction

  - Enhanced ability to validate signatures and reject incomplete documents

  - Provided real-time visibility into outstanding PODs and deliveries

- Productivity gains – Oldcastle saw the following benefits:

  - Significantly fewer human hours were spent on manual data entry and document processing

  - Staff had more time for more value-added activities

  - The IT team benefited from reduced development and maintenance efforts

- Scalability and performance – The team experienced the following performance gains:

  - They seamlessly scaled from processing a few thousand documents to 200,000–300,000 documents per month

  - The team observed no performance issues with increased volume

- User satisfaction – The solution improved user sentiment in several ways:

  - High user confidence in the new system due to its accuracy and reliability

  - Positive feedback from business users on the ease of use and effectiveness

- Cost-effective – With this approach, Oldcastle can process documents at less than $0.04 per page

Conclusion

With the success of the AWS implementation, Oldcastle is exploring potential expansion to other use cases such as AP invoice processing, W9 form validation, and automated document approval workflows. This strategic move towards AI-powered document processing is positioning Oldcastle for improved efficiency and scalability in its operations.

Review your current manual document processing procedures and identify where intelligent document processing can help you automate these workflows for your business.

About the authors

Erik Cordsen is a Solutions Architect at AWS serving customers in Georgia. He is passionate about applying cloud technologies and ML to solve real life problems. When he is not designing cloud solutions, Erik enjoys travel, cooking, and cycling.

Sourabh Jain is a Senior Solutions Architect with over 8 years of experience developing cloud solutions that drive better business outcomes for organizations worldwide. He specializes in architecting and implementing robust cloud software solutions, with extensive experience working alongside global Fortune 500 teams across diverse time zones and cultures.

Avdhesh Paliwal is an accomplished Application Architect at Oldcastle APG with 29 years of extensive ERP experience. His expertise spans Manufacturing, Supply Chain, and Human Resources modules, with a proven track record of designing and implementing enterprise solutions that drive operational efficiency and business value.

Coursera’s 2025 Partner Awards for Outstanding Achievement

Discover Coursera’s 2025 Partner Award winners, honoring leading universities and companies driving innovation and excellence in online education worldwide.

Transformative learning thrives through collaboration. Our partner ecosystem of over 350 leading universities and companies creates world-class educational content that reaches millions of learners across more than 190 countries.

At our 13th annual Coursera Conference in Las Vegas on September 9th, 2025, we celebrated six partners who have demonstrated exceptional achievement, creativity, and commitment to learner success.

These Partner Awards honor institutions that have leveraged the Coursera platform to create innovative, high-impact learning experiences. From pioneering new educational models to embracing cutting-edge AI technologies, our 2025 winners exemplify what’s possible when educational vision meets technological capability.

Let’s meet the partners who are shaping the future of online learning.

Rising Star Award

This award celebrates a new partner who has quickly demonstrated exceptional innovation and impact in their collaboration with Coursera.

Winner: Heriot-Watt University

Heriot-Watt University has made an immediate impact with their MSc in Computer Science, launched this year with a revolutionary approach to accessible higher education. Their program combines flexible, affordable design with performance-based admissions, while pairing degree programs with free access to over 500 industry certificates. This innovative model provides a powerful example of inclusive, career-aligned higher education that meets learners where they are and prepares them for where they want to go.

AI Innovation Award

This award honors a partner who has used AI tools to create more personalized, innovative learning programs.

Winner: Fractal

Fractal is redefining what’s possible in online education through bold, future-focused innovation. As the first Coursera partner to harness AI-driven content creation and digital cloning technology, they have set a new standard for high-quality, personalized learning at scale. With multilingual dubbing capabilities and skills-based learning pathways, Fractal is creating a more accessible, globally connected future of education that adapts to diverse learner needs and preferences.

Engagement Excellence Award

This award recognizes an institution that has cultivated creative, effective engagement strategies and driven significant increases in learning participation and completion rates.

Winner: Adobe

Adobe demonstrates what’s possible when a partner invests deeply in the complete learner journey. From discovery through progression, Adobe brings a bold, data-informed strategy that truly centers learners at every step. Their global reach, innovative content design, and focus on meaningful outcomes have created seamless, high-impact learning pathways that deeply engage learners and drive sustained participation across diverse audiences.

Trailblazer Award

This award celebrates a partner who has consistently pioneered new approaches to online education and continues to push the boundaries of what’s possible.

Winner: Gies College of Business, University of Illinois

Gies College of Business has been a trailblazer from the very beginning, launching the first degree program on Coursera and fundamentally reimagining higher education delivery. That innovative spirit remains strong today, demonstrated through their rapid adoption of AI tools and continuously evolving content and learning experiences. Gies consistently shows their deep commitment to accessible, high-quality education while maintaining their position at the forefront of educational innovation.

Vision and Values Award

This award recognizes a partner that embodies our shared values while advancing the future of learning.

Winner: Duke University

Duke University exemplifies innovation with intention, principle, and purpose. As a deeply engaged member of Coursera’s Innovation Team, they have helped shape our most promising new features and capabilities. Through strategic stewardship of their Coursera portfolio, thoughtful content optimization, and data-driven, learner-first experimentation, Duke ensures the future of online education will be equitable and transformative for learners everywhere.

Maximizing Impact Award

This award celebrates institutions that have achieved significant outcomes for their learners, organization, or region, including improved employability, enhanced learner capabilities, and meaningful social impact.

Winner: Universidad de los Andes

Universidad de los Andes has been a powerhouse since joining the Coursera community in 2015. With five degree programs and nearly 100 open courses on Coursera, they have reached learners at unprecedented scale while embracing every major platform innovation. From new admissions and credit strategies to early adoption of AI tools, Universidad de los Andes demonstrates tireless commitment to experimentation and excellence in online education delivery.

These six partners represent the very best of what collaborative innovation can achieve in education. They have transformed how we think about accessibility, engagement, and impact in online learning while maintaining unwavering commitment to learner success.

To our partner community of over 350 leading universities and companies: your dedication to creating world-class educational content continues to expand opportunities for millions of learners worldwide. Together, we are building an ecosystem where quality education reaches across geographical boundaries and where innovation serves the fundamental goal of transforming lives through learning.

Thank you for your partnership, your vision, and your commitment to educational excellence. Here’s to continued collaboration in unlocking human potential through the power of learning.

Join an ecosystem of over 350 leading universities and companies transforming education worldwide.
