AI Insights

Better Buy in 2025: SoundHound AI, or This Other Magnificent Artificial Intelligence Stock?

SoundHound AI (SOUN) is a leading developer of conversational artificial intelligence (AI) software, and its revenue is growing at a lightning-fast pace. Its stock soared by 835% in 2024 after Nvidia revealed a small stake in the company, although the chip giant has since sold its entire position.

DigitalOcean (DOCN) is another up-and-coming AI company. It operates a cloud computing platform designed specifically for small and mid-sized businesses (SMBs), which features a growing portfolio of AI services, including data center infrastructure and a new tool that lets customers build custom AI agents.

With the second half of 2025 officially underway, which stock is the better buy between SoundHound AI and DigitalOcean?


The case for SoundHound AI

SoundHound AI amassed an impressive customer list that includes automotive giants like Hyundai and Kia and quick-service restaurant chains like Chipotle and Papa John’s. All of them use SoundHound’s conversational AI software to deliver new and unique experiences for their customers.

Automotive manufacturers are integrating SoundHound’s Chat AI product into their new vehicles, where it can teach drivers how to use different features or answer questions about gas mileage and even the weather. Manufacturers can customize Chat AI’s personality to suit their brand, which differentiates the user experience from the competition.

Restaurant chains use SoundHound’s software to autonomously take customer orders in-store, over the phone, and in the drive-thru. They also use the company’s voice-activated virtual assistant tool called Employee Assist, which workers can consult whenever they need instructions for preparing a menu item or help understanding store policies.

SoundHound generated $84.7 million in revenue during 2024, an 85% increase from the previous year. Management’s latest guidance suggests the company could deliver $167 million in revenue during 2025, which would represent accelerated growth of 97%. SoundHound also has an order backlog worth over $1.2 billion, which it expects to convert into revenue over the next six years, providing support for further growth.
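As a quick check on these growth rates, the 2025 figure follows directly from the two revenue numbers cited here ($84.7 million for 2024 and $167 million of guidance for 2025). Working backward from the 85% figure also implies 2023 revenue of roughly $46 million; that is an inferred value, not one stated in this article:

$$
\frac{167}{84.7} - 1 \approx 0.972 \approx 97\%, \qquad \frac{84.7}{1.85} \approx 45.8
$$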

But there are a couple of caveats. First, SoundHound continues to lose money at the bottom line. It burned through $69.1 million on a non-GAAP (adjusted) basis in 2024 and a further $22.3 million in the first quarter of 2025 (ended March 31). The company has only $246 million in cash on hand, so it can’t afford to keep losing money at this pace forever; eventually, it will have to cut costs and sacrifice some of its revenue growth to achieve profitability.

The second caveat is SoundHound’s valuation, which we’ll explore further in a moment.

The case for DigitalOcean

The cloud computing industry is dominated by trillion-dollar tech giants like Amazon and Microsoft, but they mostly design their services for large organizations with deep pockets. SMB customers don’t really move the needle for them, which leaves an enormous gap in the cloud market for other players like DigitalOcean.

DigitalOcean offers clear and transparent pricing, attentive customer service, and a simple dashboard, which is a great set of features for small- and mid-sized businesses with limited resources. The company is now helping those customers tap into the AI revolution in a cost-efficient way with a growing portfolio of services.

DigitalOcean operates data centers filled with graphics processing units (GPUs) from leading suppliers like Nvidia and Advanced Micro Devices, and it offers fractional capacity, which means its customers can access between one and eight chips. This is ideal for small workloads like deploying an AI customer service chatbot on a website.

Earlier this year, DigitalOcean launched a new platform called GenAI, where its clients can create and deploy custom AI agents. These agents can do almost anything, whether an SMB needs them to analyze documents, detect fraud, or even autonomously onboard new employees. The agents are built on the latest third-party large language models from leading developers like OpenAI and Meta Platforms, so SMBs know they are getting the same technology as some of their largest competitors.

DigitalOcean expects to generate $880 million in total revenue during 2025, which would represent modest growth of 13% compared to the prior year. However, during the first quarter, the company said its AI revenue surged by an eye-popping 160%. Management doesn’t disclose exactly how much revenue is attributable to its AI services, but it says demand for GPU capacity continues to outstrip supply, which suggests the rapid growth is likely to continue for now.

Unlike SoundHound AI, DigitalOcean is highly profitable. It generated $84.5 million in generally accepted accounting principles (GAAP) net income during 2024, which was up by a whopping 335% from the previous year. It carried that momentum into 2025, with its first-quarter net income soaring by 171% to $38.2 million.

The verdict

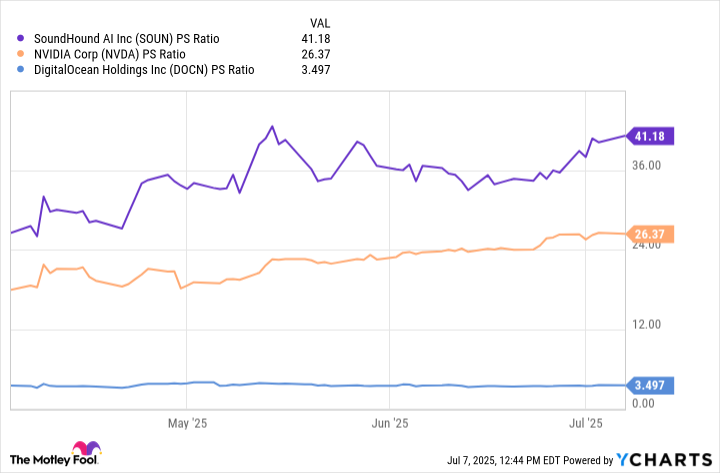

For me, the choice between SoundHound AI and DigitalOcean mostly comes down to valuation. SoundHound AI stock is trading at a sky-high price-to-sales (P/S) ratio of 41.4, making it even more expensive than Nvidia, which is one of the highest-quality companies in the world. DigitalOcean stock, on the other hand, trades at a very modest P/S ratio of just 3.5, which is actually near the cheapest level since the company went public in 2021.

SOUN PS Ratio data by YCharts
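To put the two P/S ratios in perspective: the P/S ratio is conventionally a company’s market capitalization divided by its trailing revenue, so it measures how many dollars investors pay for each dollar of annual sales. Using only the two ratios quoted above, SoundHound investors are paying roughly 12 times as much per dollar of sales as DigitalOcean investors:

$$
\text{P/S} = \frac{\text{market capitalization}}{\text{trailing 12-month revenue}}, \qquad \frac{41.4}{3.5} \approx 11.8
$$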

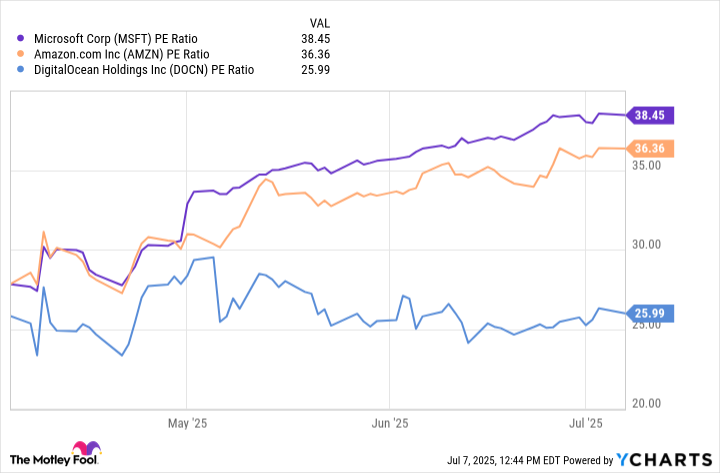

We can also value DigitalOcean based on its earnings, which can’t be said for SoundHound because the company isn’t profitable. DigitalOcean stock is trading at a price-to-earnings (P/E) ratio of 26.2, which makes it much cheaper than larger cloud providers like Amazon and Microsoft (although they also operate a host of other businesses):

MSFT PE Ratio data by YCharts

SoundHound’s rich valuation might limit further upside in the near term. When we combine that with the company’s steep losses at the bottom line, its stock simply doesn’t look very attractive right now, which might be why Nvidia sold its stake. DigitalOcean stock looks like a bargain in comparison, and it has legitimate potential for upside from here thanks to the company’s surging AI revenue and highly profitable business.

John Mackey, former CEO of Whole Foods Market, an Amazon subsidiary, is a member of The Motley Fool’s board of directors. Randi Zuckerberg, a former director of market development and spokeswoman for Facebook and sister to Meta Platforms CEO Mark Zuckerberg, is a member of The Motley Fool’s board of directors. Anthony Di Pizio has no position in any of the stocks mentioned. The Motley Fool has positions in and recommends Advanced Micro Devices, Amazon, Chipotle Mexican Grill, DigitalOcean, Meta Platforms, Microsoft, and Nvidia. The Motley Fool recommends the following options: long January 2026 $395 calls on Microsoft, short January 2026 $405 calls on Microsoft, and short June 2025 $55 calls on Chipotle Mexican Grill. The Motley Fool has a disclosure policy.


China Court Artificial Intelligence Cases Shape IP & Rights

On September 10, 2025, the Beijing Internet Court released eight Typical Cases Involving Artificial Intelligence (涉人工智能典型案例) “to better serve and safeguard the healthy and orderly development of the artificial intelligence industry.” Typical cases in China serve as educational examples, unify legal interpretation, and guide lower courts and the general public. While not legally binding precedents like those in common law systems, these cases provide authoritative guidance in a civil law system where codified statutes are the primary source of law. Note, though, that these cases come from the Beijing Internet Court and, unless designated as Typical by the Supreme People’s Court (SPC), as in cases #2 and #8 below, may have limited authority. Nonetheless, the cases provide early insight into Chinese legal thinking on AI and may be “more equal than others” coming from a court in China’s capital.

The following can be derived from the cases:

- AI-generated works can be protected by copyright, and the author is the person who enters the prompts into the AI.

- Designated Typical by the SPC: Personality rights extend to AI-generated voices that possess sufficient identifiability based on tone, intonation, and pronunciation style that would allow the general public to associate the voice with the specific person.

- Designated Typical by the SPC: Personality rights extend to people’s virtual images and unauthorized creation or use of a person’s AI-generated virtual image constitutes personality rights infringement.

- Network platforms using algorithms to detect AI-generated content for removal must provide reasonable explanations for their algorithmic decisions, particularly for content where providing creation evidence is unreasonable.

- Virtual digital persons or avatars demonstrating unique aesthetic choices in design elements constitute artistic works protected by copyright law.

As explained by the Beijing Internet Court:

Case I: Li v. Liu: Infringement of the Right of Authorship and the Right of Network Dissemination

—Determination of the Legal Attributes and Ownership of AI-Generated Images

[Basic Facts]

On February 24, 2023, plaintiff Li XX used the generative AI model Stable Diffusion to create a photograph-style close-up of a young girl at dusk. The plaintiff selected the model, entered prompts and negative prompts (“reverse prompt words”), and set the generation parameters, and the model then generated the image. The image was published on the Xiaohongshu platform on February 26, 2023. On March 2, 2023, defendant Liu XX published an article on his registered Baijiahao account, using the image in question as an illustration; the plaintiff’s signature watermark had been removed, and no source information was given. Plaintiff Li therefore filed a lawsuit, requesting an apology and compensation for economic losses. An in-court inspection showed that, when using Stable Diffusion, changing individual prompt words or parameters caused the model to generate different images.

[Judgment]

The court held that, judging by their appearance, the images in question are no different from ordinary photographs and paintings, clearly belong to the realm of art, and possess a certain form of expression. The images were generated by the plaintiff using generative artificial intelligence technology. From the moment the plaintiff conceived the images to their final selection, the plaintiff exerted considerable intellectual effort, thus satisfying the requirement of “intellectual achievement.” The images exhibit discernible differences from prior works, reflecting the plaintiff’s selection and arrangement, and the process of adjustment and revision also reflects the plaintiff’s aesthetic choices and individual judgment. In the absence of evidence to the contrary, it can be concluded that the images were independently created by the plaintiff and reflect his or her individual expression, thus satisfying the requirement of “originality.” The images are aesthetically significant two-dimensional works composed of lines and colors, classified as works of fine art, and protected by copyright law. Regarding ownership of the rights, copyright law stipulates that authors are limited to natural persons, legal persons, or unincorporated organizations, so an artificial intelligence model itself cannot be an author under Chinese copyright law. The plaintiff configured the AI model as needed and ultimately selected the images in question, which were generated directly from the plaintiff’s intellectual input and reflect the plaintiff’s personal expression. Therefore, the plaintiff is the author of the images in question and enjoys the copyright in them. 
The defendant, without permission, used the images in question as illustrations and posted them on his own account, enabling the public to access them at a time and place of their own choosing. This infringed the plaintiff’s right of network dissemination. Furthermore, by removing the plaintiff’s signature watermark from the images, the defendant infringed the plaintiff’s right of authorship and should bear liability for infringement. The court ordered defendant Liu XX to apologize and compensate the plaintiff for economic losses. Neither party appealed, and the judgment has entered into force.

[Typical Significance]

This case clarifies that content generated by humans using AI, if it meets the definition of a work, should be considered a work and protected by copyright law. Furthermore, the copyright ownership of AI-generated content can be determined based on the original intellectual contribution of each participant, including the developer, user, and owner. While addressing new rights identification challenges arising from technological change, this case also provides a practical paradigm for institutional adaptation and regulatory responses to the judicial review of AI-generated content, demonstrating its guiding and directional significance. This case was selected as one of the top ten nominated cases for 2024 to promote the rule of law in the new era, one of the ten most influential events in China’s digital economy development and rule of law in 2024, and one of the top ten events in China’s rule of law implementation in 2023.

Case II: Yin XX v. A Certain Intelligent Technology Company, et al., Personal Rights Infringement Dispute

—Can the Rights of a Natural Person’s Voice Extend to AI-Generated Voices?

[Basic Facts]

Plaintiff Yin XX, a voice actor, discovered that works produced using his voice were widely circulated on several well-known apps. Audio screening and source tracing showed that the voices in these works came from a text-to-speech product on a platform operated by the first defendant, a certain intelligent technology company. The plaintiff had previously been commissioned by the second defendant, a cultural media company, to make sound recordings, the copyright in which belonged to the second defendant. The second defendant later provided the audio recorded by the plaintiff to the third defendant, a certain software company. The third defendant used only one of the plaintiff’s recordings as source material, subjected it to AI processing, and produced the text-to-speech product in question, which was then sold on a cloud service platform operated by the fourth defendant, a certain network technology company. The first defendant entered into an online services sales contract with the fifth defendant, a certain technology development company, and the fifth defendant placed an order with the third defendant that included the text-to-speech product in question. The first defendant then called the text-to-speech product directly through an application programming interface (API) and used the generated speech on its platform without any further technical processing. The plaintiff claimed that the defendants’ actions had seriously infringed his voice rights and demanded that the first defendant and the third defendant immediately cease the infringement and apologize, and that all five defendants compensate him for economic and emotional losses.

[Judgment]

The court held that a natural person’s voice, distinguished by its voiceprint, timbre, and frequency, possesses unique, distinctive, and stable characteristics. It can generate or induce thoughts or emotions associated with that person in others, and can publicly reveal an individual’s behavior and identity. The recognizability of a natural person’s voice means that, based on repeated or prolonged listening, the voice’s characteristics can be used to identify a specific natural person. Voices synthesized using artificial intelligence are considered recognizable if the general public, or the public in relevant fields, can associate them with that person based on their timbre, intonation, and pronunciation style. In this case, Defendant No. 3 used only the plaintiff’s personal voice to develop the text-to-speech product in question. Furthermore, court inspection confirmed that the AI voice was highly consistent with the plaintiff’s voice in timbre, intonation, and pronunciation style. This could evoke thoughts or emotions associated with the plaintiff in the average person, allowing the voice to be linked to the plaintiff and therefore identified. Defendant No. 2 enjoys copyright and other rights in the sound recordings, but these do not include the right to authorize others to use the plaintiff’s voice in an AI-based manner. Defendant No. 2 signed a data agreement authorizing Defendant No. 3 to use the plaintiff’s voice in an AI-based manner without the plaintiff’s informed consent, and this authorization had no legal basis. Therefore, the defense of Defendants No. 2 and No. 3 that they had obtained lawful authorization from the plaintiff cannot be sustained. Defendants No. 2 and No. 3 used the plaintiff’s voice in an AI-based manner without the plaintiff’s permission, infringing the plaintiff’s voice rights. 
Their infringement impaired the plaintiff’s voice rights, and they bear corresponding legal liability. Defendants No. 1, No. 4, and No. 5 were not subjectively at fault and are not liable for damages. Damages were determined based on a comprehensive consideration of the defendants’ infringement, the value of similar products in the market, and the number of views. The court ordered Defendants No. 1 and No. 3 to issue written apologies to the plaintiff and Defendants No. 2 and No. 3 to compensate the plaintiff for economic losses. Neither party appealed, and the judgment has entered into force.

[Typical Significance]

This case clarifies the criteria for determining whether sounds processed by AI technology are protected by voice rights, establishes behavioral boundaries for the application of new business models and technologies, and helps regulate and guide the development of AI technology toward serving the people and promoting good. This case was selected by the Supreme People’s Court as a typical case commemorating the fifth anniversary of the promulgation of the Civil Code, a typical case involving infringement of personal rights through the use of the Internet and information technology, and one of the top ten Chinese media law cases of 2024.

Case III: Li XX v. XX Culture Media Co., Ltd., Internet Infringement Liability Dispute Case

—Using AI-synthesized celebrity voices for “selling products” without the rights holder’s permission constitutes infringement, and the commissioned promotional merchant shall bear joint and several liability.

[Basic Facts]

Plaintiff Li has a degree of fame and social influence in the fields of education and childcare. In 2024, plaintiff Li discovered that defendant XX Culture Media Co., Ltd. was promoting several of its family education books in an online platform store using videos of Li’s public speeches and lectures, accompanied by an AI-synthesized voice that closely resembled Li’s own. The plaintiff argued that the defendant’s unauthorized use of the plaintiff’s portrait and AI-synthesized voice in promotional content closely tied the plaintiff’s persona to the target audience of the commercial promotion, misleading consumers into believing that the plaintiff was the spokesperson or promoter for the books, and that the defendant had exploited the plaintiff’s persona, professional background, and social influence to attract attention and increase sales, thereby infringing the plaintiff’s portrait and voice rights. The plaintiff further argued that, as the book seller, the defendant had a commission relationship with the video publisher (a live streamer) and carried out the sales activities jointly with it; the defendant therefore had the obligation and ability to review the live streamer’s videos and should bear tort liability, including an apology and compensation for losses, for publication of the video in question.

[Judgment]

The court held that the video in question used plaintiff Li’s portrait and an AI-synthesized voice. This voice was highly consistent with Li’s own voice in timbre, intonation, and pronunciation style. Given Li’s fame in the education and parenting fields, and the fact that the video promoted family education books, viewers could more easily connect the content of the video with Li. It can be determined that a certain range of listeners could establish a one-to-one correspondence between the AI-synthesized voice and the plaintiff himself. Therefore, the voice in question fell within the scope of protection of Li’s voice rights. The promotional video extensively used the plaintiff’s portrait and synthesized voice without the plaintiff’s authorization, so its publication infringed the plaintiff’s portrait and voice rights. Defendant XX Culture Media Co., Ltd. and the video publisher (a live streamer) entered into a commissioned promotion relationship in accordance with the platform’s rules and service agreements, and they jointly published the video in question to promote the defendant’s book and generate corresponding revenue. Furthermore, under the platform’s rules and its management authority, the defendant had the ability to review and manage the video. Given that the video extensively used the plaintiff’s likeness together with a synthesized, simulated voice, the defendant should have foreseen, to a degree, the infringement risks the video posed and exercised reasonable scrutiny to determine whether the plaintiff had authorized it. However, the evidence in the case shows that the defendant failed to exercise due diligence in its review. Therefore, defendant XX Culture Media Co., Ltd. should bear joint and several liability with the video publisher for publishing the infringing video. 
The court ordered defendant XX Culture Media Co., Ltd. to apologize to plaintiff Li and compensate for economic losses and the reasonable expenses incurred in enforcing the plaintiff’s rights. The court dismissed plaintiff Li’s other claims. Neither party appealed, and the judgment has entered into force.

[Typical Significance]

With the rapid development of generative artificial intelligence technology, “cloned” celebrity voices have become increasingly difficult to tell apart from genuine ones, and their misuse has led to widespread voice-rights infringement and seriously misled consumers. This case clarifies that, as long as a natural person’s voice synthesized using AI deep synthesis technology enables the general public or the public in relevant fields to identify a specific natural person based on its timbre, intonation, pronunciation style, and similar features, it is identifiable and falls within the scope of protection of that natural person’s voice rights. At the same time, where merchants commission video publishers to promote products, the platform merchant, as the commissioning party and actual beneficiary, has a duty to reasonably review the promotional content published by its affiliated influencers. Merchants cannot escape liability simply on the grounds of “passive cooperation” or “no participation in production.” If they fail to fulfill their duty of care in review, they must bear joint and several liability with the influencers who promote the products. This provides normative guidance for standardizing e-commerce promotion, strengthening merchants’ primary responsibilities, and curbing the chaos of AI “voice substitution,” promoting the positive development of artificial intelligence and deep synthesis technology.

Case IV: Liao v. XX Technology and Culture Co., Ltd., Internet Infringement Liability Dispute Case

—Unauthorized “AI Face-Swapping” of Videos Containing Others’ Portraits Constitutes an Infringement of Their Personal Information Rights

[Basic Facts]

The plaintiff, Liao, is a short video blogger specializing in ancient Chinese style, with a large online following. The defendant, XX Technology and Culture Co., Ltd., without his authorization, used a series of videos featuring the plaintiff to create face-swapping templates, uploaded them to the software at issue, and provided them to users for a fee, profiting from the process. The plaintiff claims the defendant’s actions infringe upon his portrait rights and personal information rights and demands a written apology and compensation for economic and emotional damages. The defendant, XX Technology and Culture Co., Ltd., argues that the videos posted on the defendant’s platform have legitimate sources and that the facial features do not belong to the plaintiff, thus not infringing the plaintiff’s portrait rights. Furthermore, the “face-swapping technology” used in the software at issue was actually provided by a third party, and the defendant did not process the plaintiff’s personal information, thus not infringing the plaintiff’s personal information rights. The court found that the face-swapping template videos at issue shared the same makeup, hairstyle, clothing, movements, lighting, and camera transitions as the series of videos created by the plaintiff, but the facial features of the individuals featured were different and did not belong to the plaintiff. The software in question uses a third-party company’s service to implement face-swapping functionality. Users pay a membership fee to unlock all face-swapping features.

[Judgment]

The court held that the key to determining whether portrait rights have been infringed lies in recognizability. Recognizability emphasizes that the essence of a portrait is to point to a specific person. While the scope of a portrait centers around the face, it may also include unique body parts, voices, highly recognizable movements, and other elements that can be associated with a specific natural person. In this case, although the defendant used the plaintiff’s video to create a video template, it did not utilize the plaintiff’s portrait. Instead, it replaced the plaintiff’s facial features through technical means. The makeup, hairstyle, clothing, lighting, and camera transitions retained in the template are not inseparable from a specific natural person and are distinct from the natural personality elements of a natural person. The subject that the general public identifies through the replaced video is a third party, not the plaintiff. Furthermore, the defendant’s provision of the video template to users did not vilify, deface, or falsify the plaintiff’s portrait. Therefore, the defendant’s actions did not constitute an infringement of the plaintiff’s portrait rights. However, the defendant collected videos containing the plaintiff’s facial information and replaced the plaintiff’s face in those videos with a photo provided by the defendant. This synthesis process required algorithmically integrating features from the new static image with some facial features and expressions from the original video. This process involved the collection, use, and analysis of the plaintiff’s personal information, constituting the processing of the plaintiff’s personal information. The defendant processed this information without the plaintiff’s consent, thus infringing upon the plaintiff’s personal information rights. 
Whether the defendant’s unauthorized use of videos produced by others infringes rights in that creative work is a matter for the relevant rights holder to assert. The court ordered the defendant to issue a written apology to the plaintiff and compensate the plaintiff for emotional distress. Neither party appealed, and the judgment has entered into force.

[Typical Significance]

This case, centered on the new business model of “AI face-swapping,” accurately distinguished among portrait rights, personal information rights, and the legitimate interests arising from labor and creative input in AI synthesis applications. This approach not only safeguards the legitimate rights and interests of individuals but also leaves room for the development of AI technology and emerging industries, and provides a valuable opportunity for service providers.

Case V: Tang v. XX Technology Co., Ltd., Internet Service Contract Dispute Case

—An online platform using algorithmic tools to detect AI-generated content but failing to provide reasonable and appropriate explanations should be held liable for breach of contract.

[Basic Facts]

Plaintiff Tang posted a 200-word text on an online platform operated by defendant XX Technology Co., Ltd., stating, “Working part-time doesn’t make you much money, but it can open up new perspectives… If you’re interested in learning to drive and plan to drive in the future, you can do it during your free time during your vacation… After work, you won’t have much time to get a license.” The platform classified the content as violating its rules by “containing AI-generated content without labeling it,” hid the content, and banned the user for one day. Tang’s appeal to the platform was unsuccessful. He argued that he had not used AI to create the content and that the defendant’s actions constituted a breach of contract. He asked the court to order the defendant to revoke its violation measures, namely hiding the content and banning the account for one day, and to delete the violation record from its backend system.

[Judgment]

The court held that when internet users create content using AI tools and post it to online platforms, they should label it truthfully in accordance with the principle of good faith. The defendant issued a community announcement requiring creators to proactively use labels when posting content containing AIGC. For content that fails to do so, the platform will take appropriate measures to restrict its circulation and add relevant labels. Using AI-generated content without such labels constitutes a violation. The plaintiff is a registered user of the platform, and the aforementioned announcement is part of the platform’s service agreement. The defendant has the right to review and address user-posted content as AI-generated and synthetic content in accordance with the agreement. Generally, the plaintiff should provide preliminary evidence, such as manuscripts, originals, source files, and source data, to prove the human nature of the content. However, in this case, the plaintiff’s responses were created in real time, making it objectively impossible to provide such evidence. Therefore, it is neither reasonable nor feasible for the plaintiff to provide such evidence. The defendant concluded that the content in question was AI-generated and synthetic based on the results of the algorithmic tool. The defendant is both the controller and judge of the algorithmic tool, controlling the algorithmic tool’s operation and review results. The defendant should provide reasonable evidence or explanation for this fact. Although the defendant provided the algorithm’s filing information, its relevance to the dispute could not be confirmed. The defendant failed to adequately explain the algorithm’s decision-making basis and results, nor to rationally justify its determination that the content in question was AI-generated and synthesized. The defendant should bear liability for breach of contract for its handling of the account in question without factual basis. 
The defendant’s manual review standard required content to display pronounced human emotional characteristics, and that standard lacked scientific basis, persuasiveness, and credibility. The court ordered the defendant to repost the content and delete the relevant backend records. The defendant appealed the first-instance judgment but later withdrew the appeal, so the first-instance judgment stood.

[Typical Significance]

This case is a valuable exploration of the labeling, platform identification, and governance of AI-generated content under judicial review. On the one hand, it affirms the positive role of online content service platforms that use algorithmic tools to review and process AI-generated content, in fulfillment of their primary responsibility as information content managers. On the other hand, it recognizes that such platforms must adequately explain the results of automated algorithmic decisions when text is created in real time. It also sets a standard for the level of explanation required during judicial review. Through case-by-case adjudication, the case reasonably allocates the burden of proof between online content service platforms and users. It encourages platforms to improve the recognition and decision-making capabilities of their algorithms and helps raise the standard of AI information content governance.

Case VI: Cheng v. Sun, Online Infringement Liability Dispute

—Using AI Software to Parody and Deface Others’ Portraits Constitutes an Infringement of Personality Rights

[Basic Facts]

Plaintiff Cheng and defendant Sun were both members of a photography-exchange WeChat group. Without Cheng’s consent, Sun used AI software to turn Cheng’s WeChat profile photo into an anime-style image depicting her as a scantily clad woman, and he sent the image to the group. Cheng repeatedly tried to dissuade him. Despite this, Sun continued to use the AI software to create anime-style images of Cheng depicting her as a scantily clad woman with a distorted figure, and he sent them to her via private WeChat messages.

Cheng contended that the allegedly infringing images Sun sent to the group and in private messages were recognizable as her own image. She argued that they carried significant sexual connotations and derogatory qualities, which lowered her social reputation and infringed her portrait rights, reputation rights, and general personality rights. She therefore sought an apology and compensation for emotional and economic losses.

[Judgment]

The court held that defendant Sun generated the allegedly infringing image he posted in the WeChat group with AI, using plaintiff Cheng’s WeChat profile picture without authorization. The image closely resembled Cheng’s appearance in facial shape, posture, and style. Group members could identify her as its subject from the person’s appearance and the context of the group chat. Sun’s posting to the group therefore infringed Cheng’s portrait rights.

The portrait shown in Cheng’s WeChat profile picture served as an identifier of her online virtual identity. Using AI software, Sun transformed her well-dressed profile picture into an image revealing her breasts. This triggered inappropriate discussion targeting her within the group and objectively led others to evaluate her in vulgar terms, which infringed her portrait rights and reputation rights.

Furthermore, Sun used AI software to turn Cheng’s profile picture into images with wooden-looking legs and even three arms. The figure clearly does not conform to basic human anatomy, and its chest is exposed. Sending these images to Cheng in private messages inevitably caused her psychological humiliation and violated her personal dignity, which infringed her general personality rights.

The court ordered Sun to publicly apologize to Cheng and compensate her for emotional distress. Neither party appealed, and the judgment has entered into force.

[Typical Significance]

In this case, the court found that the unauthorized use of AI software to parody and deface another person’s portrait constitutes an infringement of that person’s personality rights. The court emphasized that users of generative AI technology must abide by laws and administrative regulations, respect social morality and ethics, and respect the legitimate rights and interests of others. They must also refrain from endangering others’ physical and mental health. The case clarifies the behavioral boundaries for ordinary internet users of generative AI technology. It sets an important example for strengthening the protection of natural persons’ personality rights in the era of artificial intelligence.

Case VII: A Technology Co., Ltd. and B Technology Co., Ltd. v. Sun XX and X Network Technology Co., Ltd., Copyright Ownership and Infringement Dispute

—Original Avatar Images Constitute Works of Art

[Basic Facts]

Virtual Digital Humans [avatars] A and B were jointly produced by four entities, including plaintiff A Technology Co., Ltd. and plaintiff B Technology Co., Ltd. A Technology Co., Ltd. is the copyright owner, and B Technology Co., Ltd. is the licensee. Virtual Digital Human A has over 4.4 million followers across various platforms and was recognized as one of the eight hottest events of 2022 in the cultural and tourism industries.

The two plaintiffs claimed that the images of Virtual Humans A and B constitute works of art. Virtual Human A’s image was first published in the first episode of the short drama “Thousand ***,” and Virtual Human B’s image was first published on the Weibo account “Zhi****.” Sun XX had worked for one of the co-creating entities. The plaintiffs alleged that after resigning, Sun XX sold models of Virtual Humans A and B without authorization on a model website operated by defendant X Network Technology Co., Ltd. They argued that this infringed their rights to reproduce and disseminate the virtual human images. They further alleged that X Network Technology Co., Ltd., as the platform provider, had failed to fulfill its supervisory responsibilities and should bear joint and several liability with Sun XX.

[Judgment]

The court held that the full-body image of Virtual Human A and the head image of Virtual Human B were not directly derived from real people but were created by a production team. The images have distinct artistic qualities that reflect the team’s unique aesthetic choices and judgment regarding line, color, and specific image design. They therefore meet the requirement of originality and constitute works of art.

Defendant Sun published the allegedly infringing models on a model website. The models’ facial features, hairstyles, hair accessories, clothing design, and overall style are identical or similar to Virtual Human A and Virtual Human B, particularly in how they combine the original elements of the copyrighted works. This constitutes substantial similarity and infringes the plaintiffs’ right of communication through information networks.

The court then considered defendant X Network Technology Co., Ltd. It weighed the specific type of service the company provided, its degree of intervention in the content at issue, and whether it directly obtained economic benefits. It also weighed the fame of the copyrighted works and the popularity of the copyrighted content. On that basis, the court found that X Network Technology Co., Ltd., as a network service provider, did not commit joint infringement.

Virtual human figures carry multiple rights and interests, and this case only determines the rights and interests in the copyrighted works. The court set the amount of economic compensation after comprehensively considering the type of rights sought to be protected, their market value, the infringer’s subjective fault, the nature and scale of the infringing acts, and the severity of the damage.

Judgment: Defendant Sun was ordered to compensate the two plaintiffs for their economic losses. Sun appealed the first-instance judgment. The second-instance court dismissed the appeal and upheld the original judgment.

[Typical Significance]

This case concerns the legal attributes and originality of virtual digital human images. Virtual digital humans consist of two components, an external representation and a technical core, which give them a digital appearance and human-like functions. A virtual human’s external representation may embody the production team’s unique aesthetic choices and judgment regarding lines, colors, and specific image design. If it thus meets the requirement of originality, it can be considered a work of art and protected by copyright law. This case provides a reference for similar adjudications and contributes to the prosperity of the virtual digital human industry and the development of new quality productive forces.

Case VIII: He v. Artificial Intelligence Technology Co., Ltd., Online Infringement Liability Dispute

—Creating an AI Avatar of a Natural Person Without Consent Constitutes an Infringement of Personality Rights

[Basic Facts]

A certain artificial intelligence technology company (hereinafter the defendant) develops and operates a mobile accounting app. Users can create their own “AI companions” within the app, set their names and profile pictures, and define relationships with them (e.g., boyfriend/girlfriend, siblings, mother/son). He, a well-known public figure, was set as a companion by numerous users. When setting up “He” as a companion, users uploaded numerous portrait images of He as profile pictures and defined relationships. Through its algorithms, the defendant categorized the “He” companion according to these relationships and recommended the character to other users.

To make the AI character more human-like, the defendant also implemented a “training” algorithm. Users uploaded interactive content such as text, portraits, and animated expressions, and some users took part in reviewing it. The software then screened and categorized this content to build the character’s data. During conversations with “Ms. He,” the software could push relevant “portrait emoticons” and “sultry phrases” to users based on topic categories and the character’s personality traits. This created an experience that evoked genuine interaction with the real person.

The plaintiff claimed that the defendant’s actions infringed her right to name, right to likeness, and general personality rights, and brought the case to court.

[Judgment]

The court held that the defendant’s actions infringed the plaintiff’s right to name, right to likeness, and general personality rights.

Through the software’s functions and algorithmic design, users used Ms. He’s name and likeness to create virtual characters and interactive corpus materials. This projected an overall image onto the AI character, a composite of Ms. He’s name, likeness, and personality traits, creating a virtual image of Ms. He. This constitutes a use of Ms. He’s overall personality, including her name and likeness. The defendant used her name and likeness without her permission, which infringes her rights to name and likeness.

Users could also establish virtual identity relationships with the AI character and set any mutual forms of address. By creating linguistic material to “tune” the character, users made the AI character closely resemble the real person, so they could easily experience a seemingly genuine interaction with Ms. He. This use, without Ms. He’s consent, infringes her personal dignity and personal freedom and constitutes an infringement of her general personality rights.

Furthermore, the services provided by the software are fundamentally different from neutral technical services. Through rule-setting and algorithmic design, the defendant organized and encouraged users to generate infringing material. It co-created the virtual avatars with them and incorporated those avatars into its user services. Its product design and algorithmic application directly determined how the software’s core functionality was implemented. The defendant is therefore no longer a neutral technical service provider; it bears liability for infringement as a network content service provider.

Judgment: The defendant was ordered to publicly apologize to the plaintiff and compensate her for emotional and economic losses.
The defendant appealed the first-instance judgment but later withdrew the appeal, and the first-instance judgment came into effect.

[Typical Significance]

This case clarifies that a natural person’s personality rights extend to their virtual image, and that creating and using that virtual image without authorization infringes the person’s personality rights. Internet service providers that substantially participate in generating and providing infringing content through algorithmic design should bear tort liability as content service providers. The case is of great significance for strengthening the protection of personality rights. The Supreme People’s Court selected it as a “Typical Civil Case on the Judicial Protection of Personality Rights after the Promulgation of the Civil Code,” and it is also a reference case for Beijing courts.

The original text can be found here: 北京互联网法院涉人工智能典型案例 (Typical Cases Involving Artificial Intelligence from the Beijing Internet Court; Chinese only).

Vikings vs. Falcons props, bets, SportsLine Machine Learning Model AI predictions: Robinson over 68.5 rushing

Week 2 of Sunday Night Football will see the Minnesota Vikings (1-0) host the Atlanta Falcons (0-1). J.J. McCarthy and Michael Penix Jr. will be popular in NFL props, because the two quarterbacks meet for the first time since squaring off in the 2023 CFP National Title Game. McCarthy and Michigan won that game against Washington, and the cast of characters around both players has changed since then. The likes of Justin Jefferson, Kyle Pitts, T.J. Hockenson, and Drake London now flank the quarterbacks. You could target several NFL player props for these star players, or you may find value in under-the-radar options.

Tyler Allgeier had 10 carries in Week 1, just two fewer than Bijan Robinson, who was more involved in the passing game with six receptions. If Allgeier sees similar volume going forward, the over on his rushing yards NFL prop may be worth considering. A strong run game would certainly help a young quarterback like Penix, so both Allgeier and Robinson have intriguing Sunday Night Football props. Before betting any Falcons vs. Vikings props for Sunday Night Football, you need to see the Vikings vs. Falcons prop predictions powered by SportsLine’s Machine Learning Model AI.

SportsLine’s Data Science team built AI Predictions and AI Ratings with cutting-edge artificial intelligence and machine learning techniques, and both are generated for each player prop.

For Falcons vs. Vikings NFL betting on Sunday Night Football, the Machine Learning Model has evaluated the NFL player prop odds and provided Vikings vs. Falcons prop picks. You can only see the Machine Learning Model player prop predictions for Atlanta vs. Minnesota here.

Top NFL player prop bets for Falcons vs. Vikings

After analyzing the Vikings vs. Falcons props and examining dozens of NFL player prop markets, SportsLine’s Machine Learning Model says Falcons RB Bijan Robinson goes Over 68.5 rushing yards (-114 at FanDuel). Robinson ran for 92 yards and a touchdown against Minnesota in Week 14 of last season, even though the Vikings had the league’s No. 2 run defense a year ago. The Vikings replaced their entire starting defensive line in the offseason, and they no longer appear as stout on the ground. They allowed 119 rushing yards in Week 1, more than they gave up in all but four games a year ago.

Robinson is coming off a season with 1,454 rushing yards, which ranked third in the NFL. He averaged 85.6 yards per game. He has eclipsed 65.5 yards in six of his last seven games, and he had at least 90 rushing yards in each of those six games. Over Minnesota’s last eight games, including the postseason, six different running backs have gone over 65.5 rushing yards. The SportsLine Machine Learning Model projects Robinson for 85.6 yards, making this a 4.5-star prop pick. See more NFL props here. New users can also use the FanDuel promo code, which offers $300 in bonus bets if their first $5 bet wins.

How to make NFL player prop bets for Minnesota vs. Atlanta

In addition, the SportsLine Machine Learning Model says another star sails past his total, and the model has five additional NFL props rated four stars or better. You need to see the Machine Learning Model analysis before making any Falcons vs. Vikings prop bets for Sunday Night Football.

Which Vikings vs. Falcons prop bets should you target for Sunday Night Football? Visit SportsLine now to see the top Falcons vs. Vikings props, all from the SportsLine Machine Learning Model.

Prediction: These AI Stocks Could Outperform the “Magnificent Seven” Over the Next Decade

The “Magnificent Seven” stocks, which drove indexes higher over the past couple of years, have kept doing so in recent months, and for good reason. Most of these tech giants play key roles in the high-growth artificial intelligence (AI) industry, a market forecast to reach into the trillions of dollars by the early 2030s. Investors who want to benefit from this growth have piled into these current and potential AI winners.

But the Magnificent Seven stocks aren’t the only ones that may be set to excel in AI and deliver growth to investors. As the AI story progresses, the need for infrastructure capacity and certain equipment could drive surging sales at other companies too. That’s why I predict that the following three stocks are on track for major strength in AI and may even outperform the Magnificent Seven over the coming decade. Let’s check them out.

1. Oracle

Oracle (ORCL -5.05%) started out as a database management specialist and is still a giant in that area. In recent years, though, it has focused on growing its cloud infrastructure business, and this has supercharged the company’s revenue.

AI customers are rushing to Oracle for capacity to run training and inference workloads, and this demand helped the company report a 55% increase in infrastructure revenue in the most recent quarter. Oracle predicts this may be just the beginning. It expects the business to deliver $18 billion in revenue this fiscal year and to grow that figure to $144 billion four years from now.

Investors were so excited about Oracle’s forecasts that the stock surged about 35% in one trading session, adding more than $200 billion in market value. Customers see value in pairing Oracle’s database technology with AI, a combination that lets them securely apply AI to their businesses. This may keep demand for Oracle’s services strong and the stock price heading higher as the AI story enters its next chapters.

2. CoreWeave

CoreWeave (CRWV -0.65%) designed its cloud platform specifically for AI workloads, and the company works closely with chip leader Nvidia. So far, this relationship has allowed CoreWeave to be the first to make Nvidia’s latest platforms generally available to customers. That is a big plus as companies scramble to access Nvidia’s innovations as soon as possible.

Nvidia also believes in CoreWeave’s potential: the chip giant holds shares in the company. As of the end of the second quarter, CoreWeave made up 91% of Nvidia’s investment portfolio. Considering Nvidia’s knowledge of the AI landscape, this investment is particularly meaningful.

Customers may also like the flexibility of CoreWeave’s services, which let them rent graphics processing units (GPUs) by the hour or for the long term. All of this has led to explosive revenue growth for the company. In the latest quarter, revenue tripled to more than $1.2 billion.

The growing need for AI infrastructure should translate into continued explosive growth for CoreWeave. That may make it a stronger stock market performer than long-established players such as the Magnificent Seven.

3. Broadcom

Broadcom (AVGO 0.19%) is a networking leader whose products appear everywhere from your smartphone to data centers. In recent years, demand from AI customers for items such as custom chips and networking equipment has helped its revenue soar.

In the most recent quarter, Broadcom said AI revenue jumped 63% year over year to $5.2 billion, and the company forecast AI revenue of $6.2 billion for the next quarter. Broadcom is already working on custom chips for three major customers, and demand from them is growing. On top of this, Broadcom just announced a $10 billion order from another customer. Analysts and press reports say that customer may be OpenAI.

Meanwhile, Broadcom’s networking expertise is paying off, because customers need high-performance systems to connect their growing numbers of compute nodes. As AI customers scale up their platforms, they need to share data among more and more of these nodes, and Broadcom has what it takes to do the job.

We’re still in the early phases of this AI buildout (as mentioned, the AI market may be heading for the trillion-dollar mark), and Broadcom clearly will benefit. That may help this top tech stock outperform the Magnificent Seven over the next decade.

Adria Cimino has positions in Oracle. The Motley Fool has positions in and recommends Nvidia and Oracle. The Motley Fool recommends Broadcom. The Motley Fool has a disclosure policy.
