AI Research

A Deep Learning and Explainable Artificial Intelligence based Scheme for Breast Cancer Detection

This section describes the DXAIB scheme in detail, including the dataset used, its pre-processing, breast cancer prediction, and the explainability of the scheme's predictions.

Data-set Description

This study used the “Breast Cancer Wisconsin (Diagnostic)” dataset obtained from the UCI ML repository [27]. The dataset contains 30 well-defined features: the mean, standard error, and worst (mean of the three largest values) of 10 base features (texture, radius, concavity, area, smoothness, compactness, symmetry, perimeter, concave points, and fractal dimension). These features are computed from digitized images of a fine needle aspirate (FNA) of a breast mass and describe the cell nuclei present in the images. The dataset has 569 samples, each described by all 30 features, which provides a solid basis for the subsequent analysis. The class label is a categorical variable with two diagnostic categories: ‘M’ denotes a malignant tumor (a breast cancer diagnosis), and ‘B’ denotes a benign tumor (no breast cancer). The Breast Cancer Wisconsin (BCW) dataset was chosen for three main reasons. First, it has historical significance: it has been used and validated extensively in breast cancer research. Second, it is simple and interpretable: its features, derived from FNA of breast masses, describe cell characteristics such as radius, texture, and perimeter that are clinically relevant for identifying malignancies, which makes it well suited to the initial development and testing of ML models. Third, it is clean and well structured.
The BCW dataset has no significant data inconsistencies, which allows straightforward analysis and model development.
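The 30 features are the cross product of 10 base measurements and 3 summary statistics. The short sketch below makes that structure explicit. The column labels it generates follow a hypothetical naming pattern, used for illustration only. Other copies of the dataset label the columns differently.

```python
# The 10 base measurements of each cell nucleus, as listed in the text.
BASE_FEATURES = ["radius", "texture", "perimeter", "area", "smoothness",
                 "compactness", "concavity", "concave points", "symmetry",
                 "fractal dimension"]
# The three summary statistics computed for each measurement.
STATISTICS = ["mean", "standard error", "worst"]

# One column per (statistic, base feature) pair; labels are illustrative only.
FEATURE_COLUMNS = [f"{stat} {feature}" for stat in STATISTICS for feature in BASE_FEATURES]

print(len(FEATURE_COLUMNS))   # 30
print(FEATURE_COLUMNS[:2])    # ['mean radius', 'mean texture']
```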

Data Pre-processing

Data pre-processing covers the cleaning, transformation, and preparation steps that put raw data into a consistent, well-structured form for further analysis. These steps support reliable model training and accurate predictions. Common techniques include normalization, feature engineering, and handling of missing values. After data collection and feature selection, the dataset was scaled so that its features could be compared consistently. Scaling by standardization removes biases caused by differences in measurement units: the mean of each feature is subtracted from every value, and the result is divided by that feature's standard deviation. Because this is a linear transformation of each feature, it preserves the relative ordering of values and the correlations between features, so the overall structure of the dataset is retained. Putting all features on a common scale also prevents features measured in large units (such as area) from dominating those measured in small units (such as smoothness), which makes the analysis more trustworthy. Categorical data in ‘D’ are converted to numeric form via label encoding. The target column in ‘D’ contains the categorical values ‘M’ and ‘B’, corresponding to malignant and benign outcomes. Label encoding assigns the value ‘1’ to ‘M’ and ‘0’ to ‘B’ (algorithm 1: line 3).
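A minimal NumPy sketch of the two transformations just described follows. The first is z-score standardization, z = (x - mean) / std. The second is label encoding with ‘M’ → 1 and ‘B’ → 0. The function names and the toy feature values are hypothetical. scikit-learn's `StandardScaler` implements the same standardization, using the population standard deviation.

```python
import numpy as np

def standardize(X):
    """Z-score each column: subtract its mean, then divide by its standard deviation."""
    X = np.asarray(X, dtype=float)
    mu = X.mean(axis=0)
    sigma = X.std(axis=0)          # population std (ddof=0), as StandardScaler uses
    sigma[sigma == 0] = 1.0        # guard against constant columns
    return (X - mu) / sigma

def encode_labels(labels):
    """Label-encode the diagnosis column: 'M' (malignant) -> 1, 'B' (benign) -> 0."""
    mapping = {"M": 1, "B": 0}
    return np.array([mapping[label] for label in labels])

# Toy rows with hypothetical values: [mean radius, mean area, mean smoothness]
X = np.array([[14.0, 600.0, 0.10],
              [20.0, 1200.0, 0.12],
              [11.0, 380.0, 0.08]])
y = encode_labels(["B", "M", "B"])
Z = standardize(X)
print(y)                           # [0 1 0]
print(Z.mean(axis=0), Z.std(axis=0))
```

After standardization, each column has mean 0 and standard deviation 1. Area (hundreds of units) and smoothness (about 0.1) therefore contribute on the same scale.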
Label encoding converts categorical data into a format suitable for many ML approaches. An additional pre-processing stage removes superfluous features from the dataset ‘D’ (algorithm 1: line 4). After label encoding and feature selection, all duplicate records are removed from ‘D’. In critical applications such as this one, sensitivity (recall) is especially important. No positive (malignant) case should be predicted as negative, because a missed cancer can ultimately prove fatal. The dataset used in this work contains 62.85% benign and 37.15% malignant cases, so it is imbalanced toward the benign class. To address this, the Synthetic Minority Over-sampling Technique (SMOTE), a data augmentation technique for tabular datasets, is used to create synthetic samples for the minority (malignant) class. SMOTE does not simply duplicate existing instances. Instead, it creates new synthetic points by interpolating between a minority sample and one of its nearest minority-class neighbors. This expands the region of feature space occupied by the minority class and helps the model learn more diverse patterns. It also reduces the chance of misclassifying minority instances as negatives, which can improve recall.
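The interpolation step of SMOTE can be sketched in NumPy as follows. This is a minimal sketch that assumes Euclidean distance and k = 5 nearest neighbors (the usual default), and `smote_sample` is a hypothetical name. In practice, the `SMOTE` class of the imbalanced-learn package (`imblearn.over_sampling.SMOTE`, applied via `fit_resample`) is the common choice. The text does not say which implementation was used.

```python
import numpy as np

def smote_sample(X_min, n_new, k=5, seed=None):
    """Create n_new synthetic minority samples by SMOTE-style interpolation.

    Assumes X_min holds at least two minority-class rows (n_min x n_features).
    """
    rng = np.random.default_rng(seed)
    X_min = np.asarray(X_min, dtype=float)
    n_min = len(X_min)
    k = min(k, n_min - 1)
    # Pairwise Euclidean distances between minority samples only.
    dist = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(dist, np.inf)                 # a sample is not its own neighbor
    neighbors = np.argsort(dist, axis=1)[:, :k]    # k nearest minority neighbors
    base = rng.integers(0, n_min, size=n_new)      # random minority sample per new point
    nbr = neighbors[base, rng.integers(0, k, size=n_new)]  # one random neighbor each
    lam = rng.random((n_new, 1))                   # interpolation weight in [0, 1)
    # Each new point lies on the segment between a base sample and its neighbor.
    return X_min[base] + lam * (X_min[nbr] - X_min[base])

# Toy example with hypothetical data: 6 benign rows vs. 3 malignant rows.
rng = np.random.default_rng(0)
X_benign = rng.normal(0.0, 1.0, size=(6, 2))
X_malig = rng.normal(3.0, 1.0, size=(3, 2))
synthetic = smote_sample(X_malig, n_new=len(X_benign) - len(X_malig), seed=1)
X_malig_balanced = np.vstack([X_malig, synthetic])
print(X_malig_balanced.shape)   # (6, 2)
```

A common practice is to oversample only the training split, so that no synthetic point is derived from a test sample.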

Breast Cancer Detection

This work develops a hybrid ML model that combines a Convolutional Neural Network (CNN) with a Random Forest (RF) classifier. The CNN extracts features from the tabular dataset, and the RF produces the predictions. To improve transparency and interpretability, a SHAP (SHapley Additive exPlanations) explainable AI module is included, which offers detailed insight into the factors that influence the model's predictions. The proposed scheme starts by loading the data, followed by the pre-processing steps. The dataset ‘D’ has ‘m’ rows and ‘n’ columns, where ‘m’ is the number of data instances and ‘n’ is the number of features. After ‘D’ is imported, the pre-processing steps described in the preceding subsection are executed. The dataset ‘D’ is then separated into the target class label and the remaining features. An 80-20 split (algorithm 1, line 7) divides it into four subsets: X_train, y_train, X_test, and y_test. After the split, the data are normalized using the MinMaxScaler function. X_train and y_train are fed into the proposed CNN model, which is part of the DXAIB scheme. The CNN architecture comprises convolutional, max-pooling, flatten, and dense layers, with dropout applied for regularization. Its design is given in Table 3. Importantly, the proposed CNN is used only for feature learning, not for classification. A total of 10 output classes are included in the proposed CNN model. The classification layer of the CNN model is then replaced by the RF classifier.
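The split-then-normalize order can be sketched in NumPy. This is a minimal sketch that assumes a seeded random shuffle and min-max statistics computed on the training split only. `split_80_20` and `minmax_scale` are hypothetical names. In scikit-learn, `train_test_split(X, y, test_size=0.2)` and `MinMaxScaler().fit(X_train)` do the same jobs.

```python
import numpy as np

def split_80_20(X, y, seed=0):
    """Shuffle rows, then put 80% in the training set and 20% in the test set."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(X))
    cut = int(round(0.8 * len(X)))
    train, test = idx[:cut], idx[cut:]
    return X[train], X[test], y[train], y[test]

def minmax_scale(X_train, X_test):
    """Rescale each feature to [0, 1] using the training split's min and max."""
    lo = X_train.min(axis=0)
    span = X_train.max(axis=0) - lo
    span[span == 0] = 1.0              # avoid division by zero for constant features
    return (X_train - lo) / span, (X_test - lo) / span

# Hypothetical random data with the dataset's shape: 569 rows x 30 features.
rng = np.random.default_rng(42)
X = rng.normal(size=(569, 30))
y = rng.integers(0, 2, size=569)
X_train, X_test, y_train, y_test = split_80_20(X, y)
X_train_s, X_test_s = minmax_scale(X_train, X_test)
print(X_train.shape, X_test.shape)     # (455, 30) (114, 30)
```

Fitting the scaler on the training split alone keeps test-set information out of training. As a result, scaled test values can fall slightly outside [0, 1].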
The proposed CNN has 10 layers in total: four convolutional layers, two max-pooling layers, one flatten layer, and three dense layers. Adding layers can improve accuracy, but an excessive number of layers may harm performance, so the depth must balance accuracy against computational cost. To reduce over-fitting, a dropout rate of 0.20 is applied after every max-pooling and dense layer. After the convolutional and max-pooling layers, the feature maps are flattened into a one-dimensional vector and passed to the first dense layer. Of the three dense layers, the first two have 512 and 256 units, respectively. Once the CNN architecture is built, the model is trained on the training data with a batch size of 64 for 100 epochs and validated on validation data, as shown in algorithm 1. After training, a dense layer reshapes the CNN output before it is passed to the RF classification layer. Its essential function is to match the CNN's output to the vector shape required by the RF layer (algorithm 1: line 9). The RF model was selected as the classification layer chiefly because it achieved higher accuracy on this dataset than the other ML techniques considered. The classification layer then predicts the class of each sample, and these predictions are evaluated to measure the model's performance. The proposed approach is intended as an essential first step that guides the subsequent evaluation of patients with breast tumors, who then undergo a range of clinical procedures for a thorough assessment. Our first goal is therefore to determine whether a patient has cancer.
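The sketch below traces output shapes and parameter counts through a 10-layer stack of the kind described, which makes the layer counts concrete. Table 3's kernel sizes, filter counts, and layer order are not given in this section. The following values are therefore hypothetical: kernel size 3 with 'valid' padding, pool size 2, filter counts 32/32/64/64, and a conv-conv-pool ordering. The final dense width of 10 is one reading of the "10 output classes" and is also an assumption. Only the 30 input features and the 512- and 256-unit dense layers come from the text. Dropout layers are omitted because they change neither shapes nor parameter counts.

```python
def trace_cnn(input_len, layers):
    """Trace (layer, output shape, parameter count) through a 1-D CNN.

    Layers are tuples: ("conv", filters, kernel), ("pool", size), ("flatten",),
    or ("dense", units). Conv uses stride 1 and 'valid' padding; pooling uses
    a stride equal to its size.
    """
    length, channels = input_len, 1     # a tabular row treated as a 1-channel sequence
    width = None                        # vector width after flattening
    rows = []
    for layer in layers:
        kind = layer[0]
        if kind == "conv":
            _, filters, kernel = layer
            params = kernel * channels * filters + filters   # weights + biases
            length, channels = length - kernel + 1, filters
            rows.append(("conv", (length, channels), params))
        elif kind == "pool":
            length //= layer[1]
            rows.append(("pool", (length, channels), 0))
        elif kind == "flatten":
            width = length * channels
            rows.append(("flatten", (width,), 0))
        elif kind == "dense":
            units = layer[1]
            params = width * units + units                   # weights + biases
            width = units
            rows.append(("dense", (width,), params))
    return rows

# Hypothetical order and sizes; only 30 inputs and the 512/256 dense widths are from the text.
LAYERS = [("conv", 32, 3), ("conv", 32, 3), ("pool", 2),
          ("conv", 64, 3), ("conv", 64, 3), ("pool", 2),
          ("flatten",), ("dense", 512), ("dense", 256), ("dense", 10)]

for name, shape, params in trace_cnn(30, LAYERS):
    print(f"{name:8s} {str(shape):10s} {params:>8d}")
```

Changing any of the hypothetical values shows how quickly the first dense layer's parameter count grows with the flattened width. This is one concrete form of the accuracy-versus-resources trade-off mentioned above.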
Having made its predictions, the scheme then clarifies the importance of specific features in distinguishing malignant from benign cases.

Explainability

After class prediction is complete, the predictions are passed to the eXplainable Artificial Intelligence (XAI) component of the proposed scheme, which provides explanations for them. This work uses the SHAP (SHapley Additive exPlanations) XAI technique rather than others for several reasons:
- its theoretical foundation in Shapley values;
- its consistency and additivity;
- its support for both global and local interpretability;
- its model-agnostic and model-specific variants;
- its visualizations;
- its reliable feature-importance estimates;
- its ability to capture feature interactions;
- its wide adoption and continued improvement [28].

In the proposed scheme, SHAP is applied to the RF layer to produce a range of explanations. As the statistics in the findings section show, SHAP offers both local and global explanations. Patients may find these explanations hard to follow, but they are valuable to medical practitioners. After analyzing them, a healthcare professional can give the patient several justifications for a specific diagnosis and suggest alternative diagnostic possibilities (algorithm 1: lines 24-47). SHAP is a well-established and effective explainable AI method, designed specifically to reveal how individual features influence a model's outputs. It was incorporated to obtain accurate, easily understood explanations that make the decision-making framework of the proposed system more transparent. Before SHAP was applied, the proposed ML model generated predictions for all patients in the dataset.
Next, SHAP values were calculated for every model feature to quantify how much each one contributed to the predictions. A SHAP value measures the effect of a feature's presence or absence on the model's output, which makes it possible to assess that feature's relevance. The SHAP values were then translated into interpretations of individual results. For selected patients, local explanations were generated that identify the main features driving each prediction. With explanations tailored to each patient, physicians can identify the factors that most influenced that patient's diagnosis and better understand how the model reached its conclusion. To reveal the most prominent trends and patterns, the SHAP values were also averaged across the sample. This aggregation yields global explanations that highlight the features most consistently associated with predictions of breast cancer type, whether malignant or benign, and clarify how the system behaves overall. Applying SHAP after the classification phase proved valuable for producing a comprehensive, understandable assessment of the scheme's predictions. SHAP gave clinicians and researchers insight both for individual patients and across the whole sample. This can strengthen their trust in the model's evaluations and deepen their understanding of the relationships between features and pathological outcomes.
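For a model with only a few features, the Shapley values that SHAP approximates can be computed exactly by enumerating every feature subset. The sketch below assumes that an "absent" feature is replaced by a baseline value, such as a reference patient or the feature means. A hypothetical toy scoring function stands in for the trained CNN-RF model. This is the textbook Shapley formula, not the shap library's algorithm. For tree ensembles such as random forests, `shap.TreeExplainer` computes SHAP values efficiently without this exponential enumeration.

```python
from itertools import combinations
from math import factorial

def exact_shapley(f, x, baseline):
    """Exact Shapley value of each feature of x for model f.

    Features outside a coalition are set to their baseline value.
    Cost grows as 2**n, so this is only practical for small n.
    """
    n = len(x)

    def value(coalition):
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n!
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi

# Hypothetical toy score with an interaction between features 1 and 2.
def toy_model(z):
    return 2.0 * z[0] + z[1] * z[2]

x = [1.0, 2.0, 3.0]          # patient to explain (hypothetical values)
baseline = [0.0, 0.0, 0.0]   # reference input (hypothetical)
phi = exact_shapley(toy_model, x, baseline)
print([round(p, 6) for p in phi])   # [2.0, 3.0, 3.0]
```

The three values sum to f(x) minus f(baseline), which equals 8. This is the additivity property cited above. The interaction term's contribution of 6 is split equally between features 1 and 2.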
Transparency and comprehensibility are crucial for establishing confidence and, hence, determining the practical feasibility and capability of the system to improve breast cancer detection and patient care.
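The global step, in which SHAP values are averaged over the sample, can be sketched for the simplest case where SHAP values have a closed form. That case is a linear model with independent features, for which feature i's SHAP value for patient x is w_i (x_i - E[x_i]). The sketch averages absolute values, as SHAP's bar summary plots do, so that positive and negative contributions do not cancel. Whether the study averaged signed or absolute values is not stated. The weights and data are hypothetical.

```python
import numpy as np

def linear_shap(X, w, X_background):
    """SHAP values of f(x) = w @ x + b, assuming independent features:
    phi[p, i] = w[i] * (X[p, i] - mean of feature i over the background data)."""
    return (X - X_background.mean(axis=0)) * w

def global_importance(phi):
    """Mean absolute SHAP value of each feature across all explained patients."""
    return np.abs(phi).mean(axis=0)

rng = np.random.default_rng(0)
w = np.array([1.5, -0.5, 0.0])          # hypothetical weights for 3 features
b = 0.2
X = rng.normal(size=(100, 3))           # hypothetical standardized patients
phi = linear_shap(X, w, X)              # local explanations, one row per patient

# Additivity: each row sums to that patient's prediction minus the average prediction.
f = X @ w + b
assert np.allclose(phi.sum(axis=1), f - f.mean())

importance = global_importance(phi)
print(np.argsort(-importance))          # feature indices, most important first
```

The feature with zero weight receives zero global importance, and the ranking follows the weight magnitudes scaled by each feature's spread.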

Axis Communications launches 12 new artificial intelligence dome cameras

Axis Communications announces the launch of 12 ruggedized indoor and outdoor-ready dome cameras. Based on the ARTPEC-9 chipset, they offer high performance and advanced analytics. AXIS P32 Series offers excellent image quality up to 8 MP. The cameras are equipped with Lightfinder 2.0 and Forensic WDR, ensuring true color and sharp detail even in near total darkness or difficult lighting situations. In addition, OptimizedIR technology enables complete surveillance even in absolute darkness.

Based on the latest platform from Axis, these artificial intelligence dome cameras offer accelerated performance and run advanced analytics applications directly at the edge. For example, they include pre-installed AXIS Object Analytics for detecting, classifying, tracking and counting people, vehicles and vehicle types. They also have AXIS Image Health Analytics, so users receive notifications if an image is blocked, degraded, underexposed or redirected.

Furthermore, some models include an acoustic sensor with AXIS Audio Analytics pre-installed. This alerts users even in the absence of visual cues by detecting shouts, screams or changes in sound level.

Key features:

- Outstanding image quality, up to 8 MP;

- Indoor and outdoor models;

- Variants with pre-installed AXIS Audio Analytics;

- Options with different lens types;

- Integrated cybersecurity through Axis Edge Vault.

These rugged, vandal- and shock-resistant cameras include both indoor and outdoor versions, with outdoor models operating in an extended temperature range from -40°C to +50°C.

In addition, Axis Edge Vault, the hardware cybersecurity platform, protects the device and provides secure storage and key operations, certified to FIPS 140-3 Level 3.

Breaking Down AI’s Role in Genomics and Polygenic Risk Prediction – with Dan Elton of the National Human Genome Research Institute

While protein sequencing efforts have amassed hundreds of millions of protein sequences, experimentally determined structures remain exceedingly rare, lagging far behind the number of known sequences.

The 2024 UniProt knowledgebase catalogs approximately 246 million unique protein sequences, yet the Worldwide Protein Data Bank holds just over 227,000 experimentally determined three-dimensional structures — covering less than 0.1% of known proteins.
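Taking both counts quoted above as approximate, the coverage works out as:

```latex
\frac{227{,}000\ \text{structures}}{246{,}000{,}000\ \text{sequences}} \approx 9.2 \times 10^{-4} \approx 0.092\% < 0.1\%
```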

De novo structure elucidation remains a prohibitively expensive and time-intensive endeavor. According to a peer-reviewed article in Bioinformatics, the average cost of X-ray crystallography is estimated at $150,000 per protein.

Even with more than 200,000 structures deposited in the Protein Data Bank, laboratory workflows cannot keep up with the relentless rate of sequence discovery, leaving critical drug targets and novel enzymes structurally uncharacterized.

By harnessing deep learning algorithms to predict three-dimensional conformations from primary sequences, AI-driven models like AlphaFold collapse months of crystallographic work into minutes, directly bridging the gap between sequence abundance and structural insight.

Emerj Editorial Director Matthew DeMello recently spoke with Dan Elton, Staff Scientist at the National Human Genome Research Institute, on the ‘AI in Business’ podcast to discuss how AI is revolutionizing protein structure prediction. Elton concentrates on AI-driven protein engineering and neural-network polygenic risk scoring, outlining a vision for how technology can compress R&D timelines and sharpen disease prediction.

Precision health leaders reading this article will find a clear and concise breakdown of critical takeaways from their conversation in two key areas of AI deployment:

- Enhancing polygenic risk stratification: Applying deep learning and neural networks to model nonlinear gene interactions, thereby sharpening disease-risk predictions

- Improving rapid structure elucidation: Employing AI-driven protein folding models to predict three-dimensional protein conformations from amino-acid sequences in minutes, slashing timelines for drug discovery and bespoke enzyme engineering

Guest: Dr. Dan Elton, Staff Scientist, National Institutes of Health

Expertise: Artificial Intelligence, Deep Learning, Computational Physics

Brief Recognition: Dr. Dan Elton is currently a Staff Scientist at the National Human Genome Research Institute, part of the National Institutes of Health. Previously, he worked at Mass General Brigham, where he oversaw the deployment and testing of AI systems in the radiology clinic. He earned his Doctorate in Physics from Stony Brook University in 2016.

Improving Rapid Structure Elucidation

Traditional structural biology methods have long constrained drug discovery and enzyme design workflows. Elton notes that determining a protein’s three-dimensional structure was an extremely difficult problem.

According to Elton, AlphaFold bypasses labor-intensive physics simulations by training deep neural architectures on evolutionary and sequence co-variation patterns. AlphaFold is an artificial intelligence system that predicts the three-dimensional structure of proteins from their amino acid sequences. It ultimately collapses weeks of bench work into minutes on modern GPU clusters.

Elton explains that open-access folding databases now host over 200 million predicted structures, democratizing discovery by granting small labs the same AI-driven insights previously limited to large pharmaceutical R&D centers.

By collapsing months of laborious X-ray crystallography or NMR experiments into minutes on a modern GPU cluster, companies can now screen thousands of candidate molecules in silico, iterating designs with agility.

Elton emphasizes that this agility not only accelerates lead optimization but also reallocates experimental budgets toward functional assays and ADMET profiling.

Key elements of this AI-driven workflow include:

- Amino acid sequences paired with multiple sequence alignments to capture evolutionary constraints

- Deep learning models that predict residue-level confidence scores (pLDDT) and contact maps

- High-throughput in silico mutagenesis for de novo enzyme design and stability screening
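As an illustration of the third item, in silico saturation mutagenesis starts by enumerating every single-residue substitution of a sequence. Each variant would then be scored by a structure or stability predictor, a step not shown here. The sketch assumes the 20 standard amino acids. The function name and the example sequence are hypothetical.

```python
STANDARD_AMINO_ACIDS = "ACDEFGHIKLMNPQRSTVWY"  # one-letter codes of the 20 standard residues

def single_mutants(sequence):
    """Yield (mutation label, mutant sequence) for every single-residue substitution.

    Labels use the common wild-type/position/mutant notation, e.g. "M1A"
    (positions are 1-based).
    """
    for pos, wild_type in enumerate(sequence):
        for aa in STANDARD_AMINO_ACIDS:
            if aa != wild_type:
                yield f"{wild_type}{pos + 1}{aa}", sequence[:pos] + aa + sequence[pos + 1:]

variants = list(single_mutants("MKTAYIAK"))   # hypothetical 8-residue peptide
print(len(variants))                           # 152 (19 substitutions x 8 positions)
print(variants[0])                             # ('M1A', 'AKTAYIAK')
```

The count grows as 19 × L. A 300-residue protein therefore has 5,700 single mutants, which is why fast predictors matter for high-throughput screening.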

Broadly, integrating AI predictions with targeted experimental workflows has slashed cost-per-structure metrics by orders of magnitude.

This computational acceleration proves particularly valuable for neglected diseases, where the Drugs for Neglected Diseases Initiative now maintains over 20 new chemical entities in its portfolio, partly through AlphaFold-enabled target identification.

DeepMind estimates that AlphaFold has potentially saved millions of dollars and hundreds of millions of years of research time, with over two million users across 190 countries accessing the database.

However, Elton’s perspective acknowledges both the revolutionary potential and remaining limitations. While AlphaFold excels at predicting static protein structures, drug development increasingly requires understanding dynamic protein-protein interactions and conformational changes.

The recently released AlphaFold 3 addresses some of these limitations by modeling interactions between proteins and other molecules, including RNA, DNA, and ligands. In an interview with PharmaVoice, Google claimed at least a 50% improvement over existing prediction methods for protein interactions.

Enhancing Polygenic Risk Stratification

Building on these structural breakthroughs, Elton next turns from folded proteins to the genome itself, where AI is poised to redefine risk prediction and gene-editing delivery.

Conventional polygenic risk-score frameworks rely on additive, linear regression models that perform well for highly heritable traits like height but fail to capture complex gene–gene interactions.

Elton explains that the way genes are associated with phenotypes is not simply linear. Nonlinearities exist as well, highlighting the limitations of sparse linear predictors.
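The gap between additive scores and nonlinear effects can be shown with toy numbers. A conventional PRS is a weighted sum of risk-allele dosages (0, 1, or 2 copies per variant). In the hypothetical example below, a trait depends on the product of two variants' dosages, a simple form of epistasis. A least-squares additive model cannot fit this trait exactly, while adding one interaction term can. The weights, variants, and trait are invented for illustration.

```python
import itertools
import numpy as np

def additive_prs(dosages, betas):
    """Conventional polygenic risk score: sum of effect size x risk-allele dosage."""
    return np.asarray(dosages) @ np.asarray(betas)

print(additive_prs([0, 1, 2], [0.10, 0.05, 0.20]))   # hypothetical effect sizes

# All nine genotype combinations for two variants (dosage 0, 1 or 2 each).
G = np.array(list(itertools.product([0, 1, 2], repeat=2)), dtype=float)
trait = G[:, 0] * G[:, 1]                 # hypothetical, purely epistatic trait

def sse(design):
    """Sum of squared residuals of a least-squares fit of trait on design."""
    coef, *_ = np.linalg.lstsq(design, trait, rcond=None)
    resid = trait - design @ coef
    return float(resid @ resid)

ones = np.ones(len(G))
additive = np.column_stack([ones, G])                          # intercept + two main effects
with_interaction = np.column_stack([ones, G, G[:, 0] * G[:, 1]])
print(sse(additive) > 1e-6, sse(with_interaction) < 1e-9)     # True True
```

Real epistasis involves many variants and much noisier data. This is why Elton stresses the data and compute such models require.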

Neural network and deep learning architectures offer a path to uncover epistatic effects, yet Elton cautions that such models demand unprecedented data and compute scales. He notes that to predict a condition like autism or even intelligence, researchers would need between 300,000 and 700,000 sequences, necessitating tens of trillions of letters or tokens.

In other words, matching the data scale of GPT-4 becomes a prerequisite — demanding robust cohort assembly, cross-biobank harmonization, and petascale compute infrastructure.

Elton candidly notes that the added value of a neural net or language model might actually be relatively small for traits where linear models already capture most genetic effects. For a heritable characteristic like height, for example, linear predictors already explain most of the heritability, so a neural net adds relatively little.

This honest assessment reflects the understanding required to prioritize which genetic traits and clinical applications justify the massive computational investment needed for neural network-based polygenic prediction.

Elton also warns that handling tens of trillions of tokens per project requires more than raw compute; it mandates rigorous data-management frameworks that ensure privacy, regulatory compliance, and security. Cloud architects and life-science IT leaders should therefore adopt:

- Encryption-at-rest

- Role-based access control

- Immutable audit trails to safeguard personally identifiable information
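Two of these practices can be sketched together in a few lines of Python. The first is a role-based permission check. The second is an audit trail made tamper-evident by hash chaining: each entry stores the hash of the previous one, so editing any past record breaks verification. The roles, permissions, and record fields are hypothetical. Hash chaining makes tampering detectable rather than impossible, so true immutability would also require write-once storage. Encryption at rest is not shown.

```python
import hashlib
import json

# Hypothetical roles and the actions each may perform.
ROLE_PERMISSIONS = {
    "analyst": {"read"},
    "data_steward": {"read", "write"},
}

GENESIS = "0" * 64   # placeholder "previous hash" for the first entry

def _digest(record):
    """SHA-256 of a record serialized with sorted keys (deterministic)."""
    return hashlib.sha256(json.dumps(record, sort_keys=True).encode("utf-8")).hexdigest()

class AuditLog:
    """Append-only, hash-chained log of access attempts."""

    def __init__(self):
        self.entries = []

    def request(self, user, role, action, resource):
        """Check the role's permission, log the attempt, and return the decision."""
        allowed = action in ROLE_PERMISSIONS.get(role, set())
        record = {
            "user": user, "role": role, "action": action,
            "resource": resource, "allowed": allowed,
            "prev": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        record["hash"] = _digest(record)   # hash covers every field, including "prev"
        self.entries.append(record)
        return allowed

    def verify(self):
        """Recompute the chain; any edited or reordered entry makes this False."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev"] != prev or _digest(body) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True

log = AuditLog()
print(log.request("alice", "analyst", "read", "cohort_A"))    # True
print(log.request("alice", "analyst", "write", "cohort_A"))   # False (denied, but logged)
print(log.verify())                                           # True
log.entries[0]["user"] = "mallory"                            # simulate tampering
print(log.verify())                                           # False
```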

Beyond prediction, Elton says AI is also transforming precision gene-editing workflows. He describes ex vivo therapies, in which blood is extracted, treated with genetic editing, and then returned to the bloodstream.

AI tools can now fine-tune viral shells so they target the right tissues, and optimize guide-RNA instructions to avoid accidental gene cuts.

-

Business3 days ago

Business3 days agoThe Guardian view on Trump and the Fed: independence is no substitute for accountability | Editorial

-

Tools & Platforms3 weeks ago

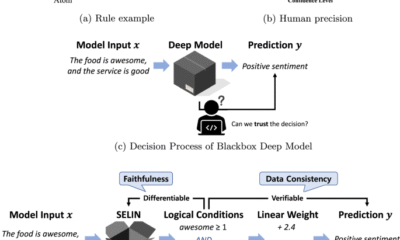

Building Trust in Military AI Starts with Opening the Black Box – War on the Rocks

-

Ethics & Policy1 month ago

Ethics & Policy1 month agoSDAIA Supports Saudi Arabia’s Leadership in Shaping Global AI Ethics, Policy, and Research – وكالة الأنباء السعودية

-

Events & Conferences3 months ago

Events & Conferences3 months agoJourney to 1000 models: Scaling Instagram’s recommendation system

-

Jobs & Careers2 months ago

Jobs & Careers2 months agoMumbai-based Perplexity Alternative Has 60k+ Users Without Funding

-

Funding & Business2 months ago

Funding & Business2 months agoKayak and Expedia race to build AI travel agents that turn social posts into itineraries

-

Education2 months ago

Education2 months agoVEX Robotics launches AI-powered classroom robotics system

-

Podcasts & Talks2 months ago

Podcasts & Talks2 months agoHappy 4th of July! 🎆 Made with Veo 3 in Gemini

-

Podcasts & Talks2 months ago

Podcasts & Talks2 months agoOpenAI 🤝 @teamganassi

-

Mergers & Acquisitions2 months ago

Mergers & Acquisitions2 months agoDonald Trump suggests US government review subsidies to Elon Musk’s companies