AI Research

Artificial intelligence-integrated video analysis of vessel area changes and instrument motion for microsurgical skill assessment

We employed a combined AI-based video analysis approach to assess microvascular anastomosis performance by integrating vessel area (VA) changes and instrument motion. By comparing technical category scores with AI-generated parameters, we demonstrated that the parameters from the two AI models together captured a wide range of the technical skills required for microvascular anastomosis. Furthermore, receiver operating characteristic (ROC) curve analysis indicated that integrating parameters from both AI models improved the ability to distinguish surgical performance compared with using a single AI model. A distinctive feature of this study was the integration of multiple AI models that incorporated both instrument and tissue elements.

AI-based technical analytic approach

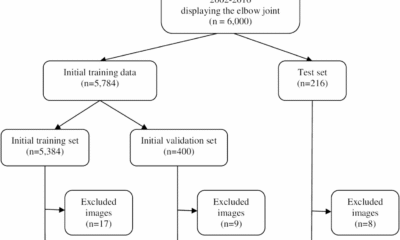

Traditional criteria-based scoring by multiple blinded expert surgeons was a highly reliable method for assessing surgeon performance with minimal interrater bias (Fig. 2 and Supplementary Table 1). However, the significant demand for human expertise and time makes real-time feedback impractical during surgery and training10,11,18. A recent study demonstrated that self-directed learning using digital instructional materials provides non-inferior outcomes in the initial stages of microsurgical skill acquisition compared to traditional instructor-led training28. However, direct feedback from an instructor continues to play a critical role when progressing toward more advanced skill levels and actual clinical practice.

AI technology can rapidly analyze the vast amounts of clinical data generated in modern operating theaters, offering real-time feedback capabilities. Because the proposed method relies on surgical video analysis, it is highly applicable in clinical settings18. Moreover, because the AI models in this study output explicit, interpretable parameters (vessel area changes and instrument motion metrics), the approach addresses concerns about transparency, explainability, and interpretability, whose absence is a fundamental risk of AI adoption. One anticipated application is an AI-assisted device that promptly provides feedback on technical challenges, allowing trainees to refine their surgical skills more effectively29,30. Additionally, an objective assessment of microsurgical skills could facilitate surgeon certification and credentialing within the medical community.

Theoretically, this approach could support a real-time warning system that alerts surgeons or other staff when instrument motion or tissue deformation exceeds a predefined safety threshold, thereby enhancing patient safety17,31. However, defining and validating such thresholds would require a large dataset of clinical cases involving adverse events such as vascular injury, bypass occlusion, and ischemic stroke. For real-time clinical use, further data collection and computational optimization are needed to reduce processing latency and improve practical usability. Given that our AI model can be applied to clinical surgical videos, future research could explore its utility in this context.
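A threshold-based warning of this kind can be sketched as a streaming check over per-frame measurements. This is a minimal illustration, not part of the study: the `FrameMeasure` record, the `safety_alerts` function, the units, and both limits are hypothetical, and clinically meaningful thresholds would have to be derived from outcome data. Requiring several consecutive out-of-range frames (`min_frames`) is one simple way to keep single-frame segmentation or tracking noise from triggering alarms.

```python
from dataclasses import dataclass
from typing import Iterable, Iterator, Optional, Tuple


@dataclass
class FrameMeasure:
    """Hypothetical per-frame summary produced by the video-analysis models."""
    t: float                 # timestamp (s)
    speed: Optional[float]   # instrument speed (e.g. px/s); None if the tool is not visible
    delta_va: float          # vessel area change relative to baseline (%)


def safety_alerts(frames: Iterable[FrameMeasure],
                  max_speed: float,
                  max_delta_va: float,
                  min_frames: int = 3) -> Iterator[Tuple[float, str]]:
    """Yield (timestamp, reason) once per sustained excursion beyond a limit.

    A limit counts as exceeded only after min_frames consecutive frames are
    out of range; a missing speed measurement resets the speed counter.
    """
    speed_run = 0
    va_run = 0
    for f in frames:
        speed_run = speed_run + 1 if f.speed is not None and f.speed > max_speed else 0
        va_run = va_run + 1 if abs(f.delta_va) > max_delta_va else 0
        # Fire exactly when a run first reaches min_frames, not on every later frame.
        if speed_run == min_frames:
            yield (f.t, "instrument speed")
        if va_run == min_frames:
            yield (f.t, "vessel deformation")
```

In a live setting the same generator could consume frames as they are analyzed; the alert is raised at the frame where the excursion becomes sustained.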

Related works: AI-integrated instrument tracking

To contextualize our results, we compared our AI-integrated approach with recent methods implementing instrument tracking in microsurgical practice. Franco-González et al. compared stereoscopic marker-based tracking with a YOLOv8-based deep learning method, reporting high accuracy and real-time capability32. Similarly, Magro et al. proposed a robust dual-instrument Kalman-based tracker, effectively mitigating tracking errors due to occlusion or motion blur33. Koskinen et al. utilized YOLOv5 for real-time tracking of microsurgical instruments, demonstrating its effectiveness in monitoring instrument kinematics and eye-hand coordination34.

Our integrated AI model employs semantic segmentation (ResNet-50) to analyze vessel deformation and YOLOv2-based trajectory tracking to assess instrument motion. The major advantage of our approach is its simultaneous evaluation of tissue deformation and instrument handling smoothness, which enables robust, objective skill assessment even under challenging conditions such as variable illumination and partial occlusion. YOLO was selected for its computational speed and precision in real-time object detection, which make it particularly suitable for live microsurgical video analysis. ResNet was chosen for its effectiveness in detailed image segmentation, which allows accurate quantification of tissue deformation. However, unlike three-dimensional (3D) tracking methods32, our current method relies solely on two-dimensional (2D) imaging, which may limit depth perception accuracy.
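A YOLO detector outputs bounding boxes per frame, so a trajectory has to be derived from those boxes before motion metrics can be computed. The sketch below assumes that the box center stands in for the instrument position (the study may track a different reference point) and that frames without a detection, for example during occlusion, are passed as `None`. The function name `centroid_trajectory` and the `max_gap` rule for interpolating only short gaps are illustrative choices, not the authors' pipeline.

```python
import numpy as np


def centroid_trajectory(boxes, max_gap=5):
    """Convert per-frame bounding boxes into an (N, 2) array of box centers.

    boxes: list of (x_min, y_min, x_max, y_max) per frame, or None when the
    instrument was not detected. Interior gaps of at most max_gap frames are
    filled by linear interpolation; longer gaps and gaps at either end stay NaN.
    """
    n = len(boxes)
    xy = np.full((n, 2), np.nan)
    for i, b in enumerate(boxes):
        if b is not None:
            x0, y0, x1, y1 = b
            xy[i] = ((x0 + x1) / 2.0, (y0 + y1) / 2.0)

    valid = ~np.isnan(xy[:, 0])
    idx = np.arange(n)
    i = 0
    while i < n:
        if valid[i]:
            i += 1
            continue
        j = i
        while j < n and not valid[j]:
            j += 1
        # Frames [i, j) are missing; interpolate only if bounded on both sides and short.
        if i > 0 and j < n and (j - i) <= max_gap:
            for k in range(2):
                xy[i:j, k] = np.interp(idx[i:j], [i - 1, j], [xy[i - 1, k], xy[j, k]])
        i = j
    return xy
```

Leaving long gaps as NaN, rather than bridging them, avoids inventing motion while the instrument is out of view; downstream metrics can then be computed per valid segment.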

These comparisons highlight both the strengths and limitations of our approach, emphasizing the necessity of future studies incorporating 3D tracking technologies and expanded datasets to further validate and refine AI-driven microsurgical skill assessment methodologies.

Future challenges

Microvascular anastomosis tasks typically consist of distinct phases, including vessel preparation, needle insertion, suture placement, thread pulling, and knot tying. As our phase-specific video parameters (Phases A–D) showed, analyzing each surgical phase separately is essential to improve skill evaluation and training efficiency. However, our current AI model cannot automatically distinguish these surgical phases.

Previous studies utilizing convolutional neural networks (CNN) and recurrent neural networks (RNN) have demonstrated high accuracy in recognizing surgical phases and steps, particularly through the analysis of intraoperative video data35,36. Khan et al. successfully applied a combined CNN-RNN model to achieve accurate automated recognition of surgical workflows during endoscopic pituitary surgery, despite significant variability in surgical procedures and video appearances35. Similarly, automated operative phase and step recognition in vestibular schwannoma surgery further highlights the ability of these models to handle complex and lengthy surgical tasks36. Such methods could be integrated into our current AI framework to segment and individually evaluate each distinct phase of microvascular anastomosis, enabling detailed performance analytics and precise feedback.
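Phase recognizers such as the CNN-RNN models cited above can produce per-frame phase predictions, which tend to flicker near phase boundaries. A minimal post-processing sketch, assuming per-frame labels are already available from some hypothetical recognizer, smooths them with a sliding majority vote and collapses them into contiguous segments on which per-phase metrics could then be computed. The window size and both function names are assumptions, not taken from references 35 or 36.

```python
from collections import Counter
from itertools import groupby


def smooth_labels(labels, window=5):
    """Majority vote over a centered sliding window (use an odd window size).

    Suppresses isolated single-frame label flips; the window is truncated at
    the start and end of the sequence.
    """
    half = window // 2
    out = []
    for i in range(len(labels)):
        win = labels[max(0, i - half): i + half + 1]
        out.append(Counter(win).most_common(1)[0][0])
    return out


def phase_segments(labels):
    """Collapse per-frame labels into (phase, start_frame, end_frame_exclusive) runs."""
    segments = []
    start = 0
    for phase, run in groupby(labels):
        length = sum(1 for _ in run)
        segments.append((phase, start, start + length))
        start += length
    return segments
```

For example, a one-frame spurious "B" inside a run of "A" frames is absorbed by a window of 3, leaving one "A" segment followed by the next phase.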

Furthermore, establishing global standards for video recording is critical for the broad implementation and improvement of computer vision techniques in surgical settings. Guidelines that standardize resolution, frame rate, camera angle, illumination, and surgical field coverage could significantly reduce algorithmic misclassification caused by shadows or instrument occlusion18,37. Such standardization ensures consistent data quality, which is crucial for training accurate and widely applicable AI models across diverse clinical settings37. These guidelines would also facilitate large-scale data sharing and collaboration, substantially improving the reliability and effectiveness of AI-based surgical assessment tools globally.

Technical considerations

The semantic segmentation AI models were designed to assess respect for tissue during needle manipulation24. As expected, the Max-ΔVA correlated with respect for tissue in Phase B (from needle insertion to extraction). Proper needle extraction requires following the needle's natural curve to avoid tearing the vessel wall6,7, and this parameter captured these technical nuances well. Additionally, the No. of TDE correlated with respect for tissue in Phase C, indicating that surgeons must exercise caution even while pulling the threads to prevent thread-induced vessel wall injury6,7. These parameters also correlated with instrument handling, efficiency, suturing technique, and overall performance. This was an expected finding, as proper instrument handling and suturing technique are fundamental to respecting tissue. Thus, the technical categories are interrelated and mutually influential.
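This section does not restate how Max-ΔVA and the No. of TDE are computed, so the following is only a sketch under explicit assumptions: ΔVA is taken as the percentage change in segmented vessel pixel area relative to the mean of the first few frames, Max-ΔVA as the largest absolute ΔVA, and an "event" as each entry into a run of frames where |ΔVA| exceeds a threshold. The baseline window, the 10% threshold, and the function name are hypothetical and would need to be replaced by the study's own definitions.

```python
import numpy as np


def vessel_area_metrics(masks, baseline_frames=10, event_threshold=10.0):
    """Compute hypothetical vessel-area metrics from binary segmentation masks.

    masks: array-like of shape (T, H, W); nonzero pixels are vessel.
    Returns (delta_va, max_abs_delta_va, n_events):
      delta_va          per-frame % change in vessel area vs. the baseline mean
      max_abs_delta_va  largest |delta_va| over the clip
      n_events          number of times |delta_va| rises above event_threshold
    Assumes the baseline frames contain vessel pixels (nonzero baseline area).
    """
    masks = np.asarray(masks)
    area = masks.reshape(masks.shape[0], -1).astype(bool).sum(axis=1).astype(float)
    baseline = area[:baseline_frames].mean()
    delta_va = 100.0 * (area - baseline) / baseline

    above = np.abs(delta_va) > event_threshold
    # An event starts at frame 0 if already above, or wherever False -> True.
    n_events = int(above[0]) + int(np.count_nonzero(above[1:] & ~above[:-1]))
    return delta_va, float(np.abs(delta_va).max()), n_events
```

Counting rising edges rather than out-of-range frames keeps one prolonged deformation from being counted as many events.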

Trajectory-tracking AI models were designed to assess motion economy and the smoothness of surgical instrument movements25. Motion economy can be represented by the PD during a procedure. The smoothness and coordination of movement are frequently assessed using jerk-based metrics, where jerk is defined as the time derivative of acceleration. Because raw jerk indexes are influenced by both movement duration and amplitude, we used the normalized jerk index (NJI), first proposed by Flash and Hogan38. The NJI is calculated by multiplying the jerk index (JI) by the fifth power of the movement duration $T$ divided by the square of the path length $L$, $\mathrm{NJI} = \mathrm{JI} \times T^{5}/L^{2}$, with lower values indicating smoother movements. The dimensionless NJI has been used as a quantitative metric to evaluate movement irregularities in various contexts, such as jaw movements during chewing39,40, laparoscopic skills41, and microsurgical skills16,25. In this study, the Rt-PD and Lt-NJI correlated with a broad range of technical categories. Although the right and left hands play distinct roles in microvascular anastomosis, coordinated bimanual manipulation is essential for optimal surgical performance6,7. For Rt-NJI, these trends were particularly evident in Phases C and D, highlighting the importance of motion smoothness during thread pulling and knot tying in determining overall surgical proficiency.
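The NJI formula can be computed directly from a sampled 2D instrument trajectory. This sketch assumes that the jerk index is the time integral of the squared jerk magnitude, that positions are sampled at a constant frame rate, and that derivatives are estimated by repeated central differences (`np.gradient`). Some papers take a square root and/or include a factor of 1/2, which changes the scale but not the ranking of trials. The `path_length` helper is one plausible reading of PD; both function names are illustrative.

```python
import numpy as np


def path_length(xy):
    """Total distance travelled: sum of frame-to-frame step lengths."""
    xy = np.asarray(xy, dtype=float)
    return float(np.linalg.norm(np.diff(xy, axis=0), axis=1).sum())


def normalized_jerk_index(xy, fps):
    """Dimensionless NJI = JI * T**5 / L**2 for positions xy of shape (N, 2).

    JI is approximated as the trapezoidal integral over time of |jerk|^2,
    with jerk estimated by applying np.gradient three times.
    """
    xy = np.asarray(xy, dtype=float)
    dt = 1.0 / fps
    jerk = xy
    for _ in range(3):  # position -> velocity -> acceleration -> jerk
        jerk = np.gradient(jerk, dt, axis=0)
    sq = (jerk ** 2).sum(axis=1)                      # |jerk|^2 per sample
    ji = float(((sq[1:] + sq[:-1]) / 2.0).sum() * dt)  # trapezoidal integral
    duration = (len(xy) - 1) * dt
    return ji * duration ** 5 / path_length(xy) ** 2
```

Because the result is dimensionless, rescaling a trajectory in space (pixels vs. millimeters) or in time leaves it unchanged. Third derivatives amplify tracking noise sharply, so some smoothing of the trajectory before this step is advisable.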

Overall, integrating these parameters enabled a comprehensive assessment of complex microsurgical skills, as each parameter captured different technical aspects. Despite its effectiveness, the model still misclassified some cases when differentiating between good and poor performance. Notably, procedural time was intentionally excluded from the analysis, although it is a key determinant of surgical performance24,25. Further exploration of additional parameters remains essential, and integrating procedural time could significantly improve classification accuracy.
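How the study combined parameters from the two models is not detailed in this section. One simple way to see why integration can help is an equal-weight composite: z-score each parameter, orient it so that higher means better, average, and compare areas under the ROC curve (AUC). The sketch below computes AUC with the Mann-Whitney formulation; the composite rule and the function names are assumptions, and the toy numbers mentioned afterward only illustrate the mechanics, not the study's data.

```python
import numpy as np


def roc_auc(scores, labels):
    """AUC = P(score of a random positive > score of a random negative).

    labels: 1 = good performance, 0 = poor. Ties count as 1/2, which is the
    Mann-Whitney U statistic divided by (n_pos * n_neg).
    """
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels).astype(bool)
    pos, neg = scores[labels], scores[~labels]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((greater + 0.5 * ties) / (len(pos) * len(neg)))


def combined_score(features, signs):
    """Equal-weight composite of several parameters (hypothetical rule).

    features: (n_trials, n_params). Each column is z-scored, multiplied by its
    sign (-1 where lower values mean better performance, e.g. a jerk index),
    and the columns are averaged. Constant columns would divide by zero.
    """
    x = np.asarray(features, dtype=float)
    z = (x - x.mean(axis=0)) / x.std(axis=0)
    return (z * np.asarray(signs, dtype=float)).mean(axis=1)
```

For instance, with four toy trials labeled [1, 1, 0, 0], two "lower is better" parameters [1, 3, 2, 4] and [3, 1, 4, 2] each give an AUC of 0.75, while their composite separates the groups perfectly (AUC 1.0) because their errors fall on different trials.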

This study employed the Stanford Microsurgery and Resident Training scale10,11 as a criteria-based objective assessment tool, as it covers a wide range of microsurgical technical aspects. Future research incorporating leakage tests or the Anastomosis Lapse Index13, which identifies ten distinct types of anastomotic errors, could provide deeper insights into the relationship between the quality of the final product and various technical factors.

Limitations

As mentioned above, a fundamental technical limitation of this analytical approach is the lack of 3D kinematic data: because the analysis relies on 2D video, depth information is unavailable. Another constraint was that kinematic data could not be captured when the surgical instrument was outside the microscope’s visual field25. Additionally, the semantic segmentation model occasionally misclassified images containing shadows cast by surgical instruments or hands24. To mitigate this issue, future studies should expand the training dataset to include shadowed images, thereby improving model robustness. Because the AI models in this study were built on the ResNet-50 and YOLOv2 networks, other architectures remain to be investigated to optimize network selection. Exploring alternative deep learning models or fine-tuning existing architectures could further improve the accuracy and generalizability of surgical video analysis18.
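Collecting and annotating real shadowed frames is the direct remedy. A complementary option, not used in the study, is photometric augmentation that darkens random regions of existing training images; because only pixel intensities change, the existing vessel masks remain valid labels. The elliptical hard-edged shadow, the darkening range, and the function name are hypothetical choices for illustration; real instrument shadows have softer edges, so a blurred mask might be more realistic.

```python
import numpy as np


def add_synthetic_shadow(image, rng, max_darkening=0.6):
    """Darken a random elliptical region of a uint8 image (H, W) or (H, W, C).

    The ellipse center is uniform over the image, its radii are 10-40% of the
    image size, and pixels inside are scaled by a factor in
    [1 - max_darkening, 0.8]. Segmentation labels are not touched.
    """
    h, w = image.shape[:2]
    cy, cx = rng.uniform(0, h), rng.uniform(0, w)
    ry, rx = rng.uniform(0.1, 0.4) * h, rng.uniform(0.1, 0.4) * w
    yy, xx = np.mgrid[0:h, 0:w]
    inside = ((yy - cy) / ry) ** 2 + ((xx - cx) / rx) ** 2 <= 1.0

    factor = 1.0 - rng.uniform(0.2, max_darkening)
    out = image.astype(float)
    out[inside] *= factor  # broadcasts over channels for (H, W, C) images
    return np.clip(out, 0, 255).astype(np.uint8)
```

Passing an explicit `np.random.default_rng(seed)` keeps the augmentation reproducible across training runs.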

Our study had a relatively small sample size with respect to the number of participating surgeons, although it included surgeons with a diverse range of skills. Moreover, we did not evaluate the data from repeated training sessions to estimate the learning curve or determine whether feedback could enhance training efficacy. Future studies should evaluate the impact of AI-assisted feedback on the learning curve of surgical trainees and assess whether real-time performance tracking leads to more efficient skill acquisition.



3 Artificial Intelligence ETFs to Buy With $100 and Hold Forever

If you want exposure to the AI boom without the hassle of picking individual stocks, these three AI-focused ETFs offer diversified, long-term opportunities.

Artificial intelligence (AI) has been a huge catalyst for the portfolios of many investors over the past several years. Large tech companies are spending hundreds of billions of dollars to build out their AI hardware infrastructure, creating massive winners like semiconductor designer Nvidia.

But not everyone wants to go hunting for the next big AI winner, nor is it easy to know which company will stay in the lead even if you do your own research and find a great artificial intelligence stock to buy. That’s where exchange-traded funds (ETFs) can help.

If you’re afraid of missing out on the AI boom, and have around $100 to invest right now, here are three great AI exchange-traded funds that will allow you to track some of the biggest names in artificial intelligence, no matter who’s leading the pack.


1. Global X Artificial Intelligence and Technology ETF

The Global X Artificial Intelligence and Technology ETF (AIQ) is one of the top AI ETF options for investors because it holds a diverse group of around 90 stocks, spanning semiconductors, data infrastructure, and software. Its portfolio includes household names like Nvidia, Microsoft, and Alphabet, alongside lesser-known players that give investors exposure to AI companies they might not otherwise consider.

Another strength of AIQ is its global reach: the fund invests in both U.S. and international companies, providing broader diversification across the AI landscape. Of course, this targeted approach comes at a cost. AIQ’s expense ratio of 0.68% is slightly higher than that of the average ETF (around 0.56%), but it’s in line with other AI-focused funds.

Performance-wise, the Global X Artificial Intelligence and Technology ETF has rewarded investors. Over the past three years, it gained 117%, trouncing the S&P 500’s 63% return over the same period. While past performance doesn’t guarantee future results, this track record shows how powerful exposure to AI-focused companies can be.

2. Global X Robotics and Artificial Intelligence ETF

As its name suggests, the Global X Robotics and Artificial Intelligence ETF (BOTZ) focuses on robotics and artificial intelligence companies, as well as automation investments. Two key holdings in the fund are Pegasystems, an automation software company, and Intuitive Surgical, which makes robotic-assisted surgical systems. And yes, you’ll still have exposure to top AI stocks, including Nvidia.

Having some exposure to robotics and automation could be a wise long-term investment strategy. For example, UBS estimates that 2 million humanoid robots could be in the workforce within the next decade and that the number could reach 300 million by 2050, for an estimated market size of $1.7 trillion.

If you’re inclined to believe that robotics is the future, the Global X Robotics and Artificial Intelligence ETF is a good way to spread out your investments across 49 individual companies that are betting on this future. You’ll pay an annual expense ratio of 0.68% for the fund, which is comparable to the Global X Artificial Intelligence and Technology ETF’s fees.

The fund has performed slightly better than the broader market over the past three years, gaining about 68% compared with the S&P 500’s 63%. And as robotics grows in the coming years, this ETF could be a good place to have some money invested.

3. iShares Future AI and Tech ETF

And finally, the iShares Future AI and Tech ETF (ARTY) offers investors exposure to 48 global companies betting on AI infrastructure, cloud computing, and machine learning.

Some of the fund’s key holdings include the semiconductor company Advanced Micro Devices, Arista Networks, and the AI chip leader Broadcom, which just inked a $10 billion semiconductor deal with a large new client (widely believed to be OpenAI). In addition to its diversification across AI and tech companies, the iShares Future AI and Tech ETF also has a lower expense ratio than some of its peers, charging just 0.47% annually.

The fund has slightly underperformed the S&P 500 lately, gaining about 61% compared to the broader market’s 63% gains over the past three years. But with its strong diversification among tech and AI leaders, as well as its lower expense ratio, investors looking for a solid play on the future of artificial intelligence will find what they’re looking for in this ETF.

Chris Neiger has no position in any of the stocks mentioned. The Motley Fool has positions in and recommends Advanced Micro Devices, Alphabet, Arista Networks, Intuitive Surgical, Microsoft, and Nvidia. The Motley Fool recommends Broadcom and recommends the following options: long January 2026 $395 calls on Microsoft and short January 2026 $405 calls on Microsoft. The Motley Fool has a disclosure policy.


Companies Bet Customer Service AI Pays

Klarna’s $15 billion IPO was more than a financial milestone. It spotlighted how the Swedish buy-now-pay-later (BNPL) firm is grappling with artificial intelligence (AI) at the heart of its operations.
