AI Research

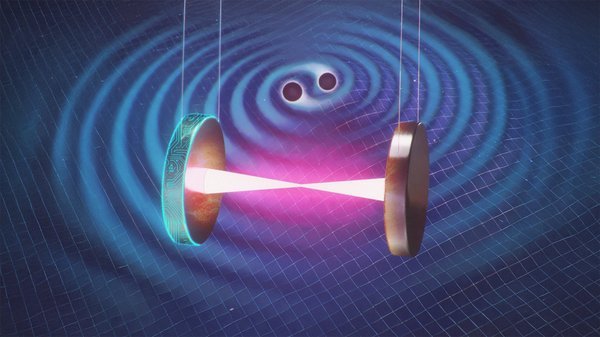

Artificial Intelligence Helps Boost LIGO

The US National Science Foundation LIGO (Laser Interferometer Gravitational-wave Observatory) has been called the most precise ruler in the world for its ability to measure motions smaller than 1/10,000 the width of a proton. By making these extremely precise measurements, LIGO, which consists of two facilities—one in Washington and one in Louisiana—can detect undulations in space-time called gravitational waves that roll outward from colliding cosmic bodies such as black holes.

LIGO ushered in the field of gravitational-wave astronomy beginning in 2015 when it made the first-ever direct detection of these ripples, a discovery that subsequently earned three of its founders the Nobel Prize in Physics in 2017. Improvements to LIGO’s interferometers mean that it now detects an average of about one black hole merger every three days during its current science run. Together with its partners, the Virgo gravitational-wave detector in Italy and KAGRA in Japan, the observatory has in total detected hundreds of black hole merger candidates, in addition to a handful involving at least one neutron star.

Researchers want to further enhance LIGO’s abilities, so that they can detect a larger variety of black-hole mergers, including more massive mergers that might belong to a hypothesized intermediate-mass class bridging the gap between stellar-mass black holes and much larger supermassive black holes residing at the centers of galaxies. They also want to make it easier for LIGO to find black holes with eccentric, or oblong, orbits, as well as catch mergers earlier in the coalescing process, when the dense bodies spiral in toward one another.

To do this, researchers at Caltech and Gran Sasso Science Institute in Italy teamed up with Google DeepMind to develop a new AI method, called Deep Loop Shaping, that can better hush unwanted noise in LIGO’s detectors. The term “noise” can refer to any number of pesky background disturbances that interfere with data collection. The noise can be literal noise, as in sound waves, but in the case of LIGO, the term often refers to a very tiny amount of jiggling in the giant mirrors at the heart of LIGO. Too much jiggling can mask gravitational-wave signals.

Now, reporting in Science, the researchers show that their new AI algorithm, though still a proof-of-concept, quieted motions of the LIGO mirrors 30 to 100 times more than is possible with traditional noise-reduction methods alone.

“We were already at the forefront of innovation, making the most precise measurements in the world, but with AI, we can boost LIGO’s performance to detect bigger black holes,” says co-author Rana Adhikari, professor of physics at Caltech. “This technology will help us not only improve LIGO but also build LIGO India and even bigger gravitational-wave detectors.”

The approach could also improve technologies that use control systems. “In the future, Deep Loop Shaping could also be applied to many other engineering problems involving vibration suppression, noise cancellation and highly dynamic or unstable systems important in aerospace, robotics, and structural engineering,” write study co-authors Brendan Tracey and Jonas Buchli, an engineer and scientist, respectively, at Google DeepMind, in a blog post about the study.

The Stillest Mirrors

Both the Louisiana and Washington LIGO facilities are shaped like enormous “L’s,” in which each arm of the L contains a vacuum tube that houses advanced laser technology. Within the 4-kilometer-long tubes, lasers bounce back and forth with the aid of giant 40-kilogram suspended mirrors at each end. As gravitational waves reach Earth from space, they distort space-time in such a way that the length of one arm changes relative to the other by infinitesimally small amounts. LIGO’s laser system detects these minute, subatomic-length changes to the arms, registering gravitational waves.

But to achieve this level of precision, engineers at LIGO must ensure that background noises are kept at bay. This study looked specifically at unwanted noises, or motions, in LIGO’s mirrors that occur when the mirrors shift in orientation from the desired position by very tiny amounts. Although both of the LIGO facilities are relatively far from the coast, one of the strongest sources of these mirror vibrations is ocean waves.

“It’s as if the LIGO detectors are sitting at the beach,” explains co-author Christopher Wipf, a gravitational-wave interferometer research scientist at Caltech. “Water is sloshing around on Earth, and the ocean waves create these very low-frequency, slow vibrations that both LIGO facilities are severely disturbed by.”

The solution to the problem works much like noise-canceling headphones, Wipf explains. “Imagine you are sitting on the beach with noise-canceling headphones. A microphone picks up the ocean sounds, and then a controller sends a signal to your speaker to counteract the wave noise,” he says. “This is similar to how we control ocean and other seismic ground-shaking noise at LIGO.”

However, as is the case with noise-canceling headphones, there is a price. “If you have ever listened to these headphones in a quiet area, you might hear a faint hiss. The microphone has its own intrinsic noise. This self-inflicted noise is what we want to get rid of in LIGO,” Wipf says.

LIGO already handles the problem extremely well using a traditional feedback control system. The controller senses the rumble in the mirrors caused by seismic noise and then counteracts these vibrations, but in a way that introduces a new higher-frequency quiver in the mirrors—like the hiss in the headphones. The controller senses the hiss too and constantly reacts to both types of disturbances to keep the mirrors as still as possible. This type of system is sometimes compared to a waterbed: Trying to quiet waves at one frequency leads to extra jiggling at another frequency. Controllers can automatically sense the disturbances and stabilize a system.
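The waterbed trade-off shows up even in a toy feedback loop. The sketch below assumes a hypothetical open-loop gain L(s) = k/(s + 1)^2 with k = 10, which is not LIGO's actual controller, and evaluates the sensitivity |S(jω)| = |1/(1 + L(jω))|, the fraction of an outside disturbance that survives the loop at frequency ω. Values below 1 mean the controller suppresses motion; values above 1 mean the controller itself adds motion, like the hiss in the headphones.

```python
def loop_gain(omega: float, k: float = 10.0) -> complex:
    """Hypothetical open-loop gain L(jw) = k / (1 + jw)^2; a toy, not LIGO's loop."""
    s = 1j * omega
    return k / (1 + s) ** 2


def sensitivity(omega: float, k: float = 10.0) -> float:
    """Magnitude of the sensitivity function S(jw) = 1 / (1 + L(jw)).

    |S| < 1: the loop suppresses disturbances at this frequency.
    |S| > 1: the loop adds motion there (the "waterbed" bulge).
    """
    return abs(1 / (1 + loop_gain(omega, k)))


for omega in (0.01, 0.1, 1.0, 3.0, 10.0, 100.0):
    print(f"omega = {omega:>6} rad/s   |S| = {sensitivity(omega):.3f}")
```

With these assumed numbers, slow disturbances are cut roughly elevenfold, while |S| climbs above 1.5 around 3 to 4 rad/s: pushing noise down in one band pushes it up in another. The toy loop is closed-loop stable, since its characteristic polynomial s^2 + 2s + 11 has roots -1 ± j√10.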

Researchers want to further improve the LIGO control system by reducing this controller-induced hiss, which interferes with gravitational-wave signals in the lower-frequency portion of the observatory’s range. LIGO detects gravitational waves with a frequency between 10 and 5,000 Hertz (humans hear sound waves with a frequency between 20 and 20,000 Hertz). The unwanted hiss lies in the range between 10 and 30 Hertz, and this is where more massive black hole mergers would be picked up, as well as where black holes would be caught near the beginning of their final death spirals (for instance, the famous “chirps” heard by LIGO start at lower frequencies and then rise to a higher pitch).
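Two standard leading-order (Newtonian) estimates help show why the 10 to 30 Hertz band matters. The gravitational-wave frequency at the innermost stable circular orbit, f ≈ c³/(6^(3/2) π G M), falls as the total mass M rises, so heavier binaries finish their inspiral at lower frequencies. The time a binary needs to sweep from a frequency f up to merger scales as f^(-8/3), so lowering the usable floor lengthens the observable signal. This sketch uses rounded constants and ignores spin, eccentricity, and higher-order corrections; the example masses are illustrative assumptions.

```python
import math

G = 6.674e-11      # gravitational constant, m^3 kg^-1 s^-2
C = 2.998e8        # speed of light, m/s
M_SUN = 1.989e30   # solar mass, kg


def isco_gw_frequency(total_mass_msun: float) -> float:
    """Leading-order GW frequency (Hz) at the innermost stable circular orbit."""
    m = total_mass_msun * M_SUN
    return C**3 / (6**1.5 * math.pi * G * m)


def time_to_merger(f_low_hz: float, chirp_mass_msun: float) -> float:
    """Newtonian time (s) for a circular binary to sweep from f_low_hz to merger."""
    mc_seconds = G * chirp_mass_msun * M_SUN / C**3  # chirp mass expressed in seconds
    return (5 / 256) * mc_seconds ** (-5 / 3) * (math.pi * f_low_hz) ** (-8 / 3)


for mass in (20, 100, 300):
    print(f"M = {mass:>3} Msun: inspiral ends near {isco_gw_frequency(mass):6.1f} Hz")

gain = time_to_merger(10, 30) / time_to_merger(30, 30)
print(f"Starting at 10 Hz instead of 30 Hz gives about {gain:.1f}x more in-band time")
```

In this approximation a binary totaling a few hundred solar masses, in the hypothesized intermediate-mass range, ends its inspiral inside the 10 to 30 Hertz band, and any binary stays in view about 3^(8/3) ≈ 19 times longer if tracking starts at 10 Hz rather than 30 Hz.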

About four years ago, Jan Harms, a former Caltech research assistant professor who is now a professor at Gran Sasso Science Institute, reached out to experts at Google DeepMind to see if they could help develop an AI method to better control vibrations in LIGO’s mirrors. At that point, Adhikari got involved, and the researchers began working with Google DeepMind to try different AI methods. In the end, they used a technique called reinforcement learning, which essentially taught the AI algorithm how to better control the noise.

“This method requires a lot of training,” Adhikari says. “We supplied the training data, and Google DeepMind ran the simulations. Basically, they were running dozens of simulated LIGOs in parallel. You can think of the training as playing a game. You get points for reducing the noise and dinged for increasing it. The successful ‘players’ keep going to try to win the game of LIGO. The result is beautiful—the algorithm works to suppress mirror noise.”
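Adhikari's game analogy corresponds to a reward signal. The sketch below is a hypothetical scoring rule, not Google DeepMind's actual reward: it measures mirror motion in the 10 to 30 Hertz band with a plain discrete Fourier transform and scores a candidate controller by how much it lowers that in-band power relative to a baseline controller. The sample rate, band edges, and test signals are assumptions chosen for illustration.

```python
import cmath
import math
from typing import List


def band_power(signal: List[float], sample_rate: float, f_lo: float, f_hi: float) -> float:
    """Sum of squared DFT magnitudes over bins whose frequency lies in [f_lo, f_hi].

    Uses a naive DFT restricted to in-band bins so only the standard library is needed.
    """
    n = len(signal)
    total = 0.0
    for k in range(1, n // 2 + 1):
        freq = k * sample_rate / n
        if f_lo <= freq <= f_hi:
            coeff = sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal))
            total += abs(coeff) ** 2
    return total


def reward(candidate: List[float], baseline: List[float],
           sample_rate: float = 256.0, f_lo: float = 10.0, f_hi: float = 30.0) -> float:
    """Hypothetical score: positive ("points") if the candidate leaves less in-band
    mirror motion than the baseline, negative ("dinged") if it leaves more."""
    return (band_power(baseline, sample_rate, f_lo, f_hi)
            - band_power(candidate, sample_rate, f_lo, f_hi))


# One second of toy mirror motion sampled at 256 Hz.
rate, n = 256.0, 256
times = [i / rate for i in range(n)]
slow = [math.sin(2 * math.pi * 2 * t) for t in times]    # low-frequency motion, below the band
hiss = [math.sin(2 * math.pi * 20 * t) for t in times]   # controller noise at 20 Hz, in the band
baseline = [s + h for s, h in zip(slow, hiss)]
quieter = [s + 0.1 * h for s, h in zip(slow, hiss)]

print(reward(quieter, baseline))  # positive: in-band hiss reduced
print(reward(baseline, quieter))  # negative: in-band hiss increased
```

Motion outside the band does not change the score, so this toy rule only rewards quieting the 10 to 30 Hertz region; a practical setup would also need to keep the loop stable and preserve low-frequency suppression.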

Richard Murray (BS ’85), the Thomas E. and Doris Everhart Professor of Control and Dynamical Systems and Bioengineering at Caltech, explains that without AI, scientists and engineers mathematically model a system they want to control in explicit detail. “But with AI, if you train it on a model of sufficient detail, it can exploit features in the system that you wouldn’t have considered using classical methods,” he says. An expert in control theory for complex systems, Murray (who is not an author on the current study) develops AI tools for certain control systems, such as those used in self-driving vehicles.

“We think this research will inspire more students to want to work at LIGO and be part of this remarkable innovation,” Adhikari says. “We are at the bleeding edge of what’s possible in measuring tiny, quantum distances.”

So far, the new AI method has been tested on LIGO for only an hour to demonstrate that it works. The team is looking forward to conducting longer-duration tests and ultimately implementing the method on several LIGO systems. “This is a tool that changes how we think about what ground-based detectors are capable of,” Wipf says. “It makes an incredibly challenging problem less daunting.”

The Science paper titled “Improving cosmological reach of LIGO using Deep Loop Shaping” was supported in part by the National Science Foundation, which funds LIGO.

Palantir CEO Alex Karp says U.S. labor workers won’t lose their jobs to AI—‘it’s not true’

As fears swirl that American manufacturing workers and skilled laborers may soon be replaced by artificial intelligence and robots, Alex Karp, CEO of the AI and data analytics software company Palantir Technologies, hopes to change the narrative.

“It’s not true, and in fact, it’s kind of the opposite,” Karp said in an interview with Fortune on Thursday at AIPCon, the company’s commercial customer conference, held at George Lucas’ Skywalker Ranch in Marin County, Calif., where Palantir customers showcased how they were using the company’s software platform and generative AI within their own businesses.

The primary danger of AI in this country, says Karp, is that workers don’t understand that AI will actually help them in their roles rather than replace them. “Silicon Valley’s done an immensely crappy job of explaining that,” he said. “If you’re in manufacturing, in any capacity: You’re on the assembly line, you maintain a complicated machine—you have any kind of skilled labor job—the way we do AI will actually make your job more valuable and make you more valuable. But currently you would think—just roaming around the country, and if you listen to the AI narratives coming out of Silicon Valley—that all these people are going to lose their jobs tomorrow.”

Karp made these comments the day before the Bureau of Labor Statistics released its August jobs report, which showed a climbing unemployment rate and stagnating hiring figures, reigniting questions about whether AI is at all responsible for the broader slowdown. So far, there has been limited data suggesting that generative AI is to blame for the slowing jobs market, or even for job cuts, though a recent ADP hiring report offered a rare suggestion that AI may be one of several factors influencing hiring sentiment. Some executives, including Salesforce’s Marc Benioff, have cited the efficiency gains of AI for layoffs at their companies, and others, like Ford CEO Jim Farley and Amazon CEO Andy Jassy, have made lofty predictions about how AI is on track to replace jobs in the future. Most of these projections have centered on white-collar roles in particular, rather than manufacturing or skilled labor positions.

Karp, who has a PhD in neoclassical social theory and a reputation for being outspoken and contrarian on many issues, argues that fears of AI eliminating skilled labor jobs are unfounded—and he’s committed to “correcting” the public perception.

Earlier this week, Palantir launched “Working Intelligence: The AI Optimism Project,” a quasi-public information and marketing campaign centered around artificial intelligence in the workplace. The project has begun with a series of short blog posts featuring Palantir’s customers and their opinions on AI, as well as a “manifesto” that takes aim at both the “doomers” and “pacifiers” of AI. “Doomers fear, and pacifiers welcome, a future of conformity: a world in which AI flattens human difference. Silicon Valley is already selling such bland, dumbed-down slop,” the manifesto declares, arguing that the true power of AI is not to standardize but to “supercharge” workers.

Jordan Hirsch, who is spearheading the new project at Palantir, said that there are approximately 20 people working on it and that they plan to launch a corresponding podcast.

While Palantir has an obvious commercial interest in dispelling public fears about AI, Karp framed his commitment to the project as something important for society. Fears about job replacement will “feed a kind of weird populism based on a notion that’s not true—that’s going to make the factions on the right and left much, much, much more powerful based on something that’s not true,” he said. “I think correcting that—but not just by saying platitudes, but actually showing how this works, is one of the most important things we have to get on top of.”

Karp said he planned to invest “lots of energy and money” into the AI Optimism Project. When asked how much money, he said he didn’t know yet, but that “we have a lot of money, and it’s one of my biggest priorities.”

Palantir has seen enormous growth within the commercial side of its business in the last two years, largely due to the artificial intelligence product it released in 2023, called “AIP.” Palantir’s revenue surpassed $1 billion for the first time last quarter. And while Palantir only joined the S&P 500 last year, it now ranks as one of the most valuable companies in the world thanks to its soaring stock price.

Delaware Partnership to Build AI Skills in Students, Workers

Delaware has announced a partnership with OpenAI on its certification program, which aims to build AI skills in the state among students and workers alike.

The Diamond State’s officials have been exploring how to move forward responsibly with AI, establishing a generative AI policy this year to help inform safe use among public-sector employees, which one official said was the “first step” to informing employees about acceptable AI use. The Delaware Artificial Intelligence Commission also took action this year to advance a “sandbox” environment for testing new AI technologies including agentic AI; the sandbox model has proven valuable for governments across the U.S., from San Jose to Utah.

The OpenAI Certification Program aims to address a common challenge for states: fostering AI literacy in the workforce and among students. It builds on the OpenAI Academy, an open-to-all initiative launched in an effort to democratize knowledge about AI. The initiative’s expansion will enable the company to offer certifications based upon levels of AI fluency, from the basics to prompt engineering. The company is committing to certifying 10 million Americans by 2030.

“As a former teacher, I know how important it is to give our students every advantage,” Gov. Matt Meyer said in a statement. “As Governor, I know our economy depends on workers being ready for the jobs of the future, no matter their zip code.”

The partnership will start with early-stage programming across schools and workforce training programs in Delaware in an effort led by the state’s new Office of Workforce Development, which was created earlier this year. The office will work with schools, colleges and employers in coming months to identify pilot opportunities for this programming, to ensure that every community in the state has access.

Because the program is in its early stages and Delaware is one of the first states to join, Delaware will help shape how certifications are rolled out at the community level, according to the state’s announcement.

“We’ll obviously use AI to teach AI: anyone will be able to prepare for the certification in ChatGPT’s Study mode and become certified without leaving the app,” OpenAI’s CEO of Applications Fidji Simo said in an article.

This announcement comes on the heels of the release of the federal AI Action Plan. Among other provisions, including some that could limit states’ regulatory authority, the plan aims to invest in skills training and AI literacy.

“By boosting AI literacy and investing in skills training, we’re equipping hardworking Americans with the tools they need to lead and succeed in this new era,” U.S. Secretary of Labor Lori Chavez-DeRemer said in a statement about the federal plan.

Delaware’s partnership with OpenAI for its certification program mirrors this goal, equipping Delawareans with the knowledge to use these tools in the classroom, in their careers and beyond.

AI skills are a critical part of broader digital literacy efforts; today, “even basic digital skills include AI,” National Digital Inclusion Alliance Director Angela Siefer said earlier this summer.

The End of Chain-of-Thought? CoreThink and University of California Researchers Propose a Paradigm Shift in AI Reasoning

For years, the race in artificial intelligence has been about scale. Bigger models, more GPUs, longer prompts. OpenAI, Anthropic, and Google have led the charge with massive large language models (LLMs), reinforcement learning fine-tuning, and chain-of-thought prompting—techniques designed to simulate reasoning by spelling out step-by-step answers.

But a new technical white paper from CoreThink AI and University of California researchers, titled “CoreThink: A Symbolic Reasoning Layer to reason over Long Horizon Tasks with LLMs,” argues that this paradigm may be reaching its ceiling. The authors make a provocative claim: LLMs are powerful statistical text generators, but they are not reasoning engines. And chain-of-thought, the method most often used to suggest otherwise, is more performance theater than genuine logic.

In response, the team introduces General Symbolics, a neuro-symbolic reasoning layer designed to plug into existing models. Their evaluations show dramatic improvements across a wide range of reasoning benchmarks—achieved without retraining or additional GPU cost. If validated, this approach could mark a turning point in how AI systems are designed for logic and decision-making.

What Is Chain-of-Thought — and Why It Matters

Chain-of-thought (CoT) prompting has become one of the most widely adopted techniques in modern AI. By asking a model to write out its reasoning steps before delivering an answer, researchers found they could often improve benchmark scores in areas like mathematics, coding, and planning. On the surface, it seemed like a breakthrough.
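In practice, chain-of-thought prompting is mostly a change to the prompt text. The sketch below builds a step-by-step prompt and a direct-answer prompt for the same question, then pulls the final answer out of a completion. The template wording and the `Answer:` convention are assumptions for illustration, and no particular model API is called.

```python
from typing import Optional


def build_prompt(question: str, chain_of_thought: bool = True) -> str:
    """Return a chain-of-thought prompt, or a direct-answer prompt for comparison."""
    if chain_of_thought:
        return (
            f"Question: {question}\n"
            "Think through the problem step by step, writing out each step.\n"
            "Then give the final result on its own line as 'Answer: <result>'."
        )
    return f"Question: {question}\nReply with only 'Answer: <result>'."


def extract_answer(completion: str) -> Optional[str]:
    """Return the text of the last 'Answer:' line; the written steps are ignored."""
    answer = None
    for line in completion.splitlines():
        stripped = line.strip()
        if stripped.startswith("Answer:"):
            answer = stripped[len("Answer:"):].strip()
    return answer


# A made-up completion in the requested format (no model is called here).
completion = (
    "Step 1: The train covers 60 km each hour.\n"
    "Step 2: Over 3 hours that is 3 * 60 = 180 km.\n"
    "Answer: 180 km"
)
print(build_prompt("How far does a 60 km/h train travel in 3 hours?"))
print(extract_answer(completion))  # 180 km
```

Scoring reads only the final line: nothing here checks that the written steps are what actually produced the answer, which is the faithfulness gap the report points to.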

Yet the report underscores the limitations of this approach. CoT explanations may look convincing, but studies show they are often unfaithful to what the model actually computed, rationalizing outputs after the fact rather than revealing true logic. This creates real-world risks. In medicine, a plausible narrative may mask reliance on spurious correlations, leading to dangerous misdiagnoses. In law, fabricated rationales could be mistaken for genuine justifications, threatening due process and accountability.

The paper further highlights inefficiency: CoT chains often grow excessively long on simple problems, while collapsing into shallow reasoning on complex ones. The result is wasted computation and, in many cases, reduced accuracy. The authors conclude that chain-of-thought is “performative, not mechanistic”—a surface-level display that creates the illusion of interpretability without delivering it.

Symbolic AI: From Early Dreams to New Revivals

The critique of CoT invites a look back at the history of symbolic AI. In its earliest decades, AI research revolved around rule-based systems that encoded knowledge in explicit logical form. Expert systems like MYCIN attempted to diagnose illnesses by applying hand-crafted rules, and fraud detection systems relied on vast rule sets to catch anomalies.

Symbolic AI had undeniable strengths: every step of its reasoning was transparent and traceable. But these systems were brittle. Encoding tens of thousands of rules required immense labor, and they struggled when faced with novel situations. Critics like Hubert Dreyfus argued that human intelligence depends on tacit, context-driven know-how that no rule set could capture. By the 1990s, symbolic approaches gave way to data-driven neural networks.
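Both the transparency and the hand labor of rule-based systems show up in a toy forward-chaining engine. The rules below are invented for illustration and are not taken from MYCIN or any real fraud system: a rule fires when all its premises are known facts, and every firing is logged, so the full reasoning path can be read back. Covering a new situation, however, means writing another rule by hand.

```python
from typing import List, Set, Tuple

# Invented, illustrative rules: (name, premises, conclusion). Not taken from any real system.
RULES = [
    ("R1", {"new_account", "large_transfer"}, "unusual_activity"),
    ("R2", {"unusual_activity", "foreign_ip"}, "flag_for_review"),
    ("R3", {"flag_for_review"}, "hold_transaction"),
]


def forward_chain(facts: Set[str], rules=RULES) -> Tuple[Set[str], List[str]]:
    """Fire rules until no new facts appear; return all facts plus a step-by-step trace."""
    known = set(facts)
    trace: List[str] = []
    changed = True
    while changed:
        changed = False
        for name, premises, conclusion in rules:
            # A rule fires only if every premise is already known and it adds something new.
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                trace.append(f"{name}: {' and '.join(sorted(premises))} => {conclusion}")
                changed = True
    return known, trace


facts, trace = forward_chain({"new_account", "large_transfer", "foreign_ip"})
for step in trace:
    print(step)
```

Each printed line names the rule and the facts that triggered it. Dropping `foreign_ip` from the input stops the chain after R1, because no rule covers that case.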

In recent years, there has been a renewed effort to combine the strengths of both worlds through neuro-symbolic AI. The idea is straightforward: let neural networks handle messy, perceptual inputs like images or text, while symbolic modules provide structured reasoning and logical guarantees. But most of these hybrids have struggled with integration. Symbolic backbones were too rigid, while neural modules often undermined consistency. The result was complex, heavy systems that failed to deliver the promised interpretability.

General Symbolics: A New Reasoning Layer

CoreThink’s General Symbolics Reasoner (GSR) aims to overcome these limitations with a different approach. Instead of translating language into rigid formal structures or high-dimensional embeddings, GSR operates entirely within natural language itself. Every step of reasoning is expressed in words, ensuring that context, nuance, and modality are preserved. This means that differences like “must” versus “should” are carried through the reasoning process, rather than abstracted away.

The framework works by parsing inputs natively in natural language, applying logical constraints through linguistic transformations, and producing verbatim reasoning traces that remain fully human-readable. When contradictions or errors appear, they are surfaced directly in the reasoning path, allowing for transparency and debugging. To remain efficient, the system prunes unnecessary steps, enabling stable long-horizon reasoning without GPU scaling.
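The article does not describe GSR's internals, so the following is only a hypothetical illustration of two properties attributed to it: reasoning steps kept as plain sentences with their modality ("must" versus "should") intact, and contradictions written into the trace instead of being silently resolved. The sentence pattern, the `Claim` type, and the conflict rule are all assumptions; this is not the General Symbolics Reasoner.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical sentence shape: "<subject> must|should [not] <action>."
PATTERN = re.compile(
    r"^(?P<subject>.+?) (?P<modal>must|should)(?P<neg> not)? (?P<action>.+?)\.?$"
)


@dataclass(frozen=True)
class Claim:
    sentence: str  # original wording, kept verbatim for the trace
    subject: str
    modal: str     # "must" (binding) vs "should" (advisory) is carried through, not dropped
    negated: bool
    action: str


def parse(sentence: str) -> Optional[Claim]:
    """Split a sentence into subject, modality, polarity and action; parts stay plain text."""
    text = sentence.strip()
    m = PATTERN.match(text)
    if m is None:
        return None
    return Claim(text, m["subject"], m["modal"], m["neg"] is not None, m["action"])


def check(sentences: List[str]) -> List[str]:
    """Return a human-readable trace; conflicts are written into it, not hidden."""
    trace: List[str] = []
    seen: List[Claim] = []
    for s in sentences:
        claim = parse(s)
        if claim is None:
            trace.append(f"UNPARSED: {s}")
            continue
        trace.append(f"ACCEPT: {claim.sentence}")
        for prior in seen:
            same_topic = (prior.subject, prior.action) == (claim.subject, claim.action)
            if same_topic and prior.negated != claim.negated:
                # Two binding rules in opposition are a hard contradiction;
                # anything involving "should" is only flagged as tension.
                kind = "CONTRADICTION" if prior.modal == claim.modal == "must" else "TENSION"
                trace.append(f"{kind}: '{prior.sentence}' vs '{claim.sentence}'")
        seen.append(claim)
    return trace


for line in check([
    "The service must encrypt user data.",
    "The service should not log requests.",
    "The service must not encrypt user data.",
]):
    print(line)
```

Because "should" is kept rather than collapsed into "must", the same opposing pair is reported as a softer tension when one side is only advisory.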

Because it acts as a layer rather than requiring retraining, GSR can be applied to existing base models. In evaluations, it consistently delivered accuracy improvements of between 30 and 60 percent across reasoning tasks, all without increasing training costs.

Benchmark Results

The improvements are best illustrated through benchmarks. On LiveCodeBench v6, which evaluates competition-grade coding problems, CoreThink achieved a 66.6 percent pass rate, substantially higher than leading models in its category. In SWE-Bench Lite, a benchmark for real-world bug fixing drawn from GitHub repositories, the system reached 62.3 percent accuracy, which the authors report as the highest result to date. And on ARC-AGI-2, one of the most demanding tests of abstract reasoning, it scored 24.4 percent, far surpassing frontier models like Claude and Gemini, which remain below 6 percent.

These numbers reflect more than raw accuracy. In detailed case studies, the symbolic layer enabled models to act differently. In scikit-learn’s ColumnTransformer, for instance, a baseline model proposed a superficial patch that masked the error. The CoreThink-augmented system instead identified the synchronization problem at the root and fixed it comprehensively. On a difficult LeetCode challenge, the base model misapplied dynamic programming and failed entirely, while the symbolic reasoning layer corrected the flawed state representation and produced a working solution.

How It Fits into the Symbolic Revival

General Symbolics joins a growing movement of attempts to bring structure back into AI reasoning. Classic symbolic AI showed the value of transparency but could not adapt to novelty. Traditional neuro-symbolic hybrids promised balance but often became unwieldy. Planner stacks that bolted search onto LLMs offered early hope but collapsed under complexity as tasks scaled.

Recent advances point to the potential of new hybrids. DeepMind’s AlphaGeometry, for instance, pairs a neural language model with a symbolic deduction engine and has demonstrated that this combination can outperform pure neural models on olympiad-level geometry problems. CoreThink’s approach extends this trend. In its ARC-AGI pipeline, deterministic object detection and symbolic pattern abstraction are combined with neural execution, producing results far beyond those of LLM-only systems. In tool use, the symbolic layer helps maintain context and enforce constraints, allowing for more reliable multi-turn planning.

The key distinction is that General Symbolics does not rely on rigid logic or massive retraining. By reasoning directly in language, it remains flexible while preserving interpretability. This makes it lighter than earlier hybrids and, crucially, practical for integration into enterprise applications.

Why It Matters

If chain-of-thought is an illusion of reasoning, then the AI industry faces a pressing challenge. Enterprises cannot depend on systems that only appear to reason, especially in high-stakes environments like medicine, law, and finance. The paper suggests that real progress will come not from scaling models further, but from rethinking the foundations of reasoning itself.

General Symbolics is one such foundation. It offers a lightweight, interpretable layer that can enhance existing models without retraining, producing what the authors present as genuine reasoning improvements rather than surface-level narratives. For the broader AI community, it marks a possible paradigm shift: a return of symbolic reasoning, not as brittle rule sets, but as a flexible companion to neural learning.

As the authors put it: “We don’t need to add more parameters to get better reasoning—we need to rethink the foundations.”
