AI Insights

Artificial Intelligence at Fifth Third Bank

Fifth Third Bank, a leading regional financial institution with over 1,100 branches in 11 states, operates four main businesses: commercial banking, branch banking, consumer lending, and wealth and asset management. Founded in 1858 and headquartered in Cincinnati, the bank has assets in excess of $211 billion. During the first quarter of 2025, Fifth Third Bank saw loan growth, net interest margin expansion, and expense discipline, which led to positive operating leverage.

Fifth Third Bank has been at the forefront of technological innovation for decades. Press materials surrounding new systems note that the bank is proud to have launched the first online automated teller system and shared ATM network in the U.S. in 1977, giving customers 24/7 banking access.

Additionally, this drastically increased the number of ATMs customers could use since they were able to access ATMs owned by other banks. Fifth Third directly invests in AI to enhance its core banking operations and indirectly invests in AI to improve healthcare through Big Data Healthcare, a wholly owned indirect subsidiary.

Fifth Third’s annual filing in 2024 reflects an uptick in the bank’s technology and communications spending, showing a roughly 14% increase compared to 2022. Fifth Third takes a highly intentional and risk-minded approach to AI, having spent the better part of 2024 focused on establishing key governance foundations before rolling out AI capabilities. Additionally, the bank concentrates deployment on areas where AI brings genuine business value.

This article examines two AI use cases at Fifth Third Bank:

- Conversational AI for streamlined customer service delivery: Leveraging natural language processing (NLP) to reduce calls received by human agents by double-digit percentages and saving millions in costs.

- Surfacing customer satisfaction scores: Leveraging analytics to analyze customer interactions and improve agent performance.

Redefining Customer Service with Conversational AI

The onset of the pandemic in early 2020 brought the strain of heavy call volumes on contact centers to the forefront. One study emphasizes how a voice-first approach is needed to help contact centers handle a sudden surge in calls.

Like many companies, Fifth Third Bank saw customer inquiries skyrocket at the start of the pandemic. The bank was already piloting a chatbot in the years immediately preceding the pandemic. Then, in early 2020, as the pandemic unfolded, Fifth Third quickly realized it had to help its overwhelmed call center.

Before Jeanie 2.0, customer service agents on the call center floor were required to scroll up to read the entire conversation history to understand customers’ needs and personalized requests better. Additionally, while Jeanie 1.0 could provide step-by-step instructions to complete specific tasks, it had the following issues:

- Did not always provide the correct answers to customers’ questions

- Connected customers to agents when it wasn’t necessary

- Required agents to manually search through a variety of content to perform different tasks when helping customers, and to find the specific script telling them what information to send to the customer

- Required agents to create their own scripts to answer more complex questions

Screenshot of the Zelle Flow for Jeanie 1.0 showing the problematic early default to agent escalation (Source: UC Center for Business Analytics)

Jeanie’s natural language understanding model is built on LivePerson’s conversational platform and uses traditional AI, according to Senior Director of Conversational AI at Fifth Third Bank, Michelle Grimm.

Jeanie 2.0 is more user-friendly for the Fifth Third agents using it. It uses LivePerson’s predefined content library to streamline and increase efficiency for agents when they are helping customers. The documentation outlines the benefits of using predefined content, including:

- Saves agents time

- Ensures consistent, error-free responses

- Maintains a professional tone of voice

Fifth Third Bank’s Annual Report highlights the following benefits of Jeanie:

- Reduced calls requiring a live agent by nearly 10%

- Generated over $10 million in annual savings

- Improved customer satisfaction

- Improved employee retention

- Shortened account opening times by more than 60%

The same report also states that Jeanie underwent a significant update in October 2023, resulting in expanding Jeanie’s capabilities by over 300%.

Alex Ross, Sr. Content Producer for LivePerson, explained additional benefits in a blog post, including Jeanie’s ability to respond to over 150 intents and over 30,000 phrases with over 95% accuracy.

Improving Customer Experience (CX) with AI-surfaced Customer Satisfaction Scores

Traditionally, companies use a survey-based system to manage customer experience and agent performance, and Fifth Third Bank was no exception. The bank primarily relied on customer surveys to gain insight into how customers viewed their interactions with the contact center. However, this method has notable shortcomings, especially in an industry where 88% of bank customers report that customer experience is as important as, or even more important than, the actual products and services. These limitations include:

- Low visibility for managers into agent contributions to customer experience

- Identification of only a limited range of coachable topics

Also, metrics such as average handle time were used to evaluate agent performance. With an increase in automation, average handle time loses its ability to serve as an effective metric. The reason is that automation results in the more complex issues being surfaced to agents, which leads to an increase in average handle time. Fifth Third transitioned away from focusing on surveys in favor of sentiment analysis.

According to the case study published by NiCE, Fifth Third had multiple goals related to customer experience and satisfaction, including:

- Reach the top of independent third-party customer experience rankings

- Gain customer sentiment metrics from every interaction

- Obtain insights from a more representative group of bank customers

- Replace the costly, limited-utility survey program

Fifth Third Bank began using Enlighten AI and Nexidia Analytics by NiCE in the hopes of reaching those goals and improving how they coach 700 agents across 3 locations. More specifically, the sentiment score from Enlighten allows Fifth Third to find an ideal range for average handle time for its agents.

In the video below, Michelle Grimm, Senior Director of Conversational AI at Fifth Third Bank, explains how the bank has improved agent feedback with Enlighten AI, citing metrics like average handle time at around the 1:21 mark:

Grimm explains how Fifth Third was able to use NiCE Enlighten to identify the optimal range for average handle time (AHT), above which sentiment declines; the optimal range identified was 3-5 minutes.
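To make the idea of an optimal handle-time range concrete, the sketch below groups interactions into one-minute buckets and reports the buckets whose average sentiment is close to the best observed. It is only an illustration: the function names, the tolerance parameter, and the sample numbers are hypothetical and are not drawn from Fifth Third or NiCE.

```python
from collections import defaultdict


def mean_sentiment_by_minute(interactions):
    """Average sentiment per whole-minute handle-time bucket.

    `interactions` is a list of (handle_time_seconds, sentiment) pairs.
    """
    buckets = defaultdict(list)
    for seconds, sentiment in interactions:
        buckets[int(seconds // 60)].append(sentiment)
    return {minute: sum(vals) / len(vals) for minute, vals in sorted(buckets.items())}


def best_minutes(averages, tolerance=0.05):
    """Return the minute buckets whose mean sentiment is within `tolerance` of the best."""
    best = max(averages.values())
    return [minute for minute, avg in averages.items() if avg >= best - tolerance]


# Hypothetical sample data, invented purely for illustration.
sample = [(95, 0.35), (190, 0.78), (215, 0.82), (260, 0.80), (290, 0.76), (410, 0.30)]
averages = mean_sentiment_by_minute(sample)
print(best_minutes(averages))
```

With real interaction data, the returned buckets would suggest where sentiment peaks and where it starts to fall off as calls run longer.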

Fifth Third uses the sentiment scores to refine the coaching process and ultimately improve customer interactions. Grimm mentions that her team uses a color-coded system consisting of a standard red, yellow, and green distribution to focus on positive behaviors for reinforcement and address areas for improvement.

The sentiment scores have served as a basis for improved collaboration across Fifth Third’s three geographically distributed call sites: Cincinnati, Grand Rapids, and the Philippines.

The bank also rolled out Nexidia Analytics in 2021. As part of that effort, they analyzed over 15.7 million interactions involving 2,300 agents.

With the shift from survey to sentiment, Fifth Third Bank saw some immediate benefits, including improved employee productivity, higher employee compliance, and lower costs as speech analytics identified processes that could be automated.

Increased sentiment scores can have wide-reaching effects across the entire enterprise over time. In the above video, Grimm is emphatic that positive feedback to agents translates into customer experience. This is why Grimm says that it’s essential for agents to hone areas in which they’re good as well as focus on areas of improvement. She fully recognizes how customer-based the banking industry is, which is why improving sentiment scores is so vital.

Over time, sustained increased sentiment helps to:

- Strengthen customer loyalty

- Reinforce the bank’s reputation

- Reduce customer churn

- Reduce compliance risks

A 2023 Bain & Company report shows consumers who give high loyalty scores cost less to serve and spend more with their bank. Additionally, they are more likely to recommend their bank to family and friends.

While Fifth Third has not published quantifiable numbers, the bank has indicated in press materials that the use of NiCE Enlighten AI and Nexidia Analytics resulted in increased sentiment scores and lower costs.


Asia Fund Beating 95% of Peers Is Bullish on Chip Gear Makers

Chinese chipmakers are trading at a four-year high versus their US peers, but a top fund manager still sees pockets of opportunity among their equipment suppliers.



Deep computer vision with artificial intelligence based sign language recognition to assist hearing and speech-impaired individuals

This study proposes a novel HHODLM-SLR technique, which concentrates on the automatic detection and classification of sign language (SL) for hearing and speech-impaired individuals. The technique comprises bilateral filtering (BF)-based image pre-processing, ResNet-152-based feature extraction, BiLSTM-based sign language recognition (SLR), and Harris hawks optimization (HHO)-based hyperparameter tuning. Figure 1 represents the workflow of the HHODLM-SLR model.

Image Pre-processing

Initially, the HHODLM-SLR approach utilizes BF to eliminate noise in the input image dataset [38]. This model is chosen due to its dual capability to mitigate noise while preserving critical edge details, which is crucial for precisely interpreting complex hand gestures. Unlike conventional filters, such as Gaussian or median filtering, that may blur crucial features, BF maintains spatial and intensity-based edge sharpness. This ensures that key contours of hand shapes are retained, which improves feature extraction downstream. Its nonlinear, content-aware nature makes it particularly effective for complex visual patterns in sign language datasets. Furthermore, BF operates efficiently and is adaptable to varying lighting or background conditions. These merits make it a better choice than conventional pre-processing techniques in this application. Figure 2 represents the working flow of the BF model.

BF is a nonlinear image processing method that preserves edges while reducing noise, which makes it effective for pre-processing in SLR methods. It smooths the image by averaging pixel intensities weighted by both spatial proximity and intensity similarity, ensuring that the edge details essential for recognizing hand movements and shapes remain intact. This is particularly valuable in SLR, where fine edge features and hand gestures are necessary for precise interpretation. By utilizing BF, noise from environmental conditions, namely background clutter or lighting variations, is reduced, improving the clarity of the input image. This pre-processing stage helps improve the performance of feature extraction and the subsequent detection phases in DL methods.
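The weighting described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the implementation used in the study: the function name, the window radius, and the two sigma values are assumptions chosen for the example, and a real pipeline would more likely call an optimized library routine.

```python
import math


def bilateral_filter(image, radius=1, sigma_space=1.0, sigma_intensity=25.0):
    """Bilateral filter for a 2D grayscale image given as a list of rows."""
    height, width = len(image), len(image[0])
    output = [[0.0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            center = image[y][x]
            weighted_sum = 0.0
            weight_total = 0.0
            for dy in range(-radius, radius + 1):
                for dx in range(-radius, radius + 1):
                    ny, nx = y + dy, x + dx
                    if not (0 <= ny < height and 0 <= nx < width):
                        continue  # skip neighbours outside the image
                    neighbour = image[ny][nx]
                    # Spatial weight: closer pixels contribute more.
                    w_space = math.exp(-(dx * dx + dy * dy) / (2 * sigma_space ** 2))
                    # Range weight: pixels of similar intensity contribute more,
                    # so pixels across an edge are effectively ignored.
                    w_range = math.exp(-((neighbour - center) ** 2) / (2 * sigma_intensity ** 2))
                    weight = w_space * w_range
                    weighted_sum += weight * neighbour
                    weight_total += weight
            output[y][x] = weighted_sum / weight_total
    return output


# A noisy step edge: a dark region (around 10) next to a bright one (around 200).
noisy = [
    [12, 8, 11, 198, 203, 199],
    [9, 13, 10, 202, 197, 201],
    [11, 10, 7, 200, 204, 196],
]
smoothed = bilateral_filter(noisy)
```

Because the range weight collapses for pixels on the other side of the step, the dark and bright regions are each smoothed internally while the boundary between them stays sharp.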

Feature extraction using ResNet-152 model

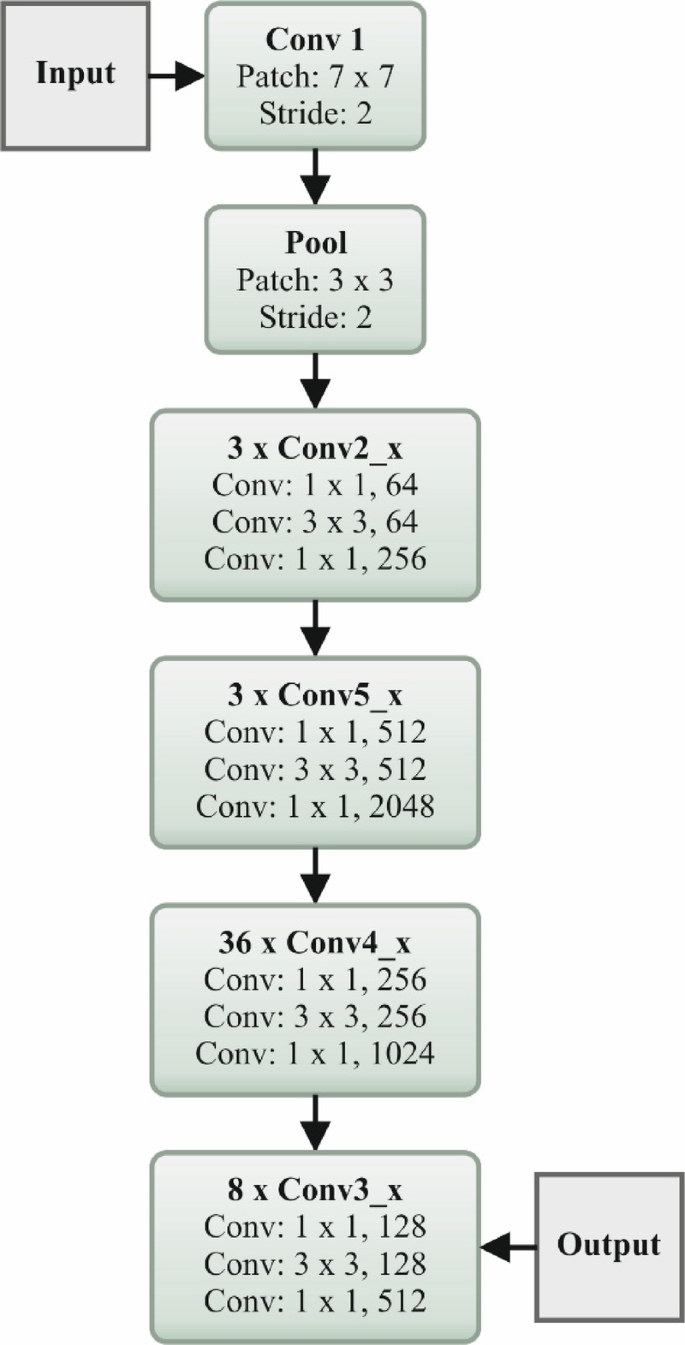

The HHODLM-SLR technique implements the ResNet152 model for feature extraction [39]. This model is selected due to its deep architecture and its capability to handle vanishing gradient issues through residual connections. Compared to standard deep networks or CNNs, it captures more complex and abstract features that are significant for distinguishing subtle differences in hand gestures. Its 152-layer depth allows it to learn rich hierarchical representations, enhancing recognition accuracy. The skip connections in ResNet improve gradient flow and training stability. Furthermore, it has proven effective across diverse vision tasks, making it a reliable backbone for SL recognition. This combination of depth, performance, and robustness sets it apart from other feature extractors. Figure 3 illustrates the flow of the ResNet152 technique.

The well-known deep residual network ResNet152 is used as the pre-trained backbone of the deep convolutional neural network (DCNN) in this method. Its residual design addresses the problem of vanishing gradients. The ResNet152 output is then passed to the SoftMax classifier (SMC) for classification. The following part covers the process of extracting and classifying features. The convolution layer (CL), downsampling layer (DSL), and fully connected layer (FCL) are the most common layers that constitute a DCNN. Network depth plays an essential role in achieving better classification results. However, beyond a certain depth, as the CNN is made deeper, the network accuracy first saturates and then degrades. A shortcut mapping function is added in ResNet152 to reduce the influence of this degradation problem.

$$\:W\left(x\right)=K\left(x\right)+x$$

(1)

Here, \(W\left(x\right)\) denotes the mapping function built using a feedforward NN together with a shortcut connection (SC). In general, the SC is an identity map that bypasses the stacked layers directly, and \(K(x,{G}_{i})\) represents the residual mapping function. The formulation is given by Eq. (2).

$$\:Z=K\left(x,\:{G}_{i}\right)+x$$

(2)
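The shortcut formulation in Eq. (2) can be illustrated with a small sketch. The residual branch below is a hypothetical stand-in for the stacked layers \(K(x,{G}_{i})\), not the layers of ResNet152; the function names and the toy weight are assumptions made for the example.

```python
def residual_block(x, residual_fn):
    """Return K(x) + x element-wise, following the form of Eq. (2)."""
    k = residual_fn(x)
    if len(k) != len(x):
        raise ValueError("the residual branch must preserve the input shape")
    return [ki + xi for ki, xi in zip(k, x)]


def toy_residual_branch(x, weight=0.1):
    """Hypothetical stand-in for the stacked layers: scale, then ReLU."""
    return [max(0.0, weight * xi) for xi in x]


features = [1.0, -2.0, 3.0]
out = residual_block(features, toy_residual_branch)

# If the residual branch outputs zeros, the block reduces to the identity map,
# which is why adding more such blocks need not make the network worse.
identity_out = residual_block(features, lambda x: [0.0] * len(x))
```

The identity case is the key design point: the stacked layers only need to learn a correction to the input rather than the full mapping.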

In the CLs of the ResNet method, \(3\times 3\) filters are applied, and down-sampling is performed with a stride of 2. Shortcut connections are then added to build the ResNet. An adaptive function, presented in Eq. (3), is applied to improve the implementation of dropout.

$$u=\frac{1}{n}\sum_{i=1}^{n}\left[z\log\left({S}_{i}\right)+\left(1-z\right)\log\left(1-{S}_{i}\right)\right]$$

(3)

Here, \(n\) denotes the number of training samples, \(u\) signifies the loss function, and \({S}_{i}\) represents the SMC output. The SMC is a generalization of logistic regression (LR) that can be applied to multiple class labels. The SMC output is given in Eq. (4).

$$S_{k}=\frac{e^{l_{k}}}{\sum_{j=1}^{m}e^{l_{j}}},\quad k=1,\cdots,m,\quad y={y}_{1},\cdots,{y}_{m}$$

(4)

Here, \(S_{k}\) is the softmax layer output, \({l}_{k}\) denotes the \(k\)-th component of the input vector \(l\), and \(m\) refers to the total number of neurons in the output layer. The presented model uses 152 10 adaptive dropout layers (ADLs), an SMC, and convolutional layers (CLs).
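As a rough illustration of the softmax output and the loss discussed above, the following sketch computes both on a toy example. Two points are assumptions of this sketch rather than statements from the text: the softmax denominator sums over all output neurons, as in the standard definition, and the loss carries the conventional leading minus sign so that lower values are better.

```python
import math


def softmax(logits):
    """Standard softmax: exp(l_k) / sum_j exp(l_j), shifted by the max for stability."""
    shift = max(logits)
    exps = [math.exp(l - shift) for l in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(targets, probs, eps=1e-12):
    """Mean of -[z log(S) + (1 - z) log(1 - S)] over the given pairs.

    The leading minus sign is the usual convention and is an assumption here.
    """
    total = 0.0
    for z, s in zip(targets, probs):
        s = min(max(s, eps), 1.0 - eps)  # keep log() away from zero
        total += z * math.log(s) + (1 - z) * math.log(1 - s)
    return -total / len(targets)


probs = softmax([2.0, 1.0, 0.1])          # three hypothetical class scores
loss = cross_entropy([1, 0, 0], probs)    # the first class is the true one
```

The probabilities sum to one, and the loss shrinks as the probability assigned to the true class approaches one.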

SLR using Bi-LSTM technique

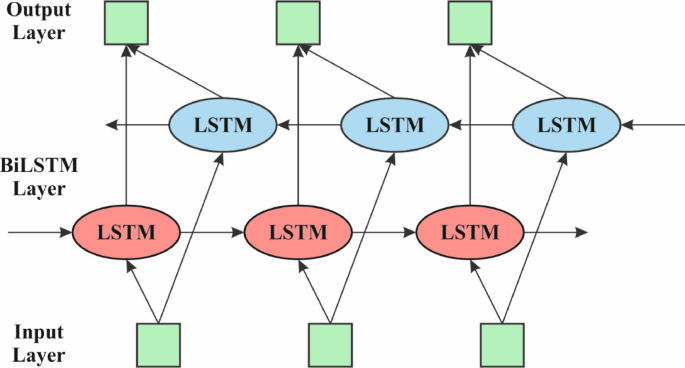

The HHODLM-SLR methodology employs the Bi-LSTM model to perform the SLR process [40]. This model is chosen because it can capture long-term dependencies in both forward and backward directions within gesture sequences. Unlike unidirectional LSTM or conventional RNNs, Bi-LSTM considers past and future context concurrently, which is significant for precisely interpreting the temporal flow of dynamic signs. This bidirectional learning enhances the model’s understanding of gesture transitions and co-articulation effects. Its memory mechanism effectively handles variable-length input sequences, which are common in real-world SLR scenarios. Bi-LSTM outperforms static classifiers like CNNs or SVMs when dealing with sequential data, making it highly appropriate for recognizing time-based gestures. Figure 4 illustrates the Bi-LSTM method.

The DAE-based approach for extracting features is defined here, and Bi-LSTM is applied to classify the data. The model treats classification as a supervised learning problem, and the Bi-LSTM classifier is used to estimate how much the proposed architecture improves classification performance.

An RNN is an approach often applied in DL in which the output at each time stamp is fed back into the network at the following time stamp, which helps model the temporal organization of the data. Nevertheless, RNNs suffer from vanishing and exploding gradient problems. In contrast, the memory unit in an LSTM can choose which data must be saved in memory and when it must be deleted. Therefore, LSTM can effectively deal with training difficulties and vanishing gradients, and can mine time series with relatively long intervals between events.

A standard LSTM architecture has three layers: input, recurrent hidden, and output. Whereas the recurrent hidden layer (HL) of a traditional RNN contains general neuron nodes, memory units serve as the basic module of the LSTM recurrent HL. Each memory unit encloses three adaptive multiplicative gates: the forget, input, and output gates. Every neuron node of the LSTM performs the following computation. The input gate at time \(t\) is computed from the previous output \({h}_{t-1}\) of the unit and the current input \({x}_{t}\), as specified in Eq. (5); it determines whether the current information is used to update the cell.

$${i}_{t}=\sigma\left({W}_{i}\cdot\left[{h}_{t-1},{x}_{t}\right]+{b}_{i}\right)$$

(5)

The forget gate determines whether to preserve or delete the stored data according to the previous HL output and the present-time input, as specified in Eq. (6).

$${f}_{t}=\sigma\left({W}_{f}\cdot\left[{h}_{t-1},{x}_{t}\right]+{b}_{f}\right)$$

(6)

The preceding output \({h}_{t-1}\) of the LSTM HL cell and the present input \({x}_{t}\) determine the value of the present candidate memory cell \({\overline{C}}_{t}\). The symbol \(\bullet\) refers to element-wise multiplication. The memory cell state \({C}_{t}\) is obtained by combining the present candidate cell \({\overline{C}}_{t}\) and the previous state \({C}_{t-1}\) through the input and forget gates. These values are given in Eq. (7) and Eq. (8).

$$\:{\overline{C}}_{\text{t}}=tanh\left({W}_{C}\cdot\:\left[{h}_{t-1},\:{x}_{t}\right]+{b}_{C}\right)$$

(7)

$${C}_{t}={f}_{t}\bullet{C}_{t-1}+{i}_{t}\bullet{\overline{C}}_{t}$$

(8)

The output gate \({o}_{t}\) is defined in Eq. (9) and is used to control how much of the cell state is exposed. The final output of the cell is \({h}_{t}\), given by Eq. (10).

$$\:{o}_{t}={\upsigma\:}\left({W}_{o}\cdot\:\left[{h}_{t-1},\:{x}_{t}\right]+{b}_{o}\right)$$

(9)

$$\:{h}_{t}={\text{o}}_{t}\bullet\:tanh\left({C}_{t}\right)$$

(10)
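The gate computations in Eqs. (5) to (10) can be traced in a small plain-Python sketch of a single LSTM step. The parameter names (`W_i`, `b_i`, and so on), the dictionary layout, and the toy sizes are assumptions for illustration; they are not taken from the study's implementation.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def affine(weights, bias, vector):
    """Compute W . v + b, where W is a list of rows."""
    return [sum(w * a for w, a in zip(row, vector)) + b for row, b in zip(weights, bias)]


def lstm_step(x_t, h_prev, c_prev, params):
    """One LSTM time step; returns the new hidden state h_t and cell state C_t."""
    concat = h_prev + x_t  # the concatenation [h_{t-1}, x_t]
    i_t = [sigmoid(a) for a in affine(params["W_i"], params["b_i"], concat)]      # Eq. (5)
    f_t = [sigmoid(a) for a in affine(params["W_f"], params["b_f"], concat)]      # Eq. (6)
    c_hat = [math.tanh(a) for a in affine(params["W_C"], params["b_C"], concat)]  # Eq. (7)
    c_t = [f * c + i * g for f, c, i, g in zip(f_t, c_prev, i_t, c_hat)]          # Eq. (8)
    o_t = [sigmoid(a) for a in affine(params["W_o"], params["b_o"], concat)]      # Eq. (9)
    h_t = [o * math.tanh(c) for o, c in zip(o_t, c_t)]                            # Eq. (10)
    return h_t, c_t


# Toy setup: hidden size 2, input size 1, every weight set to 0.1 (hypothetical values).
hidden, inputs = 2, 1
demo_params = {}
for gate in ("i", "f", "C", "o"):
    demo_params["W_" + gate] = [[0.1] * (hidden + inputs) for _ in range(hidden)]
    demo_params["b_" + gate] = [0.0] * hidden

h, c = lstm_step([1.0], [0.0, 0.0], [0.0, 0.0], demo_params)
```

Calling `lstm_step` repeatedly, feeding each returned `h` and `c` into the next call, processes a whole sequence one time step at a time.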

The BiLSTM consists of a forward and a backward LSTM network. Both the forward and the backward LSTM HLs are responsible for extracting features: the forward layer extracts features in the forward direction, and the backward layer extracts them in the reverse direction. The Bi-LSTM approach is applied to consider the effects of all features before and after each point in the sequence data. Therefore, more comprehensive feature information is obtained. Bi‐LSTM’s present state comprises both the forward and the backward outputs, which are specified in Eq. (11), Eq. (12), and Eq. (13).

$$h_{t}^{forward}=LSTM^{forward}\left({h}_{t-1},{x}_{t},{C}_{t-1}\right)$$

(11)

$$h_{t}^{backward}=LSTM^{backward}\left({h}_{t-1},{x}_{t},{C}_{t-1}\right)$$

(12)

$${H}_{t}=\left[h_{t}^{forward},h_{t}^{backward}\right]$$

(13)
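The pairing of forward and backward outputs can be sketched with a scalar LSTM run over a sequence in both directions. This is a simplified illustration: the scalar state, the parameter layout, and the function names are assumptions, and the backward pass is realized here by feeding the reversed sequence to a second LSTM and flipping its outputs back into time order.

```python
import math


def sigmoid(v):
    return 1.0 / (1.0 + math.exp(-v))


def scalar_lstm_step(x_t, h_prev, c_prev, p):
    """One LSTM step with scalar state; p maps a gate name to (w_h, w_x, b)."""
    def gate(name, activation):
        w_h, w_x, b = p[name]
        return activation(w_h * h_prev + w_x * x_t + b)

    i_t = gate("i", sigmoid)
    f_t = gate("f", sigmoid)
    c_hat = gate("c", math.tanh)
    o_t = gate("o", sigmoid)
    c_t = f_t * c_prev + i_t * c_hat
    return o_t * math.tanh(c_t), c_t


def run_lstm(sequence, p):
    """Run the scalar LSTM over a sequence and collect the hidden state at each step."""
    h, c, outputs = 0.0, 0.0, []
    for x_t in sequence:
        h, c = scalar_lstm_step(x_t, h, c, p)
        outputs.append(h)
    return outputs


def bilstm(sequence, p_forward, p_backward):
    """Pair the forward and backward hidden states at every time step."""
    forward = run_lstm(sequence, p_forward)
    # Process the reversed sequence, then flip the outputs back to align time steps.
    backward = run_lstm(sequence[::-1], p_backward)[::-1]
    return list(zip(forward, backward))


# Hypothetical parameters, shared by both directions only to keep the example short.
params = {name: (0.5, 1.0, 0.0) for name in ("i", "f", "c", "o")}
states = bilstm([1.0, 0.0, -1.0], params, params)
```

Each entry of `states` combines what the model has seen before a time step with what follows it, which is the property the text relies on for interpreting gesture transitions.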

Hyperparameter tuning using the HHO model

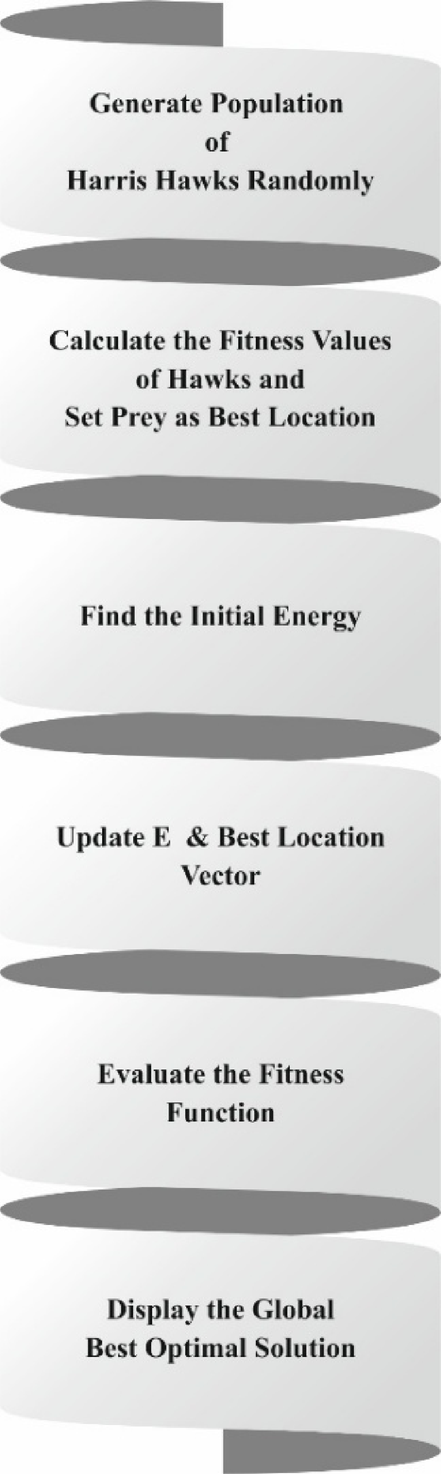

The HHODLM-SLR methodology utilizes the HHO model to accomplish the hyperparameter tuning process [41]. This model is employed due to its robust global search capability and adaptive behaviour inspired by the cooperative hunting strategy of Harris hawks. Unlike grid or random search, which can be time-consuming and inefficient, HHO dynamically balances exploration and exploitation to find optimal hyperparameter values. It avoids local minima and accelerates convergence, enhancing the performance and stability of the model. Compared to other metaheuristics, such as PSO or GA, HHO presents faster convergence and fewer tunable parameters. Its bio-inspired nature makes it appropriate for complex, high-dimensional optimization tasks in DL models. Figure 5 depicts the flow of the HHO methodology.

The HHO model is a bio-inspired technique based on the hunting behaviour of Harris hawks. It is modelled through exploration and exploitation phases. In the exploration phase, the hawks track and detect prey with their keen eyes, perching at random positions and waiting for prey to appear. Assuming an equal chance for each perching strategy, a hawk either perches based on the positions of other family members and the prey (condition \(q<0.5\)) or lands at a random position in the trees (\(q\ge 0.5\)), as given by Eq. (14).

$$X\left(t+1\right)=\begin{cases}{X}_{rnd}\left(t\right)-{r}_{1}\left|{X}_{rnd}\left(t\right)-2{r}_{2}X\left(t\right)\right|, & q\ge 0.5\\ \left({X}_{rabb}\left(t\right)-{X}_{m}\left(t\right)\right)-{r}_{3}\left(LB+{r}_{4}\left(UB-LB\right)\right), & q<0.5\end{cases}$$

(14)

The average location of the hawks, \({X}_{m}\left(t\right)\), is computed by Eq. (15).

$$\:{X}_{m}\left(t\right)=\frac{1}{N}{\sum\:}_{i=1}^{N}{X}_{i}\left(t\right)$$

(15)
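A minimal sketch of the exploration update in Eqs. (14) and (15) follows. The text does not define every symbol, so the sketch assumes the usual HHO reading: the \(r\) values and \(q\) are uniform random numbers in [0, 1), the random hawk is drawn from the current population, `rabbit` is the best position found so far, and `lower` and `upper` are scalar search bounds. The final clamping step is a common practical addition, not part of Eq. (14).

```python
import random


def mean_position(population):
    """Eq. (15): component-wise average of all hawk positions."""
    n = len(population)
    dims = len(population[0])
    return [sum(hawk[d] for hawk in population) / n for d in range(dims)]


def exploration_step(hawk, population, rabbit, lower, upper, rng):
    """Eq. (14): perch relative to a random hawk, or to the rabbit and the flock mean."""
    q, r1, r2, r3, r4 = (rng.random() for _ in range(5))
    if q >= 0.5:
        rand_hawk = rng.choice(population)
        new = [xr - r1 * abs(xr - 2 * r2 * x) for xr, x in zip(rand_hawk, hawk)]
    else:
        x_m = mean_position(population)
        offset = r3 * (lower + r4 * (upper - lower))
        new = [(xb - xm) - offset for xb, xm in zip(rabbit, x_m)]
    # Keep the new position inside the search bounds (assumed practical step).
    return [min(max(v, lower), upper) for v in new]


rng = random.Random(0)  # seeded only to make the example repeatable
population = [[0.0, 0.0], [2.0, 4.0], [-1.0, 3.0]]
rabbit = [1.0, 1.0]     # hypothetical best position so far
candidate = exploration_step(population[0], population, rabbit, -5.0, 5.0, rng)
```

In hyperparameter tuning, each position vector would encode one candidate configuration, for example a learning rate and a hidden size scaled into the search bounds.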

The transition from exploration to exploitation is governed by the energy the prey loses while escaping, which is modelled by Eq. (16).

$$\:E=2{E}_{0}\left(1-\frac{t}{T}\right)$$

(16)

The parameter \(E\) signifies the prey’s escape energy, \(t\) is the current iteration, and \(T\) represents the maximum number of iterations. \({E}_{0}\) denotes a random parameter that varies within \(\left(-1,1\right)\) at every iteration.
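The decay of the escape energy in Eq. (16) is easy to check numerically. The sketch below is illustrative only; the function name and the seeded generator are assumptions made for the example.

```python
import random


def escape_energy(t, max_iter, rng):
    """Eq. (16): E = 2 * E0 * (1 - t / T), with E0 drawn uniformly from (-1, 1)."""
    e0 = rng.uniform(-1.0, 1.0)
    return 2.0 * e0 * (1.0 - t / max_iter)


rng = random.Random(7)  # seeded only to make the example repeatable
max_iter = 10
for t in range(max_iter + 1):
    energy = escape_energy(t, max_iter, rng)
    bound = 2.0 * (1.0 - t / max_iter)  # |E| can never exceed this envelope
    print(f"t={t:2d}  E={energy:+.3f}  |E| <= {bound:.1f}")
```

The envelope shrinks linearly from 2 to 0, so large values of \(\left|E\right|\) become impossible late in the run and the search is pushed towards exploitation.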

The exploitation phase is divided into soft and hard besieges. The conditions \(\left|E\right|\ge 0.5\) and \(r\ge 0.5\) should be met in a soft besiege. The prey tries to escape through random jumps but eventually fails.

$$X\left(t+1\right)=\Delta X\left(t\right)-E\left|J{X}_{rabb}\left(t\right)-X\left(t\right)\right|,\quad \Delta X\left(t\right)={X}_{rabb}\left(t\right)-X\left(t\right)$$

(17)

The conditions \(\left|E\right|<0.5\) and \(r\ge 0.5\) should be met in a hard besiege. The prey attempts to escape, and the position is updated according to Eq. (18).

$$X\left(t+1\right)={X}_{rabb}\left(t\right)-E\left|\Delta X\left(t\right)\right|$$

(18)
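The two besiege updates in Eqs. (17) and (18) can be sketched as follows. The text does not define \(J\); this sketch assumes the usual HHO convention of a random jump strength \(J=2\left(1-{r}_{5}\right)\), and it covers only the \(r\ge 0.5\) case described above. All function names are hypothetical.

```python
import random


def soft_besiege(hawk, rabbit, energy, jump):
    """Eq. (17): X(t+1) = dX - E * |J * X_rabb - X|, with dX = X_rabb - X."""
    return [(xb - x) - energy * abs(jump * xb - x) for xb, x in zip(rabbit, hawk)]


def hard_besiege(hawk, rabbit, energy):
    """Eq. (18): X(t+1) = X_rabb - E * |dX|, with dX = X_rabb - X."""
    return [xb - energy * abs(xb - x) for xb, x in zip(rabbit, hawk)]


def besiege_step(hawk, rabbit, energy, rng):
    """Choose the besiege type from |E| for the r >= 0.5 case."""
    if abs(energy) >= 0.5:
        jump = 2.0 * (1.0 - rng.random())  # assumed jump strength J, in (0, 2]
        return soft_besiege(hawk, rabbit, energy, jump)
    return hard_besiege(hawk, rabbit, energy)


rng = random.Random(3)  # seeded only to make the example repeatable
hawk, rabbit = [0.0, 0.0], [1.0, 2.0]
soft = besiege_step(hawk, rabbit, 0.8, rng)   # |E| >= 0.5, soft besiege
hard = besiege_step(hawk, rabbit, 0.25, rng)  # |E| < 0.5, hard besiege
```

As the energy approaches zero, the hard besiege places the hawk almost exactly on the rabbit's position, which is how the search converges on the best candidate found.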

The HHO model derives a fitness function (FF) to achieve improved classification performance. It defines a positive number to represent the quality of a candidate solution. The minimization of the classifier error rate is taken as the FF. Its mathematical formulation is given in Eq. (19).

$$\begin{aligned} \text{fitness}\left({x}_{i}\right) &= \text{ClassifierErrorRate}\left({x}_{i}\right)\\ &= \frac{\text{number of misclassified samples}}{\text{total number of samples}}\times 100 \end{aligned}$$

(19)
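Eq. (19) amounts to a percentage of wrong predictions, which the optimizer then tries to minimize. A small sketch, with hypothetical labels and candidate names chosen only for illustration:

```python
def classifier_error_rate(y_true, y_pred):
    """Eq. (19): percentage of misclassified samples."""
    if not y_true or len(y_true) != len(y_pred):
        raise ValueError("label lists must be non-empty and of equal length")
    wrong = sum(1 for truth, pred in zip(y_true, y_pred) if truth != pred)
    return wrong / len(y_true) * 100.0


# Hypothetical validation labels and predictions from two candidate configurations.
labels = ["A", "B", "C", "A"]
predictions = {
    "candidate_1": ["A", "B", "A", "A"],  # one mistake
    "candidate_2": ["B", "B", "A", "C"],  # three mistakes
}
fitness = {name: classifier_error_rate(labels, preds) for name, preds in predictions.items()}
best = min(fitness, key=fitness.get)  # lower fitness means a better candidate
```

In the tuning loop, each hawk position would be decoded into hyperparameters, the classifier evaluated on held-out data, and this error rate used as the value that HHO minimizes.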


Westpac to Hire Hundreds of Bankers in Business Lending Push

Westpac Banking Corp. plans to hire 350 bankers and turn more to artificial intelligence as it ramps up business lending.

