AI Research

Are you leading with intelligence, or artificial intelligence? 7 skills that matter now

There was a time when writing an email took thought, care, and a quiet few minutes, when messages were typed rather than copied and presentations were built by hand, slide by slide. Then came artificial intelligence, the chief protagonist reshaping the corporate workspace. Job descriptions began to glorify candidates with “AI skills.” But are they truly AI-ready? A shrug and a quiet shake of the head often answer the question.

It’s no longer the era when a handful of AI workshops or revamped dashboards were considered enough to future-proof an organisation. That illusion has faded. As AI shifts from novelty to necessity, leaders are waking up to a sobering truth: the transformation ahead is not just technological, but deeply human.

According to the KNOLSKAPE L&D Predictions Report 2025, 75% of companies recognise AI readiness as mission critical, yet most rate their preparedness a modest 5.7 out of 10. Even with a growing AI culture within organisations, a stark gap remains between aspiration and execution. This chasm can’t be bridged by tech stacks alone; it calls for a new calibre of leadership: agile, ethical, data-literate, and relentlessly committed to learning.

Honest self-assessment and a culture of learning

“Honesty is the best policy.” This age-old adage from school classrooms deserves a seat in the corporate world as well. The biggest misstep in AI adoption is the assumption that an organisation is well-equipped. Readiness is not a badge or a trophy to be achieved; it is a mindset to be nurtured. Encouragingly, 85% of organisations in the Asia-Pacific region have made continuous learning a top priority, a trend outpacing Europe and the Americas, according to the report. But real change arrives only when learning becomes ingrained in the daily routine, not reserved for quarterly retreats.

Ethical stewardship of AI

Speed without conscience is a recipe for disaster. In the rush to capitalise on AI, many leaders sacrifice ethics at the altar of profitability. While 77% of consumers demand strong accountability in AI use, only 11% of leaders prioritise ethical considerations over performance or speed.

This leadership blind spot must close, not just because the public demands it, but because long-term trust and responsible innovation depend on it.

Owning problems, not blaming technology

Treating artificial intelligence or emerging technologies as scapegoats helps no one. AI failures aren’t typically caused by faulty algorithms; they stem from flawed human judgment. Yet, when initiatives falter, blame often shifts to the system rather than the strategy.

Leaders must cultivate accountable mindsets. Ownership of both triumphs and missteps signals maturity, and it’s only through this lens that real growth, both organisational and personal, is possible.

Decisions driven by purpose, not panic

FOMO has quietly infiltrated boardrooms. Leaders, eager not to be left behind, often mimic competitors’ AI strategies without clear objectives. But imitation is not innovation.

Strategic decision-making rooted in purpose is what separates a meaningful AI programme from a costly detour. In APAC, 42% of leaders have begun prioritising contextual, data-driven decisions tailored to their own organisational DNA, a model worth emulating globally.

Innovation grounded in real need

The allure of flashy tech is powerful, and also wasteful. With 21% of companies citing budget constraints as their biggest innovation barrier, the margin for missteps is slim.

Effective leaders must champion focused innovation, investing in tools that solve real problems, not just the latest buzz. Innovation isn’t about being first; it’s about being useful.

Data literacy with strategic insight

In an era where artificial intelligence holds the reins, professionals need a deep grounding in data literacy. There is no shortage of data in the modern enterprise; the real challenge is digging the right information out of it. Many leaders rank the importance of data analytics high (8.5/10), but satisfaction with its application lags behind at 6/10.

Bridging this gap requires more than hiring data scientists. Leaders themselves must develop the fluency to interpret insights, challenge assumptions, and guide decisions with clarity. In a world flooded with information, strategic analytics becomes a leadership superpower.

Sustained governance beyond deployment

AI is not human, and even humans need supervision. Artificial intelligence is not a one-off rollout; it is a living system that demands oversight. Without sustained governance, even the best AI models degrade into risk and irrelevance.

Leaders must build enduring oversight structures: ethics boards, feedback mechanisms, and regular audits for bias and compliance. True success lies not in launching AI, but in managing it with discipline and foresight long after the hype fades.

Leadership in the age of accountability

Mastering these skills isn’t about ticking boxes on a corporate checklist. It’s about reshaping the very fabric of leadership. The L&D Predictions Report 2025, which surveyed 119 organisations across industries and geographies, makes it clear: intent is high, but impact remains low. To cross that threshold, leaders must become learners again.

The future of AI isn’t just about smarter machines; it’s about wiser people. Leaders who take the time to understand themselves, make thoughtful choices, and lead with responsibility won’t just keep up with change. They’ll shape it, building organisations that are not only ready for the future, but built to last.


AI Tool Flags Predatory Journals, Building a Firewall for Science

Summary: A new AI system developed by computer scientists automatically screens open-access journals to identify potentially predatory publications. These journals often charge high fees to publish without proper peer review, undermining scientific credibility.

The AI analyzed over 15,000 journals and flagged more than 1,000 as questionable, offering researchers a scalable way to spot risks. While the system isn’t perfect, it serves as a crucial first filter, with human experts making the final calls.

Key Facts

- Predatory Publishing: Journals exploit researchers by charging fees without quality peer review.

- AI Screening: The system flagged over 1,000 suspicious journals out of 15,200 analyzed.

- Firewall for Science: Helps preserve trust in research by protecting against bad data.

Source: University of Colorado

A team of computer scientists led by the University of Colorado Boulder has developed a new artificial intelligence platform that automatically seeks out “questionable” scientific journals.

The study, published Aug. 27 in the journal “Science Advances,” tackles an alarming trend in the world of research.

Daniel Acuña, lead author of the study and associate professor in the Department of Computer Science, gets a reminder of that trend several times a week in his email inbox. The spam messages come from people who purport to be editors at scientific journals, usually ones Acuña has never heard of, and offer to publish his papers for a hefty fee.

Such publications are sometimes referred to as “predatory” journals. They target scientists, convincing them to pay hundreds or even thousands of dollars to publish their research without proper vetting.

“There has been a growing effort among scientists and organizations to vet these journals,” Acuña said. “But it’s like whack-a-mole. You catch one, and then another appears, usually from the same company. They just create a new website and come up with a new name.”

His group’s new AI tool automatically screens scientific journals, evaluating their websites and other online data for certain criteria: Do the journals have an editorial board featuring established researchers? Do their websites contain a lot of grammatical errors?

Acuña emphasizes that the tool isn’t perfect. Ultimately, he thinks human experts, not machines, should make the final call on whether a journal is reputable.

But in an era when prominent figures are questioning the legitimacy of science, stopping the spread of questionable publications has become more important than ever before, he said.

“In science, you don’t start from scratch. You build on top of the research of others,” Acuña said. “So if the foundation of that tower crumbles, then the entire thing collapses.”

The shake down

When scientists submit a new study to a reputable publication, that study usually undergoes a practice called peer review. Outside experts read the study and evaluate it for quality—or, at least, that’s the goal.

A growing number of companies have sought to circumvent that process to turn a profit. In 2009, Jeffrey Beall, a librarian at CU Denver, coined the phrase “predatory” journals to describe these publications.

Often, they target researchers outside of the United States and Europe, such as in China, India and Iran—countries where scientific institutions may be young, and the pressure and incentives for researchers to publish are high.

“They will say, ‘If you pay $500 or $1,000, we will review your paper,’” Acuña said. “In reality, they don’t provide any service. They just take the PDF and post it on their website.”

A few different groups have sought to curb the practice. Among them is a nonprofit organization called the Directory of Open Access Journals (DOAJ).

Since 2003, volunteers at the DOAJ have flagged thousands of journals as suspicious based on six criteria. (Reputable publications, for example, tend to include a detailed description of their peer review policies on their websites.)

But keeping pace with the spread of those publications has been daunting for humans.

To speed up the process, Acuña and his colleagues turned to AI. The team trained its system using the DOAJ’s data, then asked the AI to sift through a list of nearly 15,200 open-access journals on the internet.

Among those journals, the AI initially flagged more than 1,400 as potentially problematic.

Acuña and his colleagues asked human experts to review a subset of the suspicious journals. The AI made mistakes, according to the humans, flagging an estimated 350 publications as questionable when they were likely legitimate. That still left more than 1,000 journals that the researchers identified as questionable.

“I think this should be used as a helper to prescreen large numbers of journals,” he said. “But human professionals should do the final analysis.”
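As a rough check on the figures above, the arithmetic can be sketched in Python. The counts are the rounded values quoted in the article ("more than 1,400" initially flagged, "an estimated 350" judged likely legitimate), so the outputs are approximations, and the variable names are illustrative only.

```python
# Rounded counts quoted in the article; the real values are "more than" / "estimated".
journals_screened = 15_200
initially_flagged = 1_400
likely_false_positives = 350

# Journals still considered questionable after the human review estimate.
still_questionable = initially_flagged - likely_false_positives

# Share of the AI's flags that survived review (a rough precision estimate).
flag_precision = still_questionable / initially_flagged

# Share of all screened journals that remain flagged.
flagged_share = still_questionable / journals_screened

print(still_questionable)        # 1050
print(round(flag_precision, 2))  # 0.75
print(round(flagged_share, 3))   # 0.069
```

On these rounded numbers, roughly three in four flags held up, which fits Acuña’s framing of the tool as a prescreening helper rather than a final judge.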

A firewall for science

Acuña added that the researchers didn’t want their system to be a “black box” like some other AI platforms.

“With ChatGPT, for example, you often don’t understand why it’s suggesting something,” Acuña said. “We tried to make ours as interpretable as possible.”

The team discovered, for example, that questionable journals published an unusually high number of articles. They also included authors with a larger number of affiliations than more legitimate journals, and authors who cited their own research, rather than the research of other scientists, to an unusually high level.

The new AI system isn’t publicly accessible, but the researchers hope to make it available to universities and publishing companies soon. Acuña sees the tool as one way that researchers can protect their fields from bad data—what he calls a “firewall for science.”

“As a computer scientist, I often give the example of when a new smartphone comes out,” he said.

“We know the phone’s software will have flaws, and we expect bug fixes to come in the future. We should probably do the same with science.”

About this AI and science research news

Author: Daniel Strain

Source: University of Colorado

Contact: Daniel Strain – University of Colorado

Image: The image is credited to Neuroscience News

Original Research: Open access.

“Estimating the predictability of questionable open-access journals” by Daniel Acuña et al. Science Advances

Abstract

Estimating the predictability of questionable open-access journals

Questionable journals threaten global research integrity, yet manual vetting can be slow and inflexible.

Here, we explore the potential of artificial intelligence (AI) to systematically identify such venues by analyzing website design, content, and publication metadata.

Evaluated against extensive human-annotated datasets, our method achieves practical accuracy and uncovers previously overlooked indicators of journal legitimacy.

By adjusting the decision threshold, our method can prioritize either comprehensive screening or precise, low-noise identification.

At a balanced threshold, we flag over 1000 suspect journals, which collectively publish hundreds of thousands of articles, receive millions of citations, acknowledge funding from major agencies, and attract authors from developing countries.

Error analysis reveals challenges involving discontinued titles, book series misclassified as journals, and small society outlets with limited online presence, which are issues addressable with improved data quality.

Our findings demonstrate AI’s potential for scalable integrity checks, while also highlighting the need to pair automated triage with expert review.
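The abstract’s point about adjusting the decision threshold can be illustrated with a minimal Python sketch. The scores and labels below are made up for illustration and are not data from the study; the function name `precision_recall` is likewise hypothetical. The sketch only shows the general trade-off: a low threshold catches more questionable journals (high recall), while a high threshold produces fewer false alarms (high precision).

```python
def precision_recall(scores, labels, threshold):
    """Flag every item whose score is at or above the threshold, then
    compare the flags with the true labels (1 = questionable)."""
    flagged = [s >= threshold for s in scores]
    tp = sum(1 for f, y in zip(flagged, labels) if f and y)
    fp = sum(1 for f, y in zip(flagged, labels) if f and not y)
    fn = sum(1 for f, y in zip(flagged, labels) if not f and y)
    precision = tp / (tp + fp) if tp + fp else 1.0
    recall = tp / (tp + fn) if tp + fn else 1.0
    return precision, recall

# Hypothetical model scores and human labels for eight journals.
scores = [0.95, 0.90, 0.80, 0.65, 0.60, 0.40, 0.30, 0.10]
labels = [1, 1, 0, 1, 0, 1, 0, 0]

# Low threshold: comprehensive screening (every questionable journal is caught).
print(precision_recall(scores, labels, 0.30))  # (0.5714285714285714, 1.0)

# High threshold: precise, low-noise identification (no false alarms).
print(precision_recall(scores, labels, 0.85))  # (1.0, 0.5)
```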


Researchers Unlock 210% Performance Gains In Machine Learning With Spin Glass Feature Mapping

Quantum machine learning seeks to harness the power of quantum mechanics to improve artificial intelligence, and a new technique developed by Anton Simen, Carlos Flores-Garrigos, and Murilo Henrique De Oliveira, all from Kipu Quantum GmbH, alongside Gabriel Dario Alvarado Barrios, Juan F. R. Hernández, and Qi Zhang, represents a significant step towards realising that potential. The researchers propose a novel feature mapping technique that utilises the complex dynamics of a quantum spin glass to identify subtle patterns within data, achieving a performance boost in machine learning models. This method encodes data into a disordered quantum system, then extracts meaningful features by observing its evolution, and importantly, the team demonstrates performance gains of up to 210% on high-dimensional datasets used in areas like drug discovery and medical diagnostics. This work marks one of the first demonstrations of quantum machine learning achieving a clear advantage over classical methods, potentially bridging the gap between theoretical quantum supremacy and practical, real-world applications.

The core idea is to leverage the quantum dynamics of analog quantum annealers to create enhanced feature spaces for classical machine learning algorithms, with the goal of achieving a quantum advantage in performance. Key findings include a method to map classical data into a quantum feature space, allowing classical machine learning algorithms to operate on richer data. Researchers found that operating the annealer in the coherent regime, with annealing times of 10 to 40 nanoseconds, yields the best and most stable performance, as longer times lead to performance degradation.

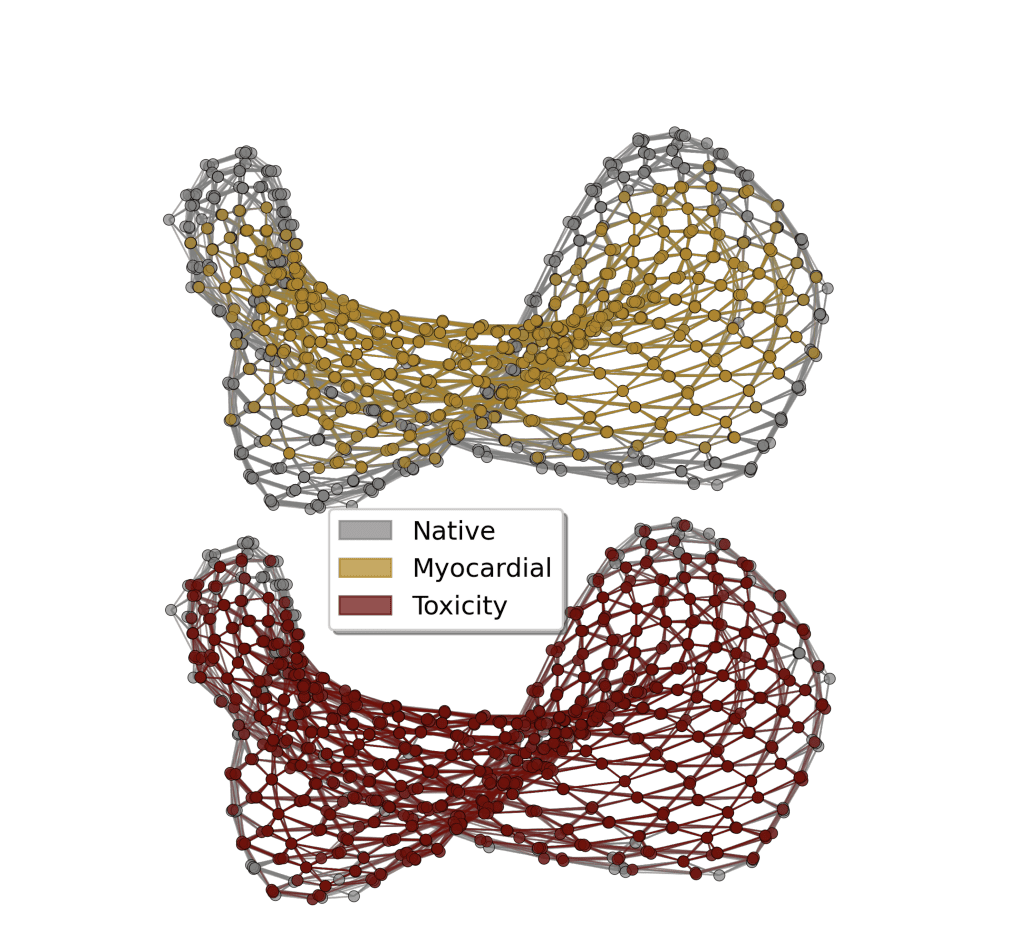

The method was benchmarked on high-dimensional datasets drawn from areas like drug discovery and medical diagnostics, covering toxicity prediction, myocardial infarction complications, and drug-induced autoimmunity, with results suggesting performance gains over purely classical methods. Kipu Quantum has launched an industrial quantum machine learning service based on these findings, which it claims achieves quantum advantage.

The methodology involves four steps: encoding data into qubits, programming the annealer to evolve according to its quantum dynamics, extracting features from the final qubit state, and feeding those features into classical machine learning algorithms. Key concepts include quantum annealing, analog quantum computing, feature engineering, quantum feature maps, and the coherent regime. The team encoded information from each dataset into a disordered quantum system, then used a process called a “quantum quench” to generate complex feature representations. Experiments reveal that machine learning models benefit most from features extracted during the fast, coherent stage of this quantum process, particularly when the system is near a critical dynamic point.
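The encode, evolve, and measure steps described above can be illustrated with a toy classical simulation in plain Python. This is a minimal sketch under stated assumptions, not the researchers’ method or hardware protocol: the function name `quench_features`, the choice to encode each data value as a local field, the coupling values, the transverse-field strength `gamma`, and the Trotterized time stepping are all assumptions made for illustration. The simulation only scales to a handful of qubits.

```python
import cmath
import math

def quench_features(x, couplings, gamma=1.0, total_time=1.0, steps=200):
    """Toy spin-glass quench: encode x as local fields, evolve the state
    |+...+> under H = sum_i x_i Z_i + sum_ij J_ij Z_i Z_j + gamma * sum_i X_i,
    and return the expectation value <Z_i> of each qubit as a feature."""
    n = len(x)
    dim = 1 << n

    def spin(basis_state, i):
        # Z eigenvalue (+1 or -1) of qubit i in a computational basis state.
        return 1 - 2 * ((basis_state >> i) & 1)

    # Energy of each basis state under the diagonal (Z-only) part of H.
    energy = []
    for b in range(dim):
        e = sum(x[i] * spin(b, i) for i in range(n))
        e += sum(j * spin(b, p) * spin(b, q) for (p, q), j in couplings.items())
        energy.append(e)

    # Start in the uniform superposition over all basis states.
    state = [complex(1.0 / math.sqrt(dim), 0.0)] * dim
    dt = total_time / steps
    cos_t, sin_t = math.cos(gamma * dt), math.sin(gamma * dt)

    for _ in range(steps):
        # Trotter step 1: phases from the diagonal part of H.
        state = [a * cmath.exp(-1j * energy[b] * dt) for b, a in enumerate(state)]
        # Trotter step 2: exp(-i * gamma * dt * X) applied to each qubit in turn.
        for i in range(n):
            mask = 1 << i
            new_state = state[:]
            for b in range(dim):
                if not b & mask:
                    a0, a1 = state[b], state[b | mask]
                    new_state[b] = cos_t * a0 - 1j * sin_t * a1
                    new_state[b | mask] = cos_t * a1 - 1j * sin_t * a0
            state = new_state

    probs = [abs(a) ** 2 for a in state]
    return [sum(p * spin(b, i) for b, p in enumerate(probs)) for i in range(n)]

# Hypothetical three-feature data point and random-looking couplings.
x = [0.5, -0.3, 0.8]
couplings = {(0, 1): 0.7, (1, 2): -0.4, (0, 2): 0.2}
features = quench_features(x, couplings)
print([round(f, 3) for f in features])
```

In a full pipeline, the returned feature vectors would be passed to a classical model such as a support vector machine or random forest, as the article describes.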

Results demonstrate a substantial performance boost, with the quantum-enhanced models achieving up to a 210% improvement in certain metrics compared to state-of-the-art classical machine learning algorithms. Comparative evaluations consistently show gains in precision, recall, and area under the curve. The technique was applied to molecular toxicity classification, diagnosis of heart attack complications, and detection of drug-induced autoimmune responses, using algorithms including support vector machines, random forests, and gradient boosting.

Peak classification performance was observed at annealing times of 20 to 30 nanoseconds, a regime where quantum entanglement is maximized. Longer annealing times degrade performance due to decoherence.

Further research is needed to explore more complex quantum feature encodings, adaptive annealing schedules, and broader problem domains. Future work will also investigate implementation on digital quantum computers and explore alternative analog quantum hardware platforms, such as neutral-atom quantum systems, to expand the scope and impact of this method.


Prediction: This Artificial Intelligence (AI) Semiconductor Stock Will Join Nvidia, Microsoft, Apple, Alphabet, and Amazon in the $2 Trillion Club by 2028. (Hint: Not Broadcom)

This company is growing quickly, and its stock is a bargain at the current price.

Big tech companies are set to spend $375 billion on artificial intelligence (AI) infrastructure this year, according to estimates from analysts at UBS. That number will climb to $500 billion next year.

The biggest expense item in building out AI data centers is semiconductors. Nvidia (NVDA) has been by far the biggest beneficiary of that spend so far. Its GPUs offer best-in-class capabilities for general AI training and inference. Other AI accelerator chipmakers have also seen strong sales growth, including Broadcom (AVGO), which makes custom AI chips as well as networking chips, which ensure data moves efficiently from one server to another, keeping downtime to a minimum.

Broadcom’s stock price has increased more than fivefold since the start of 2023, and the company now sports a market cap of $1.4 trillion. Another year of spectacular growth could easily place it in the $2 trillion club. But another semiconductor stock looks like a more likely candidate to reach that vaunted level, joining Nvidia and the four other members of the club by 2028.

Image source: Getty Images.

Is Broadcom a $2 trillion company?

Broadcom is a massive company with operations spanning hardware and software, but its AI chips business is currently steering the ship.

To that end, AI revenue climbed 46% year over year last quarter to reach $4.4 billion. Management expects the current quarter to produce $5.1 billion in AI semiconductor revenue, accelerating growth to roughly 60%. AI-related revenue now accounts for roughly 30% of Broadcom’s sales, and that’s set to keep climbing over the next few years.

Broadcom’s acquisition of VMware last year is another growth driver. The software company is now fully integrated into Broadcom’s larger operations, and it’s seen strong success in upselling customers to the VMware Cloud Foundation, enabling enterprises to run their own private clouds. Over 87% of its customers have transitioned to the new subscription, resulting in double-digit growth in annual recurring revenue.

But Broadcom shares are extremely expensive. The stock garners a forward P/E ratio of 45. While its AI chip sales are growing quickly and it’s seeing strong margin improvement from VMware, it’s important not to lose sight of how broad a company Broadcom is. Despite the stellar growth in those two businesses, the company is still only growing its top line at about 20% year over year. Investors should expect only incremental margin improvements going forward as it scales the AI accelerator business. That means the business is set up for strong earnings growth, but not enough to justify its 45 times earnings multiple.

Another semiconductor stock trades at a much more reasonable multiple, and is growing just as fast.

The semiconductor giant poised to join the $2 trillion club by 2028

Both Broadcom and Nvidia rely on another company to ensure they can create the most advanced semiconductors in the world for AI training and inference. That company is Taiwan Semiconductor Manufacturing (TSM), which actually prints and packages both companies’ designs. Almost every company designing leading-edge chips relies on TSMC for its technological capabilities. As a result, its market share of semiconductor manufacturing has climbed to more than two-thirds.

TSMC benefits from a virtuous cycle, ensuring it maintains and grows its massive market share. Its technology lead helps it win big contracts from companies like Nvidia and Broadcom. That gives it the capital to invest in expanding capacity and research and development for its next-generation process. As a result, it maintains its technology lead while offering enough capacity to meet the growing demand for manufacturing.

TSMC will reportedly charge a 66% premium per silicon wafer for its leading-edge process node, dubbed N2, over the previous generation (N3). That’s a much bigger step-up in price than it’s historically managed, but the demand for the process is strong as companies are willing to spend whatever it takes to access the next bump in power and energy efficiency. While TSMC typically experiences a significant drop-off in gross margin as it ramps up a new expensive node with lower initial yields, its current pricing should help it maintain its margins for years to come as it eventually transitions to an even more advanced process next year.

Management expects AI-related revenue to average mid-40% growth per year from 2024 through 2029. While AI chips are still a relatively small part of TSMC’s business, that should produce overall revenue growth of about 20% for the business. Its ability to maintain a strong gross margin as it ramps up the next two manufacturing processes should allow it to produce operating earnings growth exceeding that 20% mark.

TSMC’s stock trades at a much more reasonable earnings multiple of 24 times expectations. Considering the business could generate earnings growth in the low 20% range, that’s a great price for the stock. If it can maintain that earnings multiple through 2028 while growing earnings at about 20% per year, the stock will be worth well over $2 trillion at that point.
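The compounding behind that claim can be sketched in Python. The article does not state TSMC’s current market cap, so this sketch works backward instead: it computes the starting market cap that would reach $2 trillion if earnings grow at 20% per year and the earnings multiple stays constant. The three-year horizon and the function names are assumptions made for illustration.

```python
def required_starting_cap(target_cap, annual_growth, years):
    """Market cap needed today to reach target_cap, assuming earnings
    compound at annual_growth and the earnings multiple stays constant."""
    return target_cap / (1 + annual_growth) ** years

def projected_cap(starting_cap, annual_growth, years):
    """Market cap after compounding growth at a constant earnings multiple."""
    return starting_cap * (1 + annual_growth) ** years

# Assumed horizon: three years of 20% growth to reach $2 trillion.
needed = required_starting_cap(2e12, 0.20, 3)
print(f"${needed / 1e12:.2f} trillion")  # $1.16 trillion
```

Under these assumptions, any starting market cap above roughly $1.16 trillion would put the stock past $2 trillion at the end of the period.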

Adam Levy has positions in Alphabet, Amazon, Apple, Microsoft, and Taiwan Semiconductor Manufacturing. The Motley Fool has positions in and recommends Alphabet, Amazon, Apple, Microsoft, Nvidia, and Taiwan Semiconductor Manufacturing. The Motley Fool recommends Broadcom and recommends the following options: long January 2026 $395 calls on Microsoft and short January 2026 $405 calls on Microsoft. The Motley Fool has a disclosure policy.
