Jobs & Careers

Apple Plans AI Search Tool Powered by Google Gemini, Leaves Perplexity Out

Apple is preparing to launch an AI-based web search service next year, stepping up competition with OpenAI and Perplexity AI, Bloomberg reported. The system, internally known as World Knowledge Answers, will be integrated with Siri and could later expand to Safari and Spotlight.

The new feature, described by Apple executives as an answer engine, is expected to arrive next spring as part of a broader Siri overhaul. “The work we’ve done on this end-to-end revamp of Siri has given us the results we needed,” Craig Federighi, head of software engineering at Apple, reportedly told employees in a recent all-hands meeting.

The update will enable Siri to handle more complex queries by tapping LLMs. Apple is testing models from Google and Anthropic, while continuing to use its own Apple Foundation Models for on-device search.

According to Bloomberg, Apple has leaned towards Google’s Gemini for summarisation, citing more favourable financial terms after Anthropic sought over $1.5 billion annually for its Claude model.

The overhaul, internally codenamed Linwood and LLM Siri, will allow the assistant to summarise web results using text, images, videos and local information. Apple is also working on a visual redesign of Siri and a health-focused AI agent, slated for release in 2026.

The initiative comes as Apple maintains its $20 billion-a-year search arrangement with Google. A US judge this week ruled the deal can continue with minor adjustments, easing investor concerns.

Google’s market cap rose by about $228 billion on September 4, closing at a record high of $2.79 trillion.

However, Apple’s SVP of services, Eddy Cue, recently testified that Google searches from Apple devices have declined for the first time in two decades, noting the rise of “formidable competitors” in AI-powered search.

While Apple explored acquisitions of startups such as Perplexity and Mistral, it has opted to develop its own system. Bloomberg reported that Perplexity’s technology was seriously evaluated, but the company is no longer pursuing a deal.

Despite the push, Apple’s AI division has faced attrition. Key members of its Foundation Models team, including founder Ruoming Pang, have left for rivals like Meta, OpenAI and Anthropic.

The post Apple Plans AI Search Tool Powered by Google Gemini, Leaves Perplexity Out appeared first on Analytics India Magazine.


Cisco Announces Agentic Observability Features, Time Series AI Model

Cisco, the global leader in networking, security, and infrastructure, announced a suite of AI-powered observability tools at Splunk’s .conf25 event in Boston, United States.

The suite includes a unified data fabric, a machine data lake, and a time series foundation model for anomaly detection and root cause analysis.

Together, Cisco and Splunk aim to help enterprises turn machine-generated data into actionable AI-powered intelligence.

Powered by Splunk, the data platform the company acquired last year, Cisco announced a new AI-powered Splunk observability agent. It deploys AI agents across the entire incident response lifecycle while monitoring both its performance and quality.

For instance, the AI troubleshooting agent is offered as part of Splunk’s Observability Cloud and AppDynamics platform, to autonomously analyse incidents and identify the root cause of issues, enabling teams to resolve them quickly.

Other agents help teams set up alert correlation, summaries of alerts, trends, impacts, and more to troubleshoot faster.

“With the latest innovations in Splunk Observability, we are empowering enterprises to proactively monitor their critical applications and digital services with ease, resolve issues before they escalate, and ensure the value and outcomes they derive from observability are commensurate with the cost,” said Patrick Lin, senior vice president and general manager of Splunk Observability.

Observability is the ability to measure a system’s internal health and performance by analysing metrics, logs, and traces. It applies in various fields, including IT operations, software development, business operations, and others.

The networking and security firm also announced observability tools for these AI agents themselves. This enables continuous monitoring of their quality, security, health, and costs, ensuring they perform as intended.

The company said that most of these features will integrate with several solutions and platforms offered by Cisco and Splunk.

New Cisco Data Fabric, Time Series Foundation Model

Cisco also announced Cisco Data Fabric, powered by the Splunk platform, which helps enterprises efficiently handle machine data at scale.

Enterprises can utilise the Data Fabric for various AI use cases, such as training AI models or correlating multiple streams of both machine data and business data to generate insights.

Machine-generated data is the continuous streams of information produced by sensors, servers, applications, and network devices. This includes network packet counts, CPU/GPU utilisation, readings from data centre sensors, query response times, error codes, and more.

The data flows continuously, often generating terabytes per day from a single enterprise environment, and captures the real-time health and performance of digital infrastructure.

Cisco stated that the framework enables data management across the edge, on-premises, and cloud environments, including SecOps, ITOps, DevOps, and NetOps. It can also federate data across sources like Amazon S3, Apache Iceberg, Delta Lake, Snowflake, and Microsoft Azure, with support for additional sources next year.

The company also announced a new Machine Data Lake in Splunk, which provides an ‘AI-ready foundation’ for analytics and training AI models.

The company released a Time Series Foundation Model, pre-trained to perform pattern analysis and reasoning across machine data in the time series format. The model allows for anomaly detection, forecasting, and automated root cause analysis across the Cisco Data Fabric.

“Every company has massive volumes of this machine data, but it’s been largely left out of AI for a few reasons: LLMs don’t speak the language of machine data, the information is spread across disparate silos, and the expertise and costs involved can be prohibitive. As a result, we’ve only begun to scratch the surface of what we can do with AI,” said Jeetu Patel, chief product officer at Cisco.

Patel said that although the industry has been successful in training AI models on human-created data such as text, the same approach has not yet been fully explored with machine-generated data, which motivated the recent announcements.

The Time Series Foundation Model will be made available on Hugging Face, the open-source AI model repository, starting in November 2025.

Splunk was acquired by Cisco in 2024 for $28 billion. Their competitors in the AI-enabled observability space include companies such as New Relic, Dynatrace, Datadog, Elastic, and others.

The post Cisco Announces Agentic Observability Features, Time Series AI Model appeared first on Analytics India Magazine.


NVIDIA Launches Rubin CPX GPU for Million-Token AI Workloads

NVIDIA has introduced Rubin CPX, a new class of GPU designed to process massive AI workloads such as million-token coding and long-form video applications. The launch took place at the AI Infra Summit in Santa Clara, where the company also shared new benchmark results from its Blackwell Ultra architecture.

The system is scheduled for availability at the end of 2026. According to NVIDIA, every $100 million invested in Rubin CPX infrastructure could generate up to $5 billion in token revenue.

“Just as RTX revolutionised graphics and physical AI, Rubin CPX is the first CUDA GPU purpose-built for massive-context AI, where models reason across millions of tokens of knowledge at once,” NVIDIA chief Jensen Huang said.

What Rubin CPX Offers

Rubin CPX accelerates attention mechanisms three times faster than earlier NVIDIA GB300 NVL72 systems, enabling longer context sequences without slowing output. The processor integrates into the Vera Rubin NVL144 CPX system, delivering eight exaflops of AI performance, 100 terabytes of memory and 1.7 petabytes per second of memory bandwidth.

AI firms Cursor, Magic and Runway are already working with the platform to expand advanced coding and generative video tools. Customers can also integrate Rubin CPX into existing NVIDIA data centre infrastructure.

Cursor plans to use it to improve developer productivity, while Runway aims to support advanced generative video workflows. “This means creators, from independent artists to major studios, can gain unprecedented speed, realism and control in their work,” Cristóbal Valenzuela, CEO of Runway, said.

Magic is applying the GPU to software agents capable of reasoning across 100-million-token contexts without additional fine-tuning.

Expanding AI with New Blueprint

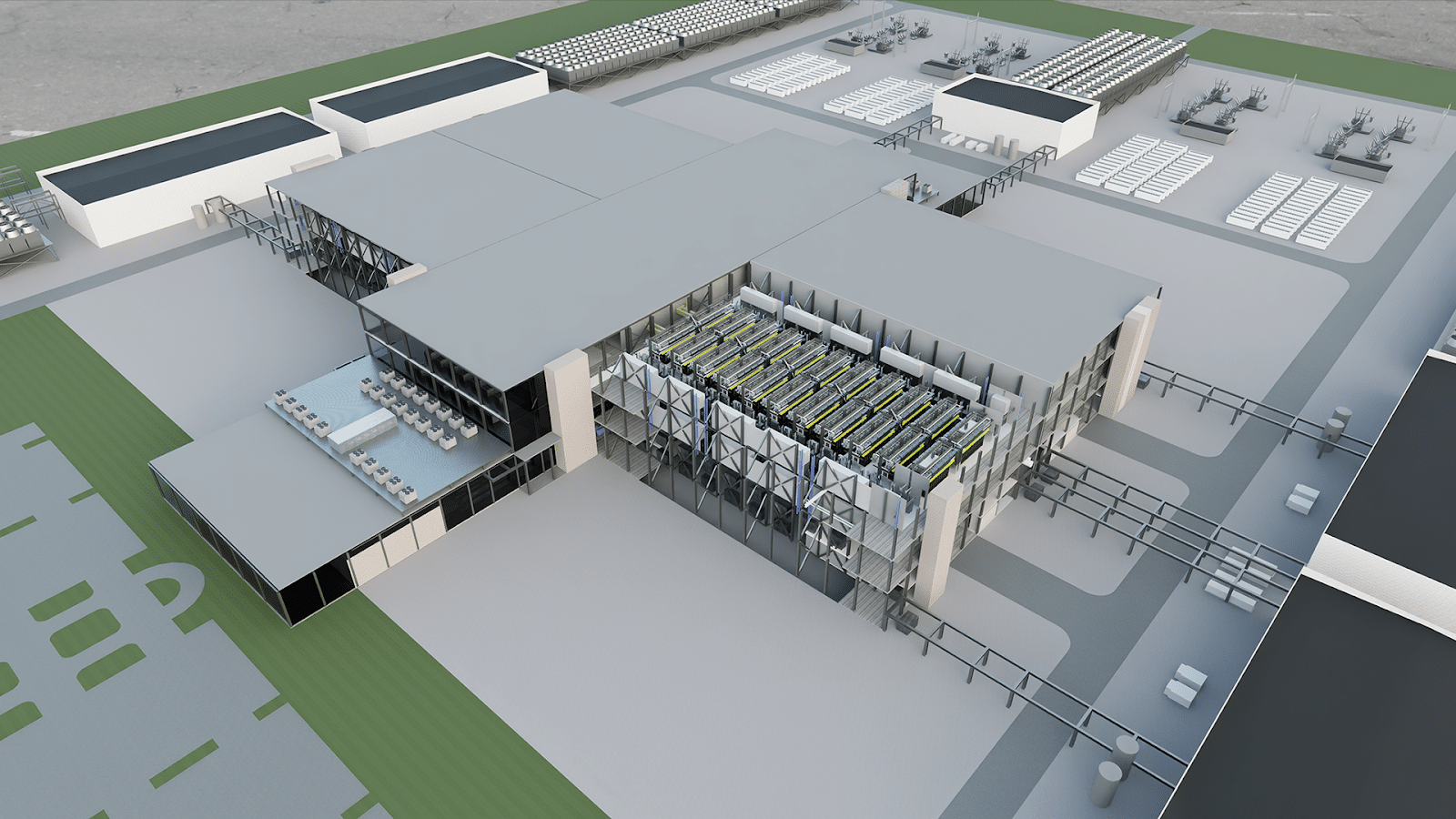

The GPU addresses use cases like analysing codebases with over 100,000 lines or processing more than an hour of high-definition video. At the same event, NVIDIA introduced its AI Factory reference designs, a framework for building giga-scale AI data centres.

The initiative integrates compute, cooling, power and simulation into a unified system, moving beyond traditional data centre design. Partners such as Siemens Energy, Schneider Electric, GE Vernova and Jacobs are collaborating on the project. Ian Buck, NVIDIA’s vice president of the data centre business unit, said the goal was to “optimise every watt of energy so that it contributes directly to intelligence generation”.

What is the Benchmark?

Alongside the new hardware, NVIDIA published MLPerf Inference v5.1 results showing record performance for its Blackwell Ultra GPUs. The platform set new per-GPU benchmarks on models such as DeepSeek-R1, Llama 3.1 405B and Whisper.

In particular, the DeepSeek-R1 model achieved more than 5,800 tokens per second per GPU in offline testing, a 4.7x gain over prior Hopper-based systems.

The results highlight how techniques such as NVFP4 quantisation, FP8 key-value caching and disaggregated serving contributed to performance gains, the company claimed. NVIDIA said these improvements translate into higher throughput for AI factories and lower cost per token processed.

The post NVIDIA Launches Rubin CPX GPU for Million-Token AI Workloads appeared first on Analytics India Magazine.


Ray or Dask? A Practical Guide for Data Scientists


As data scientists, we handle large datasets or complex models that require a significant amount of time to run. To save time and achieve results faster, we utilize tools that execute tasks simultaneously or across multiple machines. Two popular Python libraries for this are Ray and Dask. Both help speed up data processing and model training, but they are used for different types of tasks.

In this article, we will explain what Ray and Dask are and when to choose each one.

# What Are Dask and Ray?

Dask is a library used for handling large amounts of data. It is designed to work in a way that feels familiar to users of pandas, NumPy, or scikit-learn. Dask breaks data and tasks into smaller parts and runs them in parallel. This makes it perfect for data scientists who want to scale up their data analysis without learning many new concepts.

Ray is a more general tool that helps you build and run distributed applications. It is particularly strong in machine learning and AI tasks.

Ray also has extra libraries built on top of it, like:

- Ray Tune for tuning hyperparameters in machine learning

- Ray Train for training models on multiple GPUs

- Ray Serve for deploying models as web services

Ray is great if you want to build scalable machine learning pipelines or deploy AI applications that need to run complex tasks in parallel.

# Feature Comparison

A structured comparison of Dask and Ray based on core attributes:

| Feature | Dask | Ray |
|---|---|---|
| Primary Abstraction | DataFrames, Arrays, Delayed tasks | Remote functions, Actors |
| Best For | Scalable data processing, machine learning pipelines | Distributed machine learning training, tuning, and serving |
| Ease of Use | High for Pandas/NumPy users | Moderate, more boilerplate |
| Ecosystem | Integrates with scikit-learn, XGBoost | Built-in libraries: Tune, Serve, RLlib |
| Scalability | Very good for batch processing | Excellent, more control and flexibility |
| Scheduling | Work-stealing scheduler | Dynamic, actor-based scheduler |
| Cluster Management | Native or via Kubernetes, YARN | Ray Dashboard, Kubernetes, AWS, GCP |
| Community/Maturity | Older, mature, widely adopted | Growing fast, strong machine learning support |

# When to Use What?

Choose Dask if you:

- Use Pandas/NumPy and want scalability

- Process tabular or array-like data

- Perform batch ETL or feature engineering

- Need dataframe or array abstractions with lazy execution
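Lazy execution, mentioned in the last point, means that operations are recorded first and only run when a result is requested. The sketch below illustrates the idea with a tiny hypothetical `Lazy` class in plain Python. It is not Dask's implementation; the class, its `compute` method, and the helper functions are assumptions made for illustration.

```python
class Lazy:
    """Hypothetical deferred value: stores a function and its inputs, runs nothing yet."""

    def __init__(self, func, *args):
        self.func = func
        self.args = args

    def compute(self):
        # Resolve any lazy inputs first, then apply the stored function.
        resolved = [a.compute() if isinstance(a, Lazy) else a for a in self.args]
        return self.func(*resolved)


calls = []  # records when work actually happens


def load(n):
    calls.append("load")
    return list(range(n))


def total(values):
    calls.append("total")
    return sum(values)


# Building the graph performs no work.
graph = Lazy(total, Lazy(load, 5))
work_before_compute = len(calls)

# Work happens only when a result is requested.
result = graph.compute()
```

Because nothing runs until `compute()` is called, a scheduler has the chance to see the whole chain of steps before deciding how to split and order the work.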

Choose Ray if you:

- Need to run many independent Python functions in parallel

- Want to build machine learning pipelines, serve models, or manage long-running tasks

- Need microservice-like scaling with stateful tasks
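The stateful-task point above can be sketched without either library. The example below uses only Python's standard library; the `CounterActor` class is hypothetical and only mimics the actor idea (one worker owns its state and handles requests one at a time). It is not how Ray implements actors.

```python
import queue
import threading
from concurrent.futures import Future


class CounterActor:
    """Hypothetical actor: a single thread owns `count` and processes requests in order."""

    def __init__(self):
        self.count = 0
        self._inbox = queue.Queue()
        self._thread = threading.Thread(target=self._run, daemon=True)
        self._thread.start()

    def _run(self):
        while True:
            item = self._inbox.get()
            if item is None:  # shutdown signal
                break
            amount, future = item
            self.count += amount  # only this thread touches the state
            future.set_result(self.count)

    def add(self, amount):
        # Returns immediately with a Future, similar in spirit to a remote call.
        future = Future()
        self._inbox.put((amount, future))
        return future

    def stop(self):
        self._inbox.put(None)
        self._thread.join()


actor = CounterActor()
futures = [actor.add(i) for i in range(1, 6)]  # send 1..5
results = [f.result() for f in futures]
actor.stop()
```

A stateless task would have to receive the running total as an argument each time. Here the state lives with the worker, which is the property that makes actors a natural fit for long-running services.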

# Ecosystem Tools

Both libraries offer or support a range of tools to cover the data science lifecycle, but with different emphasis:

| Task | Dask | Ray |
|---|---|---|
| DataFrames | dask.dataframe | Modin (built on Ray or Dask) |
| Arrays | dask.array | No native support, rely on NumPy |
| Hyperparameter tuning | Manual or with Dask-ML | Ray Tune (advanced features) |
| Machine learning pipelines | dask-ml, custom workflows | Ray Train, Ray Tune, Ray AIR |
| Model serving | Custom Flask/FastAPI setup | Ray Serve |
| Reinforcement Learning | Not supported | RLlib |
| Dashboard | Built-in, very detailed | Built-in, simplified |

# Real-World Scenarios

// Large-Scale Data Cleaning and Feature Engineering

Use Dask.

Why? Dask integrates smoothly with pandas and NumPy. Many data teams already use these tools. If your dataset is too large to fit in memory, Dask can split it into smaller parts and process these parts in parallel. This helps with tasks like cleaning data and creating new features.

Example:

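    import dask.dataframe as dd
    import numpy as np

    # Lazily read all matching CSV files from S3 as one Dask DataFrame
    df = dd.read_csv('s3://data/large-dataset-*.csv')

    # Keep only rows where amount exceeds 100
    df = df[df['amount'] > 100]

    # Apply the log transform partition by partition
    df['log_amount'] = df['amount'].map_partitions(np.log)

    # Writing triggers the computation and saves the result as Parquet
    df.to_parquet('s3://processed/output/')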

This code reads multiple large CSV files from an S3 bucket using Dask in parallel. It filters rows where the amount column is greater than 100, applies a log transformation, and saves the result as Parquet files.
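To make the partition idea concrete, the following sketch splits a list of amounts into chunks and applies the same filter and log transform to each chunk in parallel. It uses only the standard library; `partition` and `clean` are hypothetical helper names, and this illustrates the pattern rather than how Dask works internally.

```python
import math
from concurrent.futures import ThreadPoolExecutor


def partition(rows, size):
    """Split rows into consecutive chunks of at most `size` items."""
    return [rows[i:i + size] for i in range(0, len(rows), size)]


def clean(chunk):
    """Keep amounts above 100 and take their natural log."""
    return [math.log(amount) for amount in chunk if amount > 100]


amounts = [50, 150, 200, 99, 1000, 101, 20, 500]
chunks = partition(amounts, 3)

# Each chunk is processed independently, so the work can run in parallel.
with ThreadPoolExecutor(max_workers=4) as pool:
    cleaned_chunks = list(pool.map(clean, chunks))

# Concatenate the per-chunk results in their original order.
log_amounts = [value for chunk in cleaned_chunks for value in chunk]
```

Since no chunk depends on another, the same function can be applied to every chunk without coordination, which is why row-wise cleaning and feature engineering parallelise so well.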

// Parallel Hyperparameter Tuning for Machine Learning Models

Use Ray.

Why? Ray Tune is great for trying different settings when training machine learning models. It integrates with tools like PyTorch and XGBoost, and it can stop bad runs early to save time.

Example:

    from ray import tune
    from ray.tune.schedulers import ASHAScheduler

    def train_fn(config):
        # Model training logic here; it must report an "accuracy" metric
        # so that the scheduler can compare trials
        ...

    tune.run(
        train_fn,
        config={"lr": tune.grid_search([0.01, 0.001, 0.0001])},
        scheduler=ASHAScheduler(metric="accuracy", mode="max")
    )

This code defines a training function and uses Ray Tune to test different learning rates in parallel. The ASHA scheduler compares trials on the reported accuracy and stops poorly performing ones early, so compute goes to the more promising configurations.
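The early-stopping idea behind ASHA can be illustrated with plain successive halving, a simpler synchronous relative of the asynchronous algorithm. The sketch below is not Ray Tune's implementation; `fake_accuracy` is a made-up scoring function standing in for real training, and all names are assumptions for illustration.

```python
def fake_accuracy(lr, steps):
    """Made-up stand-in for training: scores improve with steps, best near lr=0.001."""
    penalty = abs(lr - 0.001) * 100
    return (1 - 1 / (steps + 1)) - penalty


def successive_halving(configs, min_steps=1, rounds=3):
    """Train all configs briefly, keep the better half, and repeat with a doubled budget."""
    survivors = list(configs)
    steps = min_steps
    history = []
    for _ in range(rounds):
        scored = sorted(survivors, key=lambda lr: fake_accuracy(lr, steps), reverse=True)
        history.append((steps, list(scored)))
        # Keep the top half (at least one config); the rest are stopped early.
        survivors = scored[:max(1, len(scored) // 2)]
        steps *= 2
    return survivors[0], history


best_lr, history = successive_halving([0.01, 0.001, 0.0001, 0.005])
```

Weak configurations only ever receive the smallest budget, while the training budget doubles each round for the survivors.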

// Distributed Array Computations

Use Dask.

Why? Dask arrays are helpful when working with large sets of numbers. They split the array into blocks and process them in parallel.

Example:

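    import dask.array as da

    # 10,000 x 10,000 random array stored as 1,000 x 1,000 blocks
    x = da.random.random((10000, 10000), chunks=(1000, 1000))

    # Column means; compute() triggers the parallel work
    y = x.mean(axis=0).compute()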

This code creates a large random array divided into chunks that can be processed in parallel. It then calculates the mean of each column using Dask’s parallel computing power.
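Column means over a chunked array can be computed by reducing each block to partial sums and row counts, then combining them. The sketch below shows that pattern with nested Python lists and the standard library; it illustrates the idea only, and the helper names are hypothetical.

```python
from concurrent.futures import ThreadPoolExecutor


def block_column_sums(block):
    """Return (column sums, row count) for one block of rows."""
    sums = [sum(col) for col in zip(*block)]
    return sums, len(block)


def chunked_column_mean(rows, chunk_rows):
    """Compute per-column means by combining partial results from row blocks."""
    blocks = [rows[i:i + chunk_rows] for i in range(0, len(rows), chunk_rows)]
    with ThreadPoolExecutor() as pool:
        partials = list(pool.map(block_column_sums, blocks))
    total_rows = sum(count for _, count in partials)
    n_cols = len(rows[0])
    totals = [sum(sums[c] for sums, _ in partials) for c in range(n_cols)]
    return [t / total_rows for t in totals]


data = [[1, 10], [2, 20], [3, 30], [4, 40], [5, 50]]
means = chunked_column_mean(data, chunk_rows=2)
```

Combining sums and counts, rather than averaging the per-block means, keeps the result correct when the last block is smaller than the others.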

// Building an End-to-End Machine Learning Service

Use Ray.

Why? Ray is designed not just for model training but also for serving and lifecycle management. With Ray Serve, you can deploy models in production, run preprocessing logic in parallel, and even scale stateful actors.

Example:

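    from ray import serve

    @serve.deployment
    class ModelDeployment:
        def __init__(self):
            # load_model() is a placeholder for your own model-loading code
            self.model = load_model()

        async def __call__(self, request):
            # HTTP requests arrive as Starlette Request objects; parse the JSON body
            data = await request.json()
            return self.model.predict([data])[0]

    serve.run(ModelDeployment.bind())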

This code defines a class to load a machine learning model and serve it through an API using Ray Serve. The class receives a request, makes a prediction using the model, and returns the result.

# Final Recommendations

| Use Case | Recommended Tool |
|---|---|
| Scalable data analysis (Pandas-style) | Dask |
| Large-scale machine learning training | Ray |
| Hyperparameter optimization | Ray |
| Out-of-core DataFrame computation | Dask |
| Real-time machine learning model serving | Ray |
| Custom pipelines with high parallelism | Ray |
| Integration with PyData Stack | Dask |

# Conclusion

Ray and Dask are both tools that help data scientists handle large amounts of data and run programs faster. Ray is good for tasks that need a lot of flexibility, like machine learning projects. Dask is useful if you want to work with big datasets using tools similar to Pandas or NumPy.

Which one you choose depends on what your project needs and the type of data you have. It’s a good idea to try both on small examples to see which one fits your work better.

Jayita Gulati is a machine learning enthusiast and technical writer driven by her passion for building machine learning models. She holds a Master’s degree in Computer Science from the University of Liverpool.
