AI Insights

Apple Loses Top AI Models Executive to Meta’s Hiring Spree

Apple Inc.’s top executive in charge of artificial intelligence models is leaving for Meta Platforms Inc., another setback in the iPhone maker’s struggling AI efforts.

Meet the Artificial Intelligence (AI) Stock That’s Crushing Nvidia on the Market in 2025

This cybersecurity company’s improving revenue pipeline points toward more stock price upside.

The fast-growing adoption of artificial intelligence (AI) technology in the past three years has been a tailwind for Nvidia (NVDA). The company enjoyed an early-mover advantage in this market thanks to its graphics processing units (GPUs), which have played a central role in the training of popular AI models.

However, it looks like investors’ appetite for Nvidia stock may be fading. It has appreciated just 32% so far in 2025 despite sustaining healthy growth levels. Factors such as restrictions on sales of its chips to China and the potential impact of tariffs on Nvidia’s business seem to be weighing on the stock. So, if you’re looking for an alternative to capitalize on the fast-growing adoption of AI, now would be a good time to take a closer look at this cybersecurity specialist that has outperformed Nvidia so far this year.

The proliferation of AI in the cybersecurity market is turning out to be a tailwind for this company

Zscaler (ZS), a cloud-based cybersecurity company, has seen its stock price jump 59% in 2025. It is primarily known for providing zero-trust security solutions that help its customers verify the identity of users or devices accessing their networks. The zero-trust security market is projected to grow at an annual pace of almost 17% through 2030, generating more than $92 billion in annual revenue at the end of the decade, according to Grand View Research.

The good part is that Zscaler is growing faster than the zero-trust security market. Its revenue in the recently concluded fiscal year 2025 (which ended on July 31) increased 23% to $2.7 billion. Looking ahead, Zscaler could keep outpacing the zero-trust security market thanks to its strategy of offering customers tools that protect AI apps, secure access to AI apps, and protect large language models (LLMs), among other products.

Zscaler also offers agentic AI cybersecurity solutions designed to speed up identifying the causes of IT outages, taking corrective measures, and troubleshooting. Importantly, these agentic AI offerings are growing at a nice pace: the annual recurring revenue (ARR) of its agentic security operations increased by an impressive 85% year over year, while that of its agentic AI operations grew 58% last year.

With the market for agentic AI in cybersecurity expected to grow at a compound annual growth rate (CAGR) of 34% through 2033 and reach $322 billion in annual revenue by the end of the forecast period, Zscaler seems well positioned to accelerate its growth over the long run.

Even better, the company is already building a healthy long-term revenue pipeline thanks to its focus on fast-growing niches such as AI. This is evident from the 31% spike in its remaining performance obligations (RPO) last quarter to $5.8 billion. That’s more than double the revenue it generated in the latest fiscal year.

Because RPO measures a company’s contracted backlog, the fact that this metric is growing faster than quarterly revenue (up 21%) suggests that Zscaler is signing new business faster than it can convert that business into revenue.

That’s why there is a good chance its growth rate could pick up in the future, and why it makes sense to buy this stock while it is trading at a relatively attractive valuation.

Zscaler’s growth could exceed Wall Street’s expectations

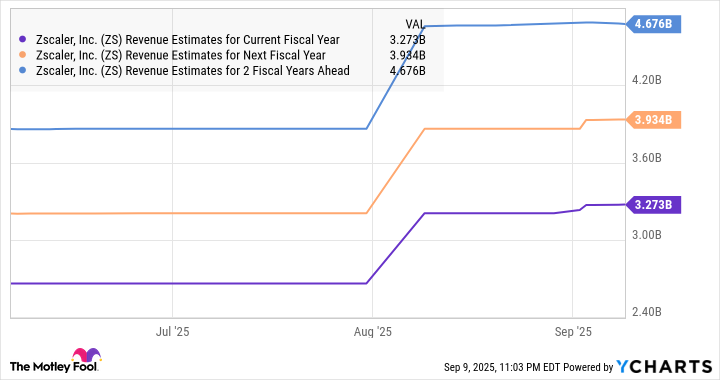

Though analysts expect Zscaler to deliver robust double-digit growth over the next three fiscal years, they anticipate a slower pace than it achieved in fiscal 2025.

ZS Revenue Estimates for Current Fiscal Year data by YCharts

But what’s worth noting is that Zscaler’s consensus revenue estimates have moved higher of late. That’s not surprising considering the improvement in the company’s RPO. Moreover, the outstanding growth opportunity in the AI-focused cybersecurity niches in the long run is likely to help Zscaler deliver much stronger growth than what analysts are expecting.

That’s why it makes sense to buy Zscaler while it trades at 16 times sales. That’s not exactly cheap compared with the U.S. technology sector’s average sales multiple of 8.5, but it is much lower than Nvidia’s price-to-sales ratio of 25. What’s more, Zscaler’s growth a couple of years out is expected to outpace Nvidia’s, as the latter’s growth could taper off because of its large revenue base.

NVDA Revenue Estimates for Current Fiscal Year data by YCharts

That’s why investors looking for a reasonably valued AI stock that has the potential to deliver robust gains in the long run can consider going long Zscaler even after the healthy gains that it has clocked this year.

Harsh Chauhan has no position in any of the stocks mentioned. The Motley Fool has positions in and recommends Nvidia and Zscaler. The Motley Fool has a disclosure policy.

5 Artificial Intelligence (AI) Stocks That Look Like No-Brainer Buys Right Now

Key Points

- Nvidia and Broadcom are designing and supplying AI computing equipment.
- Taiwan Semiconductor makes chips for nearly all companies in the AI arms race.
- Amazon and Alphabet are two of the leading cloud computing companies.


Artificial intelligence (AI) investing is what’s keeping the market propped up right now. A significant amount of money is being spent on building AI computing infrastructure, and numerous businesses are benefiting from this spending trend.

By picking up shares of companies that are benefiting from the spending now, investors can ensure they’re not buying into hype.

I’ve got five stocks that meet this criterion, and each looks like a great investment now.

Chip companies are making a ton of money from the AI buildout

Since the beginning of the AI arms race, a handful of companies have been presumed winners based on their products. The market turned out to be right about this, as Nvidia (NASDAQ: NVDA), Broadcom (NASDAQ: AVGO), and Taiwan Semiconductor (NYSE: TSM) have all delivered spectacular returns. However, they’re not finished yet.

Nvidia’s graphics processing units (GPUs) have powered nearly all of the AI workloads that investors know today. Demand for GPUs outpaces supply, so many of Nvidia’s largest clients are working closely with the company to inform it of their future demand years in advance. So, when Nvidia’s management speaks about industry growth, investors should listen.

Nvidia expects global data center capital expenditures to reach $3 trillion to $4 trillion by 2030. That’s monstrous growth from today’s amount (Nvidia estimates the big four hyperscalers will spend around $600 billion in 2025), and shows that the AI computing infrastructure buildout is far from over.

This clearly makes Nvidia a buy, but it also bodes well for Broadcom and Taiwan Semiconductor.

Broadcom supplies networking switches for these data centers, which let operators link together the work being computed across many computing units. Another area where Broadcom is seeing significant growth is custom AI accelerators, which it designs in collaboration with end users. These accelerators are a direct challenge to Nvidia’s GPU dominance, although both products will continue to be used in the future. With many companies looking to cut out Nvidia to reduce the cost of building a data center, Broadcom is one to watch over the next few years.

Lastly, neither company manufactures the actual chips that go into these products. Instead, both outsource that work to the world’s leading chip foundry, Taiwan Semiconductor (TSMC). TSMC doesn’t care which company has the most computing units in data centers around the globe; it just cares that the chips those units use come from its factories, making it a neutral player in the AI arms race. While Taiwan Semiconductor may not have the upside of Nvidia or Broadcom, it also doesn’t carry the same downside. This makes it a safe bet to capitalize on all the AI spending, and it’s one of my top picks for stocks to buy now.

Demand for cloud computing is rising

Two of the largest purchasers of computing equipment are Amazon (NASDAQ: AMZN) and Alphabet (NASDAQ: GOOG) (NASDAQ: GOOGL). While some of this equipment runs internal workloads, most of it is rented out to customers through cloud computing. At its core, cloud computing lets customers rent computing power from a provider instead of buying and running the hardware themselves. Buying computing equipment from Broadcom or Nvidia isn’t cheap, so renting is often the most cost-effective way to access vast computing resources.

Additionally, traditional on-premises computing workloads are migrating to the cloud as existing equipment reaches the end of its life, giving cloud providers such as Alphabet and Amazon another growth tailwind. Grand View Research estimates that the global cloud computing market was worth about $750 billion in 2024 and expects it to reach $2.39 trillion by 2030, making this an excellent industry to invest in.

Alphabet and Amazon are two of the largest cloud computing providers, and I believe each stock is worth owning due to the massive potential in the cloud computing businesses of these two tech giants.

Keithen Drury has positions in Alphabet, Amazon, Broadcom, Nvidia, and Taiwan Semiconductor Manufacturing. The Motley Fool has positions in and recommends Alphabet, Amazon, Nvidia, and Taiwan Semiconductor Manufacturing. The Motley Fool recommends Broadcom. The Motley Fool has a disclosure policy.

Disclaimer: For information purposes only. Past performance is not indicative of future results.

A.I. Predicts Louisiana vs Missouri Contest Closer Than You Think

(KMDL-FM) On Saturday at high noon, the University of Louisiana’s Ragin’ Cajuns will face the Missouri Tigers in a college football game in Columbia, Missouri. The game was originally set to kick off at 3 pm Central Time, but because of concerns that excessive heat could endanger players and fans, the start time was moved up to noon.

Forecast details for the game site show a meaningful difference between noon and 3 pm. The forecast temperature is 91 degrees at noon and 95 at 3 pm, but the heat index could be ten to fifteen degrees warmer later in the day. So, good call by Missouri Athletics on that one.

A quick check of the point-spread betting lines suggests that many pundits don’t give Louisiana’s Cajuns much of a chance against the Columbia Kitties. One betting line published by the Associated Press has UL as a 26.5-point underdog to Missouri. In other words, on paper the Cajuns haven’t got a chance.

But games aren’t played on paper, and any time the Cajuns take the field, they’ve got a chance, so we thought we’d ask our robot friends with artificial intelligence what they thought about the game. Here is how the A.I. robots break down UL vs. Missouri.

Who Would Win? Computers Predict Missouri vs Louisiana Football Winner

First Quarter: Missouri wins the opening coin toss and drives right down the field on its opening possession, but the Cajuns’ defense stiffens and holds Missouri to a field goal. Louisiana responds with an aggressive drive of its own, featuring a screen pass that gains a huge chunk of yardage and puts the Cajuns in position to tie the game, which they do. The score at the end of the first quarter is tied 3 to 3.

We should note that A.I. predicts a controversial play in the first quarter: a missed fumble call that goes against the Cajuns. The robots won’t say whether it’s “home-field advantage,” but don’t be surprised if you get upset over a “bad call.”

Second Quarter: The Missouri offense suddenly finds its rhythm and drives down the field for a quick touchdown. The Cajuns answer with a long pass, and a flag on the play goes against the Missouri defense; the Cajuns parlay that into a touchdown of their own to tie the game at 10. Missouri uses the remaining minutes of the half to march down the field for another touchdown. The score at halftime is Missouri 17, Cajuns 10.

Cajun Fans Be On The Lookout For This “Big Play” on Saturday

Third Quarter: Louisiana roars out to start the third quarter, forcing a Missouri three-and-out. Following the Tiger punt, the Cajuns march down the field, and the big play is once again a screen pass. The Cajuns can’t put the ball in the end zone but do get a field goal. On Missouri’s next possession, the Cajun defense ends an impressive Tiger drive with a huge third-down sack, allowing no points.

However, Missouri’s special teams step up and break open a long return after a Ragin’ Cajun punt. The silver lining for Cajun fans: a Vermilion and White special teamer knocks the ball free before the returner can cross the goal line, the Cajuns recover, and the third quarter ends with the score still 17 to 13.

Fourth Quarter: The Cajun defense is playing lights out, forcing a Missouri turnover on downs near midfield on the opening drive of the fourth quarter. The Cajuns then attempt a deep pass into the end zone that falls incomplete, though many say a Missouri defender interfered with the Cajun receiver.

This “no-call” amps up the Louisiana offense, which drives the ball down to the Missouri 5-yard line. The Cajuns go for it on fourth and goal, but the Tigers hold. With about six minutes left in the game, Missouri is playing ball control, but a crucial fumble near midfield hands the ball to Louisiana, trailing by four points, with five minutes to go.

The Cajuns convert the turnover into a field goal to pull within one point. On the ensuing kickoff, the Cajun coaches call for an onside kick, but Missouri recovers it and runs out the clock to claim the victory.

The Final Score: Missouri 17, Louisiana 16. That’s a lot different from a 26.5-point blowout, don’t you think? So who do you think has a better handle on the game: the betting interests in Las Vegas or the football forecasters who use artificial intelligence to predict the game’s outcome?

I guess we’ll see on Saturday. Remember, the kickoff has been moved to noon.
