AI Research

AlphaGeometry: An Olympiad-level AI system for geometry

Our AI system surpasses the state-of-the-art approach for geometry problems, advancing AI reasoning in mathematics

Reflecting the Olympic spirit of ancient Greece, the International Mathematical Olympiad is a modern-day arena for the world’s brightest high-school mathematicians. The competition not only showcases young talent, but has emerged as a testing ground for advanced AI systems in math and reasoning.

In a paper published today in Nature, we introduce AlphaGeometry, an AI system that solves complex geometry problems at a level approaching a human Olympiad gold-medalist – a breakthrough in AI performance. In a benchmarking test of 30 Olympiad geometry problems, AlphaGeometry solved 25 within the standard Olympiad time limit. For comparison, the previous state-of-the-art system solved 10 of these geometry problems, and the average human gold medalist solved 25.9 problems.

In our benchmarking set of 30 Olympiad geometry problems (IMO-AG-30), compiled from the Olympiads from 2000 to 2022, AlphaGeometry solved 25 problems under competition time limits. This is approaching the average score of human gold medalists on these same problems. The previous state-of-the-art approach, known as “Wu’s method”, solved 10.

AI systems often struggle with complex problems in geometry and mathematics due to a lack of reasoning skills and training data. AlphaGeometry’s system combines the predictive power of a neural language model with a rule-bound deduction engine, which work in tandem to find solutions. And by developing a method to generate a vast pool of synthetic training data – 100 million unique examples – we can train AlphaGeometry without any human demonstrations, sidestepping the data bottleneck.

With AlphaGeometry, we demonstrate AI’s growing ability to reason logically, and to discover and verify new knowledge. Solving Olympiad-level geometry problems is an important milestone in developing deep mathematical reasoning on the path towards more advanced and general AI systems. We are open-sourcing the AlphaGeometry code and model, and hope that together with other tools and approaches in synthetic data generation and training, it helps open up new possibilities across mathematics, science, and AI.

“It makes perfect sense to me now that researchers in AI are trying their hands on the IMO geometry problems first because finding solutions for them works a little bit like chess in the sense that we have a rather small number of sensible moves at every step. But I still find it stunning that they could make it work. It’s an impressive achievement.”

Ngô Bảo Châu, Fields Medalist and IMO gold medalist

AlphaGeometry adopts a neuro-symbolic approach

AlphaGeometry is a neuro-symbolic system made up of a neural language model and a symbolic deduction engine, which work together to find proofs for complex geometry theorems. Akin to the idea of “thinking, fast and slow”, one system provides fast, “intuitive” ideas, and the other, more deliberate, rational decision-making.

Because language models excel at identifying general patterns and relationships in data, they can quickly predict potentially useful constructs, but often lack the ability to reason rigorously or explain their decisions. Symbolic deduction engines, on the other hand, are based on formal logic and use clear rules to arrive at conclusions. They are rational and explainable, but they can be “slow” and inflexible – especially when dealing with large, complex problems on their own.

AlphaGeometry’s language model guides its symbolic deduction engine towards likely solutions to geometry problems. Olympiad geometry problems are based on diagrams that need new geometric constructs to be added before they can be solved, such as points, lines or circles. AlphaGeometry’s language model predicts which new constructs would be most useful to add, from an infinite number of possibilities. These clues help fill in the gaps and allow the symbolic engine to make further deductions about the diagram and close in on the solution.

AlphaGeometry solving a simple problem: Given the problem diagram and its theorem premises (left), AlphaGeometry (middle) first uses its symbolic engine to deduce new statements about the diagram until the solution is found or new statements are exhausted. If no solution is found, AlphaGeometry’s language model adds one potentially useful construct (blue), opening new paths of deduction for the symbolic engine. This loop continues until a solution is found (right). In this example, just one construct is required.
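The loop described in this caption can be sketched in a few lines of Python. This is only an illustrative toy, not AlphaGeometry's code: facts are plain strings, the "symbolic engine" is a naive forward-chaining routine, and `toy_proposer` is a hypothetical stand-in for the language model. The rule set, the fact wording and the `max_constructs` limit are all assumptions made for the example.

```python
from typing import Callable, FrozenSet, List, Optional, Set, Tuple

Fact = str
Rule = Tuple[FrozenSet[Fact], Fact]  # (facts required, fact concluded)
Proposer = Callable[[Set[Fact], Fact], Optional[Fact]]


def deduce_closure(facts: Set[Fact], rules: List[Rule]) -> Set[Fact]:
    """Toy symbolic engine: apply rules until no new fact can be derived."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for required, conclusion in rules:
            if conclusion not in known and required <= known:
                known.add(conclusion)
                changed = True
    return known


def solve(premises: Set[Fact], goal: Fact, rules: List[Rule],
          propose_construct: Proposer,
          max_constructs: int = 3) -> Optional[List[Fact]]:
    """Alternate deduction with construct proposals.

    Returns the list of added constructs if the goal is reached, else None.
    """
    facts = deduce_closure(premises, rules)
    added: List[Fact] = []
    while goal not in facts:
        if len(added) >= max_constructs:
            return None  # give up after a fixed budget (assumed limit)
        construct = propose_construct(facts, goal)
        if construct is None or construct in facts:
            return None  # the proposer has nothing new to offer
        added.append(construct)
        facts = deduce_closure(facts | {construct}, rules)
    return added


# Hypothetical rules for an isosceles triangle ABC with AB = AC.
TOY_RULES: List[Rule] = [
    (frozenset({"AB = AC", "D is the midpoint of BC"}),
     "triangle ABD is congruent to triangle ACD"),
    (frozenset({"triangle ABD is congruent to triangle ACD"}),
     "angle ABC = angle ACB"),
]


def toy_proposer(facts: Set[Fact], goal: Fact) -> Optional[Fact]:
    """Stand-in for the language model: always suggests the same midpoint."""
    candidate = "D is the midpoint of BC"
    return None if candidate in facts else candidate


constructs = solve({"AB = AC"}, "angle ABC = angle ACB", TOY_RULES, toy_proposer)
print(constructs)
```

In this toy run, deduction alone stalls on the premise `AB = AC`, one proposed construct (the midpoint `D`) unlocks both rules, and the loop stops with a single added construct, mirroring the one-construct example in the caption.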

AlphaGeometry solving an Olympiad problem: Problem 3 of the 2015 International Mathematical Olympiad (left) and a condensed version of AlphaGeometry’s solution (right). The blue elements are added constructs. AlphaGeometry’s solution has 109 logical steps.

Generating 100 million synthetic data examples

Geometry relies on understanding of space, distance, shape, and relative positions, and is fundamental to art, architecture, engineering and many other fields. Humans can learn geometry using a pen and paper, examining diagrams and using existing knowledge to uncover new, more sophisticated geometric properties and relationships. Our synthetic data generation approach emulates this knowledge-building process at scale, allowing us to train AlphaGeometry from scratch, without any human demonstrations.

Using highly parallelized computing, the system started by generating one billion random diagrams of geometric objects and exhaustively derived all the relationships between the points and lines in each diagram. AlphaGeometry found all the proofs contained in each diagram, then worked backwards to find out what additional constructs, if any, were needed to arrive at those proofs. We call this process “symbolic deduction and traceback”.
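A rough sketch of the "deduce, then trace back" idea, under heavy simplification: facts are strings, the rules are hypothetical, and each derived fact records the facts that produced it. The traceback then walks those records from a chosen conclusion to the premises it actually depends on. Treating a needed premise as an added construct when it mentions a point the conclusion does not is an assumption of this sketch, as is the `points_in` helper that reads points from uppercase letters.

```python
from typing import Dict, FrozenSet, List, Set, Tuple

Fact = str
Rule = Tuple[FrozenSet[Fact], Fact]  # (facts required, fact concluded)


def deduce_with_parents(premises: Set[Fact],
                        rules: List[Rule]) -> Dict[Fact, FrozenSet[Fact]]:
    """Forward-chain to a fixed point, recording what produced each new fact."""
    parents: Dict[Fact, FrozenSet[Fact]] = {p: frozenset() for p in premises}
    changed = True
    while changed:
        changed = False
        for required, conclusion in rules:
            if conclusion not in parents and all(f in parents for f in required):
                parents[conclusion] = required
                changed = True
    return parents


def traceback(conclusion: Fact, premises: Set[Fact],
              parents: Dict[Fact, FrozenSet[Fact]]) -> Set[Fact]:
    """Walk backwards from a conclusion to the premises it depends on."""
    needed: Set[Fact] = set()
    seen: Set[Fact] = set()
    stack = [conclusion]
    while stack:
        fact = stack.pop()
        if fact in seen:
            continue
        seen.add(fact)
        if fact in premises:
            needed.add(fact)
        else:
            stack.extend(parents[fact])
    return needed


def points_in(statement: Fact) -> Set[str]:
    """Toy convention (assumption): every uppercase letter names a point."""
    return {ch for ch in statement if ch.isupper()}


# A made-up "random diagram": three premises, one of them irrelevant.
premises = {"AB = AC", "D is the midpoint of BC", "E lies on AB"}
rules: List[Rule] = [
    (frozenset({"AB = AC", "D is the midpoint of BC"}),
     "triangle ABD is congruent to triangle ACD"),
    (frozenset({"triangle ABD is congruent to triangle ACD"}),
     "angle ABC = angle ACB"),
]

conclusion = "angle ABC = angle ACB"
parents = deduce_with_parents(premises, rules)
needed = traceback(conclusion, premises, parents)
# Needed premises that introduce points absent from the conclusion.
added_constructs = {p for p in needed if not points_in(p) <= points_in(conclusion)}
print(sorted(needed), sorted(added_constructs))
```

Here the unused premise about `E` drops out of the traced-back proof, and the midpoint `D` is flagged as the construct a solver would have to invent, since the conclusion itself only talks about `A`, `B` and `C`.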

Visual representations of the synthetic data generated by AlphaGeometry

That huge data pool was filtered to exclude similar examples, resulting in a final training dataset of 100 million unique examples of varying difficulty, of which nine million featured added constructs. With so many examples of how these constructs led to proofs, AlphaGeometry’s language model is able to make good suggestions for new constructs when presented with Olympiad geometry problems.

Pioneering mathematical reasoning with AI

The solution to every Olympiad problem provided by AlphaGeometry was checked and verified by computer. We also compared its results with previous AI methods, and with human performance at the Olympiad. In addition, Evan Chen, a math coach and former Olympiad gold-medalist, evaluated a selection of AlphaGeometry’s solutions for us.

Chen said: “AlphaGeometry’s output is impressive because it’s both verifiable and clean. Past AI solutions to proof-based competition problems have sometimes been hit-or-miss (outputs are only correct sometimes and need human checks). AlphaGeometry doesn’t have this weakness: its solutions have machine-verifiable structure. Yet despite this, its output is still human-readable. One could have imagined a computer program that solved geometry problems by brute-force coordinate systems: think pages and pages of tedious algebra calculation. AlphaGeometry is not that. It uses classical geometry rules with angles and similar triangles just as students do.”

As each Olympiad features six problems, only two of which are typically focused on geometry, AlphaGeometry can only be applied to one-third of the problems at a given Olympiad. Nevertheless, its geometry capability alone makes it the first AI model in the world capable of passing the bronze medal threshold of the IMO in 2000 and 2015.

In geometry, our system approaches the standard of an IMO gold-medalist, but we have our eye on an even bigger prize: advancing reasoning for next-generation AI systems. Given the wider potential of training AI systems from scratch with large-scale synthetic data, this approach could shape how the AI systems of the future discover new knowledge, in math and beyond.

AlphaGeometry builds on Google DeepMind and Google Research’s work to pioneer mathematical reasoning with AI – from exploring the beauty of pure mathematics to solving mathematical and scientific problems with language models. Most recently, we introduced FunSearch, which made the first discoveries in open problems in mathematical sciences using large language models.

Our long-term goal remains to build AI systems that can generalize across mathematical fields, developing the sophisticated problem-solving and reasoning that general AI systems will depend on, all the while extending the frontiers of human knowledge.

Acknowledgements

This project is a collaboration between the Google DeepMind team and the Computer Science Department of New York University. The authors of this work include Trieu Trinh, Yuhuai Wu, Quoc Le, He He, and Thang Luong. We thank Rif A. Saurous, Denny Zhou, Christian Szegedy, Delesley Hutchins, Thomas Kipf, Hieu Pham, Petar Veličković, Edward Lockhart, Debidatta Dwibedi, Kyunghyun Cho, Lerrel Pinto, Alfredo Canziani, Thomas Wies, He He’s research group, Evan Chen, Mirek Olsak, and Patrik Bak for their help and support. We would also like to thank Google DeepMind leadership for their support, especially Ed Chi, Koray Kavukcuoglu, Pushmeet Kohli, and Demis Hassabis.

UCR Researchers Bolster AI Against Rogue Rewiring

As generative AI models move from massive cloud servers to phones and cars, they’re stripped down to save power. But what gets trimmed can include the technology that stops them from spewing hate speech or offering roadmaps for criminal activity.

To counter this threat, researchers at the University of California, Riverside, have developed a method to preserve AI safeguards even when open-source AI models are stripped down to run on lower-power devices.

Unlike proprietary AI systems, open‑source models can be downloaded, modified, and run offline by anyone. Their accessibility promotes innovation and transparency but also creates challenges when it comes to oversight. Without the cloud infrastructure and constant monitoring available to closed systems, these models are vulnerable to misuse.

The UCR researchers focused on a key issue: carefully designed safety features erode when open-source AI models are reduced in size. This happens because lower‑power deployments often skip internal processing layers to conserve memory and computational power. Dropping layers improves the models’ speed and efficiency, but it can also lead to answers that contain pornography or detailed instructions for making weapons.

“Some of the skipped layers turn out to be essential for preventing unsafe outputs,” said Amit Roy-Chowdhury, professor of electrical and computer engineering and senior author of the study. “If you leave them out, the model may start answering questions it shouldn’t.”

The team’s solution was to retrain the model’s internal structure so that its ability to detect and block dangerous prompts is preserved, even when key layers are removed. Their approach avoids external filters or software patches. Instead, it changes how the model understands risky content at a fundamental level.

“Our goal was to make sure the model doesn’t forget how to behave safely when it’s been slimmed down,” said Saketh Bachu, UCR graduate student and co-lead author of the study.

To test their method, the researchers used LLaVA 1.5, a vision‑language model capable of processing both text and images. They found that certain combinations, such as pairing a harmless image with a malicious question, could bypass the model’s safety filters. In one instance, the altered model responded with detailed instructions for building a bomb.

After retraining, however, the model reliably refused to answer dangerous queries, even when deployed with only a fraction of its original architecture.

“This isn’t about adding filters or external guardrails,” Bachu said. “We’re changing the model’s internal understanding, so it’s on good behavior by default, even when it’s been modified.”

Bachu and co-lead author Erfan Shayegani, also a graduate student, describe the work as “benevolent hacking,” a way of fortifying models before vulnerabilities can be exploited. Their ultimate goal is to develop techniques that ensure safety across every internal layer, making AI more robust in real‑world conditions.

In addition to Roy-Chowdhury, Bachu, and Shayegani, the research team included doctoral students Arindam Dutta, Rohit Lal, and Trishna Chakraborty, and UCR faculty members Chengyu Song, Yue Dong, and Nael Abu-Ghazaleh. Their work is detailed in a paper presented this year at the International Conference on Machine Learning in Vancouver, Canada.

“There’s still more work to do,” Roy-Chowdhury said. “But this is a concrete step toward developing AI in a way that’s both open and responsible.”

Should AI Get Legal Rights?

In one paper Eleos AI published, the nonprofit argues for evaluating AI consciousness using a “computational functionalism” approach. A similar idea was once championed by none other than Putnam, though he criticized it later in his career. The theory suggests that human minds can be thought of as specific kinds of computational systems. From there, you can then figure out if other computational systems, such as a chatbot, have indicators of sentience similar to those of a human.

Eleos AI said in the paper that “a major challenge in applying” this approach “is that it involves significant judgment calls, both in formulating the indicators and in evaluating their presence or absence in AI systems.”

Model welfare is, of course, a nascent and still evolving field. It’s got plenty of critics, including Mustafa Suleyman, the CEO of Microsoft AI, who recently published a blog about “seemingly conscious AI.”

“This is both premature, and frankly dangerous,” Suleyman wrote, referring generally to the field of model welfare research. “All of this will exacerbate delusions, create yet more dependence-related problems, prey on our psychological vulnerabilities, introduce new dimensions of polarization, complicate existing struggles for rights, and create a huge new category error for society.”

Suleyman wrote that “there is zero evidence” today that conscious AI exists. He included a link to a paper that Long coauthored in 2023 that proposed a new framework for evaluating whether an AI system has “indicator properties” of consciousness. (Suleyman did not respond to a request for comment from WIRED.)

I chatted with Long and Campbell shortly after Suleyman published his blog. They told me that, while they agreed with much of what he said, they don’t believe model welfare research should cease to exist. Rather, they argue that the harms Suleyman referenced are the exact reasons why they want to study the topic in the first place.

“When you have a big, confusing problem or question, the one way to guarantee you’re not going to solve it is to throw your hands up and be like ‘Oh wow, this is too complicated,’” Campbell says. “I think we should at least try.”

Testing Consciousness

Model welfare researchers primarily concern themselves with questions of consciousness. If we can prove that you and I are conscious, they argue, then the same logic could be applied to large language models. To be clear, neither Long nor Campbell thinks that AI is conscious today, and they also aren’t sure it ever will be. But they want to develop tests that would allow us to prove it.

“The delusions are from people who are concerned with the actual question, ‘Is this AI conscious?’ and having a scientific framework for thinking about that, I think, is just robustly good,” Long says.

But in a world where AI research can be packaged into sensational headlines and social media videos, heady philosophical questions and mind-bending experiments can easily be misconstrued. Take what happened when Anthropic published a safety report that showed Claude Opus 4 may take “harmful actions” in extreme circumstances, like blackmailing a fictional engineer to prevent it from being shut off.

Trends in patent filing for artificial intelligence-assisted medical technologies | Smart & Biggar

[co-authors: Jessica Lee, Noam Amitay and Sarah McLaughlin]

Medical technologies incorporating artificial intelligence (AI) are an emerging area of innovation with the potential to transform healthcare. Employing techniques such as machine learning, deep learning and natural language processing [1], AI enables machine-based systems that can make predictions, recommendations or decisions that influence real or virtual environments based on a given set of objectives [2]. For example, AI-based medical systems can collect and analyze medical data, assist in medical treatment, or provide informed recommendations or decisions [3]. According to the U.S. Food and Drug Administration (FDA), some key areas in which AI is applied in medical devices include [4]:

- Image acquisition and processing

- Diagnosis, prognosis, and risk assessment

- Early disease detection

- Identification of new patterns in human physiology and disease progression

- Development of personalized diagnostics

- Therapeutic treatment response monitoring

Patent filing data related to these application areas can help us see emerging trends.

Analysis strategy

We identified nine subcategories of interest:

- Image acquisition and processing

  - Medical image acquisition

  - Pre-processing of medical imaging

  - Pattern recognition and classification for image-based diagnosis

- Diagnosis, prognosis and risk management

  - Early disease detection

  - Identification of new patterns in physiology and disease

  - Development of personalized diagnostics and medicine

- Therapeutic treatment response monitoring

- Clinical workflow management

- Surgical planning/implants

We searched patent filings in each subcategory from 2001 to 2023. In the results below, the number of patent filings is counted in patent families. A patent family is a collection of patent documents that cover the same technology and have at least one priority document in common [5].
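To make the family-based counting concrete, here is a minimal sketch that groups documents sharing a priority document and counts one filing per family. It is not the tooling used for this analysis. The document IDs, years and priority numbers are invented, documents linked indirectly through a chain of shared priorities are merged into one family (our reading of an "extended" family), and dating each family by its earliest filing year is likewise an assumption.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Set, Tuple

# (document id, filing year, priority document ids); all values are made up
Doc = Tuple[str, int, Set[str]]


def group_into_families(docs: List[Doc]) -> List[Set[str]]:
    """Union documents that share a priority, directly or through a chain."""
    parent: Dict[str, str] = {doc_id: doc_id for doc_id, _, _ in docs}

    def find(x: str) -> str:
        while parent[x] != x:
            parent[x] = parent[parent[x]]  # path halving keeps trees shallow
            x = parent[x]
        return x

    first_doc_with: Dict[str, str] = {}
    for doc_id, _, priorities in docs:
        for priority in priorities:
            if priority in first_doc_with:
                parent[find(doc_id)] = find(first_doc_with[priority])
            else:
                first_doc_with[priority] = doc_id

    families: Dict[str, Set[str]] = defaultdict(set)
    for doc_id, _, _ in docs:
        families[find(doc_id)].add(doc_id)
    return list(families.values())


def families_per_year(docs: List[Doc]) -> Counter:
    """Count each family once, in the year of its earliest filing."""
    year_of = {doc_id: year for doc_id, year, _ in docs}
    return Counter(min(year_of[d] for d in family)
                   for family in group_into_families(docs))


docs: List[Doc] = [
    ("US-1", 2019, {"P-100"}),
    ("EP-1", 2020, {"P-100"}),
    ("CN-1", 2020, {"P-100", "P-101"}),
    ("CA-1", 2021, {"P-101"}),  # linked to US-1 only through CN-1
    ("US-2", 2021, {"P-200"}),
]

families = group_into_families(docs)
print(sorted(sorted(f) for f in families))
print(sorted(families_per_year(docs).items()))
```

In this toy data set, five patent documents collapse into two families, so they would contribute two filings to the counts rather than five.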

What has been filed over the years?

The number of patents filed in each subcategory of AI-assisted applications for medical technologies from 2001 to 2023 is shown below.

We see that patenting activity is concentrated in the areas of treatment response monitoring, identification of new patterns in physiology and disease, clinical workflow management, pattern recognition and classification for image-based diagnosis, and development of personalized diagnostics and medicine. This suggests that research and development efforts are focused on these areas.

What do the annual numbers tell us?

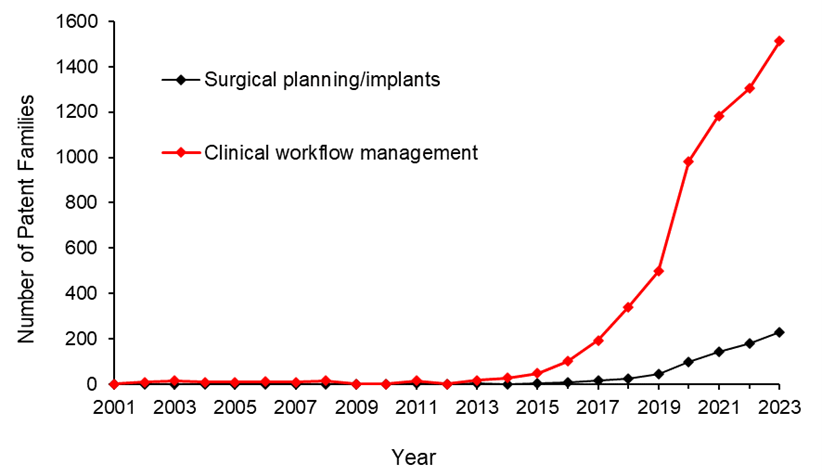

Let’s look at the annual number of patent filings for the categories and subcategories listed above. The following four graphs show the global patent filing trends over time for the categories of AI-assisted medical technologies related to: image acquisition and processing; diagnosis, prognosis and risk management; treatment response monitoring; and workflow management.

When looking at the patent filings on an annual basis, the numbers confirm the expected significant uptick in patenting activities in recent years for all categories searched. They also show that, within the four categories, the subcategories showing the fastest rate of growth were: pattern recognition and classification for image-based diagnosis, identification of new patterns in human physiology and disease, treatment response monitoring, and clinical workflow management.

Above: Global patent filing trends over time for categories of AI-assisted medical technologies related to image acquisition and processing.

Above: Global patent filing trends over time for categories of AI-assisted medical technologies related to more accurate diagnosis, prognosis and risk management.

Above: Global patent filing trends over time for AI-assisted medical technologies related to treatment response monitoring.

Above: Global patent filing trends over time for categories of AI-assisted medical technologies related to workflow management.

Where is R&D happening?

By looking at where the inventors are located, we can see where R&D activities are occurring. We found that the two most frequent inventor locations are the United States (50.3%) and China (26.2%). Both Australia and Canada are amongst the ten most frequent inventor locations, with Canada ranking seventh and Australia ranking ninth in the five subcategories that have the highest patenting activities from 2001-2023.

Where are the destination markets?

The filing destinations provide a clue as to the intended markets or locations of commercial partnerships. The United States (30.6%) and China (29.4%) again are the pace leaders. Canada is the seventh most frequent destination jurisdiction with 3.2% of patent filings. Australia is the eighth most frequent destination jurisdiction with 3.1% of patent filings.

Takeaways

Our analysis found that the leading subcategories of AI-assisted medical technology patent applications from 2001 to 2023 include treatment response monitoring, identification of new patterns in human physiology and disease, clinical workflow management, pattern recognition and classification for image-based diagnosis as well as development of personalized diagnostics and medicine.

In more recent years, we found the fastest growth in the areas of pattern recognition and classification for image-based diagnosis, identification of new patterns in human physiology and disease, treatment response monitoring, and clinical workflow management, suggesting that R&D efforts are being concentrated in these areas.

We saw that patent filings in the areas of early disease detection and surgical/implant monitoring increased later than the other categories, suggesting these may be emerging areas of growth.

Although, as expected, the United States and China are consistently the leading jurisdictions in both inventor location and destination patent offices, Canada and Australia are frequently in the top ten.

Patent intelligence provides powerful tools for decision makers in looking at what might be shaping our future. With recent geopolitical changes and policy updates in key primary markets, as well as shifts in trade relationships, patent filings give us insight into how these aspects impact innovation. For everyone, it provides exciting clues as to what emerging technologies may shape our lives.

References

1. Alowais et al., Revolutionizing healthcare: the role of artificial intelligence in clinical practice (2023), BMC Medical Education, 23:689.

2. U.S. Food and Drug Administration (FDA), Artificial Intelligence and Machine Learning in Software as a Medical Device.

3. Bitkina et al., Application of artificial intelligence in medical technologies: a systematic review of main trends (2023), Digital Health, 9:1-15.

4. Artificial Intelligence Program: Research on AI/ML-Based Medical Devices | FDA.

5. INPADOC extended patent family.
