Tools & Platforms

AI Toys Find Their Own Way After a Year of Wild Running

The AI toy industry still lacks consensus on technology routes, business models, and user groups, which gives different companies more room to innovate.

A year ago, AI toys were suspected of being mere concept hype, and mass-production data was needed to prove that the demand was real. After a year of development, the market has expanded rapidly, with more diverse product paths, larger financing rounds, and more consumers willing to pay.

“When we first launched the product a year ago, we were extremely nervous,” Li Yong, the CEO of Haivivi, told us. At that time, there were no mature cases for reference, and the team didn’t know whether the product would sell well. It simply had an intuition that combining large-model dialogue ability with plush toys would create a chemical reaction.

The first-generation product, BubblePal, was thus born, and its sales rose for two consecutive months after launch. After the Spring Festival this year, the launch of DeepSeek popularized AI among the general public and further lifted BubblePal’s monthly sales to two or three times those of previous months.

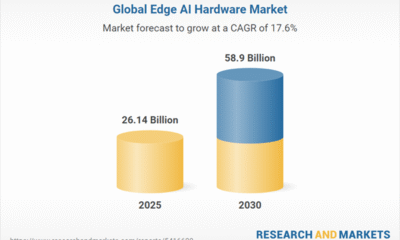

Li Yong began to firmly believe that “in the future AGI era, all toys should have AI capabilities.” According to the “White Paper on the Consumption Trends of the AI Toy Industry,” the global market size of AI toys is expected to exceed 100 billion by 2030, with a compound annual growth rate (CAGR) of over 50%. In particular, the CAGR of the Chinese market is even over 70%.
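To give a feel for what those growth rates imply, the short sketch below compounds a CAGR over a fixed horizon. The five-year horizon is an assumption chosen for illustration; the white paper's base year and currency are not stated here, so the code reports only growth multiples, not market sizes.

```python
def growth_multiple(cagr: float, years: int) -> float:
    """Return how many times a quantity grows when compounded at `cagr` for `years` years."""
    return (1.0 + cagr) ** years


# Assumed horizon of 5 years, for illustration only.
for rate in (0.50, 0.70):
    print(f"CAGR {rate:.0%}: x{growth_multiple(rate, 5):.2f} over 5 years")
```

At these rates, a market compounding at 70% a year ends the five-year horizon at roughly twice the multiple of one compounding at 50%.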

More players are starting to enter the same track as Haivivi, including entrepreneurs, merchants in Huaqiangbei, IP holders, and even game companies and Internet companies.

Among them, Ropet from Mengyou Intelligence became popular at CES 2025, demonstrating another possibility. Although it can’t speak, Ropet is defined as an AI pet. It can interact with people like a kitten or puppy, act cute and coquettish, and grow more realistically through AI capabilities and data accumulation. Beipei Technology, which received investment from Shunwei Capital, launched its AI educational companion toy, Kedou Peipei, at the end of April this year. The toy is meant not only to chat with children but also to become an AI partner that accompanies them as they grow.

These products can be called AI toys or companion-type AI hardware. They share a common premise: all strive to use AI technology to make toys, pets, and robots more like living beings, capable of more natural interactions with people. The difference is that each team looks for its own development path, targeting different scenarios and user groups to meet differentiated needs and form different business models.

These differentiated explorations have not only used AI to upgrade the interactive experience of traditional toys but also extended their functional and emotional value, allowing companies to move from the toy sector into different vertical fields.

Such AI toys are like a preview of the robot era, helping people practice coexisting with AI in the real world through an entry-level product.

AI Toys: From Concept to Market

As one of the earliest companies in China to explore the AI toy track, Haivivi launched its first-generation product, BubblePal, in July 2024, priced at 399 yuan. This bubble-shaped external AI pendant, powered by AIGC technology, brought children’s bedside dolls to life by enabling them to “speak,” and in doing so opened up a new category of AI toys.

Currently, BubblePal has sold over 200,000 units, helping Haivivi gain recognition from the capital market. On August 25, Haivivi announced the completion of a new round of 200 million yuan in financing led by funds under CICC Capital, Sequoia China Seed Fund, Huashan Capital, and Joy Capital, with participation from CMBC International and Brizan Ventures.

“I think the technology has become more mature this year, and the industry has objectively become popular. More players have entered, and we have also caught the attention of capital. With sufficient cash flow, we can do some things we originally wanted to do,” Li Yong said.

While announcing the financing, Haivivi officially launched its second-generation AI toy line, the CocoMate series. The first product is an AI plush toy co-branded with Ultraman, priced at 799 yuan. The core AI hardware is embedded in the toy and can be removed. Over the next few months, Haivivi plans to release more than a dozen products in succession to build out its product matrix at a faster pace.

Haivivi’s second-generation AI toy product: the CocoMate series, co-branded with Ultraman

In the past year, after Beipei Technology, Mengyou Intelligence, and Luobo Intelligence launched their first-generation products, all three saw sales growth that exceeded their teams’ expectations. A staff member of Luobo Intelligence told us at the company’s booth at the World Artificial Intelligence Conference at the end of July that its plush bag-charm AI toy, Fuzozo, went on sale in June, priced at 399 yuan. It sold 1,000 units within one minute of launch, and the latest batch of 30,000 units had already sold out. “If you place an order now, you won’t get it until August,” he said.

Beipei Technology’s Kedou Peipei currently comes in two models, the Curious Bear and the Friendly Rabbit. The plush doll body is fitted with a glasses-shaped, eye-protecting screen that “can blink and interact,” which caters to children’s natural preference for warm tactile sensations and concrete objects. Its hands-free dialogue design addresses the interaction pain points of lulling young children to sleep.

The Curious Bear and the Friendly Rabbit are both priced at 399 yuan. They ranked among the top 3 AI toys on Tmall two weeks after their launch. “This speed exceeded our expectations, and our conversion rate is the highest in the entire category, indicating that the product form has been verified,” said Huang Yingning, the founder of Beipei Technology. On this basis, Beipei Technology wants to create a new kind of hardware with memory and soul that accompanies the new generation of children as they grow up.

Targeting young female users, Ropet’s design focuses on “spiritual interaction” and “ultimate simplification.” It presents a round and fluffy shape, equipped with a camera, a rotatable base, and a pair of big screen eyes. It doesn’t have a dialogue function but provides “weak companionship” and emotional value through onomatopoeia and emojis on the screen eyes, stripping away the functional labels of traditional technology products.

Currently, Ropet is mainly sold in Europe, America, Japan, and South Korea, priced at 299 US dollars. The company expects to deliver 30,000 to 40,000 units this year and plans an official launch in the Chinese mainland next year. In its campaign on the overseas crowdfunding platform Kickstarter, 70% of the backers were female users who were using the platform for the first time. This contrasts sharply with the platform’s reputation for serving male geeks and confirms Ropet’s precise positioning in the female AI toy market and the emotional companionship track.

In terms of user groups, the market for AI toys is not limited to first- and second-tier cities. It also shows demand potential in lower-tier markets. Beipei Technology’s sales data shows that the proportion of county-level orders has been rising steadily. Some parents in lower-tier cities regard such products as an important way to compensate for a lack of parent-child companionship. Huang Yingning believes that “this track is wide enough, long enough, and full of potential, giving us enough time to strive for success.”

The market demand for AI toys has been verified by more products, boosting the capital market’s confidence in the AI toy track. Beyond Haivivi’s round, Beipei Technology completed 3 rounds of financing within a year, with a cumulative amount of nearly 10 million US dollars. Luobo Intelligence also announced that it had received tens of millions of yuan in angel-round financing. By a rough count, there have been more than 20 investment and financing events in the domestic AI toy field in the past year.

Creating Silicon-Based Life Forms

Compared with the previous generation of AI, large models have long-term memory and content-generation capabilities. As a result, people are no longer satisfied with developing intelligent tools and are instead trying to create silicon-based companions with a sense of life and a physical form. Embodied intelligence is the ultimate pursuit of silicon-based companions, and AI toys are the silicon-based companions that can reach the market more quickly. The ability to build vivid silicon-based life forms is the entry ticket to the AI toy track.

Beipei Technology, Mengyou Intelligence, and Haivivi all believe that in this track, technology can form a barrier only in the short term, and pure tool-type products are more likely to get caught in industry involution. Creating vivid silicon-based life forms is a comprehensive test of technological capabilities, scenario insights, and user positioning. Once achieved, it can bind the product more closely to users and establish a solid brand image.

Huang Yingning said that she has long wanted to build a ToC application. Amid the current technological shift, she realized that a new generation of ToC applications might emerge, ones that provide not only tool value but also a stronger sense of companionship.

Kedou Peipei is an AI partner for children born from this concept. The Curious Bear and the Friendly Rabbit have different personalities. Different toy combinations are like bringing children friends with different personalities, each influencing them along a different personality dimension.

Kedou Peipei AI toys, the Friendly Rabbit and the Curious Bear

Huang Yingning also hopes that the interaction between AI toys and users can be similar to the interaction between people in reality. “If we have a personal secretary, he might do things for us before we even realize it. If you are on a diet recently, he won’t order braised pork with preserved vegetables for your lunch. This is a very proactive interaction,” Huang Yingning explained.

Under this thinking, the core value of Kedou Peipei lies in its behind-the-scenes software system and data accumulation. Through these, it can build a unique shared memory of growing up with a child and communicate more proactively based on that memory.

One question that Haivivi wants to answer this year is how to make AI toys more lifelike now that large-model technology has improved. CocoMate is more like a brain placed inside a plush toy. In the future, Haivivi will continuously iterate on this brain until it has the four core characteristics of a living being: “autonomy, sociability, value alignment, and finiteness.”

Li Yong believes that the in-depth thinking ability of current large models can basically meet the independent thinking needs of AI toys. The continuous evolution of the models and the long-term accumulation of product-side data will bring AI toys closer to a state of autonomous learning. Each AI toy might have its own Agent behind it, one that can think and act autonomously.

On this basis, toys can be interconnected to share data. In Li Yong’s vision, AI toys can actively communicate with each other. Haivivi plans to build “Haivivi and Her Friends,” enabling all IP toys equipped with its software algorithms to interact socially across brands. For example, Disney characters can “talk” to Ultraman.

This design makes toys an intermediary of human-to-human social interaction. AI toys and users may even jointly form a new social circle, where toys can communicate freely with each other and with users. This calls to mind the societies shown in science-fiction movies, where humans and robots coexist.

Then comes value alignment. If people want to maintain a long-term friendship, they need to gradually form a consensus or align their values as they get along. AI will likewise adjust continuously in its interactions with users to find common ground. This is a different expression of the same need behind Kedou Peipei’s desire to share a unique memory with users.

Ultimately, AI toys should have their own memory and soul. So even if two people have Ultraman toys, after three years, each person’s Ultraman will be different.

Mengyou Intelligence interprets “value alignment” as a cultivation model, seen from the perspective of the interaction between pets and humans. “In fact, people prefer things that are similar to themselves rather than complementary. So Ropet will complete its character shaping as it gets along with users. Initially, there might be a tentative period like when a dog gets along with a human, and then it will continuously adjust its behavior according to the owner’s feedback,” said Zhou Yushu, a partner at Zhenzhi Venture Capital and a co-founder of Mengyou Intelligence.

Ropet, a product of Mengyou Intelligence

The AI pets of Mengyou Intelligence can share a unified “brain,” and users can achieve data sharing through one account without losing their preference settings.

Finally, AI toys need to show finiteness, which is also the core difference between them and all-knowing, all-powerful general AI assistants. In Li Yong’s view, “forgetting” is an important manifestation of finiteness. Intelligent agents need to store memories for AI toys selectively, according to parameters such as time, mention frequency, and emotional intensity, “just like active and passive forgetting in humans.”
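The selective forgetting Li Yong describes can be pictured with a small scoring sketch. This is not Haivivi's implementation: the `Memory` fields, the 30-day half-life, and the weights are all hypothetical, chosen only to show how time, mention frequency, and emotional intensity might combine into a single keep-or-forget decision.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    text: str
    age_days: float   # time since the memory was formed
    mentions: int     # how often the user has brought it up again
    emotion: float    # emotional intensity, assumed to lie in [0, 1]


def retention_score(m: Memory, half_life_days: float = 30.0) -> float:
    """Hypothetical score: recent, frequently mentioned, emotional memories rank higher."""
    recency = 0.5 ** (m.age_days / half_life_days)   # halves every half_life_days
    frequency = 1.0 - 1.0 / (1.0 + m.mentions)       # rises toward 1 as mentions grow
    return 0.4 * recency + 0.3 * frequency + 0.3 * m.emotion


def forget(memories: list[Memory], keep: int) -> list[Memory]:
    """Keep only the `keep` highest-scoring memories; the rest are 'forgotten'."""
    return sorted(memories, key=retention_score, reverse=True)[:keep]


memories = [
    Memory("first day of school", age_days=300, mentions=12, emotion=0.9),
    Memory("ate noodles for lunch", age_days=2, mentions=0, emotion=0.1),
    Memory("it rained last spring", age_days=200, mentions=0, emotion=0.05),
]
kept = forget(memories, keep=2)
print([m.text for m in kept])
```

With these made-up weights, an old but often-mentioned, emotionally strong memory survives, a trivial recent one survives for now, and an old, unremarkable one is dropped.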

Ropet also emphasizes the biological characteristics of the product, and its greatest finiteness comes from deliberately abandoning the dialogue function. The product builds a sense of life on still-developing multimodal interaction: visually, it perceives the environment through the camera; aurally, the microphone captures the user’s voice and the toy responds with onomatopoeic sounds; in terms of movement, it supports basic feedback such as turning its head and blinking; and when the user touches it, Ropet reacts in real time.

Zhou Yushu believes that in the foreseeable future, as silicon-based creatures become smarter, humans will increasingly feel a lack of a sense of value. Therefore, the core of Ropet is not to serve people but to let people take care of the robot pet and find emotional satisfaction in the process of raising it. Following this logic, Ropet has determined that its target users are neither children nor the elderly but young women, who are more willing to keep pets.

Besides the software level, AI toys also pursue a sense of life at the hardware level. One obvious sign is that many AI toys choose a plush appearance. This warmer-looking material feels more like a living creature, which not only meets the emotional needs of children but also touches the hearts of adults. Many products targeting specific user groups have built differentiated designs on this basis.

For example, Ropet, with a plush ball as its basic shape, lets users buy replacement plush shells to personalize the product. Fuzozo, which targets Generation Z women, also has a plush appearance and can be carried as a bag charm, fitting the current trend.

Each AI Toy Should Blaze Its Own Trail

Behind the explosive growth of the AI toy industry lie non-convergent development paths. Pursuing a sense of life is the underlying tone of the entire industry. On this basis, different companies have arrived at completely different development ideas based on their different understandings of technology, users, and the market.

Currently, the industry has differentiated into three major directions: AI pets, AI toys, and AI robots. Both Beipei Technology and Haivivi belong to the direction of AI toys, but their development paths are also quite different. Mengyou Intelligence is in the direction of AI pets, with a very different idea from the previous two.

Starting from toys, Beipei Technology pays more attention to enabling AI to play an enlightening and guiding role in children’s growth. It productizes the accumulation of child

InstaLILY Raises $25M to Bring AI Teammates to the Frontlines of Distribution

Insider Brief

- InstaLILY AI raised $25M Series A led by Insight Partners, with backing from Perceptive Ventures and Marvin Ventures, to expand its catalog of InstaWorkers™ and deepen enterprise integrations.

- Unlike copilots or chatbots, InstaWorkers™ are domain-trained AI Teammates that execute full workflows inside legacy systems (ERPs, CRMs, ticketing tools) across industries like construction, OEM equipment, and insurance — delivering measurable results such as 70% faster claims processing.

- Purpose-built for execution, InstaLILY is pioneering “Code-as-Work” by embedding AI directly into sales, service, and operations teams — handling quoting, issue triage, and exception management, while extending into multimodal use cases like voice and video support for field service and contact centers.

PRESS RELEASE — InstaLILY AI, the maker of AI Teammates for the world’s most operationally intensive industries, has announced a $25 million Series A funding round led by global software investor Insight Partners, with participation from Perceptive Ventures and Marvin Ventures.

InstaLILY is pioneering a new way to bring AI into the enterprise: instead of stitching together tools or building automation flows, companies can now hire vertical-specific AI Teammates, called InstaWorkers™, that execute actual work inside legacy systems of record such as ERPs, CRMs, or any existing software tools.

Purpose-Built for Execution, not just Automation

The platform is purpose-built for distribution-heavy verticals where automation has historically failed. These industries — from physical goods like industrial parts to services like insurance and healthcare — depend on large catalogs, specialized knowledge and fragmented tools, creating high-volume, multi-step work that consumes expert human time. InstaWorkers™ solve this by being trained on the domain-specific processes unique to these industries, navigating their complex software environments without rip-and-replace, and executing full workflows autonomously.

Here’s how InstaWorkers™ get the job done:

- Understand Your Business: They are trained on the domain-specific processes, documentation, and systems unique to your industry.

- Work Across Your Tools: They navigate fragmented software environments (CRMs, ERPs, ticketing platforms) without requiring costly rip-and-replace projects.

- Execute, Not Just Advise: They autonomously complete full workflows, with options for human-in-the-loop oversight, moving beyond simple suggestions to take decisive action.

“We kept hearing the same thing: AI copilots are useful, but they don’t do the work,” said Amit Shah, Founder and CEO of InstaLILY. “InstaWorkers™ are different. They’re AI Teammates — built to execute, not just suggest next steps. That’s the promise of Code-as-Work: AI that radically expands human capacity, not replaces it.”

InstaWorkers™ on the Job

Customers are already deploying teams of InstaWorkers™ to augment their sales, service, and operations staff — with many seeing immediate results:

- A $10B+ construction-supply distributor is empowering its 1,500+ managers with an AI Sales support team. The InstaWorkers™ turn sales data into actionable follow-ups, allowing managers to spend more time on strategic account growth and coaching their teams.

- One of the largest global OEM equipment platforms deploys AI service specialists to support its field technicians. These InstaWorkers™ analyze complex fault descriptions and predict the most likely replacement part from thousands of SKUs, empowering technicians to focus on high-stakes repairs and customer service.

- A PE-backed insurance and healthcare services provider staffed an AI claims operations team to handle high-volume denials. The InstaWorkers™ extract policy and claim data, evaluate it against coverage rules, flag appealable cases, and generate compliant responses — reducing manual review time by 70% and accelerating resolution cycles.

Real Execution, Not Just Assistance

InstaLILY doesn’t just assist — it executes. While horizontal AI platforms focus on summarization, chat, task routing, or surface-level automation, InstaLILY delivers deep, decision-oriented execution. InstaWorkers™ take ownership of the high-stakes, high-variation workflows that drive revenue and service outcomes, such as quoting, issue triage, part validation, and exception handling. This isn’t robotic process automation; it’s domain-trained intelligence built to operate across legacy systems, tribal processes, and real-world complexity.

“These aren’t chatbots,” said Sumantro Das, Co-founder and COO of InstaLILY. “They’re AI Teammates who are embedded in the team and doing the work, not floating around it.”

Investor Perspective

“Hiring a domain-trained AI Teammate is one of those rare ideas that’s both intuitive and built to scale,” said Crissy Costa Behrens, Principal at Insight Partners. “InstaLILY is executing where horizontal AI tools stall — delivering vertical AI that doesn’t just assist but actually owns outcomes. With InstaWorkers™, InstaLILY is helping build a better future of work: grounded in actions, not suggestions.”

What’s Next

With this Series A funding, InstaLILY will expand its catalog of pre-trained InstaWorkers™ across new verticals, deepen integration support for common enterprise systems, and accelerate adoption across sales, service, and operations teams to help customers scale AI Teammate deployments without disrupting their existing workflows. The team is now extending multimodal capabilities even further, enabling agents to process voice and video inputs — unlocking new use cases in field service, contact centers, and human-agent-robot collaboration.

About InstaLILY

InstaLILY is the platform for hiring AI Teammates who already know your industry vertical. Its domain-trained AI agents — called InstaWorkers™ — execute the core sales, service, and operations workflows of distribution-heavy and regulated businesses. Built for execution and immediate impact, InstaWorkers™ plug into your existing tools to deliver value from day one. Learn more at www.instalily.ai

About Insight Partners

Insight Partners is a global software investor partnering with high-growth technology, software, and Internet startup and ScaleUp companies that are driving transformative change in their industries. As of December 31, 2024, the firm has over $90B in regulatory assets under management. Insight Partners has invested in more than 800 companies worldwide and has seen over 55 portfolio companies achieve an IPO. Headquartered in New York City, Insight has offices in London, Tel Aviv, and the Bay Area. Insight’s mission is to find, fund, and work successfully with visionary executives, providing them with tailored, hands-on software expertise along their growth journey, from their first investment to IPO. For more information on Insight and all its investments, visit insightpartners.com or follow us on X @insightpartners.

Gen AI: Faster Than a Speeding Bullet, Not So Great at Leaping Tall Buildings

FEATURE

by Christine Carmichael

This article was going to be a recap of my March 2025 presentation at the Computers in Libraries conference (computersinlibraries.infotoday.com).

However, AI technology and the industry are experiencing not just rapid growth, but also rapid change. And as we info pros know, not all change is good. Sprinkled throughout this article are mentions of changes since March, some in the speeding-bullet category, others in the not-leaping-tall-buildings one.

Getting Started

I admit to being biased—after all, Creighton University is in my hometown of Omaha—but what makes Creighton special is how the university community lives out its Jesuit values. That commitment to caring for the whole person, caring for our common home, educating men and women to serve with and for others, and educating students to be agents of change has never been more evident in my 20 years here than in the last 2–3 years.

An adherence to those purposes has allowed people from across the campus to come together easily and move forward quickly. Not in a “Move fast and break things” manner, but with an attitude of “We have to face this together if we are going to teach our students effectively.” The libraries’ interest in AI officially started after OpenAI launched ChatGPT, built on GPT-3.5, in November 2022.

Confronted with this new technology, my library colleagues and I gorged ourselves on AI-related information, attending workshops from different disciplines, ferreting out article “hallucinations,” and experimenting with ways to include information about generative AI (gen AI) tools in information literacy instruction sessions. Elon University shared its checklist, which we then included in our “Guide to Artificial Intelligence” (culibraries.creighton.edu/AI).

(Fun fact: Since August 2024, this guide has received approximately 1,184 hits—it’s our fourth-highest-ranked guide.)

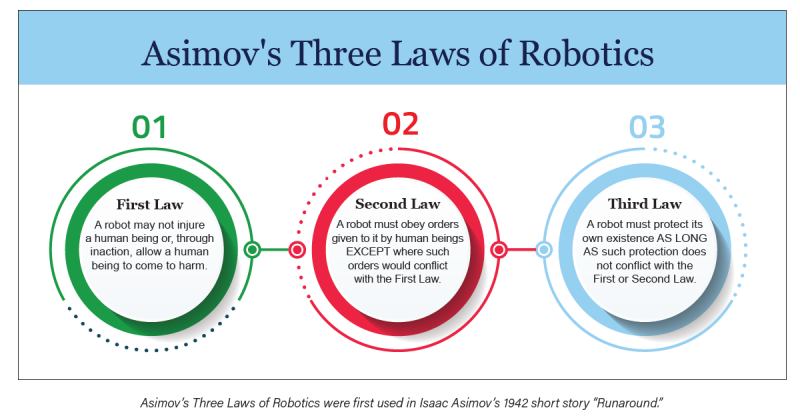

Two things inspired and convinced me to do more and take a lead role with AI at Creighton. First was my lifelong love affair with science fiction literature. Anyone familiar with classic science fiction should recognize Asimov’s Three Laws of Robotics. First used in Isaac Asimov’s 1942 short story “Runaround,” they are said to have come from the Handbook of Robotics, 56th Edition, published in 2058. Librarians should certainly appreciate this scenario of an author of fiction citing a fictional source. In our current environment, where gen AI “hallucinates” citations, it doesn’t get more AI than that!

Whether or not these three laws/concepts really work in a scientific sense, you could extrapolate some further guidance for regulating development and deployment of these new technologies to mitigate harm. Essentially, it’s applying the Hippocratic oath to AI.

The second inspiration came from a February 2023 CNN article by Samantha Murphy Kelly (“The Way We Search for Information Online Is About to Change”; cnn.com/2023/02/09/tech/ai-search/index.html), which claims that the way we search for information is never going to be the same. I promptly thought to myself, “Au contraire, mon frère, we have been here before,” and posted a response on Medium (“ChatGPT Won’t Fix the Fight Against Misinformation”; medium.com/@christine.carmichael/chatgpt-wont-fix-the-fight-against-misinformation-15c1505851c3).

What Happened Next

Having a library director whose research passion is AI and ethics enabled us to act as a central hub for convening meetings and to keep track of what is happening with AI throughout the campus. We are becoming a clearinghouse for sharing information about projects, learning opportunities, student engagement, and faculty development, as well as pursuing library-specific projects.

After classes started in fall 2024, the director of the Magis Core Curriculum and the program director for COM 101 (a co-curricular course requirement that meets oral communication and information literacy learning objectives) approached two research and instruction librarians, one being me. They asked us to create a module for our Library Encounter Online course (required in COM 101) to explain some background of AI and how large language models (LLMs) work. The new module was in place for spring 2025 classes.

In November 2024, the library received a $250,000 Google grant, which allowed us to subscribe to and test three different AI research assistant tools: Scopus AI, Keenious, and Scite. We split into three teams, each one responsible for becoming the experts on a particular tool. After presenting train-the-trainer sessions to each other, we restructured our teams to discuss the kinds of instruction options we could use: long, short, online (bite-sized).

We turned on Scopus AI in late December 2024. Team Scopus learned the tool’s ins and outs and describes it as “suitable for general researchers, upper-level undergraduates, graduate/professional students, medical residents, and faculty.” Aspects of the tool are particularly beneficial for discovering trends and gaps in research areas. One concern the team still has is the generation of irrelevant references, which it noticed particularly when comparing the same types of searches in “regular” Scopus vs. Scopus AI.

Team Keenious was very enthusiastic and used an evaluation rubric from Rutgers University (credit where it’s due!). The librarians’ thoughts currently are that this tool is doing fairly well at “being a research recommendation tool” for advanced researchers. It has also successfully expanded the “universe” of materials used in systematic reviews. However, reservations remain as to its usefulness for undergraduate assignments, particularly when that group of students may not possess the critical thinking skills necessary to effectively use traditional library resources.

Scite is the third tool in our pilot. The platform is designed to help researchers evaluate the reliability of research papers using three categories: citations supporting claims, citations contrasting claims, and citations without either. Team Scite.ai liked the tool, but recognized that it has a sizable learning curve. One Team Scite.ai member summed up their evaluation like this: “Much like many libraries, Scite.ai tries to be everything to everyone, but it lacks the helpful personal guide-on-the-side that libraries provide.”

The official launch and pilot of these tools began in April 2025 for faculty. We will introduce them to students in the fall 2025 semester. However, because the Scopus AI tool is already integrated and sports a prominent position on the search page, we anticipate folks using that tool before we get an opportunity to market it.

The Google funding also enabled the Creighton University Libraries to partner with several departments across campus in support of a full-day faculty development workshop in June. “Educating the Whole Person in an AI-Driven World” brought almost 150 faculty and staff together for the common purpose of discerning (another key component of Jesuit education) where and how we will integrate AI literacies into the curriculum. In addition to breakout sessions, we were introduced to a gen AI SharePoint site, the result of a library collaboration with the Center for Faculty Excellence.

ETHICS AND AI

Our director is developing a Jesuit AI Ethics Framework. Based on Jesuit values and Catholic Social Teaching, the framework also addresses questions asked by Pope Francis and the Papal Encyclical, Laudato si’, which asks us to care for our common home by practicing sustainability. Because of our extensive health sciences programs, a good portion of this ethical framework will focus on how to maintain patient and student privacy when using AI tools.

Ethics is a huge umbrella. These are things we think bear deeper dives and reflection:

The labor question: AI as a form of automation

We had some concerns and questions about AI as it applies to the workforce. Is it good if AI takes people’s jobs or starts producing art? Where does that leave the people affected? Note that the same concerns were raised about automation in the Industrial Revolution and when digital art tablets hit the market.

Garbage in, garbage out (GIGO): AIs are largely trained on internet content, and much of that content is awful.

We were particularly concerned about the lack of transparency: If we don’t know what an AI model is trained on, can we trust it, especially in fields like healthcare and law, where there are very serious real-world implications? In the legal field, AI has reinforced existing biases, generally assuming People of Color (POCs) to be guilty.

Availability bias is also part of GIGO. AIs are biased by the nature of their training data and the nature of the technology itself (pattern recognition). Any AI trained on the internet is biased toward information from the modern era. Scholarly content is biased toward OA, and OA is biased by different field/author demographics that indicate who is more or less likely to publish OA.

AI cannot detect sarcasm, which may contribute to misunderstanding about meaning. AI chatbots and LLMs have repeatedly shown sexist, racist, and even white supremacist responses due to the nature of people online. What perspectives are excluded from training data (non-English content)? What about the lack of developer diversity (largely white Americans and those from India and East Asia)?

Privacy, illegal content sourcing, financial trust issues

Kashmir Hill’s 2023 book, Your Face Belongs to Us: A Secretive Startup’s Quest to End Privacy (Penguin Random House), provides an in-depth exposé on how facial recognition technology is being (mis)used. PayPal founder Peter Thiel invested $200,000 into a company called SmartCheckr, which eventually became Clearview AI, specializing in facial recognition. Thiel was a Facebook board member with a fiduciary responsibility at the same time that he was investing money in a company that was illegally scraping Facebook content. There is more to the Clearview AI story, including algorithmic bias issues with facial recognition. Stories like this raise questions about how to regulate this technology.

Environmental impacts

What about energy and water use and their climate impacts? This is from Scientific American: “A continuation of the current trends in AI capacity and adoption are set to lead to NVIDIA shipping 1.5 million AI server units per year by 2027. These 1.5 million servers, running at full capacity, would consume at least 85.4 terawatt-hours of electricity annually—more than what many small countries use in a year, according to the new assessment” (scientificamerican.com/article/the-ai-boom-could-use-a-shocking-amount-of-electricity).
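To put the quoted figures in everyday terms, here is a rough back-of-envelope sketch in Python. It uses only the two numbers from the Scientific American quote (1.5 million servers, 85.4 terawatt-hours per year). The function name and the 365-day year are our own assumptions for illustration, not part of the cited assessment.

```python
# Back-of-envelope check of the quoted estimate (illustrative only).
SERVERS = 1_500_000        # AI server units, from the quote
ANNUAL_TWH = 85.4          # terawatt-hours per year, from the quote
HOURS_PER_YEAR = 24 * 365  # assumption: non-leap year, running around the clock


def average_kw_per_server(annual_twh, servers, hours=HOURS_PER_YEAR):
    """Average continuous power draw per server, in kilowatts."""
    annual_kwh = annual_twh * 1e9  # 1 TWh = 1,000,000,000 kWh
    return annual_kwh / servers / hours


kw = average_kw_per_server(ANNUAL_TWH, SERVERS)
print(f"{kw:.1f} kW per server")  # prints "6.5 kW per server"
```

Under these assumptions, each server would draw about 6.5 kilowatts around the clock, which works out to roughly 57,000 kilowatt-hours per server per year.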

In May 2025, MIT Technology Review released a new report, “Power Hungry: AI and Our Energy Future” (technologyreview.com/supertopic/ai-energy-package), which states, “The rising energy cost of data centers is a vital test case for how we deal with the broader electrification of the economy.”

It’s Not All Bad News

There are days I do not even want to open my newsfeed because I know I will find another article detailing some new AI-infused horror, like this one from Futurism talking about people asking ChatGPT how to administer their own facial fillers (futurism.com/neoscope/chatgpt-advice-cosmetic-procedure-medicine). Then there is this yet-to-be-peer-reviewed article about what happens to your brain over time when using an AI writing assistant (“Your Brain on ChatGPT: Accumulation of Cognitive Debt When Using an AI Assistant for Essay Writing Task”; arxiv.org/pdf/2506.08872v1). It is reassuring to know that there are many, many people who are not invested in the hype, who are paying attention to how gen AI is or is not performing.

There are good things happening. For instance, ResearchRabbit and Elicit, two well-known AI research assistant tools, use the corpus of Semantic Scholar’s metadata as their training data. Keenious, one of the tools we are piloting, uses OpenAlex for its training corpus.

On either coast of the U.S. are two outstanding academic research centers focusing on ethics: Santa Clara University’s Markkula Center for Applied Ethics (scu.edu/ethics/focus-areas/internet-ethics) and the Berkman Klein Center for Internet and Society at Harvard University (cyber.harvard.edu).

FUTURE PLANS

We have projects and events planned for the next 2 years:

- Library director and School of Medicine faculty hosting a book club using Teaching With AI: Navigating the Future of Education

- Organizing an “unconference” called Research From All Angles Focusing on AI

- Hosting a Future of Work conference with Google

- Facilitating communities of practice around different aspects of AI: teaching, research, medical diagnostics, computing, patient interaction, etc.

As we get further immersed, our expectations of ourselves need to change. Keeping up with how fast the technology changes will be nigh on impossible. We must give ourselves and each other the grace to accept that we will never “catch up.” Instead, we will continue to do what we excel at—separating the truth from the hype, trying the tools for ourselves, chasing erroneous citations, teaching people to use their critical thinking skills every time they encounter AI, learning from each other, and above all, sharing what we know.

Is AI ‘The New Encyclopedia’? As The School Year Begins, Teachers Debate Using The Technology In The Classroom

As the school year gets underway, teachers across the country are dealing with a pressing quandary: whether or not to use AI in the classroom.

Ludrick Cooper, an eighth-grade teacher in South Carolina, told CNN that he’s been against the use of AI inside and outside the classroom for years, but is starting to change his tune.

“This is the new encyclopedia,” he said of AI.

There are certainly benefits to using AI in the classroom. It can make lessons more engaging, make access to information easier, and help with accessibility for those with visual impairments or conditions like dyslexia.

However, experts also have concerns about the negative impacts AI can have on students. Widening education inequalities, mental health impacts, and easier methods of cheating are among the main drawbacks of the technology.

“AI is a little bit like fire. When cavemen first discovered fire, a lot of people said, ‘Ooh, look what it can do,’” University of Maine Associate Professor of Special Education Sarah Howorth told CNN. “And other people are like, ‘Ah, it could kill us.’ You know, it’s the same with AI.”

Several existing platforms have developed specific AI tools to be used in the classroom. In July, OpenAI launched “Study Mode,” which offers students step-by-step guidance on classwork instead of just giving them an answer.

The company has also partnered with Instructure, the company behind the learning platform Canvas, to create a new tool called the LLM-Enabled Assignment. The tool will allow teachers to create AI-powered lessons while simultaneously tracking student progress.

“Now is the time to ensure AI benefits students, educators, and institutions, and partnerships like this are critical to making that happen,” OpenAI Vice President of Education Leah Belsky said in a statement.

While some teachers are excited by these advancements and the possibilities they create, others aren’t quite sold.

Stanford University Vice Provost for Digital Education Matthew Rascoff worries that tools like this one remove the social aspect of education. By helping just one person at a time, AI tools don’t give students opportunities to work on things like collaboration skills, which will be vital to their success as productive members of society.
