Welcome back to In the Loop, TIME’s new twice-weekly newsletter about the world of AI. We’re publishing installments both as stories on Time.com and as emails.

If you’re reading this in your browser, you can subscribe to have the next one delivered straight to your inbox.

What to Know:

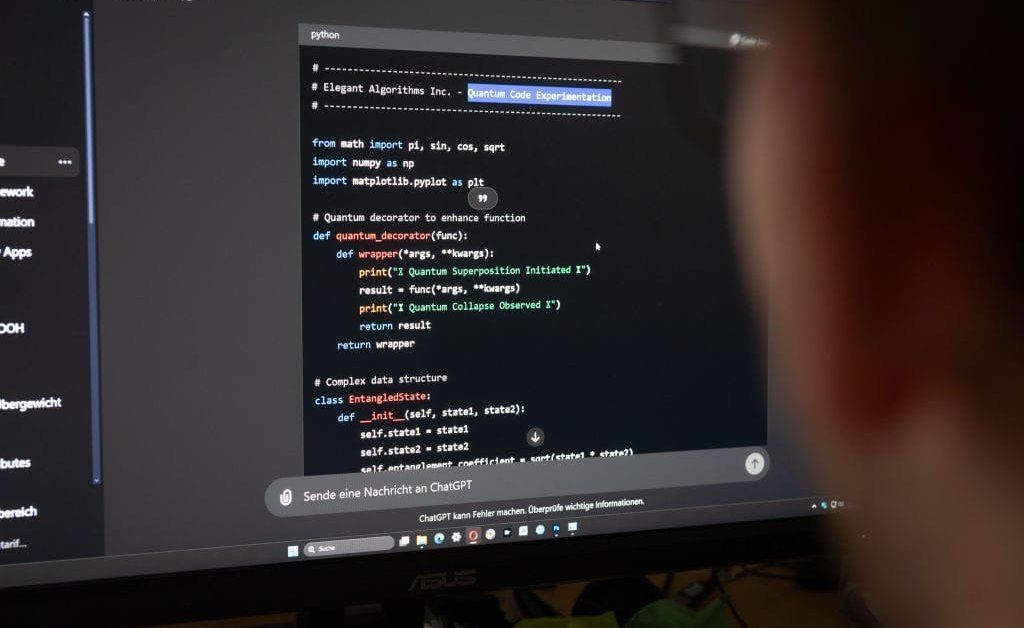

Could coding with AI slow you down?

In just the last couple of years, AI has totally transformed the world of software engineering. Writing your own code (from scratch, at least) has become quaint. Now, with tools like Cursor and Copilot, human developers can marshal AI to write code for them. The human role is now to understand what to ask the models for the best results, and to iron out the inevitable problems that crop up along the way.

Conventional wisdom holds that this has significantly accelerated software engineering. But has it? A new study by METR, published last week, set out to measure the degree to which AI speeds up the work of experienced software developers. The results were unexpected.

What the study found — METR measured the speed of 16 developers working on complex software projects, both with and without AI assistance. After finishing their tasks, the developers estimated that access to AI had accelerated their work by 20% on average. In fact, the measurements showed that AI had slowed them down by about 20%. The results were roundly met with surprise in the AI community. “I was pretty skeptical that this study was worth running, because I thought that obviously we would see significant speedup,” wrote David Rein, a staffer at METR, in a post on X.
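The size of that gap is easier to see with numbers. Below is a minimal back-of-the-envelope sketch, assuming both percentages describe changes in how long a task takes (so "20% faster" means 20% less time and "20% slower" means 20% more time; the study's own definitions may differ in detail). The 60-minute task and the `adjusted_minutes` helper are hypothetical, used only for illustration.

```python
# Sketch of the perception gap in the METR result.
# Assumption: both figures are read as changes in task completion time,
# i.e. "20% faster" = 20% less time and "20% slower" = 20% more time.
# The 60-minute baseline task is hypothetical, not a figure from the study.


def adjusted_minutes(baseline: float, pct_change: float) -> float:
    """Apply a percentage change (e.g. -20 or +20) to a baseline time in minutes."""
    return baseline * (1 + pct_change / 100)


if __name__ == "__main__":
    baseline = 60.0  # hypothetical task that takes an hour without AI
    perceived = adjusted_minutes(baseline, -20)  # what developers believed
    measured = adjusted_minutes(baseline, +20)   # what the timings showed
    print(f"perceived: {perceived:.0f} min, measured: {measured:.0f} min")
    print(f"measured / perceived: {measured / perceived:.2f}x")
```

Under that reading, a task the developers believed had taken about 48 minutes actually took about 72, or roughly 1.5 times as long as they thought.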

Why did this happen? — The simple technical answer seems to be: while today’s LLMs are good at coding, they’re often not good enough to intuit exactly what a developer wants and answer perfectly in one shot. That means they can require a lot of back-and-forth, which might take longer than if you just wrote the code yourself. But participants in the study offered several more human hypotheses, too. “LLMs are a big dopamine shortcut button that may one-shot your problem,” wrote Quentin Anthony, one of the 16 coders who participated in the experiment. “Do you keep pressing the button that has a 1% chance of fixing everything? It’s a lot more enjoyable than the grueling alternative.” (It’s also easy to get sucked into scrolling social media while you wait for your LLM to generate an answer, he added.)

What it means for AI — The study’s authors urged readers not to generalize too broadly from the results. For one, the study only measured the impact of LLMs on experienced coders, not new ones, who might benefit more from their help. And developers are still learning how to get the most out of LLMs, which are relatively new tools with strange idiosyncrasies. Other METR research, they noted, shows that the length of software tasks AI can complete has been doubling every seven months, meaning that even if today’s AI is detrimental to one’s productivity, tomorrow’s might not be.
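To make "doubling every seven months" concrete, here is a minimal sketch of the compounding, assuming the trend is a clean exponential that simply continues (a strong assumption; it describes a past trend, not a guarantee). The one-hour starting figure and the `projected_task_length` name are hypothetical, chosen only for illustration.

```python
# Back-of-the-envelope sketch of "doubling every seven months".
# Assumption: task length grows as a pure exponential, H(t) = H0 * 2**(t / 7),
# with t in months. The 1-hour starting horizon is hypothetical, not a METR figure.

DOUBLING_MONTHS = 7.0


def projected_task_length(start_hours: float, months_ahead: float) -> float:
    """Return the task length implied by a steady doubling every DOUBLING_MONTHS."""
    return start_hours * 2 ** (months_ahead / DOUBLING_MONTHS)


if __name__ == "__main__":
    start = 1.0  # hypothetical: AI can handle a 1-hour task today
    for months in (0, 7, 12, 14, 24):
        hours = projected_task_length(start, months)
        print(f"after {months:>2} months: ~{hours:.1f} hours")
```

At that rate, one year is a little more than a tripling (about 3.3x) and two years is roughly a tenfold increase (about 10.8x), which is the intuition behind the point that tomorrow’s AI might not slow developers down.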

Who to Know:

Jensen Huang, CEO of Nvidia

Huang finds himself in the news today after he proclaimed on CNN that the U.S. government doesn’t “have to worry” about the possibility of the Chinese military using the market-leading AI chips that his company, Nvidia, produces. “They simply can’t rely on it,” he said. “It could be, of course, limited at any time.”

Chipping away — Huang was arguing against policies that have seen the U.S. heavily restrict the export of graphics processing units, or GPUs, to China, in a bid to hamstring Beijing’s military capabilities and AI progress. Nvidia claims that these policies have simply incentivized China to build its own rival chip supply chain, while hurting U.S. companies and by extension the U.S. economy.

Self-serving argument — Huang, of course, would say that, as CEO of a company that has lost out on billions as a result of being blocked from selling its most advanced chips to the Chinese market. He tried to convince President Donald Trump of his view in a recent meeting at the White House, Bloomberg reported.

In fact… The Chinese military does use Nvidia chips, according to research by Georgetown’s Center for Security and Emerging Technology, which analyzed 66,000 military purchasing records to come to that conclusion. A large black market has also sprung up to smuggle Nvidia chips into China since the export controls took effect, the New York Times reported last year.

AI in Action

Anthropic’s AI assistant, Claude, is transforming the way the company’s scientists keep up with the thousands of pages of scientific literature published every day in their field.

Instead of reading papers, many Anthropic researchers now simply upload them into Claude and chat with the assistant to distill the main findings. “I’ve changed my habits of how I read papers,” Jan Leike, a senior alignment researcher at Anthropic, told TIME earlier this year. “Where now, usually I just put them into Claude, and ask: can you explain?”

To be clear, Leike adds, sometimes Claude gets important stuff wrong. “But also, if I just skim-read the paper, I’m also gonna get important stuff wrong sometimes,” Leike says. “I think the bigger effect here is, it allows me to read much more papers than I did before.” That, he says, is having a positive impact on his productivity. “A lot of time when you’re reading papers is just about figuring out whether the paper is relevant to what you’re trying to do at all,” he says. “And that part is so fast, you can just focus on the papers that actually matter.”

What We’re Reading

Microsoft and OpenAI’s AGI Fight Is Bigger Than a Contract — By Steven Levy in Wired

Steven Levy goes deep on the “AGI” clause in the contract between OpenAI and Microsoft, which could decide the fate of their multibillion-dollar partnership. It’s worth reading to better understand how both sides are thinking about defining AGI. They could do worse than Levy’s own description: “a technology that makes Sauron’s Ring of Power look like a dime-store plastic doodad.”