Tools & Platforms

AI Makes Us Wiser — Just Not The Way We Think

In his 2017 New York Times bestseller, Life 3.0: Being Human in the Age of Artificial Intelligence, MIT professor Max Tegmark argued that AI has the potential to transform our future more than any other technology. Five years before ChatGPT made the risks and opportunities of AI the overriding topic of conversation in companies and society, Tegmark asked the same questions everyone is asking today:

What career advice should we give our kids? Will machines eventually outsmart us at all tasks, replacing humans on the job market and perhaps altogether? How can we make AI systems more robust? Should we fear an arms race in lethal autonomous weapons? Will AI help life flourish like never before or give us more power than we can handle?

In the book’s press materials, readers are asked: “What kind of future do you want?” with the promise that “This book empowers you to join what may be the most important conversation of our time.” But was it Tegmark’s book that empowered us to join a conversation about the implications of AI? Or was it the AI technology itself?

The Question Concerning Technology

I have previously referred to the German philosopher Martin Heidegger in my articles here at Forbes. In “This Existential Threat Calls For Philosophers, Not AI Experts”, I shared his 1954 prediction that unless we get a better grip on what he called the essence of technology, we will lose touch with reality and ourselves. And in my latest piece, “From Existential Threat to Hope. A Philosopher’s Guide to AI,” I introduced his view that the essence of technology is to give man the illusion of being in control.

Heidegger has been accused of having an overly pessimistic view of the development of technology in the 20th century. But his distinction between pre-modern and modern technology, and how he saw the latter evolving into what would soon become digital technology, also suggests an optimistic angle that is useful when discussing the risks and opportunities of the AI we see today.

According to Heidegger, our relationship with technology is so crucial to who we are and why we do the things we do that it is almost impossible for us to question it. And yet it is only by questioning our relationship with technology that we can remain and develop as humans. Throughout history, it has become increasingly difficult for us to question the influence technology has on how we think, act, and interact. Meanwhile, we have increasingly surrendered to the idea of speed, efficiency, and productivity.

But, he said as early as 1954, the advent of digital technology suggested something else was coming. Something that would make it easier for humanity to ask the questions we have neglected to ask for far too long.

AI Reconnects Us With Our Questioning Nature

While the implications of AI for science, education and our personal and professional lives are widely debated, few ask why and how we came to debate these things. What is it about AI that makes us question our relationship with technology? Why are tech companies spending time – and money – researching how AI affects critical thinking? How does AI differ from previous technologies that only philosophers and far-sighted tech people questioned in the same way that everyone questions AI today?

In his analysis of the essence of technology, Heidegger stated that “the closer we come to the danger, the brighter the ways into the saving power begin to shine and the more questioning we become.” This suggests that our questioning response to AI not only heralds danger – the existential threat that some AI experts speak of – it also heralds existential hope for a reconnection with our human nature.

AI Reminds Us Of Our Natural Limitations

The fact that we are asking questions that we have neglected to ask for millennia not only tells us something important about AI. It also tells us something important about ourselves. From stone axes to engines to social media, the essence of technology has made us think of our surroundings as something we can design and shape as we see fit. But AI is different. AI doesn’t make us think we’re in control. On the contrary, AI is the first technology in human history that makes it clear that we are not in control.

AI reminds us that it’s not just nature outside us that has limitations. It’s also nature inside us. It reminds us of our limitations in time and space. And that our natural limitations are not just physical, but also social and cognitive.

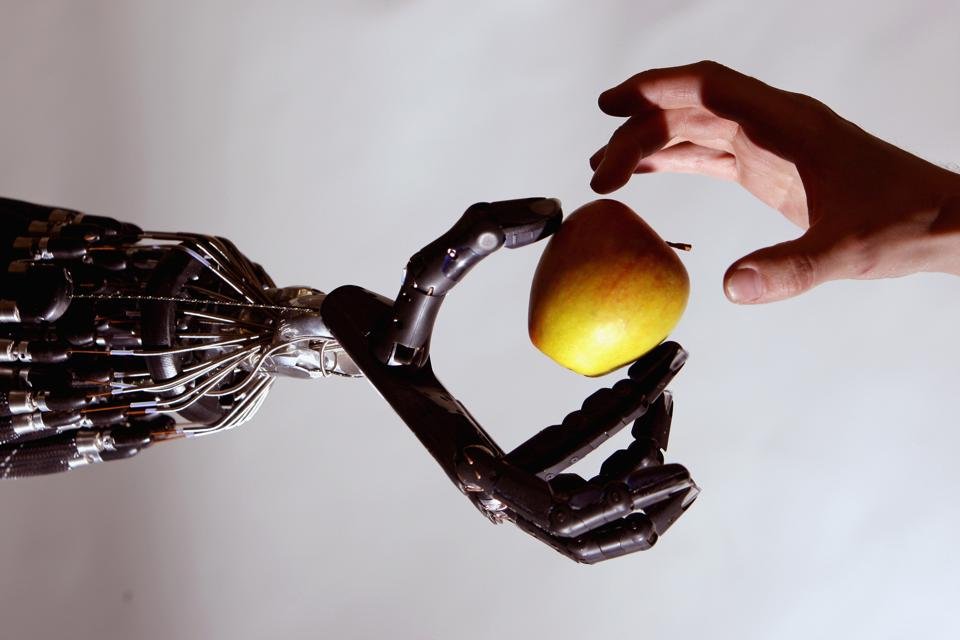

AI brings us face to face with our ignorance and challenges us to ask who we are and what we want to do when we are not the ones in control: Do we insist on innovating or regulating ourselves back into control? Or do we finally recognize that we never were and never will be in control? Because we are part of nature, not above or beyond it.

Like the serpent in the Garden of Eden, AI makes us ask ourselves who we are and why we are here.

AI Makes Us Wiser By Reminding Us Who We Are

Like the serpent in the Garden of Eden, AI presents us with a choice: Either we ignore our ignorance and pretend we still know everything there is to know. Or we live with the pain that comes with knowing that we don’t know everything. We can tell each other stories about when and how AI will help us transcend our natural limitations. How it will enhance our thinking and ability to speed up innovation and productivity. But by doing so, we miss a historic opportunity to become wiser as a species.

Wisdom is not about what we know or how we define and try to design artificial intelligence, cognition, or even consciousness. Wisdom is about what we don’t know and how we deal with the fact that we may never know. It’s about seeking a deeper understanding of ourselves and our relationship to and responsibility for our surroundings. It’s about asking – and continuing to ask – questions about who we are, why we are here, and what is the right thing for us to do. And it’s about never trusting AI, tech experts, or even philosophers to answer these questions for us.

We may never be able to fully understand what it is about AI that makes us question our relationship with technology. But the fact that we do makes us wiser – and empowers us to join what definitely is the most important conversation of our time.

Duke AI program emphasizes critical thinking for job security :: WRAL.com

Duke’s AI program is spearheaded by a professor who not only teaches but has also built his own AI model.

Professor Jon Reifschneider says we’ve already entered a new era of teaching and learning across disciplines.

He says, “We have folks that go into healthcare after they graduate, go into finance, energy, education, etc. We want them to bring with them a set of skills and knowledge in AI, so that they can figure out: ‘How can I go solve problems in my field using AI?'”

He wants his students to become literate in AI, which is a challenge in a field he describes as a moving target.

“I think for most people, AI is kind of a mysterious black box that can do somewhat magical things, and I think that’s very risky to think that way, because you don’t develop an appreciation of when you should use it and when you shouldn’t use it,” Reifschneider told WRAL News.

Student Harshitha Rasamsetty said she is learning the strengths and shortcomings of AI.

“We always look at the biases and privacy concerns and always consider the user,” she said.

The students in Duke’s engineering master’s programs come from all backgrounds, countries, even ages. Jared Bailey paused his insurance career in Florida to get a handle on the AI being deployed company-wide.

He was already using AI tools when he wondered, “What if I could crack them open and adjust them myself and make them better?”

John Ernest studied engineering in undergrad, but sought job security in AI.

“I hear news every day that AI is replacing this job, AI is replacing that job,” he said. “I came to a conclusion that I should be a part of a person building AI, not be a part of a person getting replaced by AI.”

Reifschneider thinks warnings about AI taking jobs are overblown.

In fact, he wants his students to come away understanding that humans have a quality AI can’t replace. That’s critical thinking.

Reifschneider says AI “still relies on humans to guide it in the right direction, to give it the right prompts, to ask the right questions, to give it the right instructions.”

“If you can’t think, well, AI can’t take you very far,” Bailey said. “It’s a car with no gas.”

Reifschneider told WRAL that he thinks children as young as elementary school students should begin learning how to use AI, when it’s appropriate to do so, and how to use it safely.

WRAL News went inside Wake County schools to see how it is being used and what safeguards the district is using to protect students. Watch that story Wednesday on WRAL News.

WA state schools superintendent seeks $10M for AI in classrooms

This article originally appeared on TVW News.

Washington’s top K-12 official is asking lawmakers to bankroll a statewide push to bring artificial intelligence tools and training into classrooms in 2026, even as new test data show slow, uneven academic recovery and persistent achievement gaps.

Superintendent of Public Instruction Chris Reykdal told TVW’s Inside Olympia that he will request about $10 million in the upcoming supplemental budget for a statewide pilot program to purchase AI tutoring tools — beginning with math — and fund teacher training. He urged legislators to protect education from cuts, make structural changes to the tax code and act boldly rather than leaving local districts to fend for themselves. “If you’re not willing to make those changes, don’t take it out on kids,” Reykdal said.

The funding push comes as new Smarter Balanced assessment results show gradual improvement but highlight persistent inequities. State test scores have ticked upward, and student progress rates between grades are now mirroring pre-pandemic trends. Still, higher-poverty communities are not improving as quickly as more affluent peers. About 57% of eighth graders met foundational math progress benchmarks — better than most states, Reykdal noted, but still leaving four in 10 students short of university-ready standards by 10th grade.

Reykdal cautioned against reading too much into a single exam, emphasizing that Washington consistently ranks near the top among peer states. He argued that overall college-going rates among public school students show they are more prepared than the test suggests. “Don’t grade the workload — grade the thinking,” he said.

Artificial intelligence, Reykdal said, has moved beyond the margins and into the mainstream of daily teaching and learning: “AI is in the middle of everything, because students are making it in a big way. Teachers are doing it. We’re doing it in our everyday lives.”

OSPI has issued human-centered AI guidance and directed districts to update technology policies, clarifying how AI can be used responsibly and what constitutes academic dishonesty. Reykdal warned against long-term contracts with unproven vendors, but said larger platforms with stronger privacy practices will likely endure. He framed AI as a tool for expanding customized learning and preparing students for the labor market, while acknowledging the need to teach ethical use.

Reykdal pressed lawmakers to think more like executives anticipating global competition rather than waiting for perfect solutions. “If you wait until it’s perfect, it will be a decade from now, and the inequalities will be massive,” he said.

With test scores climbing slowly and AI transforming classrooms, Reykdal said the Legislature’s next steps will be decisive in shaping whether Washington narrows achievement gaps — or lets them widen.

TVW News originally published this article on Sept. 11, 2025.
