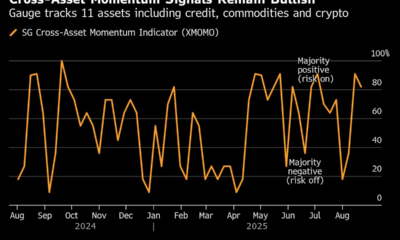

In cities across the United States, an ambitious goal is gaining traction: Vision Zero, the strategy to eliminate all traffic fatalities and severe injuries. First implemented in Sweden in the 1990s, Vision Zero has already cut road deaths there by 50 percent from 2010 levels. Now, technology companies like Stop for Kids and Obvio.ai are trying to bring the results seen in Europe to U.S. streets with AI-powered camera systems designed to keep drivers honest, even when police aren’t around.

Local governments are turning to AI-powered cameras to monitor intersections and catch drivers who see stop signs as mere suggestions. The stakes are high: About half of all car accidents happen at intersections, and too many end in tragedy. By automating enforcement of rules against rolling stops, speeding, and failure to yield, these systems aim to change driver behavior for good. The carrot is safer roads and lower insurance rates; the stick is citations for those who break the law.

The Origins of Stop for Kids

Stop for Kids, based in Great Neck, N.Y., is one company leading the charge in residential areas and school zones. Co-founder and CEO Kamran Barelli was driven by personal tragedy: In 2018, his wife and three-year-old son were struck by an inattentive driver while crossing the street. “The impact launched them nearly 18 meters down the street, where they landed hard on the asphalt pavement,” Barelli says. Both survived, but the experience left him determined to find a solution.

He and his neighbors pressed the municipality to put up radar speed signs. But the signs turned out to be counterproductive. “Teenagers would race to see who could trigger the highest number,” Barelli says. “And extra police only worked until drivers texted each other to watch out.”

So Barelli and his brother, longtime software entrepreneurs, pivoted their tech business to develop an AI-enabled camera system that never takes a day off and can see in the dark. Installed at intersections, the cameras detect vehicles that fail to come to a full stop; then the system automatically issues citations. It uses AI to draw digital “bounding boxes” around vehicles to track their behavior without looking at faces or activities inside a car. If a driver stops properly, any footage is deleted immediately. Videos of violations, on the other hand, are stored securely and linked with DMV records to issue tickets to vehicle owners. The local municipality determines the amount of the fine.
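The article does not describe how Stop for Kids decides that a car has stopped, so the following is only a minimal sketch of one plausible approach: follow the centroid of a vehicle's bounding box from frame to frame and require that it stay nearly motionless for a short interval. The `Box` type, the `came_to_full_stop` function, and both thresholds are hypothetical, and speeds are in pixels per second rather than real-world units.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Box:
    """One tracked bounding box for a single vehicle (hypothetical type)."""
    t: float   # frame timestamp, in seconds
    x1: float  # left edge, in pixels
    y1: float  # top edge, in pixels
    x2: float  # right edge, in pixels
    y2: float  # bottom edge, in pixels


def centroid(box: Box) -> Tuple[float, float]:
    """Return the center point of a bounding box."""
    return ((box.x1 + box.x2) / 2, (box.y1 + box.y2) / 2)


def came_to_full_stop(
    track: List[Box],
    stop_speed: float = 2.0,        # assumed threshold, pixels per second
    min_stop_seconds: float = 0.5,  # assumed minimum duration of a stop
) -> bool:
    """Return True if the centroid stays below stop_speed for at least
    min_stop_seconds of consecutive frames."""
    stopped_for = 0.0
    for prev, cur in zip(track, track[1:]):
        dt = cur.t - prev.t
        if dt <= 0:
            continue  # skip duplicate or out-of-order frames
        (px, py), (cx, cy) = centroid(prev), centroid(cur)
        speed = math.hypot(cx - px, cy - py) / dt
        if speed < stop_speed:
            stopped_for += dt
            if stopped_for >= min_stop_seconds:
                return True
        else:
            stopped_for = 0.0  # the vehicle moved again; restart the clock
    return False
```

A production system would presumably also restrict the check to a zone near the stop line and convert pixel motion into real-world speed; both steps are omitted here.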

Stop for Kids has already seen promising results. In a 2022 pilot of the tech in the Long Island town of Saddle Rock, N.Y., compliance with stop signs jumped from just 3 percent to 84 percent within 90 days of installing the cameras. Today, that figure stands at 94 percent, says Barelli. “The remaining 6 percent of non-compliance comes overwhelmingly from visitors to the area who aren’t aware that the cameras are in place.” Since then, the company has installed its camera systems in municipalities in New York and Florida, with a few cities in California up next.

In a Stop for Kids pilot project, cameras installed at intersections drastically improved drivers’ compliance with stop signs over three months. (Credit: Stop for Kids)

Still, some experts say they’ll wait to pass judgment on the technology’s efficacy. “Those results are impressive,” says Daniel Schwarz, a senior privacy and technology strategist at the New York Civil Liberties Union (NYCLU). “But these marketing claims are rarely backed up by independent studies that validate what these AI technologies can really do.”

Privacy Issues in Automated Ticketing Systems

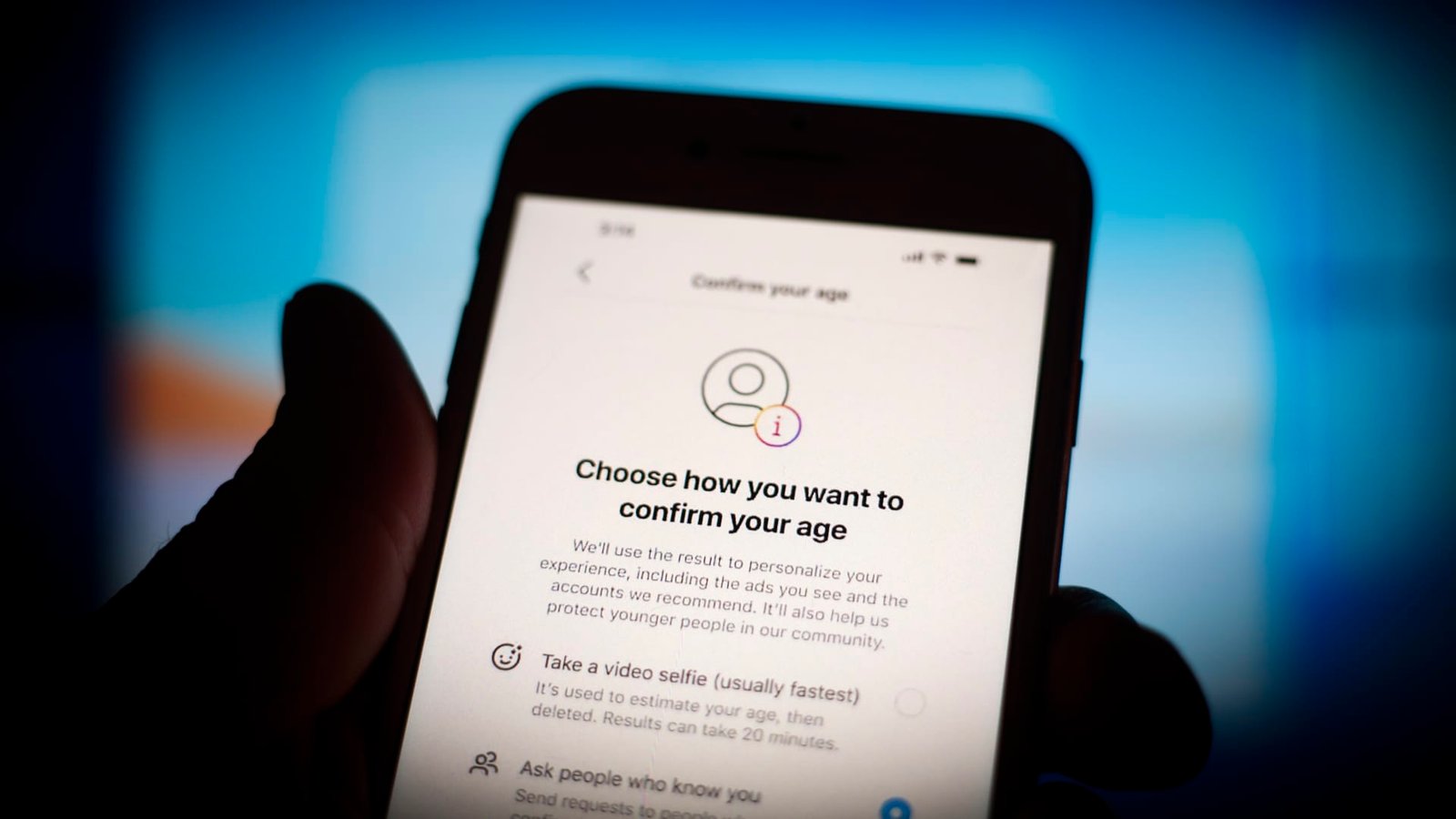

Privacy is a big concern for communities considering camera enforcement. In the Stop for Kids system, faces inside vehicles and in the rest of the scene are automatically blurred. Identifying images come only from an AI license plate reader. No personal DMV data is shared except with local authorities handling citations. The company has created an online evidence portal that allows vehicle owners to review footage and dispute tickets, helping ensure the system remains fair and transparent.

Watchdog groups are not convinced that this type of technology won’t be subject to mission creep. They say that gear originally introduced to help reach the sympathetic goal of lowering traffic deaths may be updated to do things outside of that scope.

“Expanding the overall goal of such a deployment is as simple as software push,” says NYCLU’s Schwarz. “More functionalities could be introduced, additional features that raise more civil liberties concerns or present other dangers that perhaps the prior version did not.”

Obvio.ai’s Approach

Meanwhile, in San Carlos, Calif., another startup is taking a similar approach with its own twist. Founded in 2023, Obvio.ai has designed a solar-powered, AI-enabled camera system that mounts on utility poles and street lamps near intersections. Like Stop for Kids, Obvio’s system detects rolling stops, illegal turns, and failures to yield. But instead of automating the entire process, Obvio has local governments review potential infractions before any citations are issued, ensuring a human is always in the loop.

Obvio.ai co-founder and president Dhruv Maheshwari says the company’s cameras run on solar power and connect to its cloud server via 5G, making them easy to deploy without major construction. Obvio’s AI processor, installed on site with the camera, uses computer vision models to identify cars, bicycles, and pedestrians in real time. The system continuously streams footage but only stores clips when a violation is likely. Everything else is automatically deleted within hours to protect privacy. And, as with Stop for Kids’ tech, the cameras do not use facial recognition to identify drivers—just the vehicle’s license plate.
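The retention rule described above can be illustrated with a short sketch. It is not Obvio's implementation: the `Clip` type, the `violation_score` field, the 0.8 threshold, and the three-hour window are all assumptions chosen only to make the idea concrete (the article says only that other footage is deleted "within hours").

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Clip:
    """A short video segment with a model-assigned score (hypothetical type)."""
    clip_id: str
    recorded_at: float      # seconds since some reference time
    violation_score: float  # assumed 0..1 likelihood of a violation


def clips_to_delete(
    clips: List[Clip],
    now: float,
    score_threshold: float = 0.8,        # assumed cutoff for "violation is likely"
    retention_seconds: float = 3 * 3600, # assumed window; the article says "hours"
) -> List[str]:
    """Return IDs of clips that are unlikely violations and are older than
    the retention window. Likely violations are kept for human review."""
    return [
        clip.clip_id
        for clip in clips
        if clip.violation_score < score_threshold
        and now - clip.recorded_at >= retention_seconds
    ]
```

Clips that score above the threshold are never returned by this function, which mirrors the human-in-the-loop review step: they stay available until a local official decides whether to issue a citation.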

Last summer, Obvio.ai partnered with Maryland’s Prince George’s County for a pilot program across towns like Colmar Manor, Morningside, Bowie, and College Park. Within weeks, stop-sign violations were cut in half. In Bowie, local leaders avoided concerns about the camera system rollout being a “ticketing for profit” scheme by sending warning letters instead of fines during the trial period.

Vision Zero Is the Target

Though both Stop for Kids and Obvio.ai declined to offer any specifics about where their cameras will appear next, Barelli told IEEE Spectrum that about 60 towns on Long Island, near where Stop for Kids conducted its pilot, are interested. “They asked the state legislature to provide a clear framework governing what they can do with systems like ours,” Barelli says. “Right now, it’s being considered by the State Senate.”

“Ultimately, we hope our technology becomes obsolete,” says Maheshwari. “We want drivers to do the right thing, every time. If that means we don’t issue any tickets, that means zero revenue but complete success.”
