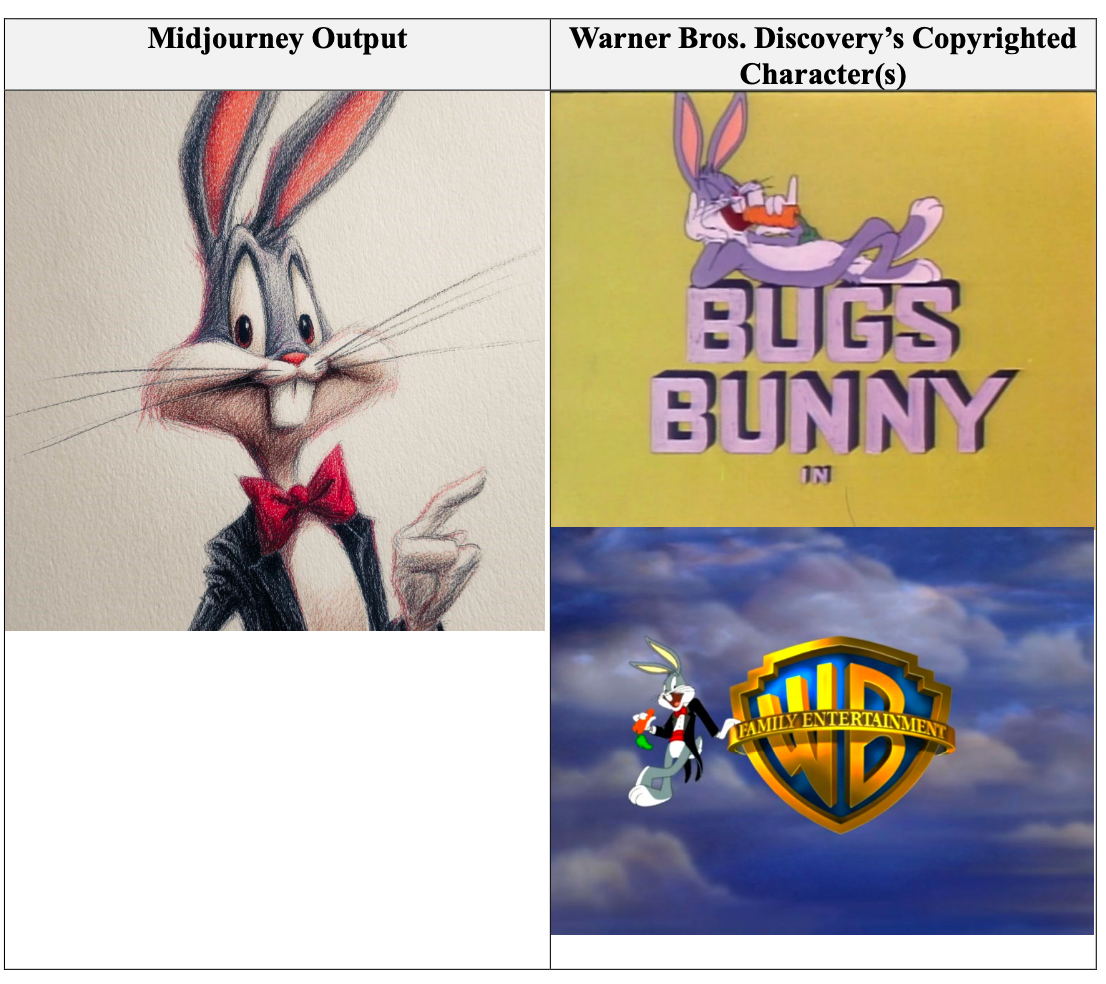

An example cited in Warner Bros. Discovery’s lawsuit: At left is a Midjourney output of Bugs Bunny, at right are actual Warner Bros.’ images of Bugs Bunny.

I have been encountering some interesting news about how the AI industry is progressing. It feels like a slowdown in this space is definitely on the horizon, if it hasn’t already started. (Not being an economist, I won’t say bubble, but there are lots of opinions out there.) GPT-5 came out last month and disappointed nearly everyone, apparently even OpenAI executives. Meta made a very sudden pivot and is reorganizing its entire AI function, freezing all hiring immediately after pouring apparently unlimited funds into recruiting and wooing talent in the space. Microsoft appears to be slowing its investment in AI hardware (paywall).

This isn’t to say that any of the major players are going to stop investing in AI, of course. The technology isn’t demonstrating spectacular results or approaching anything even remotely like AGI (as many analysts and writers, including me, predicted it wouldn’t), but businesses and individuals are still using it at a steady level, so there’s some incentive to keep pushing forward.

In this vein, I read the new report from MIT about AI in business with great interest this week. I recommend it to anyone looking for actual information about how AI adoption is going, among regular workers as well as the C-suite. The report has some headline takeaways, including an assertion that only 5% of AI initiatives in the business setting generate meaningful value, which I can certainly believe. (Also, AI is not actually taking people’s jobs in most industries, and in several industries AI isn’t having much of an impact at all.) A lot of businesses, it seems, have dived into adopting AI without a strategic plan for what it’s supposed to do or how that adoption will actually help them achieve their objectives.

I see this a lot, actually: executives who are far removed from the day-to-day work of their organization get gripped by FOMO about AI and decide AI must become part of their business, but don’t step back and consider how it fits with the business they already have and the work they already do.

Regular readers will know I’m not arguing AI can’t or shouldn’t be used when it can serve a purpose, of course. Far from it! I build AI-based solutions to business problems at my own organization every day. However, I firmly believe AI is a tool, not magic. It gives us ways to do tasks that are infeasible for human workers and can accelerate the speed of tasks we would otherwise have to do manually. It can make information clearer and help us better understand lengthy documents and texts.

What it doesn’t do, however, is create business success by itself. To be part of the 5% and not the 95%, any application of AI needs to be founded on strategic thinking and planning and, most importantly, on clear-eyed expectations about what AI is and isn’t capable of. Small projects that improve particular processes can have huge returns without betting on a massive upheaval or “revolutionizing” of the business, even though they aren’t as glamorous or headline-grabbing as the hype. The MIT report discusses how vast numbers of projects start as pilots or experiments but never make it into production, and I would argue that much of this is because either the planning or the clear-eyed expectations were missing.

The authors spend a significant amount of time noting that many AI tools are regarded as inflexible and/or incompatible with existing processes, resulting in failure to adopt among the rank and file. If you build or buy an AI solution that can’t work with your business as it exists today, you’re throwing away your money. Either the solution should have been designed with your business in mind and it wasn’t, meaning a failure of strategic planning, or it can’t be flexible or compatible in the way you need, and AI simply wasn’t the right solution in the first place.

On the subject of flexibility, I had an additional thought as I was reading. The MIT authors emphasize that the internal tools companies offer their teams often “don’t work” in one way or another. In reality, though, a lot of the rigidity and limits placed on in-house LLM tools exist for safety and risk prevention. Developers don’t build non-functional tools on purpose, but they have limitations and requirements to comply with. In short, there’s a tradeoff here we can’t avoid. When your LLM is extremely open and has few or no guardrails, it’s going to feel like it lets the user do more and will answer more questions, because it does just that. But it does so at a significant potential cost: legal liability, false or inappropriate information, or worse.

Of course, regular users are likely not thinking about this angle when they pull up the ChatGPT app on their phone with their personal account during the workday; they’re just trying to get their jobs done. InfoSec communities are rightly alarmed by this kind of thing, which some circles are calling “shadow AI,” by analogy with shadow IT. The risks from this behavior can be catastrophic: proprietary company data handed over to an AI solution freely and without oversight, to say nothing of how the output may be used within the company. This problem is really, really hard to solve. Employee education at all levels of the organization is an obvious step, but some degree of shadow AI is likely to persist, and security teams are struggling with it as we speak.

I think this leaves us in an interesting moment. I believe the winners in the AI rat race are going to be those who are thoughtful and careful, applying AI solutions conservatively rather than upending the model of success that has worked for them so far to chase a shiny new thing. A slow and steady approach can help hedge against many risks, including customer backlash against AI.

Before I close, I just want to remind everyone that these attempts to build the equivalent of a palace when a condo would do have tangible consequences. We know that Elon Musk is polluting the Memphis suburbs with impunity by running data centers powered by illegal gas generators. Data centers consume double-digit percentages of all the electricity generated in some US states. Water supplies are being exhausted or polluted by the same data centers that serve AI applications to users. Let’s remember that the choices we make are not abstract, and be conscientious about when we use AI and why. The 95% of failed AI projects weren’t just expensive in terms of the time and money businesses spent on them; they cost us all something.

Read more of my work at www.stephaniekirmer.com.

https://garymarcus.substack.com/p/gpt-5-overdue-overhyped-and-underwhelming

https://fortune.com/2025/08/18/sam-altman-openai-chatgpt5-launch-data-centers-investments

https://www.theinformation.com/articles/microsoft-scales-back-ambitions-ai-chips-overcome-delays

https://builtin.com/artificial-intelligence/meta-superintelligence-reorg

https://mlq.ai/media/quarterly_decks/v0.1_State_of_AI_in_Business_2025_Report.pdf

https://www.ibm.com/think/topics/shadow-ai

https://futurism.com/elon-musk-memphis-illegal-generators

https://www.visualcapitalist.com/mapped-data-center-electricity-consumption-by-state

https://www.eesi.org/articles/view/data-centers-and-water-consumption

Artificial intelligence has moved beyond being just a productivity tool — it’s changing the way people find and consume news.

This shift is already reshaping how organizations approach public relations, investor relations and corporate communications, Notified reports.

According to Mary Meeker’s 2025 State of the Internet report, ChatGPT is already handling an estimated 365 billion searches annually, a volume it reached more than five times faster than Google did in its early years. At the same time, Google’s AI Overviews are taking center stage in search results, often giving users summarized answers before they ever visit a website.

The impact is clear — traditional pathways for discovering news are breaking down, and AI-generated summaries are quickly becoming the first point of contact between companies and their audiences.

The speed of AI adoption has caught many industries off guard and has begun to overwhelm professionals. According to a recent LinkedIn survey, over half of respondents said AI training feels like a second job.

At the same time, newsrooms continue to shrink, people are turning to AI tools instead of traditional search, and generative platforms are pulling together information from press releases, filings, and media stories into single, summarized narratives.

For businesses, this creates a new reality: investors, customers, journalists and employees often see AI-generated answers before they reach the original source. If that content isn’t clear, consistent and reliable, the version of a company’s story that circulates could be incomplete or, worse, misleading.

This transformation has significant implications for visibility, credibility and influence with three major trends already redefining how news reaches the public:

Whether it’s shaping brand perception, managing investor confidence or ensuring accurate media coverage, the way content is published today will directly affect how it appears in tomorrow’s AI-driven summaries.

To stay ahead, here are three essential practices to prioritize:

1. Make Content Clear and Reliable

AI tools work best with structured information. Press releases, earnings updates and official statements should be easy to read, consistent and free from gaps that could be misinterpreted. If the details aren’t clear, the story AI presents may not be accurate.

2. Keep PR and IR on the Same Page

The line between media coverage and investor communication has all but disappeared. A single inconsistency across channels can create confusion once AI systems pull everything together. Business leaders should ensure their communications teams are working together to deliver one unified narrative.

3. Write for People — and Machines

Today’s audience isn’t just human. Algorithms are scanning content, too, and shaping how information is shared. That means businesses need to publish content that answers questions directly and holds up as a trusted source when AI tools summarize it (think FAQs).

While the AI landscape will continue to change, the companies that respond early will have the edge. By making content structured, consistent and ready for both people and algorithms, they’ll reduce the risk of being misunderstood and strengthen trust with investors, customers and the media.

This isn’t just about keeping up with technology. It’s about protecting reputation, ensuring accuracy and making sure your story is the one that gets told.

This story was produced by Notified and reviewed and distributed by Stacker.

Warner Bros. Discovery is suing a prominent artificial intelligence image generator for copyright infringement, escalating a high-stakes battle involving the use of movies and TV shows owned by major studios to teach AI systems.

The lawsuit accuses Midjourney, which has millions of registered users, of building its business around the mass theft of content. The company “brazenly dispenses Warner Bros. Discovery’s intellectual property” by letting subscribers produce images and videos of iconic copyrighted characters, alleges the complaint, filed on Thursday in California federal court.

“The heart of what we do is develop stories and characters to entertain our audiences, bringing to life the vision and passion of our creative partners,” said a Warner Bros. Discovery spokesperson in a statement. “Midjourney is blatantly and purposefully infringing copyrighted works, and we filed this suit to protect our content, our partners, and our investments.”

For years, AI companies have been training their technology on data scraped across the internet without compensating creators. It’s led to lawsuits from authors, record labels, news organizations, artists and studios, which contend that some AI tools erode demand for their content.

Warner Bros. Discovery joins Disney and Universal, which teamed up earlier this year to sue Midjourney. In their view, the AI company is a free-rider plagiarizing their movies and TV shows.

An example cited in Warner Bros. Discovery’s lawsuit: At left is a Midjourney output of Bugs Bunny, at right are actual Warner Bros.’ images of Bugs Bunny.

In a statement, Disney said it’s “committed to protecting our creators and innovators” and that it’s “pleased to be joined by Warner Bros. Discovery in the fight against Midjourney’s blatant copyright infringement.”

Added NBCUniversal, “Creative artists are the backbone of our industry, and we are committed to protecting their work and our intellectual property.”

In the lawsuit, Warner Bros. Discovery points to Midjourney generating images of iconic copyrighted characters. At the forefront are heroes and villains at the center of DC Studios’ movies and TV shows, like Superman, Wonder Woman and the Joker. Others are Looney Tunes, Tom and Jerry and Scooby-Doo characters who’ve become ubiquitous household names. Still others are Cartoon Network characters, including those from Rick and Morty, who’ve emerged as something of a cultural touchstone in recent years.

Another example cited in Warner Bros. Discovery’s lawsuit: At left is a Midjourney output of Rick and Morty, at right are actual Warner Bros.’ stills of the show.

Midjourney, which has four tiers of paid subscriptions ranging from $10 to $120 per month and didn’t immediately respond to a request for comment, returns characters owned by Warner Bros. Discovery even in response to prompts like “classic comic book superhero battle” that don’t explicitly mention any particular intellectual property, the complaint alleges.

As evidence that Midjourney trained its AI system on its intellectual property, the studio attaches dozens of images showing the tool’s outputs compared to stills from its movies and TV shows. When prompted with “Batman, screencap from The Dark Knight,” the service returns an image of Christian Bale’s portrayal of the character. The image includes the Kevlar-plated costume design that set that version apart from earlier iterations of the hero, and it appears to be taken from the movie or its promotional materials with few to no alterations. One of the more convincing examples highlights a 3D-animated Bugs Bunny mirroring his adaptation in Space Jam: A New Legacy.

The lawsuit argues Midjourney’s ability to return copyrighted characters is a “clear draw for subscribers,” diverting consumers away from purchasing Warner Bros. Discovery-approved posters, wall art and prints, among other products that must now compete against the service.

As with OpenAI, the content used to train Midjourney’s technology is a black box, which presents an obstacle for some creators who’ve sued AI companies for copyright infringement. Rightsholders have mined public statements from AI company executives for clues. In 2022, Midjourney founder David Holz said in an interview that his employees “grab everything they can, they dump it in a huge file, and they kind of set it on fire to train some huge thing.” The specifics of the training process will be subject to discovery.

Warner Bros. Discovery seeks Midjourney’s profits attributable to the alleged infringement or, alternatively, $150,000 per infringed work, which could leave the AI company on the hook for massive damages.

The thrust of the studios’ lawsuits will likely be decided by one question: Are AI companies covered by fair use, the legal doctrine in intellectual property law that allows creators to build upon copyrighted works without a license? On that issue, a court found earlier this year that Amazon-backed Anthropic is on solid legal ground, at least with respect to training.

The technology is “among the most transformative many of us will see in our lifetimes,” wrote U.S. District Judge William Alsup.

Still, the court set the case for trial over allegations that the company illegally downloaded millions of books to create a library that was used for training. Anthropic, which later settled the lawsuit, faced potential damages of hundreds of millions of dollars stemming from that decision. The ruling may have laid the groundwork for Warner Bros. Discovery, Disney and Universal to win similar payouts, depending on what they unearth in discovery about how Midjourney obtained copies of the thousands of films and TV shows that were repurposed to train its image generator.

Still on the sidelines in the fight over generative AI: Paramount Skydance, Amazon MGM Studios, Apple Studios, Sony Pictures and Lionsgate. Some have major AI ambitions.


By BREWD regional partnership | Sep 4, 2025 | 2:06 PM

The Central Alberta First (CAF) partnership has released its final Business Retention, Expansion and Workforce Development (BREWD) and BREWD Community Data Summary Reports ahead of an October conference focused on artificial intelligence in business.

The AI for Business: Tools, Tactics, Transformation Conference is set to take place Oct. 22, 2025 at the Olds College Alumni Centre. It is the first of a series of AI awareness workshops to be implemented under the BREWD regional strategy.

The one-day event will bring together industry leaders, innovators, and technology experts to explore how AI can improve businesses.

The BREWD initiative, which includes over 700 businesses representing all sectors of the economy, is one of the largest business engagement projects undertaken in the central Alberta region.
