Tools & Platforms

AI Dashcam Boosts Driver Safety

Driving trucks is one of the unappreciated backbones of modern civilization. It’s also hard and sometimes dangerous work. But technology is being spun up to make the job safer and easier.

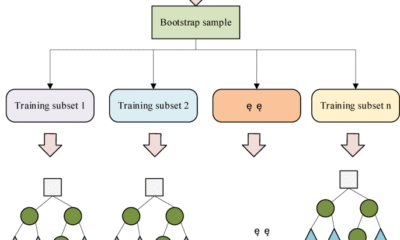

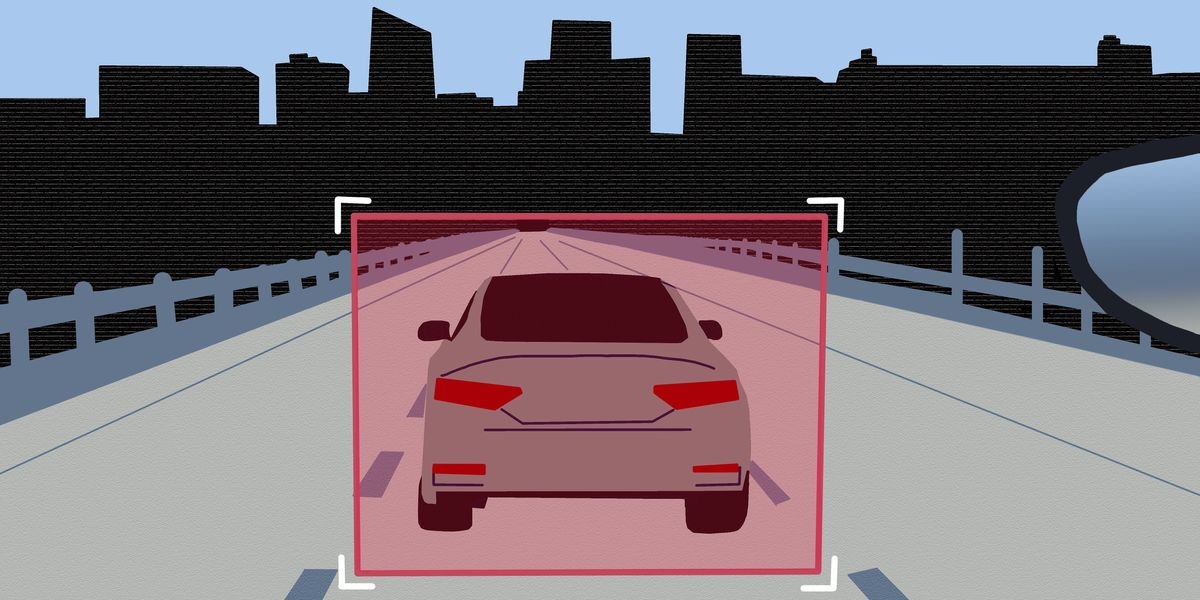

A new class of devices aimed at fleets helps drivers avoid accidents by flagging risky situations. The new systems use convolutional neural networks running in the vehicle (“edge” AI) and in the cloud to fuse data from on-board vehicle diagnostics with video from cameras facing the driver and the roadway. The result is systems that can assess the risk of collision in real time and warn drivers in time to avoid most crashes.

One of the most advanced of the new systems is from a company called Nauto. Earlier this year, the Virginia Tech Transportation Institute (VTTI) put the AI-enabled safety system from the Palo Alto, Calif.–based startup through its paces on the same Virginia Smart Roads controlled-access test tracks where it conducted a 2023 benchmark study evaluating three similar products. VTTI says this year’s testing was performed under the same scenarios of distracted driving, rolling stops, tailgating, and night driving.

According to the Virginia Tech researchers, Nauto’s dashcam matched or outperformed the previously benchmarked gadgets in detection accuracy—and provided feedback that translated more directly into information supervisors could use to address and correct risky driver behavior. “This study allowed us to evaluate driver monitoring technologies in a controlled, repeatable way, so we could clearly measure how the [Nauto] system responded to risky behaviors,” says Susan Soccolich, a senior research associate at VTTI.

MIT driver attention researcher Bryan Reimer, who was not involved in the study, says the real value of systems like Nauto’s lies beyond monitoring. “Many companies focus only on monitoring, but monitoring alone is just an enabler—the sensor, like radar in adaptive cruise control or forward collision warning. The real art lies in the support systems that shape driver behavior. That’s what makes Nauto unique.”

Reducing Alert Fatigue in Trucking Safety

“One of our primary goals is to issue alerts only when corrective action is still possible,” says Nauto CEO Stefan Heck. Just as important, he adds, is a design meant to avoid “alert fatigue,” a well-known phenomenon in which alerts triggered when situations don’t actually call for them make would-be responders less apt to take heed. False alerts have long plagued driver-assist systems, causing drivers to eventually disregard even the most serious warnings.

Nauto claims its alerts are accurate more than 90 percent of the time, because it combines more than ten distraction and drowsiness indicators. Among the inattention indicators the system tracks are head nodding or tilting, yawning, change in eye blink rate, long eyelid closures (indicating something called microsleeps), and gaze drifting from the road for extended periods (what happens when people text and drive). If a pedestrian enters the crosswalk and the driver is awake, alert, and not driving too fast, the system will remain silent under the assumption that the driver will slow down or stop so the person on foot can cross the street without incident. But if it notices that the driver is scrolling on their phone, it will sound an alarm—and perhaps trigger a visual warning too—in time to avoid causing injury.
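The crosswalk example can be illustrated with a minimal Python sketch. Nauto has not published its model, so this is not the company's implementation: the `DriverState` and `RoadState` fields and the `should_alert` rule are hypothetical names chosen only to mirror the scenario in the text, and a production system would presumably weigh its ten-plus indicators probabilistically rather than as simple booleans.

```python
from dataclasses import dataclass


@dataclass
class DriverState:
    # Hypothetical summary of the driver-facing camera's indicators.
    eyes_on_road: bool
    drowsy: bool
    using_phone: bool


@dataclass
class RoadState:
    # Hypothetical summary of the forward camera and vehicle data.
    hazard_ahead: bool  # e.g., a pedestrian entering a crosswalk
    speeding: bool


def should_alert(driver: DriverState, road: RoadState) -> bool:
    """Alert only when a hazard coincides with signs the driver may not react."""
    if not road.hazard_ahead:
        return False
    attentive = (
        driver.eyes_on_road and not driver.drowsy and not driver.using_phone
    )
    # Stay silent for an attentive driver at a safe speed; otherwise warn.
    return road.speeding or not attentive


pedestrian = RoadState(hazard_ahead=True, speeding=False)
alert_driver = DriverState(eyes_on_road=True, drowsy=False, using_phone=False)
texting_driver = DriverState(eyes_on_road=False, drowsy=False, using_phone=True)

print(should_alert(alert_driver, pedestrian))    # attentive driver: no alert
print(should_alert(texting_driver, pedestrian))  # distracted driver: alert
```

Tying the alert to both the hazard and the driver's state, rather than to the hazard alone, is what keeps the system quiet in the cases where a warning would only add to alert fatigue.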

While VTTI did not specifically test false-positive rates, it did measure detection accuracy across multiple scenarios. Soccolich reports that in Class 8 tractor tests, the system issued audible in-cab alerts for 100 percent of handheld calls, outgoing texts, discreet lap use of a smartphone, and seat belt violations, as well as 95 percent of rolling stops. For tailgating a lead vehicle, it alerted in 50 percent of trials initially, but after adjustment, delivered alerts in 100 percent of cases.

Nauto’s alarms can be triggered not only in the driver’s cab but also in the offices of fleet supervisors at the trucking company that uses the system. But Nauto structures its alerts to prioritize the driver: Warnings for all but the highest-risk situations go to the cab of the truck, allowing self-correction, while supervisors are notified only when the system detects recklessness or a pattern of lower-risk behavior that requires corrective action.
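A driver-first routing policy of this kind might look like the following sketch. It is an illustration under stated assumptions, not Nauto's logic: the two-level `Risk` enum, the `route_alert` function, and the `pattern_threshold` of three events are all hypothetical.

```python
from enum import Enum


class Risk(Enum):
    LOW = 1
    HIGH = 2


def route_alert(risk: Risk, recent_low_risk_events: int,
                pattern_threshold: int = 3) -> list[str]:
    """Return who is notified; the driver's cab always comes first."""
    recipients = ["cab"]
    # Escalate only for reckless behavior or a repeated lower-risk pattern.
    # The threshold of 3 is an assumed value for illustration.
    if risk is Risk.HIGH or recent_low_risk_events >= pattern_threshold:
        recipients.append("supervisor")
    return recipients


print(route_alert(Risk.LOW, recent_low_risk_events=0))   # ['cab']
print(route_alert(Risk.HIGH, recent_low_risk_events=0))  # ['cab', 'supervisor']
print(route_alert(Risk.LOW, recent_low_risk_events=3))   # ['cab', 'supervisor']
```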

The company packages its vehicle hardware in a windshield-mounted dashcam that plugs into a truck’s on-board diagnostics port. With forward- and driver-facing cameras and direct access to vehicle data streams, the device continuously recalculates risk. A delivery driver glancing at a phone while drifting from their lane, for example, triggers an immediate warning and a notice to supervisors that the driver’s behavior warrants being called on the carpet for their recklessness.

By contrast, a rural stop sign roll-through at dawn might trigger nothing more than a cheerful reminder to come to a complete stop next time. There are more complex cases, as when a driver is following another vehicle too closely. On a sunny day, in light traffic, the system might let it go, holding back from issuing a warning about the tailgating. But if it starts to rain, the system recognizes the change in safe stopping distance and updates its risk calculation. The driver is told to back off so there’s enough space to stop the truck in time on the rain-slick road if the lead car suddenly slams on its brakes.
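To see why rain changes the calculation, consider the textbook stopping-distance estimate: distance covered during the driver's reaction time plus braking distance, d = v·t + v²/(2·μ·g). The sketch below applies it with assumed, illustrative values (a 1.5-second reaction time and tire-road friction coefficients of 0.7 for dry and 0.4 for wet pavement). It is not Nauto's risk model, and it ignores truck-specific factors such as load and air-brake lag.

```python
G = 9.81  # gravitational acceleration, m/s^2


def stopping_distance_m(speed_mps: float, friction: float,
                        reaction_s: float = 1.5) -> float:
    """Reaction distance plus braking distance on a level road."""
    reaction_distance = speed_mps * reaction_s
    braking_distance = speed_mps ** 2 / (2 * friction * G)
    return reaction_distance + braking_distance


speed = 25.0  # m/s, roughly 90 km/h
dry = stopping_distance_m(speed, friction=0.7)  # assumed dry-road friction
wet = stopping_distance_m(speed, friction=0.4)  # assumed wet-road friction

print(f"dry: {dry:.0f} m, wet: {wet:.0f} m")
```

With these assumed numbers the wet-road distance is roughly 40 percent longer, so a following gap that was acceptable in sunshine can become a tailgating risk once the road is wet.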

Nauto aims to give drivers three to four seconds to steer clear, brake gently, or refocus. “The better response isn’t always slamming on the brakes,” Heck says. “Sometimes swerving is safer, and no automated braking system today will do that.”

AI Dashcams Lower Trucking Collision Rates

According to a 2017 Insurance Institute for Highway Safety (IIHS) report, if all vehicles in the United States had been equipped with both forward collision warning and automatic emergency braking in 2014, “almost 1 million police-reported rear-end crashes and more than 400,000 injuries in such crashes could have been prevented.” A separate IIHS study concluded that putting both technologies on a vehicle was good enough to prevent half of all such collisions. Heck, pointing to those numbers as well as to the Nauto system’s ability to sense danger originating both outside and inside a truck, claims his company’s AI-enabled dashcam can help cut the incidence of collisions even further than those built-in advanced driver assistance systems do.

Vehicle damage obviously costs a lot of money and time to fix. Fleets also pay follow-on costs such as those associated with driver turnover, a persistent problem in trucking. Lower crash rates, conversely, cut recruitment and training costs and reduce insurance premiums—giving fleet managers strong incentive to implement technologies like this new class of AI dashcams.

Today, Nauto’s dashcam is an aftermarket add-on about the size of a smartphone, but the company envisions future vehicles with the technology embedded as a software feature. With insurers increasingly setting their rates based on telematics from fleets, the ability to combine video evidence, vehicle data, and driver monitoring could reshape how risk is calculated and rates are set.

Ultimately the effectiveness of these risk assessment–and-alerting devices hinges on driver trust. If the driver believes that the system is designed to make them a better, safer motorist rather than to serve as a surveillance tool so the company can look over their shoulder, they’ll be more likely to accept input from their electronic copilot—and less likely to crash.

Tools & Platforms

OpenAI to burn through $115B by 2029 – Computerworld

The AI emperor is not wearing any clothes. I mean, seriously, he’s as naked as a jaybird. Or to be more precise, the big-name AI companies are burning through unprecedented amounts of money this year, with no clear plan to make a profit anytime soon. (Sure, Nvidia is coining cash with its chips, but the AI software companies are another matter.)

For example, we now know — thanks to analysis from The Information — that OpenAI will burn $115 billion (that’s billion with a capital B) by 2029. That’s up $80 billion from previous estimates. On top of that, OpenAI has ordered $10 billion of Broadcom’s yet-to-ship AI chips for its yet-to-break-ground proprietary data centers. Oh, and there’s the $500 billion that OpenAI and friends SoftBank, Oracle, and MGX are already committed to spending on the Stargate Project AI data centers.

It’s not just OpenAI. Meta, Amazon, Alphabet, and Microsoft will collectively spend up to $320 billion in 2025 on AI-related technologies. Amazon alone aims to invest more than $100 billion in its AI initiatives, while Microsoft will dedicate $80 billion to data center expansion for AI workloads. And Meta’s CEO has set an AI budget of around $60 billion for this year.

Tools & Platforms

Babylon Bee 1, California 0: Court Strikes Down Law Regulating Election-Related AI Content | American Enterprise Institute

By reducing traditional barriers to content creation, the AI revolution holds the potential to unleash an explosion in creative expression. It also increases the societal risks associated with the spread of misinformation. This tension is the subject of a recent landmark judicial decision, Babylon Bee v. Bonta (hat tip to Ajit Pai, whose social media account remains an outstanding follow). The eponymous satirical news site and others challenged California’s AB 2839, which prohibited the dissemination of “materially deceptive” AI-generated audio or video content related to elections. Although the court recognized that the case presented a novel question about “synthetically edited or digitally altered” content, it struck down the law, concluding that the rise of AI does not justify a departure from long-standing First Amendment principles.

AB 2839 was California’s attempt to regulate the use of AI and other digital tools in election-related media. The law defined “materially deceptive” content as audio or visual material that has been intentionally created or altered so that a reasonable viewer would believe it to be an authentic recording. It applied specifically to depictions of candidates, elected officials, election officials, and even voting machines or ballots, where the altered content was “reasonably likely” to harm a candidate’s electoral prospects or undermine public confidence in an election. While the statute carved out exceptions for candidates making deepfakes of themselves and for satire or parody, those exceptions required prominent disclaimers stating that the content had been manipulated.

The court recognized that the electoral context raises the stakes for both parties. Because the law regulated speech on the basis of content, the court applied strict scrutiny: The law is constitutional only if it serves a compelling governmental interest and is the least restrictive means of protecting that interest. On the one hand, the court recognized that the state has a compelling interest in preserving the integrity of its election process. California noted how AI-generated robocalls purporting to be from President Biden encouraged New Hampshire voters not to go to the polls during the 2024 primary. But on the other hand, the Supreme Court has recognized that political speech occupies the “highest rung” of First Amendment protection. That tension is the opinion’s throughline: While elections justify significant regulation, they also demand the most protection for individual speech.

But the court ultimately held that California struck the wrong balance. The state argued that the bill was a logical extension of traditional harmful speech regulations such as defamation or fraud. But the court ruled that the law reached much further. The law did not limit liability to instances of actual harm but extended it to any content “reasonably likely” to cause material harm. And importantly, it did not limit recovery to actual candidate victims, but instead allowed any recipient of allegedly deceptive content to sue for damages. This private right of action deputized roving “censorship czars” across the state whose malicious or politically motivated suits risk chilling a broad swath of political expression.

Given this breadth, the court found the law’s safe harbor was insufficient. The law exempted satirical content (such as that produced by the Bee) as long as it carried a disclaimer that the content was digitally manipulated, in accordance with the act’s formatting requirements. But the court found that this compelled disclosure was itself unconstitutional, as it drowned out the plaintiff’s message: “Put simply, a mandatory disclaimer for parody or satire would kill the joke.” This was especially true in contexts such as mobile devices, where the formatting requirements meant the disclaimer would take up the entire screen—a problem that I have discussed elsewhere in the context of Federal Trade Commission disclaimer rules.

Perhaps most importantly, the court recognized the importance of counter-speech and market solutions as alternative remedies to disinformation. It credited crowd-sourced fact-checking such as X’s community notes, and AI tools such as Grok, as scalable solutions already being adopted in the marketplace. And it noted that California could fund AI educational campaigns to raise awareness of the issue or form committees to combat disinformation via counter-speech.

The court’s emphasis on private, speech-based solutions points the way forward for other policymakers wrestling with deepfakes and other AI-generated disinformation concerns. Private, market-driven solutions offer a more constitutionally sound path than empowering the state to police truth and risk chilling protected expression. The AI revolution is likely to disrupt traditional patterns of content creation and dissemination in society. But fundamental First Amendment principles are sufficiently flexible to adapt to these changes, just as they have when presented with earlier disruptive technologies. When presented with problematic speech, the first line of defense is more speech—not censorship. Efforts at the state or federal level to regulate AI-generated content should respect this principle if they are to survive judicial scrutiny.

Tools & Platforms

AI And Creativity: Hero, Villain – Or Something Far More Nuanced? – New Technology

As part of SXSW’s first-ever UK edition, Lewis Silkin brought together a packed room to hear five sharp minds – photographer-advocate Isabelle Doran, tech founder Guy Gadney, licensing entrepreneur Benjamin Woollams, Commercial partners Laura Harper and Phil Hughes – wrestle with one deceptively simple question: is AI a hero or a villain in the creative world?

Spoiler: it’s neither. Over sixty fast-paced minutes, the panel dug into the real-world impact of generative models, the gaps in current law and the uneasy economics facing everyone from freelancers to broadcasters. We’ve distilled the conversation into six take-aways that matter to anyone who creates, commissions or monetises content.

1. Generative AI is already taking work – fast

“Generative AI is competing with creators in their place of work,” warned Isabelle Doran, citing her Association of Photographers’ latest survey. In September 2024, 30% of respondents had lost a commission to AI; five months later that figure hit 58%. The fallout runs wider than photographers. When a shoot is cancelled, stylists, assistants and post-production teams stand idle too – a ripple effect that, the panel believes, policy-makers ignore at their peril.

2. Yet the tech also unlocks new forms of storytelling

Guy Gadney was quick to balance the gloom: “It’s a proper tsunami in the sense of the breadth and volume that’s changing,” he said, “but it also lets us ask what stories we can tell now that we couldn’t before.” His company, Charismatic AI, is building tools that let writers craft interactive narratives at a speed and scale unheard of two years ago. The opportunity, he argued, lies in marrying that capability with fair economic models rather than trying to “block the tide”.

3. The law isn’t a free-for-all – but it is fragmenting

Laura Harper cut through the noise: “The status quo at the moment is uncertain and it depends on what country you’re operating in.” In the UK, copyright can subsist in computer-generated works; in the US, it can’t. EU rules require commercial text-and-data miners to respect opt-outs; UK law doesn’t – yet. Add divergent notions of “fair use” and you get a regulatory patchwork that leaves creators guessing and investors hesitating.

4. Transparency is the missing link

Phil Hughes nailed the practical blocker: “We can’t build sensible licensing schemes until we know what data went into each model.” Without a statutory duty to disclose training sets, claims for compensation – or even informed consent – stall. Isabelle Doran backed him up, pointing to Baroness Kidron’s amendment that would force openness via the UK’s Data Act. The Lords have now sent that proposal back to the Commons five times; every extra week, more unlicensed works are scraped.

5. Collective licensing could spread the load

Individual artists can’t negotiate with OpenAI on equal terms, but Benjamin Woollams sees hope in a pooled approach. “Any sort of compensation is probably where we should start,” he said, arguing for collective rights management to mirror how music collecting societies handle radio play. At True Rights he’s developing pricing tools to help influencers understand usage clauses before they sign them – a practical step towards standardisation in a market famous for anything but.

6. Personality rights may be the next frontier

Copyright guards expression; it doesn’t stop a model cloning your voice, gait or mannerisms. “We need to strengthen personality rights,” Isabelle Doran urged, echoing calls from SAG-AFTRA and beyond. Think passing off on steroids: a legal shield for the look, sound and biometric data that make a performer unique. Laura Harper agreed – but reminded us that recognition is only half the battle. Enforcement mechanisms, cross-border by default, must follow fast.

Where does that leave us?

AI is not marching creators to the cliff edge, but it is forcing a reckoning. The panel’s consensus was clear:

- We can’t uninvent generative tools – nor should we.
- Creators deserve both transparency and a cut of the value chain.
- Government must move quickly, or the UK risks watching leverage, investment and talent drift overseas.

As Phil Hughes put it in closing:

“We all know artificial intelligence has unlocked extraordinary possibilities across the creative industries. The question is whether we’re bold enough and organised enough to make sure those possibilities pay off for the people whose imagination feeds the machine.”

The content of this article is intended to provide a general guide to the subject matter. Specialist advice should be sought about your specific circumstances.
