AI Research

AI companies are throwing million-dollar paychecks at AI PhDs

Larry Birnbaum, a professor of computer science at Northwestern University, was recruiting a promising PhD to become a graduate researcher. At the same time, Google was wooing the student. And when he visited the tech giant’s campus in Mountain View, Calif., the company slated him to chat with its cofounder Sergey Brin and CEO Sundar Pichai, who are collectively worth about $140 billion and command more than 183,000 employees.

“How are we going to compete with that?” Birnbaum asks, noting that PhDs in corporate research roles can make as much as five times professorial salaries, which average $155,000 annually. “That’s the environment that every chair of computer science has to cope with right now.”

Though Birnbaum says these recruitment scenarios have been “happening for a while,” the phenomenon has reportedly intensified as salaries across the industry have skyrocketed. The trend recently became headline news after reports surfaced that Meta had offered some highly experienced AI researchers seven- and eight-figure pay packages. Those offers, coupled with strong demand for leaders who can propel AI applications, may be pulling up salaries even for newly minted PhDs. Some of these graduates have no professional experience, yet they are being offered the kind of comma-filled compensation traditionally reserved for director- and executive-level talent.

Some academics fear a ‘brain drain’

Engineering professors and department chairs at Johns Hopkins, University of Chicago, Northwestern, and New York University interviewed by Fortune are divided on whether these lucrative offers lead to a “brain drain” from academic labs.

The brain drain camp believes this phenomenon depletes the ranks of academic AI departments, which still do important research and are also responsible for training the next generation of PhD students. At the private labs, the AI researchers help juice Big Tech’s bottom line while providing, in these critics’ view, no public benefit. Those who are unconcerned argue that academia remains a thriving component of this booming labor market.

Anasse Bari, a professor of computer science and director of the predictive analytics and AI research lab at New York University, says that the corporate opportunities available to AI-focused academics are “significantly” affecting academia. “My general theory is that if we want a responsible future for AI, we must first invest in a solid AI education that upholds these values, cultivating thoughtful AI practitioners, researchers, and educators who will carry this mission forward,” he wrote to Fortune via email, emphasizing that despite receiving “many” offers for industry-side work, his NYU commitments take precedence.

In the days before ChatGPT, top AI researchers were in high demand, just as today. But many of the top corporate AI labs, such as OpenAI, Google DeepMind, and Meta’s FAIR (Fundamental AI Research), would allow established academics to keep their university appointments, at least part-time. This would allow them to continue to teach and train graduate students, while also conducting research for the tech companies.

While some professors say there’s been no change in how often corporate labs and universities are able to arrange these dual corporate-academic appointments, others disagree. NYU’s Bari says this model has declined owing to “intense talent competition, with companies offering millions of dollars for full-time commitment which outpaces university resources and shifts focus to proprietary innovation.”

All the academics Fortune interviewed for this story remain committed to their faculty appointments. But Henry Hoffman, who chairs the University of Chicago’s Department of Computer Science, has watched tech companies court his PhD students since he began his professorship in 2013.

“The biggest thing to me is the salaries,” he says. He mentions a star student with zero professional experience who recently dropped out of the UChicago PhD program to accept a “high six-figure” offer from ByteDance. “When students can get the kind of job they want [as students], there’s no reason to force them to keep going.”

While PhDs thrive, undergrad computer science students struggle

The job market for computer science and engineering PhDs who study AI sits in stark contrast to the one faced by undergraduates in the field. This degree-level polarization exists because many of those with bachelor’s degrees in computer science would traditionally find jobs as coders. But LLMs are now writing large portions of code at many companies, including Microsoft and Salesforce. Meanwhile, most AI-relevant PhD students have their pick of frothy jobs—in academia, tech, and finance. These graduates are courted by the private sector because their training propels AI and machine learning applications, which, in turn, can increase revenue opportunities for model makers.

According to 2022 data, 4,854 people graduated with AI-relevant PhDs in mathematics and computer science from U.S. universities, a significant increase of about 20% since 2014. These PhDs have a higher postgraduate employment rate than graduates with bachelor’s degrees in similar fields. And in 2023, 70% of AI-relevant PhDs took private-sector jobs after graduating, a huge increase from two decades ago, when just 20% of these graduates accepted corporate work, per MIT.

Make no mistake: PhDs in AI, computer science, applied mathematics, and related fields have always had lucrative opportunities available after graduation. Until now, one of the most financially rewarding paths was quantitative research at hedge funds: All-in compensation for PhDs fresh out of school can climb to $1 million–plus in these roles. It’s a compelling pitch, especially for students who’ve spent up to seven years living off meager stipends of about $40,000 a year.

The all-but-assured path to prosperity has made relevant PhD programs in computer science and math extremely popular. AI and machine learning are the most popular disciplines among engineering PhDs, according to a 2023 Computing Research Association survey. UChicago computer science department chair Hoffman says that PhD admissions applications have surged by about 12% in the past few years alone, pressuring him and his colleagues to hire new faculty to increase enrollment and meet the demand.

Applications to AI PhD programs are on the rise

Though Trump’s federal funding cuts to universities have significantly affected research in many departments, they may matter less to those working on AI-related projects. This is partly because some of this research is funded by corporations. Google, for example, is collaborating with the University of Chicago to research trustworthy AI.

That dichotomy probably helps explain Johns Hopkins University’s decision to open its Data Science and AI Institute: a $2 billion five-year effort to enroll 750 PhD students in engineering disciplines and hire over 100 new tenure-track faculty members, making it one of the largest PhD programs in the country.

“Despite the dreary mood elsewhere, the AI and data science area at Hopkins is rosy,” says Anton Dahbura, the executive director of Johns Hopkins’ Information Security Institute and codirector of the Institute for Assured Autonomy, likely referring to his university’s cut of 2,000 workers after it lost $800 million in federal funding earlier this year. Dahbura supports this argument by noting that Hopkins received “hundreds” of applications for professor positions in its Data Science and AI Institute.

For some, the reasons to remain in academia are ethical.

Luís Amaral, a computer science professor at Northwestern, is “really concerned” that AI companies have overhyped the capabilities of their large language models and that their strategies will breed catastrophic societal implications, including environmental destruction. He says of OpenAI leadership, “If I’m a smart person, I actually know how bad the team was.”

Because most corporate labs focus on LLM- and transformer-based approaches, the industry could face a reckoning if these methods ultimately fall short of the hype. “Academic labs are among the few places actively exploring alternative AI architectures beyond LLMs and transformers,” says NYU’s Bari, who is researching creative applications for AI using a model based on birds’ intelligence. “In this corporate-dominated landscape, academia’s role as a hub for nonmainstream experimentation has likely become more important.”


How could an OpenAI partnership with Broadcom shake up Silicon Valley’s chip hierarchy?

Broadcom Inc. is helping OpenAI design and produce an artificial intelligence accelerator starting in 2026, moving into a lucrative sphere dominated by Nvidia Corp. Broadcom’s shares posted their biggest jump since April.

The two firms plan to ship the first chips in that lineup starting next year, according to a person familiar with the matter who asked not to be identified because the deal is private. OpenAI will initially use the chip for its own internal purposes, the Financial Times reported earlier.

Broadcom’s shares surged as much as 16% in New York trading on Friday, adding more than $200 billion to the company’s market value. Nvidia’s stock was down as much as 4.3% at $164.22, its biggest intraday decline since May.

Chief Executive Officer Hock Tan made veiled references to that partnership on Thursday when he said Broadcom had secured a new client for its custom accelerator business. Tan said the company has secured more than $10 billion in orders from the new customer, which the person identified as OpenAI.

Accelerators are essential to the development of AI at big tech firms from Meta Platforms Inc. to Microsoft Corp. Bloomberg News has previously reported that OpenAI and Broadcom were working on an inference chip design, intended to run artificial intelligence models and services after the models have been trained.

“Last quarter, one of these prospects released production orders to Broadcom,” Tan said, without naming the customer.

Broadcom is among the chip designers benefiting from a post-ChatGPT boom in AI development, in which companies and startups from the US to China are spending billions to build data centers, train new models and research breakthroughs in a pivotal new technology. On Thursday, Tan told investors the chipmaker’s outlook will improve “significantly” in fiscal 2026, helping allay concerns about slowing growth.

Tan had previously said that AI revenue for 2026 would show growth similar to the current year — a rate of 50% to 60%. Now, with a new customer that he said has “immediate and pretty substantial demand,” the rate will accelerate in a way that will be “fairly material,” Tan said.

“We now expect the outlook for fiscal 2026 AI revenue to improve significantly from what we had indicated last quarter,” he said.

Broadcom’s quarterly results initially drew a tepid reaction from investors, a sign they were anticipating a bigger payoff from the AI boom. After fluctuating in the wake of the report, the stock gained more than 3% during the conference call.

Sales will be about $17.4 billion in the fiscal fourth quarter, which runs through October, the company said in an earlier statement. Analysts had projected $17.05 billion on average, though some estimates topped $18 billion, according to data compiled by Bloomberg.

Expectations were high heading into the earnings report. Broadcom shares had more than doubled since hitting a low in April, adding about $730 billion to the company’s market value and making them the third-best performer in the Nasdaq 100 Index.

Investors have been looking for signs that tech spending remains strong. Last week, Nvidia gave an underwhelming revenue forecast, sparking fears of a bubble in the artificial intelligence industry.

Though Broadcom hasn’t experienced Nvidia’s runaway sales growth, it is seen as a key AI beneficiary. Customers developing and running artificial intelligence models rely on its custom-designed chips and networking equipment to handle the load. The shares had been up 32% for the year.

During the call, Tan said he and the board have agreed that he will stay as Broadcom CEO until 2030 “at least.”

In the third quarter ended Aug. 3, sales rose 22% to almost $16 billion. Profit, excluding some items, was $1.69 a share. Analysts had estimated revenue of about $15.8 billion and earnings of $1.67 a share.

Sales of AI semiconductors were $5.2 billion, compared with an estimate of $5.11 billion. The company expects revenue from that category to reach $6.2 billion in the fourth quarter. Analysts projected $5.82 billion.

Other AI-focused chipmakers have stumbled in recent days. Shares of Marvell Technology Inc., a close Broadcom competitor in the market for custom semiconductors, plunged 19% on Friday after the company’s data center revenue missed estimates.

Broadcom’s Tan has been upgrading the company’s networking equipment to better transfer information between the pricey graphics chips at the heart of AI data centers. As his latest comments suggest, Broadcom is also making progress finding customers who want custom-designed chips for AI tasks.

Tan has used years of acquisitions to turn Broadcom into a sprawling software and hardware giant. In addition to the AI work, the Palo Alto, California-based company makes connectivity components for Apple Inc.’s iPhone and sells virtualization software for running networks.

Bass writes for Bloomberg.


Silicon Valley executives gather at White House dinner and pledge AI investments

Meta Platforms Inc.’s Mark Zuckerberg and Apple Inc.’s Tim Cook joined tech industry leaders in touting their pledges to boost spending in the US on artificial intelligence during a dinner hosted by President Donald Trump that highlighted his deepening relationship with Silicon Valley.

In his opening remarks, Trump addressed a key concern of tech companies: ensuring there’s enough energy to meet surging power demands from the data centers behind the AI boom.

“We’re making it very easy for you in terms of electric capacity and getting it for you, getting your permits,” Trump said in the White House State Dining Room. “We’re leading China by a lot, by a really, by a great amount.”

Thursday’s dinner marked a rare gathering in Washington of top executives and founders from some of the world’s most valuable tech companies — all vying for an edge in the emerging field of AI. Attendees also included OpenAI Inc.’s Sam Altman, Alphabet Inc.’s Sundar Pichai and co-founder Sergey Brin, and Microsoft Corp.’s Satya Nadella and Bill Gates.

The president went around the table asking executives to talk about their plans. Corporate leaders took turns highlighting their efforts to expand in the US, with each expressing gratitude for administration policies they see as bolstering efforts to advance AI. Trump asked Zuckerberg to speak first.

“All of the companies here are building, just making huge investments in the country in order to build out data centers and infrastructure to power the next wave of innovation,” the Meta CEO told Trump. Pressed by the president on how much his company was investing, Zuckerberg said “at least $600 billion” through 2028.

“That’s a lot,” Trump said. In recent days, the president has touted a massive data center Meta is building in Louisiana that will cost $50 billion.

Trump has drawn tech executives into his orbit with an agenda aimed at lowering tax and regulatory burdens for business in a bid to ramp up investments in the US and secure the country’s dominance in cutting-edge tech sectors. The burgeoning artificial intelligence field has been a centerpiece of that focus.

Trump’s White House AI czar, Silicon Valley venture capitalist David Sacks, in July helped unveil a sweeping action plan calling for easing regulation of artificial intelligence, stepping up research and development, and boosting domestic energy production to fuel energy-hungry data centers — all to ensure the US keeps an edge over rivals such as China.

The president has secured billions in corporate commitments to drive construction of AI infrastructure. On Thursday, the White House hailed Hitachi Energy’s announcement that it planned to invest more than $1 billion in electric grid infrastructure that could support AI’s growing power demands.

More broadly, companies have announced plans to bolster US investment as they seek to avoid the tariffs Trump is imposing on imports to spur a shift toward domestic manufacturing of critical goods. Trump has indicated that some companies that commit to building in the US could get a break from some tariffs.

Cook, whose company last month committed to spending an additional $100 billion on domestic manufacturing for a total pledge of $600 billion, thanked Trump for “setting the tone such that we could make a major investment.”

The president indicated that Cook’s investment promise would help spare Apple from tariffs on semiconductor imports that the administration has plans to impose. “Tim Cook would be in pretty good shape,” Trump said.

Trump’s relationship with Silicon Valley took wing at his swearing-in ceremony in January, when Zuckerberg, Cook and Pichai each had prominent seats after having donated millions toward the inauguration. Trump and his allies will be eager to tap those pockets again ahead of next year’s midterm elections to determine control of Congress.

Earlier Thursday, many of the same executives joined first lady Melania Trump for a discussion on AI, where she hailed the business leaders as visionaries and urged their cooperation in helping responsibly guide the broader adoption of AI technology.

The first lady sat next to Trump during the White House dinner. Other attendees at the evening event included Oracle Corp. CEO Safra Catz and Lisa Su, the CEO of Advanced Micro Devices Inc.

The dinner was originally intended to be held in the newly renovated White House Rose Garden, where Trump installed stone pavers and furnished the space with patio tables and a sound system after complaining that the previous grass surface was unsuitable for large events. But inclement weather forced officials to move the event inside.

Wingrove and Dezenski write for Bloomberg.


AI can evaluate social situations in a similar way to humans, offering new neuroscience research avenues

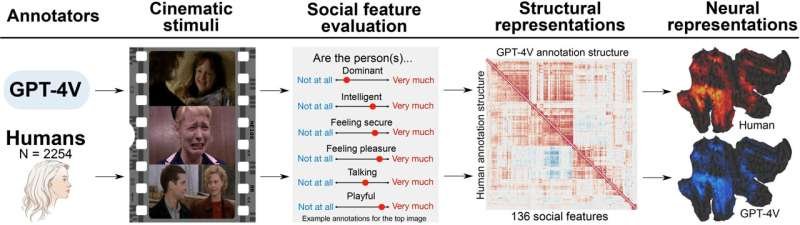

Artificial intelligence can detect and interpret social features between people from images and videos almost as reliably as humans, according to a new study from the University of Turku in Finland published in the journal Imaging Neuroscience.

People are constantly making quick evaluations about each other’s behavior and interactions. The latest AI models, such as the large language model ChatGPT developed by OpenAI, can describe what is happening in images or videos. However, it has not been clear whether AI’s interpretive capabilities are limited to easily recognizable details or whether it can also interpret complex social information.

Researchers at the Turku PET Center in Finland studied how accurately the popular language model ChatGPT can assess social interaction.

The model was asked to evaluate 138 different social features from videos and pictures. The features described a wide range of social traits such as facial expressions, body movements or characteristics of social interaction, such as co-operation or hostility.

The researchers compared the evaluations made by AI with more than 2,000 similar evaluations made by humans.

The research results showed that the evaluations provided by ChatGPT were very close to those made by humans. AI’s evaluations were even more consistent than those made by a single person.

“Since ChatGPT’s assessments of social features were on average more consistent than those of an individual participant, its evaluations could be trusted even more than those made by a single person. However, the evaluations of several people together are still more accurate than those of artificial intelligence,” says Postdoctoral Researcher Severi Santavirta from the University of Turku.

Artificial intelligence can boost research in neuroscience

In the second phase of the study, the researchers used the AI’s and human participants’ evaluations of social situations to model the brain networks of social perception using functional brain imaging.

Before researchers can look at what happens in the human brain when people watch videos or pictures, the social situations they depict need to be assessed. This is where AI proved to be a useful tool.

“The results were strikingly similar when we mapped the brain networks of social perception based on either ChatGPT or people’s social evaluations,” says Santavirta.

Researchers say this suggests that AI can be a practical tool for large-scale and laborious neuroscience experiments, where, for example, interpreting video footage during brain imaging would require significant human effort. AI can automate this process, thereby reducing the cost of data processing and significantly speeding up research.

“Collecting human evaluations required the efforts of more than 2,000 participants and a total of more than 10,000 work hours, while ChatGPT produced the same evaluations in just a few hours,” Santavirta summarizes.

Practical applications from health care to marketing

While the researchers focused on the benefits of AI for brain imaging research, the results suggest that AI could also be used for a wide range of other practical applications.

The automatic evaluation of social situations by AI from video footage could help doctors and nurses, for example, to monitor patients’ well-being. Furthermore, AI could evaluate the likely reception of audiovisual marketing by the target audience or predict abnormal situations from security camera videos.

“The AI does not get tired like a human, but can monitor situations around the clock. In the future, the monitoring of increasingly complex situations can probably be left to artificial intelligence, allowing humans to focus on confirming the most important observations,” Santavirta says.

More information:

Severi Santavirta et al, GPT-4V shows human-like social perceptual capabilities at phenomenological and neural levels, Imaging Neuroscience (2025). DOI: 10.1162/IMAG.a.134

Citation:

AI can evaluate social situations in a similar way to humans, offering new neuroscience research avenues (2025, September 5) retrieved 5 September 2025 from https://medicalxpress.com/news/2025-09-ai-social-situations-similar-humans.html
