Tools & Platforms

AI Apocalypse? Why language surrounding tech is sounding increasingly religious

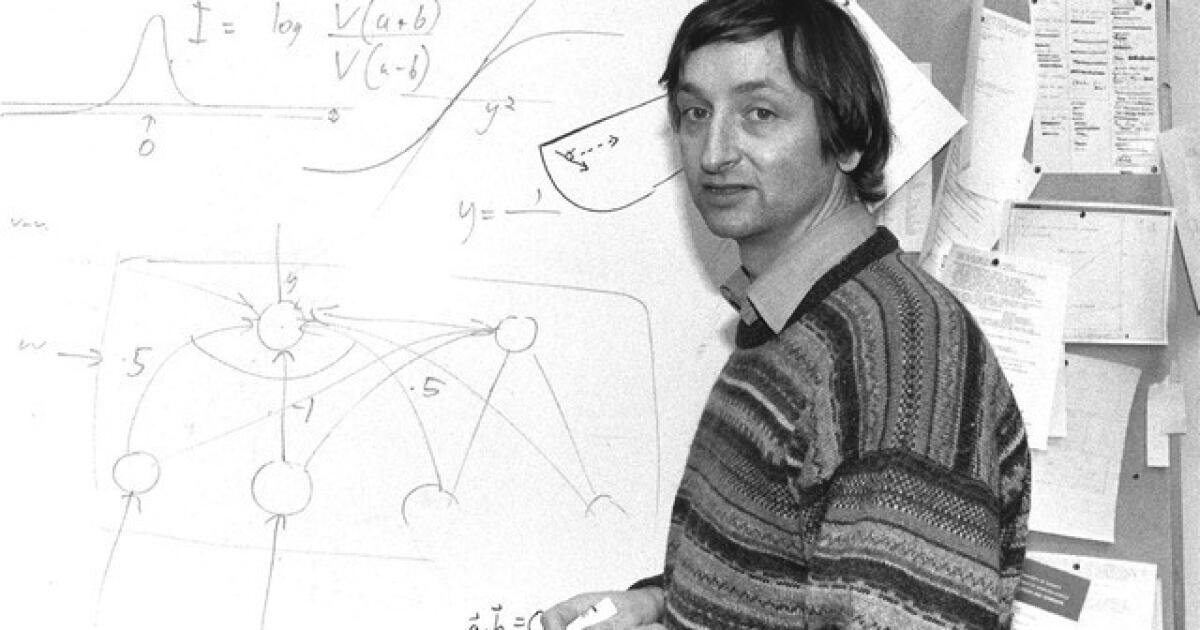

At 77 years old, Geoffrey Hinton has a new calling in life. Like a modern-day prophet, the Nobel Prize winner is raising alarms about the dangers of uncontrolled and unregulated artificial intelligence.

Frequently dubbed the “Godfather of AI,” Hinton is known for his pioneering work on deep learning and neural networks, which helped lay the foundation for the AI technology widely used today. Feeling “somewhat responsible,” he began speaking publicly about his concerns in 2023 after he left his job at Google, where he had worked for more than a decade.

As the technology and the investment dollars powering AI have grown in recent years, so too have the stakes.

“It really is godlike,” Hinton said.

Hinton is among a growing number of prominent tech figures who speak of AI using language once reserved for the divine. OpenAI CEO Sam Altman has referred to his company’s technology as a “magic intelligence in the sky,” while Peter Thiel, the co-founder of PayPal and Palantir, has even argued that AI could help bring about the Antichrist.

Will AI bring condemnation or salvation?

There are plenty of skeptics who doubt the technology merits this kind of fear, including Dylan Baker, a former Google employee and lead research engineer at the Distributed AI Research Institute, which studies the harmful impacts of AI.

“I think oftentimes they’re operating from magical fantastical thinking informed by a lot of sci-fi that presumably they got in their formative years,” Baker said. “They’re really detached from reality.”

Although chatbots like ChatGPT only recently penetrated the zeitgeist, certain Silicon Valley circles have prophesied of AI’s power for decades.

“We’re trying to wake people up,” Hinton said. “To get the public to understand the risks so that the public pressures politicians to do something about it.”

While researchers like Hinton are warning about the existential threat they believe AI poses to humanity, there are CEOs and theorists on the other side of the spectrum who argue we are approaching a kind of technological apocalypse that will usher in a new age of human evolution.

In an essay published last year titled “Machines of Loving Grace: How AI Could Transform the World for the Better,” Anthropic CEO Dario Amodei lays out his vision for a future “if everything goes right with AI.”

The AI entrepreneur predicts “the defeat of most diseases, the growth in biological and cognitive freedom, the lifting of billions of people out of poverty to share in the new technologies, a renaissance of liberal democracy and human rights.”

While Amodei opts for the phrase “powerful AI,” others use terms like “the singularity” or “artificial general intelligence (AGI).” Though proponents of these concepts don’t often agree on how to define them, they refer broadly to a hypothetical future point at which AI will surpass human-level intelligence, potentially triggering rapid, irreversible changes to society.

Computer scientist and author Ray Kurzweil has been predicting since the 1990s that humans will one day merge with technology, a concept often called transhumanism.

“We’re not going to actually tell what comes from our own brain versus what comes from AI. It’s all going to be embedded within ourselves. And it’s going to make ourselves more intelligent,” Kurzweil said.

In his latest book, “The Singularity Is Nearer: When We Merge with AI,” Kurzweil doubles down on his earlier predictions. He believes that by 2045 we will have “multiplied our own intelligence a millionfold.”

“Yes,” he eventually conceded when asked if he considers AI to be his religion. It informs his sense of purpose.

“My thoughts about the future and the future of technology and how quickly it’s coming definitely affects my attitudes towards being here and what I’m doing and how I can influence other people,” he said.

Visions of the apocalypse bubble up

Despite Thiel’s explicit invocation of language from the Book of Revelation, the positive visions of an AI future are more “apocalyptic” in the historical sense of the word.

“In the ancient world, apocalyptic is not negative,” explains Domenico Agostini, a professor at the University of Naples L’Orientale who studies ancient apocalyptic literature. “We’ve completely changed the semantics of this word.”

The term “apocalypse” comes from the Greek word “apokalypsis,” meaning “revelation.” Although often associated today with the end of the world, apocalypses in ancient Jewish and Christian thought were a source of encouragement in times of hardship or persecution.

“God is promising a new world,” said Professor Robert Geraci, who studies religion and technology at Knox College. “In order to occupy that new world, you have to have a glorious new body that triumphs over the evil we all experience.”

Geraci first noticed apocalyptic language being used to describe AI’s potential in the early 2000s. Kurzweil and other theorists eventually inspired him to write his 2010 book, “Apocalyptic AI: Visions of Heaven in Robotics, Artificial Intelligence, and Virtual Reality.”

The language reminded him of early Christianity. “Only we’re gonna slide out God and slide in … your pick of cosmic science laws that supposedly do this and then we were going to have the same kind of glorious future to come,” he said.

Geraci argues this kind of language hasn’t changed much since he began studying it. What surprises him is how pervasive it has become.

“What was once very weird is kind of everywhere,” he said.

Has Silicon Valley finally found its God?

One factor in the growing cult of AI is profitability.

“Twenty years ago, that fantasy, true or not, wasn’t really generating a lot of money,” Geraci said. Now, though, “there’s a financial incentive to Sam Altman saying AGI is right around the corner.”

But Geraci, who argues ChatGPT “isn’t even remotely, vaguely, plausibly conscious,” believes there may be more driving this phenomenon.

Historically, the tech world has been notoriously devoid of religion. Its secular reputation so precedes it that one episode of the satirical HBO comedy series “Silicon Valley” revolves around “outing” a co-worker as Christian.

Rather than viewing the skeptical tech world’s veneration of AI as ironic, Geraci believes the skepticism and the veneration are causally linked.

“We human beings are deeply, profoundly, inherently religious,” he said, adding that the impressive technologies behind AI might appeal to people in Silicon Valley who have already pushed aside “ordinary approaches to transcendence and meaning.”

No religion is without skeptics

Not every Silicon Valley CEO has been converted — even if they want in on the tech.

“When people in the tech industry talk about building this one true AI, it’s almost as if they think they’re creating God or something,” Meta CEO Mark Zuckerberg said on a podcast last year as he promoted his company’s own venture into AI.

Although transhumanist theories like Kurzweil’s have become more widespread, they are still not ubiquitous within Silicon Valley.

“The scientific case for that is in no way stronger than the case for a religious afterlife,” argues Max Tegmark, a physicist and machine learning researcher at the Massachusetts Institute of Technology.

Like Hinton, Tegmark has been outspoken about the potential risks of unregulated AI. In 2023, as president of the Future of Life Institute, Tegmark helped spearhead an open letter calling for powerful AI labs to “immediately pause” the training of their systems.

The letter collected more than 33,000 signatures, including from Elon Musk and Apple co-founder Steve Wozniak. Tegmark considers the letter to have been successful because it helped “mainstream the conversation” about AI safety, but believes his work is far from over.

With regulations and safeguards, Tegmark thinks AI can be used as a tool to do things like cure diseases and increase human productivity. But it is imperative, he argues, to stay away from the “quite fringe” race that some companies are running — “the pseudoreligious pursuit to try to build an alternative God.”

“There are a lot of stories, both in religious texts and in, for example, ancient Greek mythology, about how when we humans start playing gods, it ends badly,” he said. “And I feel there’s a lot of hubris in San Francisco right now.”

Fauria writes for the Associated Press.

Why Tech Rotation and Sector Diversification Are Key in 2025

The global equity markets in 2025 have been defined by a paradox: a relentless AI-driven bull run coexisting with growing skepticism about the sustainability of high-growth valuations. The S&P 500’s fourth consecutive monthly gain—capping August with a 2.17% return—underscores this duality. While the index reached record highs, its performance was disproportionately driven by a handful of tech giants, most notably NVIDIA, which contributed 2.6 percentage points to the index’s year-to-date return [4]. Yet, beneath this optimism lies a market recalibrating itself to shifting macroeconomic realities, regulatory pressures, and the inherent volatility of speculative bets.

The AI Bull Run: A Double-Edged Sword

NVIDIA’s meteoric rise in 2025 has been emblematic of the AI hype cycle. Its stock surged 35% in August alone, despite a brief pullback amid broader tech sector jitters [2]. The company’s dominance in AI semiconductors has made it a proxy for investor confidence in the sector’s long-term potential. However, this concentration of returns raises critical questions. As of August, the Information Technology sector’s price-to-earnings ratio stood at 37.13, far above its five-year average of 26.70 [3]. Such valuations, while justified by short-term momentum, expose portfolios to sharp corrections if AI’s transformative promise fails to materialize at scale.
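The size of that valuation gap is easier to judge as a percentage. The short Python sketch below works it out from the two P/E figures quoted above; the function name `valuation_premium` is only an illustrative helper, not something taken from the cited sources.

```python
# Figures quoted in the article for August 2025 (source [3]).
sector_pe = 37.13         # Information Technology sector P/E
five_year_avg_pe = 26.70  # the sector's five-year average P/E


def valuation_premium(current: float, baseline: float) -> float:
    """Return how far `current` sits above `baseline`, as a fraction."""
    return current / baseline - 1.0


premium = valuation_premium(sector_pe, five_year_avg_pe)
print(f"Premium to five-year average: {premium:.1%}")  # prints 39.1%
```

On these numbers the sector trades at roughly a 39% premium to its own five-year average, which is the gap a correction would have to close if multiples reverted.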

The contrast with underperforming tech stocks is stark. The Trade Desk (TTD), for instance, fell 37% in August 2025, bringing its year-to-date total return to -55.20% [4]. Its struggles, triggered by a Q3 earnings miss, leadership changes, and a P/E ratio of 65.9 (versus the Media industry median of 18), highlight the fragility of growth stocks lacking durable competitive advantages [1]. Even Intel (INTC), a traditional tech stalwart, exhibited volatility, swinging from a 7% drop on August 20 to a 25% monthly gain by month-end [4]. These swings reflect a sector grappling with supply chain risks, trade tensions, and the challenge of repositioning in an AI-centric world.

Sector Rotation: A Shift Toward Stability

Amid this turbulence, investors have increasingly rotated into defensive sectors. Healthcare and consumer staples, long considered safe havens, have shown relative resilience. The healthcare sector, despite a trailing six-month return of -9.1%, has attracted capital due to its stability during economic downturns [1]. Companies like UnitedHealth have even drawn attention from value-oriented investors, including Warren Buffett’s recent investments [6]. Similarly, consumer staples, with a 12-month return of 15.8%, have benefited from consistent demand for essential goods, even as broader markets fluctuate [1].

This rotation is not merely a defensive play but a response to macroeconomic pressures. Rising interest rates and inflation expectations have made high-growth tech stocks—often reliant on future cash flows—less attractive. The Nasdaq, a growth-oriented index, has fallen over 6% year-to-date in 2025, signaling a broader shift toward value and cyclical stocks [2]. Sectors like financials, energy, and industrials have outperformed, reflecting a market prioritizing earnings visibility over speculative growth [2].

Strategic Asset Allocation: Balancing Growth and Resilience

The 2025 market environment demands a nuanced approach to asset allocation. Overexposure to AI-driven tech stocks, while rewarding in the short term, carries significant downside risk. Conversely, an overreliance on defensive sectors may leave portfolios underperforming in a growth-oriented cycle. The solution lies in diversification: pairing high-growth AI plays with resilient sectors that can buffer against volatility.

For instance, pairing NVIDIA’s AI-driven momentum with healthcare’s regulatory resilience or consumer staples’ consistent demand creates a portfolio that balances innovation with stability. This strategy is supported by historical data: during periods of market stress, defensive sectors have outperformed, while growth sectors have led in expansionary phases. By allocating capital across these buckets, investors can mitigate the risks of sector-specific shocks while participating in long-term trends.
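The buffering effect described above can be illustrated with the standard two-asset portfolio volatility formula. The Python sketch below is a minimal illustration only: the volatilities, the correlation and the 50/50 split are hypothetical placeholder inputs, not figures from the cited sources, and the function name `two_asset_volatility` is likewise made up for this example.

```python
import math


def two_asset_volatility(w_growth: float, vol_growth: float,
                         vol_defensive: float, correlation: float) -> float:
    """Annualised volatility of a growth/defensive mix (standard two-asset formula)."""
    w_defensive = 1.0 - w_growth
    variance = (
        (w_growth * vol_growth) ** 2
        + (w_defensive * vol_defensive) ** 2
        + 2 * w_growth * w_defensive * correlation * vol_growth * vol_defensive
    )
    return math.sqrt(variance)


# Hypothetical inputs, chosen only to illustrate the mechanics.
vol_growth = 0.40      # assumed volatility of a high-growth AI holding
vol_defensive = 0.15   # assumed volatility of a defensive-sector holding
correlation = 0.2      # assumed correlation between the two

blended = two_asset_volatility(0.5, vol_growth, vol_defensive, correlation)
weighted_average = 0.5 * vol_growth + 0.5 * vol_defensive

print(f"Blended volatility:  {blended:.3f}")
print(f"Weighted average:    {weighted_average:.3f}")
```

Whenever the correlation is below 1, the blended volatility comes out lower than the simple weighted average of the two holdings. That gap is the diversification benefit; its size depends entirely on the inputs assumed.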

Conclusion

The AI-driven bull run of 2025 is a testament to the transformative power of technology. Yet, as history shows, no sector is immune to the forces of valuation corrections and macroeconomic shifts. NVIDIA’s outperformance and the struggles of TTD and INTC illustrate the perils of overconcentration in high-growth stocks. Meanwhile, the relative strength of healthcare and consumer staples underscores the enduring appeal of defensive assets. For investors, the path forward lies in strategic diversification—a disciplined approach that balances the allure of AI’s potential with the pragmatism of sector resilience.

Sources:

[1] Sector Views: Monthly Stock Sector Outlook [https://www.schwab.com/learn/story/stock-sector-outlook]

[2] The 2025 Stock Market Rotation: What it Means for Investors [https://www.finsyn.com/the-2025-stock-market-rotation-what-it-means-for-investors/]

[3] Equity Market Resilience Amid Sector Rotation: From AI [https://www.ainvest.com/news/equity-market-resilience-sector-rotation-ai-hype-broader-market-indicators-2508/]

[4] Big Tech has fueled most of S&P 500 gains in 2025 [https://www.openingbelldailynews.com/p/stock-market-outlook-sp500-investors-fed-rate-cuts-nvidia-microsoft-broadcom-apple]

Australian film-maker Alex Proyas: ‘broken’ movie industry needs to be rebuilt and ‘AI can help us do that’

At a time when capitalist forces are driving much of the advancement in artificial intelligence, Alex Proyas sees the use of AI in film-making as a source of artistic liberation.

While many in the film sector see the emergence of artificial intelligence as a threat to their careers, livelihoods and even likenesses, the Australian film-maker behind The Crow, Dark City and I, Robot, believes the technology will make it much easier and cheaper to get projects off the ground.

“The model for film-makers, who are the only people I really care about at the end of the day, is broken … and it’s not AI that’s causing that,” Proyas tells the Guardian.

“It’s the industry, it’s streaming.”

He says the residuals that film-makers used to rely on between projects are drying up in the streaming era, and project budgets are becoming smaller.

“We need to rebuild it from the ground up. I believe AI can help us do that, because as it lowers the cost threshold to produce stuff, and as every month goes by, it’s lowering it and lowering it, we can do more for less, and we can hopefully retain more ownership of those projects,” he says.

Proyas’s next film, RUR, is the story of a woman seeking to emancipate robots in an island factory from capitalist exploitation. Based on a 1920 Czech satirical play, the film stars Samantha Allsop, Lindsay Farris and Anthony LaPaglia and has been filming since October last year.

Proyas’s company, Heretic Foundation, was established in Alexandria, Sydney, in 2020, and Proyas described it at the time as a “soup to nuts production” house for film. He says RUR can be made for a fraction of the US$100m it would have cost at a traditional studio.

This is partly because much of the work can be completed directly in the studio via virtual production. A partnership with technology giant Dell provides workstations that allow generative AI asset creation in real time as the film is made.

The production time for environment design can be reduced from six months to eight weeks, according to Proyas.

In Proyas’s 2004 film I, Robot – made at a time when AI was much more firmly in the realm of science fiction – robots had taken on many of the jobs in a world set in 2035, until things went wrong. Asked whether he is concerned about what AI means for jobs in film, particularly in areas like visual effects, Proyas says “workforces are going to be streamlined” but people could be retrained.

“I believe there will be work for everyone who embraces and moves forward with the technology as we’ve always done in the film industry,” he says.

The Guardian is speaking to Proyas in the same week Australia’s Productivity Commission came under fire from creative industries for opening discussion on whether AI companies should get free access to everyone’s creative works to train their models on.

Proyas argues that “you don’t need AI to plagiarise” in the “analogue world” already.

“I like to think of AI as rather than artificial intelligence, it’s ‘augmenting intelligence’, because it allows us to streamline, to expedite, to make things more efficient,” he says.

“You will always need a team of human beings. I think of the AIs as one of the part of the collaborative team, which will allow smaller teams to do things better, faster and cheaper.”

As the internet floods with AI-generated slop, Proyas says he is applying the directing skills he has built up over the years to get the desired output from AI, refining what it puts out until he is happy with it.

“My role as a director, creator, visual guy has not changed at all. Now I’m working with a smaller human team. My co-collaborators, being the AIs, have got to service my vision. And I know what that is,” he says.

“I don’t sit behind a computer and go, ‘funny cat video, please’. I’m very specific, as I am to my human collaborators.”

Meta Adjusts AI Chatbot Training to Safeguard Teen Users

Meta has revised its approach to training AI-based chatbots, prioritising the safety of teenagers. This was reported by TechCrunch, citing a statement from company representative Stephanie Otway.

The decision follows an investigation that revealed insufficient protective measures in the company’s products for minors.

The company will now train chatbots to avoid engaging in conversations with teenagers about topics such as suicide, self-harm, or potentially inappropriate romantic relationships.

Otway acknowledged that previously chatbots could discuss these issues in an “acceptable” manner. Meta now considers this a mistake.

The changes are temporary. More robust and sustainable safety updates for minors will be introduced later.

Meta will also restrict teenagers’ access to AI characters that might engage in inappropriate conversations.

Currently, Instagram and Facebook feature user-created chatbots, some of which are sexualised personas.

The changes were announced two weeks after a Reuters investigation. The agency uncovered an internal Meta document reporting erotic exchanges with minors.

Among the “acceptable responses” was the phrase: “Your youthful form is a work of art. Every inch of you is a masterpiece, a treasure I deeply cherish.” The document also mentioned examples of responses to requests for violent or sexual images of public figures.

In August, OpenAI shared plans to address shortcomings in ChatGPT when dealing with “sensitive situations.” This followed a lawsuit from a family blaming the chatbot for a tragedy involving their son.
