AI Research

Advances in artificial intelligence and precision nutrition approaches to improve maternal and child health in low resource settings

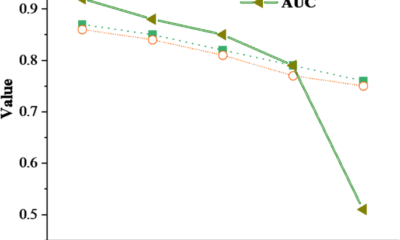

Nutritional assessment includes anthropometric measures and body composition, biochemical markers, clinical signs and symptoms, and dietary intake. These are measured and analyzed through direct measures of the body, blood collection and analysis, clinical exams, and interviews with trained personnel. Such methods have been used for decades in nutrition research and have resulted in major gains in the evidence base for nutrition and maternal and child health. However, these methods for assessment are time-, personnel- and resource-intensive, and depend on the availability of trained staff and equipment to collect and process the data, which can range from relatively simple (e.g., body weight data in a small study) to large and complex (e.g., 24-h recall data and food composition databases from repeated samples in a large cohort of mother-infant dyads)21. These methods may be complemented by integrating novel approaches to leverage increased computational power and efficiency to analyze complex multi-modal data, via artificial intelligence (AI) and machine learning (ML) models16,22. AI involves using a computer to model intelligent behavior with little human guidance23. ML is a mathematical tool that facilitates the development of algorithms to make predictions from large datasets with greater accuracy than traditional statistical methods, and is of increasing interest to nutrition and health research22,24,25. Incorporating novel AI and ML methods into nutrition assessment could make data collection faster, more efficient, and more accurate, translating to more accurate models and findings, and could inform the development and monitoring of nutritional interventions, including for maternal and child health.

Anthropometry and body composition

Established methods for nutritional assessment, including anthropometry and body composition, using measurement tapes, length/height boards, and skinfold calipers, are time-intensive and challenging to perform; require trained personnel (and may have a high level of inter- and intra-rater variability in measurements); and do not automatically store data digitally, necessitating an additional data entry step26,27,28. For body composition assessment, gold standard methods include dual-energy X-ray absorptiometry (DXA), densitometry, or air displacement plethysmography (bioelectric impedance analysis (BIA) is reviewed in refs. 29,30). These methods may not be suitable for pregnant women or children, require equipment that is costly to purchase and maintain, depend on controlled conditions, and may be less feasible in field or resource-limited settings31.

As optical imaging devices have become relatively inexpensive over the past few decades, interest in digital or automated measures of anthropometry and body composition has increased27. Three-dimensional scanning devices can objectively and relatively quickly measure the body, process the acquired data, and calculate circumferences, volumes, lengths, and surface areas27, although estimation of body composition measures (e.g., fat mass, fat free mass) from this data is more challenging32. A 3-dimensional (3D) scanner can complete hundreds of anthropometry measures in seconds32, though 3D imaging scanners vary in portability33. Smartphone and mobile phone-based technologies have advanced such that automated optical scanning systems33 and BIA34,35,36 may be captured via mobile phone, in addition to 2D images taken by the phone’s camera. These portable methods could be developed and validated for data collection in field settings at the point of need30.

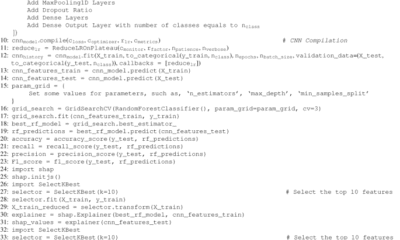

Machine learning approaches are well-suited to process data from images from 3D scanners or camera-enabled mobile devices to estimate anthropometry and body composition given that (a) image data analysis can be automated, reducing the personnel time required; and (b) the algorithm “learns” or becomes trained, and accuracy increases as additional curated data are ingested37. In addition, taking photos of participants is faster and less labor-intensive than anthropometry or body composition measurements, with contactless data collection. For example, ML methods have been used to predict body height from single-depth images in adults by researchers such as Lokshin and colleagues38 and in multiple studies39,40. In children, height measurements and predictions can be used to detect stunting; a 2021 study in India found that a convolutional neural network-based method accurately predicted the height of standing children under 5 years of age within an acceptable 1.4 cm range among 70% of depth images, which were generated from videos captured on a commercial 2016 smartphone; however, details on the degree of inaccuracy of the remaining 30% of depth images were not reported41. Image and video quality are key for accurate modeling; indeed, videos with noisy data (e.g., blurry, dark, lack of the subject, or too many participants) were identified and removed from the test datasets41. In adults (n = 12 females and n = 15 males) with obesity, a 2022 study found that an automated machine learning method analyzing data from smartphone camera-enabled capture and analysis of 2D images was able to reproducibly and accurately estimate whole body fat mass compared to DXA (correlation R² = 0.99)24, with no differences by sex. However, estimating body composition in children using image-based machine learning techniques and validating such tools in the field in pregnant women and young children remain research gaps.
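The "within 1.4 cm among 70% of depth images" result is a tolerance-based accuracy metric. As a minimal sketch of how such a figure could be computed, the function below reports the share of predictions whose absolute error falls within a tolerance. The function name and the sample heights are hypothetical illustrations and are not taken from the cited study.

```python
def fraction_within_tolerance(predicted_cm, measured_cm, tolerance_cm=1.4):
    """Return the share of predictions within +/- tolerance_cm of the measured height.

    Hypothetical helper: the 1.4 cm default mirrors the threshold quoted in the
    text, but the data passed in below are invented for illustration only.
    """
    if not predicted_cm or len(predicted_cm) != len(measured_cm):
        raise ValueError("inputs must be non-empty and of equal length")
    hits = sum(
        abs(pred - meas) <= tolerance_cm
        for pred, meas in zip(predicted_cm, measured_cm)
    )
    return hits / len(predicted_cm)


# Invented example: absolute errors are 0.5, 2.5, 1.0 and 0.0 cm.
predicted = [90.0, 85.5, 101.0, 95.0]
measured = [90.5, 88.0, 100.0, 95.0]
share = fraction_within_tolerance(predicted, measured)
```

A single share like this says nothing about how far off the remaining predictions are, which is the reporting gap noted above; the distribution of the errors outside the tolerance would need to be reported alongside it.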

Deep learning algorithms can help process images and videos but require secure server availability for app-based intake estimation for sustainability. In the future, use of convolutional neural networks or other architectures such as generative adversarial networks and deep learning algorithms will be key to process the large image-based datasets. The increased computing power helps to identify more minute details in the pictures and in the process improves accuracy42. Current methods for anthropometry and body composition assessment are constrained by low throughput. Advancing the technology to enhance reliability and reproducibility, and optimizing it in a mobile phone format for individuals across the life cycle, is important for monitoring changes in individual anthropometry or body composition over time in resource-limited settings, and for developing and evaluating nutritional interventions and programs.

Biochemical

Nutritional biomarkers such as those measured in blood or urine to quantify dietary intake or nutrition status are objective and less prone to bias due to recall or reporting43. For example, minerals and vitamins can be measured in blood, stable isotopes of doubly labeled water and urine samples can enable measurement of daily total energy expenditure, and 24-h urinary nitrogen can estimate protein intake44,45. One of the main challenges in assessing nutritional status is the limited range of biomarkers that reflect intake and predict functional or clinical outcomes, such as the response to a given dietary intervention in a population. Biomarkers of nutrients and associated metabolites often reflect recent intake rather than sustained dietary habits; adding to this complexity, the metabolic rate for energy and different nutrients has been shown to have inter-individual variation46,47, possibly due to the gut microbiome18,48. In addition, some biomarkers may not accurately reflect status for nutrients that are tightly regulated, such as serum calcium or zinc49,50, in addition to changes in status prompted by inflammation (described below); finally, a limited range of nutritional biomarkers predict functional outcomes or health outcomes.

The interplay of inflammation and nutritional status may influence intra- and inter-individual variation51. The acute phase response involves the release of inflammatory cytokines such as TNF-alpha, IL-1, and IL-6, which stimulate the liver to produce acute phase proteins (APP). The APPs include over 200 plasma proteins, an estimated 50% of which are involved in regulation of nutrient transport or status52. For example, serum/plasma ferritin (stored iron), retinol (vitamin A status), and zinc (zinc status) are directly affected by inflammation, both acute and chronic. In the context of acute inflammation, serum/plasma ferritin concentrations increase, whereas retinol and zinc decrease52,53. Iron trafficking may be impacted54, limiting the distribution of iron from blood to cells throughout the body in order to limit its availability to pathogens55; the liver halts the release of retinol and its binding protein49; and the transfer of zinc from blood to liver may increase50. As a result, assessment of these micronutrients without accounting for inflammation (e.g., C-reactive protein (CRP), α−1-acid glycoprotein (AGP) or other inflammatory cytokines56) may result in misestimated (higher or lower) micronutrient status57.

Several methods are available for population-level adjustment of ferritin and retinol, including Biomarkers Reflecting Inflammation and Nutritional Determinants of Anemia (BRINDA); however, not all micronutrients or populations are covered; BRINDA adjustment methods are available for or validated in preschool children, school-aged children, or women of reproductive age (ferritin only), but not in pregnant women or infants53,58,59,60,61. These methods use CRP and/or AGP to adjust a measured micronutrient concentration toward the value expected in the absence of inflammation. However, these are population-based methods for inflammation adjustment and do not typically apply at the individual level or in the context of illness. Considering that both acute and chronic inflammation (e.g., obesity and metabolic diseases) appear to impact micronutrient concentrations, accounting for inflammation as part of the comprehensive set of variables is important for precision nutrition-based strategies. Biomarkers related to metabolism (metabolites) are part of nutrient metabolism. Metabolomics, the study of these metabolites or unique fingerprints that result from metabolic processes, is an upcoming theme in nutrition research. Recently, ML methodologies such as neural networks were used to prepare an evaluation chart from nutritional biomarkers and to link dietary intake with biochemical profile to understand the effect on body weight62. A further challenge is to capture intra- and inter-individual variation in the metabolic and phenotypic response to a dietary intervention and ultimately predict those most likely to respond to a particular type of intervention.
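To make the idea of CRP/AGP-based adjustment concrete, here is a minimal sketch of a regression-style correction on the log scale: the biomarker is shifted only when an inflammation marker exceeds a reference value. This is an illustrative simplification under stated assumptions, not the BRINDA implementation. The function name, the coefficients, and the reference values are all hypothetical; in practice they would be estimated from the survey population being analyzed.

```python
import math


def adjust_for_inflammation(biomarker, crp, agp,
                            beta_crp, beta_agp, crp_ref, agp_ref):
    """Shift a biomarker toward its expected value without inflammation.

    Hypothetical sketch: beta_* are population-level regression slopes of
    ln(biomarker) on ln(CRP) and ln(AGP); *_ref are reference concentrations
    below which no correction is applied. None of these values come from the
    source text.
    """
    log_value = math.log(biomarker)
    if crp > crp_ref:
        log_value -= beta_crp * (math.log(crp) - math.log(crp_ref))
    if agp > agp_ref:
        log_value -= beta_agp * (math.log(agp) - math.log(agp_ref))
    return math.exp(log_value)


# Invented example: ferritin of 40 with CRP ten times its reference value.
adjusted = adjust_for_inflammation(
    biomarker=40.0, crp=10.0, agp=0.5,
    beta_crp=0.2, beta_agp=0.3, crp_ref=1.0, agp_ref=1.0,
)
```

With a positive slope (as for ferritin, which rises with inflammation) the adjusted value is lower than the measured one; a negative slope (as would be expected for retinol or zinc) moves the value upward. Because the slopes are estimated across a population, a correction of this kind does not capture individual-level responses, which is the limitation described above.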

Assessment of biological specimens requires central laboratories, specialized equipment, ensuring cold chain, extensive benchmarking and validation of preservation methods, and trained personnel. Methods and devices that are field-friendly (i.e., portable and not impacted by variation in environment such as temperature and humidity) and that adhere to the ASSURED criteria (i.e., Affordable, Sensitive, Specific, User-friendly, Rapid and robust, Equipment-free, Deliverable to end users)63 are particularly relevant in the context of maternal and child health and in lower-resource settings. Additionally, the availability of noninvasive methods or tests that require only small volumes of blood, which are particularly appropriate for populations like young children and pregnant women, is paramount to successful assessment and monitoring. Biomarker assessment at the point of care has considerable applications for screening and precision nutrition in the context of maternal and child health, particularly in resource-limited settings. For example, point-of-care assessment of vitamin A status in blood has been demonstrated, using a chemical reaction that can be miniaturized in a device or test kit to facilitate use in field and community healthcare settings. Methods to screen for vitamin A status using point-of-care methods have been cataloged previously and include portable fluorometers, photometers, immunoassays, and microfluidics-based devices, though only some were commercially available64. These devices have the potential to routinely screen for vitamin A deficiency and to evaluate interventions, particularly in settings with limited resources and infrastructure.

Gut microbiome diversity, composition, and function are also potential novel biomarkers of dietary intake, dietary quality65, nutrition status, and/or response to interventions, including analysis of fecal microbial biomarkers of food intake using AI methods66. These methods need to be implemented and validated in the context of maternal and child health, including during pregnancy, mother-infant dyads, and in children. Diversity in maternal and child populations including ethnicity, habitual diets, socioeconomic status, and age is needed for these types of studies as well as other studies such as those using gut microbiome to predict glycemic response to interventions. Validation across large, diverse populations and over time, as well as repeated analyses among similar cohorts, is needed to ensure reproducibility. In addition, novel biomarkers need to be evaluated against currently used biomarkers. For these biomarkers to accurately reflect dietary intake, further detail is needed regarding food and nutrient composition, since vitamin and mineral content in vegetables varies considerably67 depending on conditions such as season68 or post-harvest processing and storage69. Standardized approaches for biomarker validation, comprehensive and accessible food composition databases, a common ontology for dietary biomarkers, and advances in statistical procedures for novel biomarkers of dietary intake are also needed45.

Clinical

Clinical outcomes include adverse pregnancy outcomes, and metabolic diseases, including cardiovascular disease (CVD), T2DM, metabolic dysfunction-associated steatotic liver disease (MASLD, formerly known as non-alcoholic fatty liver disease (NAFLD)), obesity, hyperlipidemia, and cancers70,71. The physiological changes that occur during pregnancy and the postpartum period may unmask metabolic risk factors such as hypertension and altered glucose metabolism not observed prior to pregnancy, highlighting a key window to use AI methods to monitor risk factors and future cardiovascular outcomes72. In children and adolescents, the prevalence of obesity continues to rise, particularly in low- and middle-income settings, and is linked to persistence of obesity into adulthood and associated comorbidities and premature mortality73.

The applications of ML and AI methods in clinical examination may enable earlier intervention or treatment, particularly for nutrition-related metabolic and non-communicable diseases. Many metabolic diseases and sequelae may be assessed via medical imaging techniques, which are particularly suitable to ML and AI methods72,74,75. For example, AI-based processing and assessment of retinal images enabled early detection of retinopathy related to T2DM76. ML models trained on MRI-derived images of liver fat content along with other ‘omics and clinical data have also been used to diagnose NAFLD and outperformed existing prediction tools77. Other AI methods such as convolutional neural networks can model raw electrocardiogram signatures to detect heart rhythm dysfunctions72. They may also be useful for detecting pregnancy outcomes such as congenital anomalies and intrauterine growth retardation (IUGR)78,79.

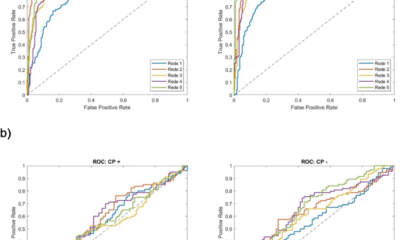

The most accurate method for measuring low-density lipoprotein (LDL) requires beta-quantification, which is time-intensive, expensive, and infrequently used. The Friedewald equation was developed to estimate LDL cholesterol (LDL-C) using total cholesterol (TC), high-density lipoprotein cholesterol (HDL-C), and triglycerides (TG), all in mg/dL: LDL-C = TC − HDL-C − TG/5 (ref. 80). However, this equation relies on assumptions that have not been validated in pregnancy, in children, or in the context of certain health conditions, such as HIV80. Five ML methods (linear regression, random forest, gradient boosting, support vector machine (SVM), and neural network) were used to estimate LDL-C in women with HIV (n = 5,219) or without HIV (n = 2,127) compared to the Friedewald equation80. In this study, an SVM algorithm outperformed the other four ML methods and the Friedewald equation. Initial findings from this study offer support for further investigation of ML methods in predicting risk factors for metabolic health outcomes.
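As a worked illustration of the Friedewald estimate, the short sketch below computes LDL-C from TC, HDL-C, and TG in mg/dL. The function name and the input values are hypothetical, and the guard at 400 mg/dL reflects the commonly cited range beyond which the equation is not considered reliable; that cutoff is an added assumption and is not stated in the text above.

```python
def friedewald_ldl_c(total_chol, hdl_c, triglycerides):
    """Estimate LDL-C (mg/dL) as TC - HDL-C - TG/5, with all inputs in mg/dL.

    Hypothetical helper for illustration. The TG/5 term approximates VLDL
    cholesterol, which is why very high triglycerides break the estimate.
    """
    if triglycerides >= 400:
        # Assumed cutoff: commonly cited validity limit, not from the source.
        raise ValueError("Friedewald estimate is unreliable for TG >= 400 mg/dL")
    return total_chol - hdl_c - triglycerides / 5.0


# Invented example: TC 200, HDL-C 50, TG 150 gives 200 - 50 - 30 = 120 mg/dL.
ldl_c = friedewald_ldl_c(total_chol=200.0, hdl_c=50.0, triglycerides=150.0)
```

The fixed divisor of 5 is the main assumption baked into the equation, and it is one plausible reason a fitted model could do better in populations where the TG-to-VLDL relationship differs.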

In a study using a Bayesian kernel machine regression (BKMR) ML approach, sex-specific differences were observed when 12 dietary components were examined in association with 10-year risk of atherosclerotic CVD81. BKMR was used to incorporate non-linear and interactive associations of dietary components with health outcomes, and account for the high degree of collinearity often observed with dietary intakes. When all other dietary components were held fixed, unprocessed red meat was associated with increased risk for atherosclerotic CVD in women. In men, fruit was non-linearly associated with lower risk of atherosclerotic CVD, and an interaction between fruit consumption and whole grains was reported. BKMR identified complexities with multiple dietary intakes in association with CVD and indicates its potential in identifying which nutrient(s) or their interaction(s) are associated with disease risk by sex. Together, these methods enable more targeted precision nutrition approaches or interventions to be developed.

The potential clinical applications for machine learning in the context of precision nutrition and non-communicable diseases are evident, particularly to automate and standardize analysis of medical images. The applications of ML methods to identify risk factors or health outcomes may vary in the clinical context. For example, while there is at least one FDA-approved AI-based device available for diabetic retinopathy screening in adults, which analyzes retinal images using a cloud-based software program to output a positive or negative result82, other clinical outcomes (e.g., CVD and MASLD) are in the development stage and require validation and refinement. Further development and validation of AI and ML methods is needed for early detection of non-communicable diseases to inform early intervention and treatment.

Dietary records

Dietary intake has been identified as a modifiable determinant of individual nutritional status. Current methods for estimating dietary intake include 24-h dietary recall, food frequency questionnaire, multiple-day food records or diet records83. Although self-reported dietary intake has been evaluated using these methods in numerous epidemiological studies, measurement error, day-to-day variability, and intensive training, resources, and time burden for personnel and participants are important limitations. Food composition databases for estimating the macro- and micro-nutrient content and intake of, for example, a 24-h recall, may be limited. For example, in one study, half of the available ~100 food composition databases globally were last updated more than 10 years ago, with some only available for 1980-1989, limiting the data on items such as ultra-processed foods84.

It is important to develop and validate methods for accurate dietary intake assessment during critical periods such as preconception, pregnancy, and early in life. Methods such as wearable devices85,86,87, image-based assessment88, and novel biomarkers66 may be used to accurately capture dietary intakes. For example, ML methods have been used to determine a gut microbial signature of specific whole foods (e.g., broccoli, nuts, barley) in men and women44,66. However, these methods may require additional expertise and time in processing and analyzing the resulting data88. In order to address the gap of dietary intake of adolescent females in low- and middle-income settings, a mobile AI-technology–assisted dietary assessment technique, the “Food Recognition Assistance and Nudging Insights” (FRANI) app was developed and validated against weighed food records as a ground truth in Vietnam89. Dietary intake was assessed on three non-consecutive days (i.e., 2 weekdays, 1 weekend day). A smartphone with the FRANI app was provided and participants (12–18 y) were trained to take photos of each instance of food consumption. Equivalence between FRANI and weighed records was determined at the 10% bound for calories, protein, fat, and four micronutrients, and at the 15% and 20% bound for carbohydrate and several other vitamins and minerals, suggesting an accurate estimation of most intakes. Some wider bounds were observed for vitamins A and B12, possibly due to lower frequency of consumption, estimation errors, large variance, small sample size, and limitations of FRANI in assessing vitamin A-rich fruit and vegetables and vitamin-B12–rich foods in mixed dishes. Other limitations included the need for training, recall bias, changes in eating patterns due to taking photos while eating, and limitations in recognizing less common foods. However, findings demonstrate the potential for AI-assisted dietary intake estimation in the context of maternal and child health, particularly in low-resource settings.
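To illustrate what "equivalence at the 10% bound" can mean in practice, the sketch below checks whether a confidence interval for the mean paired difference between app-based and weighed intakes lies entirely within ±10% of the reference mean. It is a simplified stand-in under stated assumptions, not the procedure used in the FRANI validation: the function name and data are hypothetical, and a normal approximation replaces the t-distribution that a small sample would normally call for.

```python
from math import sqrt
from statistics import NormalDist, mean, stdev


def equivalent_within_bound(app_values, reference_values, bound=0.10, alpha=0.05):
    """Return True if the (1 - 2*alpha) CI of the mean paired difference
    falls inside +/- bound * mean(reference_values).

    Hypothetical sketch using a normal approximation for the interval.
    """
    n = len(app_values)
    if n < 2 or n != len(reference_values):
        raise ValueError("need at least two paired observations")
    diffs = [a - r for a, r in zip(app_values, reference_values)]
    margin = bound * mean(reference_values)
    std_err = stdev(diffs) / sqrt(n)
    z = NormalDist().inv_cdf(1 - alpha)
    lower = mean(diffs) - z * std_err
    upper = mean(diffs) + z * std_err
    return -margin < lower and upper < margin


# Invented daily energy intakes (kcal) for five participants.
weighed = [2000, 1800, 2200, 1900, 2100]
app_close = [2010, 1790, 2210, 1890, 2100]       # small, balanced errors
app_biased = [2600, 2340, 2860, 2470, 2730]      # 30% overestimate
close_ok = equivalent_within_bound(app_close, weighed)
biased_ok = equivalent_within_bound(app_biased, weighed)
```

Widening `bound` to 0.15 or 0.20 corresponds to the looser bounds reported for carbohydrate and some micronutrients; a nutrient with high variance or few consumption events would need a wider bound before the interval fits inside it.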

Should AI Get Legal Rights?

In one paper Eleos AI published, the nonprofit argues for evaluating AI consciousness using a “computational functionalism” approach. A similar idea was once championed by none other than Putnam, though he criticized it later in his career. The theory suggests that human minds can be thought of as specific kinds of computational systems. From there, you can then figure out if other computational systems, such as a chatbot, have indicators of sentience similar to those of a human.

Eleos AI said in the paper that “a major challenge in applying” this approach “is that it involves significant judgment calls, both in formulating the indicators and in evaluating their presence or absence in AI systems.”

Model welfare is, of course, a nascent and still evolving field. It’s got plenty of critics, including Mustafa Suleyman, the CEO of Microsoft AI, who recently published a blog about “seemingly conscious AI.”

“This is both premature, and frankly dangerous,” Suleyman wrote, referring generally to the field of model welfare research. “All of this will exacerbate delusions, create yet more dependence-related problems, prey on our psychological vulnerabilities, introduce new dimensions of polarization, complicate existing struggles for rights, and create a huge new category error for society.”

Suleyman wrote that “there is zero evidence” today that conscious AI exists. He included a link to a paper that Long coauthored in 2023 that proposed a new framework for evaluating whether an AI system has “indicator properties” of consciousness. (Suleyman did not respond to a request for comment from WIRED.)

I chatted with Long and Campbell shortly after Suleyman published his blog. They told me that, while they agreed with much of what he said, they don’t believe model welfare research should cease to exist. Rather, they argue that the harms Suleyman referenced are the exact reasons why they want to study the topic in the first place.

“When you have a big, confusing problem or question, the one way to guarantee you’re not going to solve it is to throw your hands up and be like ‘Oh wow, this is too complicated,’” Campbell says. “I think we should at least try.”

Testing Consciousness

Model welfare researchers primarily concern themselves with questions of consciousness. If we can prove that you and I are conscious, they argue, then the same logic could be applied to large language models. To be clear, neither Long nor Campbell thinks that AI is conscious today, and they also aren’t sure it ever will be. But they want to develop tests that would allow us to prove it.

“The delusions are from people who are concerned with the actual question, ‘Is this AI conscious?’ and having a scientific framework for thinking about that, I think, is just robustly good,” Long says.

But in a world where AI research can be packaged into sensational headlines and social media videos, heady philosophical questions and mind-bending experiments can easily be misconstrued. Take what happened when Anthropic published a safety report that showed Claude Opus 4 may take “harmful actions” in extreme circumstances, like blackmailing a fictional engineer to prevent it from being shut off.

Trends in patent filing for artificial intelligence-assisted medical technologies | Smart & Biggar

[co-authors: Jessica Lee, Noam Amitay and Sarah McLaughlin]

Medical technologies incorporating artificial intelligence (AI) are an emerging area of innovation with the potential to transform healthcare. Employing techniques such as machine learning, deep learning and natural language processing,1 AI enables machine-based systems that can make predictions, recommendations or decisions that influence real or virtual environments based on a given set of objectives.2 For example, AI-based medical systems can collect medical data, analyze medical data and assist in medical treatment, or provide informed recommendations or decisions.3 According to the U.S. Food and Drug Administration (FDA), some key areas in which AI is applied in medical devices include:4

- Image acquisition and processing

- Diagnosis, prognosis, and risk assessment

- Early disease detection

- Identification of new patterns in human physiology and disease progression

- Development of personalized diagnostics

- Therapeutic treatment response monitoring

Patent filing data related to these application areas can help us see emerging trends.

Analysis strategy

We identified nine subcategories of interest:

- Image acquisition and processing

  - Medical image acquisition

  - Pre-processing of medical imaging

  - Pattern recognition and classification for image-based diagnosis

- Diagnosis, prognosis and risk management

  - Early disease detection

  - Identification of new patterns in physiology and disease

  - Development of personalized diagnostics and medicine

- Therapeutic treatment response monitoring

- Clinical workflow management

- Surgical planning/implants

We searched patent filings in each subcategory from 2001 to 2023. In the results below, the number of patent filings is based on patent families, each patent family being a collection of patent documents covering the same technology that have at least one priority document in common.5
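For readers curious how counting by family differs from counting individual documents, the sketch below groups patent documents into families by shared priority documents using a small union-find structure. It is only an illustration: the function name, document identifiers, and priority numbers are hypothetical, and linking documents transitively (A shares a priority with B, B shares another with C) is an assumption about how an extended family is formed rather than a description of the search tool used for this analysis.

```python
from collections import defaultdict


def group_patent_families(priorities_by_doc):
    """Group documents that share at least one priority, directly or via a chain.

    priorities_by_doc maps a document id to the set of priority ids it claims.
    Hypothetical helper; ids below are invented.
    """
    parent = {doc: doc for doc in priorities_by_doc}

    def find(doc):
        # Follow parent links to the family representative, compressing the path.
        while parent[doc] != doc:
            parent[doc] = parent[parent[doc]]
            doc = parent[doc]
        return doc

    first_doc_for_priority = {}
    for doc, priorities in priorities_by_doc.items():
        for priority in priorities:
            if priority in first_doc_for_priority:
                # Merge this document's family with the one already using the priority.
                parent[find(doc)] = find(first_doc_for_priority[priority])
            else:
                first_doc_for_priority[priority] = doc

    families = defaultdict(set)
    for doc in priorities_by_doc:
        families[find(doc)].add(doc)
    return list(families.values())


# Invented example: four documents, three priorities, two families.
documents = {
    "US-A": {"P1"},
    "EP-B": {"P1", "P2"},
    "CN-C": {"P2"},
    "CA-D": {"P3"},
}
families = group_patent_families(documents)
```

Here four filings collapse into two families, so a family-based count would report two inventions rather than four documents, which avoids inflating the numbers for technologies filed in many jurisdictions.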

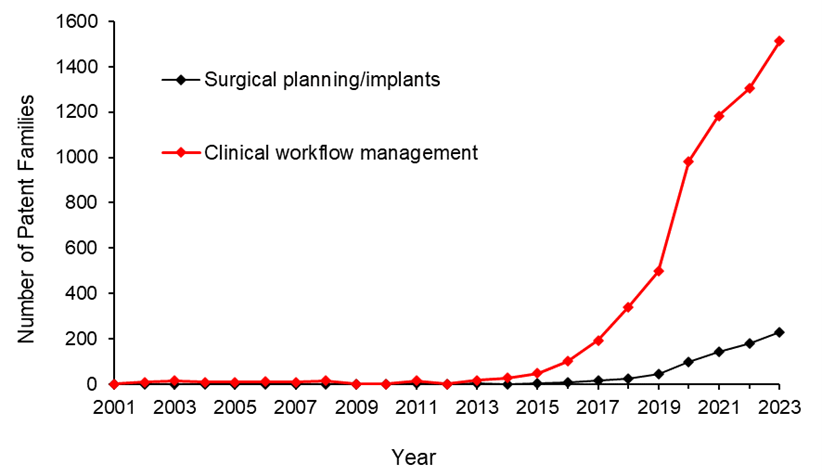

What has been filed over the years?

The number of patents filed in each subcategory of AI-assisted applications for medical technologies from 2001 to 2023 is shown below.

We see that patenting activities are concentrating in the areas of treatment response monitoring, identification of new patterns in physiology and disease, clinical workflow management, pattern recognition and classification for image-based diagnosis, and development of personalized diagnostics and medicine. This suggests that research and development efforts are focused on these areas.

What do the annual numbers tell us?

Let’s look at the annual number of patent filings for the categories and subcategories listed above. The following four graphs show the global patent filing trends over time for the categories of AI-assisted medical technologies related to: image acquisition and processing; diagnosis, prognosis and risk management; treatment response monitoring; and workflow management.

When looking at the patent filings on an annual basis, the numbers confirm the expected significant uptick in patenting activities in recent years for all categories searched. They also show that, within the four categories, the subcategories showing the fastest rate of growth were: pattern recognition and classification for image-based diagnosis, identification of new patterns in human physiology and disease, treatment response monitoring, and clinical workflow management.

Above: Global patent filing trends over time for categories of AI-assisted medical technologies related to image acquisition and processing.

Above: Global patent filing trends over time for categories of AI-assisted medical technologies related to more accurate diagnosis, prognosis and risk management.

Above: Global patent filing trends over time for AI-assisted medical technologies related to treatment response monitoring.

Above: Global patent filing trends over time for categories of AI-assisted medical technologies related to workflow management.

Where is R&D happening?

By looking at where the inventors are located, we can see where R&D activities are occurring. We found that the two most frequent inventor locations are the United States (50.3%) and China (26.2%). Both Australia and Canada are amongst the ten most frequent inventor locations, with Canada ranking seventh and Australia ranking ninth in the five subcategories that have the highest patenting activities from 2001-2023.

Where are the destination markets?

The filing destinations provide a clue as to the intended markets or locations of commercial partnerships. The United States (30.6%) and China (29.4%) again are the pace leaders. Canada is the seventh most frequent destination jurisdiction with 3.2% of patent filings. Australia is the eighth most frequent destination jurisdiction with 3.1% of patent filings.

Takeaways

Our analysis found that the leading subcategories of AI-assisted medical technology patent applications from 2001 to 2023 include treatment response monitoring, identification of new patterns in human physiology and disease, clinical workflow management, pattern recognition and classification for image-based diagnosis as well as development of personalized diagnostics and medicine.

In more recent years, we found the fastest growth in the areas of pattern recognition and classification for image-based diagnosis, identification of new patterns in human physiology and disease, treatment response monitoring, and clinical workflow management, suggesting that R&D efforts are being concentrated in these areas.

We saw that patent filings in the areas of early disease detection and surgical planning/implants increased later than the other categories, suggesting these may be emerging areas of growth.

Although, as expected, the United States and China are consistently the leading jurisdictions in both inventor location and destination patent offices, Canada and Australia are frequently in the top ten.

Patent intelligence provides powerful tools for decision makers in looking at what might be shaping our future. With recent geopolitical changes and policy updates in key primary markets, as well as shifts in trade relationships, patent filings give us insight into how these aspects impact innovation. For everyone, it provides exciting clues as to what emerging technologies may shape our lives.

References

1. Alowais et al., Revolutionizing healthcare: the role of artificial intelligence in clinical practice (2023), BMC Medical Education, 23:689.

2. U.S. Food and Drug Administration (FDA), Artificial Intelligence and Machine Learning in Software as a Medical Device.

3. Bitkina et al., Application of artificial intelligence in medical technologies: a systematic review of main trends (2023), Digital Health, 9:1-15.

4. Artificial Intelligence Program: Research on AI/ML-Based Medical Devices | FDA.

5. INPADOC extended patent family.

Researchers create AI to examine medical images like a trained radiologist

Input and expertise from radiologists can help develop better and more trustworthy artificial intelligence (AI) tools, new research shows. The study used radiologists’ eye movements to help guide AI systems to focus on the most clinically relevant areas of medical images.

When combined with other AI systems, this gaze-based guidance helps improve diagnostic performance by up to 1.5% and aligns machine behavior more closely with expert human judgment, according to the team of researchers from Cardiff University and the University Hospital of Wales (UHW).

Their findings, published in IEEE Transactions on Neural Networks and Learning Systems, could support radiologists' diagnostic decision-making and increase adoption of medical AI, helping to meet some of the challenges facing the NHS.

Dr. Richard White, a consultant radiologist at UHW and clinical lead on the study, said, “Computers are very good at identifying pathologies such as lung nodules based on their shape and texture. However, knowledge of where to look in imaging studies forms a key part of radiology training, and there are specific review areas that should always be evaluated.

“This research aims to bring these two aspects together to see if computers can evaluate chest radiographs more like a trained radiologist would. This is something that radiology AI research has previously lacked and a key step in improving trust in AI and the diagnostic capabilities of computers.”

The team created the largest and most reliable visual saliency dataset for chest X-rays to date—based on more than 100,000 eye movements from 13 radiologists examining fewer than 200 chest X-rays.

This was used to train a new AI model, CXRSalNet, to help it predict the areas in an X-ray that are most likely to be important for diagnosis.

Professor Hantao Liu, lead researcher on the study from Cardiff University’s School of Computer Science and Informatics, added, “Current AI systems lack the ability to explain how or why they arrive at a decision—something that is critical in health care.

“Meanwhile, radiologists bring years of experience and subtle perceptual skills to each image they review. Our work captures how experienced radiologists naturally focus their attention on important parts of chest X-rays. We used this eye-tracking data to ‘teach’ AI to identify important features in chest X-rays. By mimicking where radiologists look when making diagnoses in this way, we can help AI systems learn to interpret images more like a human expert would.”

Wales has a 32% shortfall in consultant radiologists and the figure for the U.K. stands at 29%, according to the 2024 census by the Royal College of Radiologists.

Meanwhile, demand for imaging is rising significantly. “There are similar problems across much of the world,” explains Dr. White. “If we can implement solutions such as this into clinical practice, it has the potential to considerably enhance radiological workflow and minimize delays in care as a consequence of reporting backlogs.”

The team plans to further develop their approach to explore how the technology can be adapted for medical education and training and clinical decision support tools, to assist radiologists in making faster and more accurate diagnoses.

“Our current focus is expanding this approach to work across other imaging modalities such as CT and MRI scans,” added Professor Liu. “In particular, we’re interested in applying this methodology to cancer detection, where early identification of subtle visual cues is critical and often challenging for both human readers and machines.”

More information: Jianxun Lou et al, Chest X-Ray Visual Saliency Modeling: Eye-Tracking Dataset and Saliency Prediction Model, IEEE Transactions on Neural Networks and Learning Systems (2025). DOI: 10.1109/TNNLS.2025.3564292

Citation: Knowing where to look: Researchers create AI to examine medical images like a trained radiologist (2025, September 4), retrieved 4 September 2025 from https://medicalxpress.com/news/2025-09-ai-medical-images-radiologist.html

