AI Insights

A robot shows that machines may one day replace human surgeons | Science

Nearly four decades ago, the Defense Advanced Research Projects Agency (DARPA) and NASA began promoting projects that would make it possible to perform surgeries remotely, whether on the battlefield or in space. Out of those initial efforts emerged robotic surgical systems like Da Vinci, which function as an extension of the surgeon, allowing them to carry out minimally invasive procedures using remote controls and 3D vision. But this still involves a human using a sophisticated tool. Now, the integration of generative artificial intelligence and machine learning into the control of systems like Da Vinci is bringing the possibility of autonomous surgical robots closer to reality.

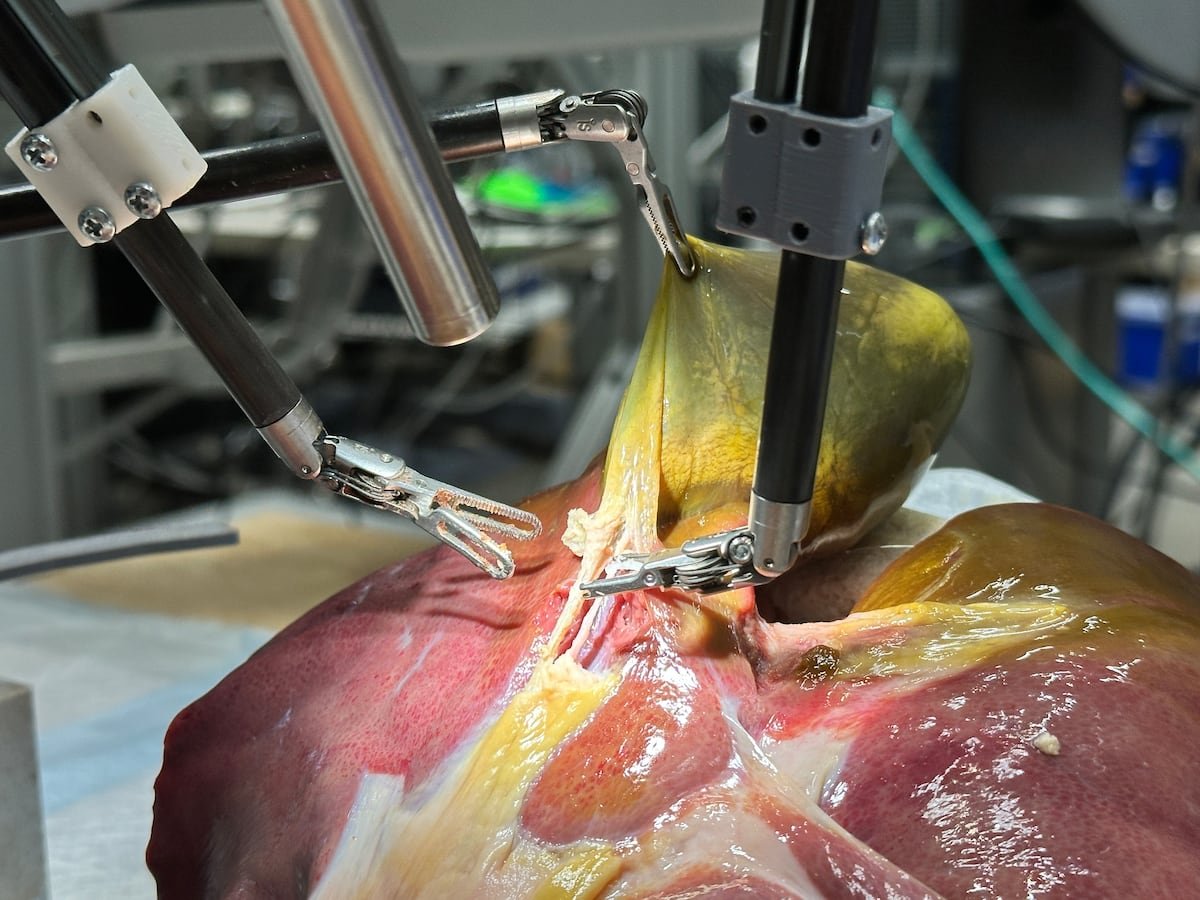

This Wednesday, the journal Science Robotics published the results of a study conducted by researchers at Johns Hopkins and Stanford universities, in which they present a system capable of autonomously performing several steps of a surgical procedure, learning from videos of humans operating and receiving commands in natural language — just like a medical resident would.

As in human training, the team of scientists has been gradually teaching the robot the steps needed to complete a surgery. Last year, the Johns Hopkins team, led by Axel Krieger, trained the robot to perform three basic surgical tasks: handling a needle, lifting tissue, and suturing. This training was done through imitation and a machine learning system similar to the one used by ChatGPT, but instead of words and text, it uses a robotic language that translates the machine’s movement angles into mathematical data.

In the new experiment, two experienced human surgeons performed demonstrations of gallbladder removal surgeries on pig tissues outside the animal. They used 34 gallbladders to collect 17 hours of data and 16,000 trajectories, which the machine used to learn. Afterward, the robots, without human intervention and with eight gallbladders they hadn’t seen before, were able to perform some of the 17 tasks required to remove the organ with 100% precision — such as identifying certain ducts and arteries, holding them precisely, strategically placing clips, and cutting with scissors. During the experiments, the model was able to correct its own mistakes and adapt to unforeseen situations.

In 2022, this same team performed the first autonomous robotic surgery on a live animal: a laparoscopy on a pig. But that robot needed the tissue to have special markers, was in a controlled environment, and followed a pre-established surgical plan. In a statement from his institution, Krieger said it was like teaching a robot to drive a carefully mapped-out route. The new experiment just presented would be — for the robot — like driving on a road it had never seen before, relying only on a general understanding of how to drive a car.

José Granell, head of the Department of Otolaryngology and Head and Neck Surgery at HLA Moncloa University Hospital and professor at the European University of Madrid, believes that the Johns Hopkins team’s work “is beginning to approach something that resembles real surgery.”

“The problem with robotic surgery on soft tissue is that biology has a lot of intrinsic variability, and even if you know the technique, in the real world there are many possible scenarios,” explains Granell. “Asking a robot to carve a bone is easy, but with soft tissue, everything is more difficult because it moves. You can’t predict how it will react when you push, how much it will move, whether an artery will tear if you pull too hard,” continues the surgeon, adding: “This technology changes the way we train the sequence of gestures that constitute surgery.”

For Krieger, this advancement takes us “from robots that can perform specific surgical tasks to robots that truly understand surgical procedures.” The team leader behind this innovation, made possible by generative AI, argues: “It’s a crucial distinction that brings us significantly closer to clinically viable autonomous surgical systems, capable of navigating the messy and unpredictable reality of real-life patient care.”

Francisco Clascá, professor of Human Anatomy and Embryology at the Autonomous University of Madrid, welcomes the study, but points out that “it’s a very simple surgery” and is performed on organs from “very young animals, which don’t have the level of deterioration and complications of a 60- or 70-year-old person, which is when this type of surgery is typically needed.” Furthermore, the robot is still much slower than a human performing the same tasks.

Mario Fernández, head of the Head and Neck Surgery department at the Gregorio Marañón General University Hospital in Madrid, finds the advance interesting, but believes that replacing human surgeons with machines “is a long way off.” He also cautions against being dazzled by technology without fully understanding its real benefits, and points out that its high cost means it won’t be accessible to everyone.

“I know a hospital in India, for example, where they have a robot and can perform two surgical sessions per month, operating on two patients. A total of 48 per year. For them, robotic surgery may be a way to practice and learn, but it’s not a reality for the patients there,” says Fernández. He believes we should appreciate technological progress, but surgery must be judged by what it actually delivers to patients. As a contrasting example, he points out that “a technique called transoral ultrasound surgery, which was developed in Madrid and is available worldwide, is performed on six patients every day.”

Krieger believes that their proof of concept shows it’s possible to perform complex surgical procedures autonomously, and that their imitation learning system can be applied to more types of surgeries — something they will continue to test with other interventions.

Looking ahead, Granell points out that, beyond overcoming technical challenges, the adoption of surgical robots will be slow because in surgery, “we are very conservative about patient safety.”

He also raises philosophical questions, such as overcoming Isaac Asimov’s First and Second Laws of Robotics: “A robot may not injure a human being or, through inaction, allow a human being to come to harm,” and “A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.” This specialist highlights the apparent contradiction in the fact that human surgeons “do cause harm, but in pursuit of the patient’s benefit; and this is a dilemma that [for a robot] will have to be resolved.”



AI chatbots and mental health: How to cover the topic responsibly

Artificial intelligence-powered chatbots can provide round-the-clock access to supportive “conversations,” which some people are using as a substitute for interactions with licensed mental health clinicians or friends. But users may develop dependencies on the tools and mistake these transactions for real relationships with people or true therapy. Recent news stories have discussed the dangers of chatbots’ fabricated, supportive nature. In some incidents, people developed AI-related psychosis or were supported in their plans to commit suicide.

What is it about this technology that sucks people in? Who is at risk? How can you report on these conditions sensitively? In this webinar, hear from moderator Karen Blum and an expert panel that includes psychiatrists John Torous, M.D. (Beth Israel Deaconess Medical Center), and Keith Sakata, M.D. (UC San Francisco), along with Mashable Senior Reporter Rebecca Ruiz.

Karen Blum

AHCJ Health Beat Leader for Health IT

Karen Blum is AHCJ’s health beat leader for health IT. She’s an independent health and science journalist, based in the Baltimore area. She has written for publications such as the Baltimore Sun, Pharmacy Practice News, Clinical Oncology News, Clinical Laboratory News, Cancer Today, CURE, AARP.org, General Surgery News and Infectious Disease Special Edition; covered numerous medical conferences for trade magazines and news services; and written many profiles and articles on medical and science research as well as trends in health care and health IT. She is a member of the American Society of Journalists and Authors (ASJA) and chairs its Virtual Education Committee; and a member of the National Association of Science Writers (NASW) and its freelance committee.

Rebecca Ruiz

Senior reporter, Mashable

Rebecca Ruiz is a Senior Reporter at Mashable. She frequently covers mental health, digital culture, and technology. Her areas of expertise include suicide prevention, screen use and mental health, parenting, youth well-being, and meditation and mindfulness. Rebecca’s experience prior to Mashable includes working as a staff writer, reporter, and editor at NBC News Digital and as a staff writer at Forbes. Rebecca has a B.A. from Sarah Lawrence College and a master’s degree from UC Berkeley’s Graduate School of Journalism.

Keith Sakata, M.D.

Psychiatry resident, UC San Francisco

Keith Sakata, M.D., is a psychiatry resident at the University of California, San Francisco, where he founded the Mental Health Innovation and Digital Hub (MINDHub) to advance AI-enabled care delivery. He provides treatment and psychotherapy across outpatient and specialty clinics, with a focus on dual diagnosis, PTSD, OCD, pain, and addiction.

Dr. Sakata previously trained in internal medicine at Stanford Health Care and co-founded Skript, a diagnostic training platform adopted by UCSF and Stanford that improved medical education outcomes during the COVID-19 pandemic. He currently serves as Clinical Lead at Sunflower, an addiction recovery startup. He also advises startups working to improve access to mental health care, including Two Chairs and Circuit Breaker Labs, which is providing a safety layer for AI tools in mental health care.

His professional interests bridge psychiatry, neuroscience, and digital innovation. Dr. Sakata holds a B.S. in Neurobiology from UC Irvine and earned his M.D. from UCSF.

John Torous, M.D., MBI

Director, Digital Psychiatry, Beth Israel Deaconess Medical Center

John Torous, M.D., MBI, is director of the digital psychiatry division in the Department of Psychiatry at Beth Israel Deaconess Medical Center (BIDMC), a Harvard Medical School-affiliated teaching hospital, where he also serves as a staff psychiatrist and associate professor. He has a background in electrical engineering and computer sciences and received an undergraduate degree in the field from UC Berkeley before attending medical school at UC San Diego. He completed his psychiatry residency, fellowship in clinical informatics and master’s degree in biomedical informatics at Harvard.

Torous is active in investigating the potential of mobile mental health technologies for psychiatry and his team supports mindapps.org as the largest database of mental health apps, the mindLAMP technology platform for scalable digital phenotyping and intervention, and the Digital Navigator program to promote digital equity and access. Torous has published over 300 peer-reviewed articles and five book chapters on the topic. He directs the Digital Psychiatry Clinic at BIDMC, which seeks to improve access to and quality of mental health care through augmenting treatment with digital innovations.

Torous serves as editor-in-chief for the journal JMIR Mental Health, web editor for JAMA Psychiatry, and a member of various American Psychiatric Association committees.


AI’s Baby Bonus? | American Enterprise Institute

It seems humanity is running out of children faster than expected. Fertility rates are collapsing around the world, often decades ahead of United Nations projections. Turkey’s fell to 1.48 last year—a level the UN thought would not arrive until 2100—while Bogotá’s is now below Tokyo’s. Even India, once assumed to prop up global demographics, has dipped under replacement. According to a new piece in The Economist, the world’s population, once projected to crest at 10.3 billion in 2084, may instead peak in the 2050s below nine billion before declining. (Among those experts mentioned, by the way, is Jesús Fernández-Villaverde, an economist at the University of Pennsylvania and visiting AEI scholar.)

From “Humanity will shrink, far sooner than you think” in the most recent issue: “At that point, the world’s population will start to shrink, something it has not done since the 14th century, when the Black Death wiped out perhaps a fifth of humanity.”

This demographic crunch has defied policymaker efforts. Child allowances, flexible work schemes, and subsidized daycare have barely budged birth rates. For its part, the UN continues to assume fertility will stabilize or rebound. But a demographer quoted by the magazine calls that “wishful thinking,” and the opinion is hardly an outlier.

See if you find the UN assumption persuasive:

It is indeed possible to imagine that fertility might recover in some countries. It has done so before, rising in the early 2000s in the United States and much of northern Europe as women who had delayed having children got round to it. But it is far from clear that the world is destined to follow this example, and anyway, birth rates in most of the places that seemed fecund are declining again. They have fallen by a fifth in Nordic countries since 2010.

John Wilmoth of the United Nations Population Division explains one rationale for the idea that fertility rates will rebound: “an expectation of continuing social progress towards gender equality and women’s empowerment”. If the harm to women’s careers and finances that comes from having children were erased, fertility might rise. But the record of women’s empowerment thus far around the world is that it leads to lower fertility rates. It is not “an air-tight case”, concedes Mr Wilmoth.

Against this bleak backdrop, technology may be the only credible source of hope. Zoom boss Eric Yuan recently joined Bill Gates, Nvidia’s Jensen Huang, and JPMorgan’s Jamie Dimon in predicting shorter workweeks as advances in artificial intelligence boost worker productivity. The optimistic scenario goes like this: As digital assistants and code-writing bots shoulder more of the office load, employees reclaim hours for home life. Robot nannies and AI tutors lighten the costs and stresses of parenting, especially for dual-income households.

History hints at what could follow. Before the Industrial Revolution, wealth and fertility went hand-in-hand. That relationship flipped when economies modernized. Education became compulsory, child labor fell out of favor, and middle- and upper-class families invested heavily in fewer children’s education and well-being.

But today, wealthier Americans are having more children, treating them as the ultimate luxury good. As AI-driven abundance spreads more broadly, perhaps resulting in the shorter workweeks those CEOs are talking about, larger families may once again be considered an attainable aspiration for regular folks rather than an elite indulgence. (Fingers crossed, given this recent analysis from JPM: “The vast sums being spent on AI suggest that investors believe these productivity gains will ultimately materialize, but we suspect many of them have not yet done so.”)

Indeed, even a modest “baby bonus” from technology would be profound. Governments are running out of levers to pull, dials to turn, and buttons to press. AI-powered productivity may not just be the best bet for growth, it could be the only realistic chance of nudging humanity away from demographic decline. This is something for governments to think hard about when deciding how to regulate this fast-evolving technology.
