AI Insights

A practical framework for appropriate implementation and review of artificial intelligence (FAIR-AI) in healthcare

Best practices and key considerations—narrative review

As a first step to inform the construct of FAIR-AI, we conducted a narrative review to identify the best practices and key considerations related to responsibly deploying AI in healthcare; these are summarized in Table 1. The results are organized into several themes, including validation, usefulness, transparency, and equity.

Numerous publications and guidelines such as TRIPOD and TRIPOD-AI have described the reporting necessary to properly evaluate a risk prediction model, regardless of the underlying statistical or machine learning method12,13. An important consideration in model validation is careful selection of performance metrics14. Beyond discrimination metrics like AUC, it is important to assess other aspects of model performance, such as calibration and the F-score, which is particularly useful in settings with imbalanced data. For models that produce a continuous risk estimate, probability decision thresholds can be adjusted to maximize classification measures such as positive predictive value (PPV) depending on the specific clinical scenario. Decision Curve Analysis can help evaluate the tradeoff between true positives and false positives to determine whether a model offers practical value at a given clinical threshold15. For regression problems, besides Mean Squared Error (MSE), other metrics such as Mean Absolute Error (MAE) and Mean Absolute Percentage Error (MAPE) can also be examined16. It is important to establish a model’s real-world applicability through dedicated validation studies17,18. The strength of evidence supporting validation and minimum performance standards should align with the intended use case, its potential risks, and the likelihood of performance variability once deployed based on the analytic approach or data sources (Supplementary Fig 1)14,17,18. Applying these traditional standards to evaluate the validity of generative AI models is uniquely challenging and frequently not possible. While the literature in this area is nascent, evaluation should still be performed and may require qualitative metrics such as user feedback and expert reviews, which can provide insights into performance, risks, and usefulness19,20.
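To make the metrics named above concrete, the following is a minimal Python sketch of how PPV and the F-score change with the decision threshold, and how MAE and MAPE are computed for a regression output. The function names and the toy data are illustrative assumptions and are not part of FAIR-AI; a real validation would use an established statistics library and a properly sampled cohort.

```python
def confusion_counts(y_true, y_prob, threshold):
    """Count TP, FP, FN, TN when predicting positive for y_prob >= threshold."""
    tp = fp = fn = tn = 0
    for y, p in zip(y_true, y_prob):
        predicted_positive = p >= threshold
        if predicted_positive and y == 1:
            tp += 1
        elif predicted_positive and y == 0:
            fp += 1
        elif not predicted_positive and y == 1:
            fn += 1
        else:
            tn += 1
    return tp, fp, fn, tn


def ppv(tp, fp):
    """Positive predictive value: share of positive predictions that are correct."""
    return tp / (tp + fp) if (tp + fp) else float("nan")


def f1_score(tp, fp, fn):
    """F-score (F1): harmonic mean of precision and recall."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else float("nan")


def mae(y_true, y_pred):
    """Mean Absolute Error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def mape(y_true, y_pred):
    """Mean Absolute Percentage Error; undefined if any true value is zero."""
    if any(t == 0 for t in y_true):
        raise ValueError("MAPE is undefined when a true value is zero")
    return 100 * sum(abs((t - p) / t) for t, p in zip(y_true, y_pred)) / len(y_true)


# Toy data (hypothetical): observed outcomes and predicted risk probabilities.
y_true = [1, 0, 1, 1, 0, 0, 0, 1]
y_prob = [0.9, 0.8, 0.7, 0.4, 0.3, 0.2, 0.6, 0.1]

for threshold in (0.5, 0.65):
    tp, fp, fn, tn = confusion_counts(y_true, y_prob, threshold)
    print(threshold, ppv(tp, fp), f1_score(tp, fp, fn))
```

In this toy example, raising the threshold removes one false positive, so PPV rises while the number of detected cases stays the same; on real data the tradeoff is rarely this favorable, which is why the threshold should follow the clinical scenario.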

Deploying and maintaining AI solutions in healthcare requires significant resources and carries the potential for both risk and benefits, making it essential to evaluate whether a tool delivers actual usefulness, or a net benefit, to the organization, clinical team, and patients21,22. Decision analyses can quantify the expected value of medical decisions, but they often require detailed cost estimates and complex modeling. Formal net benefit calculations simplify this process by integrating the relative value of benefits versus harms into a single metric18,23. However, a lack of objective data, the specific context, or the nature of the solution may render these calculations impractical. In these cases, net benefit provides a construct to guide qualitative discussions among subject matter experts, helping to weigh benefits and risks while considering workflows that mitigate risks. Additionally, a thorough assessment of clinical utility may require an impact study to evaluate a solution’s effects on factors such as resource utilization, time savings, ease of use, workflow integration, end-user perception, alert characteristics (e.g., mode, timing, and targets), and unintended consequences9,22,24.
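As an illustration of a formal net benefit calculation, the sketch below uses one widely used formulation from the decision curve analysis literature, in which false positives are weighted by the odds of the chosen risk threshold. The function names and the example counts are hypothetical, and the text above does not state which formulation the authors prefer.

```python
def net_benefit(tp, fp, n, threshold):
    """Net benefit of acting on model-positive patients at a risk threshold.

    Uses NB = TP/n - (FP/n) * (pt / (1 - pt)), where pt is the threshold
    probability at which a clinician would choose to act.
    """
    if not 0 < threshold < 1:
        raise ValueError("threshold must be strictly between 0 and 1")
    return tp / n - (fp / n) * (threshold / (1 - threshold))


def net_benefit_treat_all(prevalence, threshold):
    """Reference strategy: act on every patient regardless of the model."""
    if not 0 < threshold < 1:
        raise ValueError("threshold must be strictly between 0 and 1")
    return prevalence - (1 - prevalence) * (threshold / (1 - threshold))


# Hypothetical cohort of 1000 patients with 4% event prevalence.
model_nb = net_benefit(tp=30, fp=50, n=1000, threshold=0.2)
treat_all_nb = net_benefit_treat_all(prevalence=0.04, threshold=0.2)
print(model_nb, treat_all_nb)
```

A model is typically considered useful at a given threshold only if its net benefit exceeds both the treat-all strategy and the treat-none strategy, whose net benefit is zero by definition.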

Given the potential for ethical and equity risks when deploying AI solutions in healthcare, transparency should be present to the degree that it is possible across all levels of the design, development, evaluation, and implementation of AI solutions to ensure fairness and accountability (https://nvlpubs.nist.gov/nistpubs/ai/nist.ai.100-1.pdf; http://data.europa.eu/eli/reg/2024/1689/oj)25,26. Specifically, because AI can perpetuate biases that could result in over- or under-treatment of certain populations, there must be a clear and defensible justification for including predictor variables that have historically been associated with discrimination, such as those outlined in the PROGRESS-Plus framework: place of residence, race/ethnicity/culture/language, occupation, gender/sex, religion, education, socioeconomic status, social capital, and personal characteristics linked to discrimination (e.g., age, disability, sexual orientation)21,27,28,29. This is particularly important when these variables may act as proxies for other, more meaningful determinants of health. It is equally important to evaluate for patterns of algorithmic bias by monitoring outcomes for discordance between patient subgroups, as well as ensuring equal access to the AI solution itself when applicable10,25,30,31. Once an AI solution is implemented, transparency for end-users becomes a critical element for building trust and confidence, as well as empowering users to play a role in vigilance for potential unintended consequences. To achieve this post-implementation transparency, end-users should have information readily available that explains an AI solution’s intended use, limitations, and potential risks (https://www.fda.gov/medical-devices/software-medical-device-samd/transparency-machine-learning-enabled-medical-devices-guiding-principles)32. Transparency is also critical from the patient’s perspective. There is an ethical imperative to notify patients when AI is being used and, when appropriate, to obtain their consent, particularly in sensitive or high-stakes situations33,34. This obligation is heightened when there is no human oversight, when the technology is experimental, or when the use of AI is not readily apparent. Failing to disclose the use of AI in such contexts may undermine patient autonomy and erode trust in the healthcare system. Generative AI presents unique challenges in terms of transparency. For example, deep learning relies on vast numbers of parameters drawn from increasingly large datasets and may be inherently unexplainable. When transparency is lacking, there should be a greater emphasis on human oversight and education on limitations and risks, and this is an area of ongoing research20.
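The recommendation above to monitor outcomes for discordance between patient subgroups could be sketched as follows. This is only one simple way to surface a gap; the record fields (`group`, `flagged`), the choice of rate, and the `max_gap` tolerance are all assumptions made for illustration, and the appropriate fairness measure depends on the use case.

```python
from collections import defaultdict


def rate_by_subgroup(records, outcome_key):
    """Return the share of records with a truthy outcome, per subgroup."""
    totals = defaultdict(int)
    hits = defaultdict(int)
    for record in records:
        totals[record["group"]] += 1
        if record[outcome_key]:
            hits[record["group"]] += 1
    return {group: hits[group] / totals[group] for group in totals}


def flag_discordance(rates, max_gap):
    """Flag when the largest between-group difference exceeds max_gap."""
    gap = max(rates.values()) - min(rates.values())
    return gap > max_gap, gap


# Hypothetical monitoring extract: whether the AI solution flagged each patient.
records = [
    {"group": "A", "flagged": True},
    {"group": "A", "flagged": True},
    {"group": "A", "flagged": False},
    {"group": "A", "flagged": False},
    {"group": "B", "flagged": True},
    {"group": "B", "flagged": False},
    {"group": "B", "flagged": False},
    {"group": "B", "flagged": False},
]

rates = rate_by_subgroup(records, "flagged")
needs_review, gap = flag_discordance(rates, max_gap=0.1)
print(rates, needs_review, gap)
```

A flagged gap is a prompt for expert review rather than proof of bias, since differences in underlying prevalence between subgroups can also produce different rates.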

Stakeholder needs and priorities—interviews

Several systematic reviews emphasize the importance of stakeholder engagement in the design and implementation of AI solutions in healthcare; however, this aspect is often overlooked in the existing frameworks35,36. To create a practical and useful framework for health systems, we borrowed from user-centric design principles to first assess stakeholders’ priorities for an AI framework and their criteria for evaluating its successful implementation. We interviewed stakeholders including health system leaders, AI developers, providers, and patients. Our findings were previously presented at the 17th Annual Conference on the Science of Dissemination and Implementation, hosted by AcademyHealth37.

The stakeholders expressed multiple priorities for an AI framework, particularly the need for: (1) risk tolerance assessments to weigh the potential patient harms of an AI solution against expected benefits, (2) a human in the loop for any medical decisions made using an AI solution, (3) consideration that available, rigorous evidence may be limited when reviewing new AI solutions, and (4) awareness that solutions may not have been developed on diverse patient populations or data similar to the population in which a use case is proposed. Interviewees also highlighted the importance of ensuring that AI solutions are matched to institutional priorities and conform to all relevant regulations. They noted that regulations can pose unique challenges for large, multi-state health systems. While patient safety and outcomes were identified as paramount, stakeholders also detailed the need for an AI framework to evaluate the impact of potential solutions on health system employees.

When evaluating the successful implementation and utilization of an AI framework, stakeholders were consistent in explaining that the review process must operate in a timely manner, provide clear guidelines for AI developers, and ensure fair and consistent review processes that are applicable for both internally and externally developed solutions. Multiple interviewees cited the challenges presented by the rapid pace of AI innovation, expressing concerns that an overly bureaucratic and time-consuming review process could hinder the health system’s ability to keep pace with the wider healthcare market. Similarly, multiple senior leaders and AI developers explained that a successful AI framework would both encourage internal innovation and streamline the implementation of AI solutions in a safe manner.

Framework for the appropriate implementation and review of AI (FAIR-AI) in healthcare

Findings from stakeholder interviews informed our design workshop efforts, which included health system leaders and experts in AI, with workshop participants providing explicit guidance on how best to construct FAIR-AI to meaningfully integrate stakeholder feedback. The project team leveraged design workshop activities and participant expertise to develop a set of requirements for health systems seeking to implement AI responsibly. FAIR-AI provides a detailed outline of: (i) foundational health system requirements (artifacts, personnel, processes, and tools); (ii) inclusion and exclusion criteria that specifically detail which AI solutions ought to be evaluated by FAIR-AI, thus defining scope and ensuring accountability; (iii) review questions in the form of a low-risk screening checklist and an in-depth review that provides a comprehensive evaluation of risk and benefits across the areas of development, validation, performance, ethics and equity, usefulness, compliance and regulations; (iv) discrete risk categories that map to the review criteria and are assigned to each AI solution and its intended use case; (v) safe implementation plans including monitoring and transparency requirements; and (vi) an AI Label that consolidates information in an understandable format. These core components of FAIR-AI are also displayed in Fig. 1.

FAIR-AI aims for safe innovation and responsible deployment of AI solutions in healthcare. This is achieved through a process centered around (1) a comprehensive review organized by risk domains; (2) categorization of an AI solution into low, moderate, or high risk; and (3) a Safe AI Plan consisting of monitoring and end-user transparency requirements.

Implementing a responsible AI framework requires that health systems have certain foundational elements in place: (i) artifacts, including a set of guiding principles for AI implementation and an AI ethics statement (examples are shown in Supplementary Table 1), both of which should be endorsed at the highest level of the organization; (ii) personnel, including an individual (or a team) with data science training who is accountable for reviews; (iii) a process for escalation to an institutional decision-making body with the multidisciplinary expertise needed to assess ethical, legal, technical, operational, and clinical implications, with the authority to act; and (iv) an inventory tool that serves as a single-source-of-truth catalog and enables accountability for review, monitoring, and transparency requirements. It is important to establish that the AI evaluation framework does not replace but rather supports existing governance structures. Additionally, while the overarching structure of an AI governance framework like FAIR-AI may remain consistent over time, the rapid pace of change in technology and regulations requires a process for regular review and updating by subject matter experts.

As the first step in FAIR-AI, an AI solution needs to go through an intake process. Individual leaders who are responsible for the deployment of AI solutions within the enterprise are designated as business owners; for clinical solutions, the business owner is a clinical leader. In this framework, we require the business owner of an AI solution to provide a set of descriptive items through an intake form including: (i) existing problem to solve; (ii) clearly outlined intended use case; (iii) expected benefits; (iv) risks including worst-case scenario(s); (v) published and unpublished information on development, validation, and performance; and (vi) FDA approvals, if applicable.

Next, we describe the inclusion and exclusion criteria that determine which AI solutions fall within the scope of FAIR-AI. Based on the premise that enterprise risk management must cast a wide net to be aware of potential risks, inclusion for FAIR-AI review starts with a broad, general definition of AI solutions, which intentionally also includes solutions that do not directly relate to clinical care. We adopted the definition of AI from Matheny et al. as “computer system(s) capable of activities normally associated with human cognitive effort”38. We then provide additional scope specificity by excluding three general areas of AI: first, simple scoring systems and rules-based tools for which an end-user can reasonably be expected to evaluate and take responsibility for performance; second, any physical medical device that also incorporates AI into its function, as there are well-established FDA regulations in place to evaluate and monitor risks associated with these devices (https://www.fda.gov/medical-devices/classify-your-medical-device/how-determine-if-your-product-medical-device); and third, any AI solution being considered under an Institutional Review Board (IRB)-approved research protocol that includes informed consent for the use of AI when human subjects are involved. Inclusion and exclusion criteria like these will need to be adapted to a health system’s local context.

Risk evaluation considers the magnitude and importance of adverse consequences from a decision; in the case of FAIR-AI, that decision is whether to implement a new AI solution39. As there are numerous approaches and nomenclatures to define risk, local consensus on a clear definition is a critical initial step for a health system. We aimed for simplicity in our risk definition and the number of risk categories to ensure interpretability by diverse stakeholders. Additionally, we opted to pursue a qualitative determination of risk and avoid a purely quantitative, composite risk score approach. The requisite data rarely exist to perform such risk calculations reliably, and composites of weighted scores have the potential to dilute important individual risk factors as well as the nuance of risk mitigation offered by the workflows surrounding AI solutions (for example, requiring a human review of AI output before an action is taken). Thus, FAIR-AI determines the magnitude and importance of potential adverse effects through consensus between subject matter experts from a data science team, the business leader requesting the AI solution, and ad hoc consultation when additional expertise is needed. In this exercise, the group leverages published data and expert opinion to outline hypothetical worst-case scenarios and the harms that could occur as an indirect or direct result of output from the proposed AI solution. The consensus determines whether those harms are minor or not minor and, if not minor, whether they are sufficiently mitigated by the related implementation workflow and monitoring plan. This risk framework is similar to that proposed by the International Medical Device Regulators Forum (https://www.imdrf.org/documents/software-medical-device-possible-framework-risk-categorization-and-corresponding-considerations). It is important to note here that every AI solution should be reviewed within the context of its intended use case, which includes the surrounding implementation workflows.

As prioritized by our stakeholders, a responsible AI framework should be nimble enough to allow quick but thorough reviews of AI solutions that have a low chance of causing any harm to an individual or the organization. To that end, FAIR-AI incorporates a two-step process: an initial low-risk screening pathway and a subsequent in-depth review pathway for all solutions that do not pass through the low-risk screen. For an AI solution to be designated low risk, it must pass all the low-risk screening questions (Table 2). Should answers to any of the screening questions suggest potential risks, the AI solution moves on to an in-depth review guided by the questions presented in Table 3. The in-depth review involves closer scrutiny of the AI solution by the data scientist and business owner and mandates a higher burden of proof that the potential benefits of the solution outweigh the potential risks identified during the screening process. If any of the in-depth review questions results in a determination of high risk, then the solution is considered high risk. It is also possible that the discussion between the data scientist and business owner will lead to a better understanding of the solution that results in a change to the answers to one or more of the low-risk screening questions, resulting in a low-risk designation.

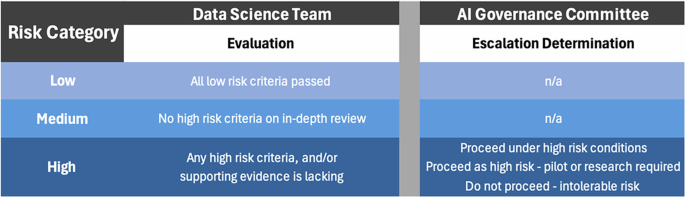

After the FAIR-AI review, which is described in detail in the next section, each AI solution is designated as low, moderate, or high risk according to the following definitions (Fig. 2):

- Low risk: Potential adverse effects are expected to be minor and should be apparent to the end-user and business owner. No ethical, equity, compliance, or regulatory concerns were identified during a low-risk screen.

- Moderate risk: Based on an in-depth review, one or more of the following are present: (1) potential adverse effects are not minor but are adequately addressed by workflows; (2) ethical, equity, compliance, or regulatory issues are suspected or present, but are appropriately mitigated.

- High risk: Based on an in-depth review, one or more of the following are present: (1) potential adverse effects are notable and could have a significant negative impact on patients, teammates, individuals, or the enterprise; (2) ethical, equity, compliance, or regulatory issues are suspected or present, but not adequately addressed; (3) insufficient evidence exists to recommend proceeding with implementation.

This figure outlines the risk categorization process in FAIR-AI. The Data Science Team uses Low-risk Screening Questions and In-depth Review Questions to categorize the risk level of an AI solution. If given a high risk designation, the AI solution is escalated to the AI Governance Committee to determine whether deployment can proceed.

For our health system, all AI solutions designated as high risk are escalated to the AI Governance Committee, where they undergo a multidisciplinary discussion. The discussion results in one of three final designations: (i) proceed to implementation under high-risk conditions; (ii) proceed to a pilot or research study; or (iii) do not proceed because implementation would create an intolerable risk for the organization.
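The two-step review and escalation logic described above can be summarized in a short Python sketch. It assumes each screening and in-depth answer has already been reduced to a boolean by the reviewers' qualitative consensus; the question keys are hypothetical placeholders, since the actual questions live in Tables 2 and 3, and the sketch does not replace the expert judgment the framework relies on.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"
    MODERATE = "moderate"
    HIGH = "high"


def categorize(screen_passed, in_depth_high_risk):
    """Assign a risk category from reviewer answers.

    screen_passed: maps each low-risk screening question to True if passed.
    in_depth_high_risk: maps each in-depth review question to True if the
        reviewers' consensus on that question is a high-risk determination.
    """
    # Step 1: a solution is low risk only if it passes every screening question.
    if all(screen_passed.values()):
        return Risk.LOW
    # Step 2: any high-risk determination in the in-depth review makes it high risk.
    if any(in_depth_high_risk.values()):
        return Risk.HIGH
    # Otherwise the identified risks are considered adequately mitigated.
    return Risk.MODERATE


def needs_governance_escalation(risk):
    """High-risk solutions go to the AI Governance Committee."""
    return risk is Risk.HIGH


# Hypothetical answers for one proposed solution.
screen = {"minor_adverse_effects": False, "no_equity_concerns": True}
in_depth = {"harms_unmitigated": False, "insufficient_evidence": False}

risk = categorize(screen, in_depth)
print(risk.value, needs_governance_escalation(risk))
```

If discussion during the in-depth review changes a screening answer, the same function can simply be rerun with the updated answers, which mirrors the path back to a low-risk designation.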

The FAIR-AI framework is designed to encompass the full range of AI solutions in healthcare, including many that will not require in-depth review and can be designated low risk—such as those supporting back-office functions, cybersecurity, or administrative automation. Examples of moderate-risk AI tools in healthcare include solutions that support—but do not replace—clinical or administrative decision-making. These tools may influence patient care or documentation, but their outputs are generally explainable, subject to human review, and integrated into existing workflows that help mitigate risk. Examples of high-risk AI tools in healthcare include those that directly influence clinical care, diagnostics, or billing—particularly when used without consistent human oversight. They may also be deployed in sensitive contexts, such as end-of-life care or other high-stakes medical decisions. These tools often rely on complex, opaque models that can perpetuate bias, affect decision-making, and lead to significant downstream consequences if not rigorously validated and continuously monitored.

After application of the low-risk screening questions, the in-depth review questions (if necessary), and completion of the AI Governance Committee review (if necessary), the proposed solution is assigned a final risk category, and a FAIR-AI Summary Statement is completed (an example is presented in Supplementary Box 1). At this point, an AI solution may need to go through other traditional governance requirements, such as a cybersecurity review and financial approvals. If the AI solution is ultimately designated to move forward with implementation, then the data science team and business owners collaboratively develop a Safe AI Plan as outlined below.

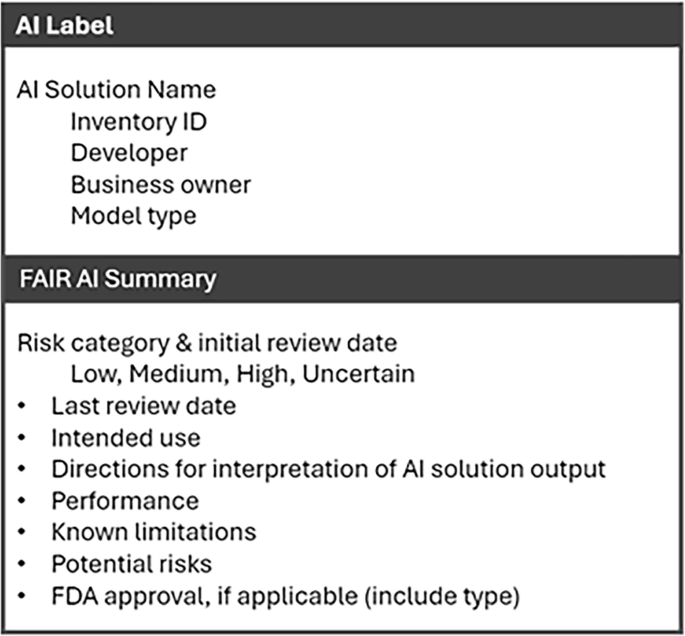

The first component of the Safe AI Plan concerns monitoring requirements. Implemented AI solutions need continuous monitoring as they may fail to adapt to new data or practice changes, which can lead to inaccurate results and increasing bias over time40,41. Similarly, when AI solutions are made readily available in workflows, it becomes easier for the solution to be used outside of its approved intended use case, which may change its inherent risk profile. For these reasons, FAIR-AI requires a monitoring plan for every deployed AI solution consisting of an attestation by the business owner at regular intervals. The attestation affirms that: (i) the deployment is still aligned with the approved use case; (ii) the underlying data and related workflows have not substantially changed; (iii) the AI solution is delivering the expected benefit(s); (iv) no unforeseen risks have been identified; and (v) there are no concerns noted related to new regulations. If the original FAIR-AI review identified specific risks, then the attestation also includes an approach to evaluate each risk along with metrics (if applicable). These evaluation metrics may range from repeating a standard model performance evaluation to obtaining periodic end-user feedback on accuracy (e.g., for a generative AI solution). The second component of the Safe AI Plan is transparency requirements. All solutions categorized as high risk also require an AI Label (Fig. 3) and end-user education at regular intervals. In situations where an end-user may not be aware that they are interacting with AI instead of a human, the business owner must also design implementation workflows that create transparency for the end-user (e.g., an alert, disclaimer, or consent as applicable).
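As a rough illustration of how the five attestation items above might be recorded in an inventory tool, the sketch below models them as a small Python data structure. The field names are paraphrases chosen for this example, and what happens when an item cannot be affirmed is an assumption here, since the text does not prescribe a specific follow-up.

```python
from dataclasses import dataclass, fields


@dataclass
class Attestation:
    """Periodic business-owner attestation for one deployed AI solution."""
    aligned_with_approved_use_case: bool
    data_and_workflows_unchanged: bool
    delivering_expected_benefit: bool
    no_unforeseen_risks: bool
    no_new_regulatory_concerns: bool


def items_needing_follow_up(attestation):
    """List the attestation items that the business owner could not affirm."""
    return [f.name for f in fields(attestation) if not getattr(attestation, f.name)]


# Hypothetical attestation in which the use case has drifted.
attestation = Attestation(
    aligned_with_approved_use_case=False,
    data_and_workflows_unchanged=True,
    delivering_expected_benefit=True,
    no_unforeseen_risks=True,
    no_new_regulatory_concerns=True,
)

print(items_needing_follow_up(attestation))
```

An empty list would indicate that all five items were affirmed; any other result could trigger a renewed review by the data science team.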

Albania Turns to Artificial Intelligence in EU-Pressured Reforms

TL;DR

- Albania introduces Diella, an AI “minister,” to oversee public procurement amid EU pressure for anti-corruption reforms.

- Prime Minister Edi Rama says Diella will make tenders faster, more efficient, and corruption-free.

- Supporters see the AI as a step toward EU integration; critics dismiss it as unconstitutional and symbolic.

- Global examples show AI can help fight corruption but also risks bias and ineffective outcomes.

Albania has taken an unprecedented step in its long fight against corruption, introducing Diella, an artificial intelligence system tasked with overseeing public procurement.

Prime Minister Edi Rama unveiled the virtual minister as part of reforms tied to the nation’s bid for European Union membership.

Although not legally a minister under Albanian law, which requires cabinet members to be human citizens, Diella is being presented as the country’s first fully AI-powered figure in government. Her mission is clear: to bring transparency, efficiency, and accountability to one of Albania’s most corruption-prone areas.

Diella’s role in public procurement

Diella is no stranger to Albanian citizens. She first appeared as a virtual assistant on the government’s e-Albania platform, helping more than a million people navigate bureaucratic processes such as applying for official documents. Now, her responsibilities have expanded dramatically.

Rama explained that Diella’s core task will be supervising public tenders. “We want to ensure a system where public procurement is 100% free of corruption,” he said.

By automating oversight and decision-making, Diella is expected to limit human interference in sensitive processes, while also making procurement faster and more transparent.

To develop this AI system, Albania is collaborating with both local and international experts, hoping to set a global precedent for AI governance.

Mixed reactions at home and abroad

The announcement has stirred heated debate within Albania and beyond. Supporters hail the move as a chance to rebuild public trust, especially as the country faces mounting EU pressure to eliminate systemic graft.

Dr. Andi Hoxhaj of King’s College London notes that the EU has made anti-corruption reforms a central condition for accession. “There’s a lot at stake,” he said, suggesting that Diella could serve as a tool to accelerate reforms.

However, critics see the initiative as political theatre. Opposition leaders argue that branding Diella a “minister” is unconstitutional and distracts from deeper structural issues. Some worry that AI cannot fully address entrenched human networks of influence, while others raise concerns about accountability if an algorithm makes a faulty decision.

Lessons from global experiments with AI governance

Albania’s experiment comes amid a wave of governments testing artificial intelligence in public administration. Brazil’s Alice bot has reduced fraud-related financial losses by nearly 30% in procurement audits, while its Rosie bot, which monitored parliamentary expenditures, faced limitations in producing actionable evidence.

In Europe, the Digiwhist project has shown how big data can expose procurement fraud across dozens of jurisdictions. Yet, the Netherlands’ failed attempt at AI-led welfare fraud detection, widely criticized for algorithmic bias, highlights the risks of misuse.

These examples underscore both the potential and pitfalls of AI in governance. Albania now finds itself at a critical juncture: if implemented responsibly, Diella could strengthen transparency and accelerate EU integration.

Looking ahead

Prime Minister Rama acknowledges the symbolic dimension of Diella’s appointment but insists that serious intent lies beneath the theatrics. Beyond tackling procurement fraud, he believes the AI minister will put pressure on human officials to rethink outdated practices and embrace innovation.

“Ministers should take note,” Rama said with a smile. “AI could be coming for their jobs, too.”

As Albania balances hope, skepticism, and the weight of EU expectations, Diella’s debut represents both a technological leap and a political gamble. Whether she becomes a catalyst for real reform or remains a publicity stunt will depend on execution and public trust.

UW lab spinoff focused on AI-enabled protein design cancer treatments

A Seattle startup has inked a deal with Eli Lilly to develop AI-powered cancer treatments. The team at Lila Biologics says it is pioneering the translation of AI-designed proteins for therapeutic applications. Anindya Roy is the company’s co-founder and chief scientist. He told KUOW’s Paige Browning about the company’s work.

This interview has been edited for clarity.

Paige Browning: Tell us about Lila Biologics. You spun out of UW Professor David Baker’s protein design lab. What’s Lila’s origin story?

Anindya Roy: I moved to David Baker’s group as a postdoctoral scientist, where I was working on some of the molecules that we are currently developing at Lila. It is an absolutely fantastic place to work. It was one of the coolest experiences of my career.

The Institute for Protein Design has a program called the Translational Investigator Program, which incubates promising technologies before it spins them out. I was part of that program for four or five years where I was generating some of the translational data. I met Jake Kraft, the CEO of Lila Biologics, at IPD, and we decided to team up in 2023 to spin out Lila.

You got a huge boost recently, a collaboration with Eli Lilly, one of the world’s largest pharmaceutical companies. What are you hoping to achieve together, and what’s your timeline?

The current collaboration is one year, and then there are other targets that we can work on. We are really excited to be partnering with Lilly, mainly because, as you mentioned, it is one of the top pharma companies in the US. We are excited to learn from each other, as well as leverage their amazing clinical developmental team to actually develop medicine for patients who don’t have that many options currently.

You are using artificial intelligence and machine learning to create cancer treatments. What exactly are you developing?

Lila Biologics is a pre-clinical stage company. We use machine learning to design novel drugs. We have mainly two different interests. One is to develop targeted radiotherapy to treat solid tumors, and the second is developing long acting injectables for lung and heart diseases. What I mean by long acting injectables is something that you take every three or six months.

Tell me a little bit more about the type of tumors that you are focusing on.

We have a wide variety of solid tumors that we are going for, lung cancer, ovarian cancer, and pancreatic cancer. That’s something that we are really focused on.

And tell me a little bit about the partnership you have with Eli Lilly. What are you creating there when it comes to cancers?

The collaboration is mainly centered around targeted radiotherapy for treating solid tumors, and it’s a multi-target research collaboration. Lila Biologics is responsible for giving Lilly a development candidate, which is basically an optimized drug molecule that is ready for FDA filing. Lilly will take over after we give them the optimized molecule for the clinical development and taking those molecules through clinical trials.

Why use AI for this? What edge is that giving you, or what opportunities does it have that human intelligence can’t accomplish?

In the last couple of years, artificial intelligence has fundamentally changed how we actually design proteins. For example, in last five years, the success rate of designing protein in the computer has gone from around one to 2% to 10% or more. With that unprecedented success rate, we do believe we can bring a lot of drugs needed for the patients, especially for cancer and cardiovascular diseases.

In general, drug design is a very, very difficult problem, and it has really, really high failure rates. So, for example, 90% of the drugs that actually enter the clinic actually fail, mainly due to you cannot make them in scale, or some toxicity issues. When we first started Lila, we thought we can take a holistic approach, where we can actually include some of this downstream risk in the computational design part. So, we asked, can machine learning help us designing proteins that scale well? Meaning, can we make them in large scale, or they’re stable on the benchtop for months, so we don’t face those costly downstream failures? And so far, it’s looking really promising.

When did you realize you might be able to use machine learning and AI to treat cancer?

When we actually looked at this problem, we were thinking whether we can actually increase the clinical success rate. That has been one of the main bottlenecks of drug design. As I mentioned before, 90% of the drugs that actually enter the clinic fail. So, we are really hoping we can actually change that in next five to 10 years, where you can actually confidently predict the clinical properties of a molecule. In other words, what I’m trying to say is that can you predict how a molecule will behave in a living system. And if we can do that confidently, that will increase the success rate of drug development. And we are really optimistic, and we’ll see how it turns out in the next five to 10 years.

Beyond treating hard to tackle tumors at Lila, are there other challenges you hope to take on in the future?

Yeah. It is a really difficult problem to predict how a molecule will behave in a living system. Meaning, we are really good at designing molecules that behave in a certain way, bind to a protein in a certain way, but the moment you try to put that molecule in a human, it’s really hard to predict how that molecule will behave, or whether the molecule is going to the place of the disease, or the tissue of the disease. And that is one of the main reasons there is a 90% failure in drug development.

I think the whole field is moving towards this predictability of biological properties of a molecule, where you can actually predict how this molecule will behave in a human system, or how long it will stay in the body. I think when the computational tools become good enough, when we can predict these properties really well, I think that’s where the fun begins, and we can actually generate molecules with a really high success rate in a really short period of time.
