AI Insights

A deep dive into artificial intelligence with enhanced optimization-based security breach detection in internet of health things enabled smart city environment

This paper proposes a novel SADDBN-AMOA technique for smart city-based Internet of Health Things (IoHT) networks. Its main aim is to provide a resilient attack detection method for the IoHT environment of smart cities in order to mitigate security threats. The technique comprises four stages: data pre-processing, feature selection with the slime mould optimization (SMO) algorithm, attack detection with a deep learning (DL) model, and hyperparameter tuning with an improved Harris hawks optimization (IHHO) algorithm. Figure 2 depicts the entire process of the SADDBN-AMOA model.

Data Pre-processing through normalization

The data pre-processing step first applies Z-score normalization to transform the input data into a consistent, standardized form [31]. This method is chosen for its efficiency in standardizing data. Each feature is rescaled to a mean of zero and a standard deviation of one, which helps stabilize training and speed up convergence. Min-max scaling is sensitive to outliers, because a single extreme value compresses all other values into a narrow range. Z-score normalization instead accounts for the spread of the data and is therefore somewhat less affected by extreme values. It also ensures that features with different units or scales contribute comparably to the model, which avoids bias toward variables with larger ranges. Additionally, it benefits models such as the DBN, which generally train better on zero-centred inputs of comparable scale. Hence, it improves the model's stability, accuracy, and generalization compared with other scaling techniques.

Z-score normalization, or standard score normalization, is a data pre-processing method that transforms each feature value $x$ into $z = (x - \mu)/\sigma$. Here, $\mu$ and $\sigma$ are the mean and standard deviation of that feature, so every feature ends up centred at zero with a standard deviation of 1. For attack detection in IoHT environments, this ensures that each feature contributes comparably to the detection model, particularly when features have different units or scales. This matters for ML methods that are sensitive to feature scale, such as neural networks, support vector machines (SVM), and k-nearest neighbours (k-NN). Standardization improves accuracy and convergence speed by reducing the bias toward features with large magnitudes. In IoHT systems, sensor readings can vary widely, and standardization makes intrusion and anomaly detection more reliable. It also supports more efficient classification and feature selection on resource-constrained IoHT devices.
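A minimal NumPy sketch of the Z-score step is shown below. It makes two assumptions that the text does not state:

- The mean and standard deviation are estimated on the training split only and then reused for any later data.
- Constant features (standard deviation 0) keep a divisor of 1.

The three columns are made-up values chosen only because their scales differ widely.

```python
import numpy as np

def zscore_fit(X_train: np.ndarray, eps: float = 1e-12):
    """Estimate the per-feature mean and standard deviation from training data."""
    mu = X_train.mean(axis=0)
    sigma = X_train.std(axis=0)
    # Constant features would cause a division by zero; leave them centred but unscaled.
    sigma = np.where(sigma < eps, 1.0, sigma)
    return mu, sigma

def zscore_transform(X: np.ndarray, mu: np.ndarray, sigma: np.ndarray) -> np.ndarray:
    """Apply z = (x - mu) / sigma to every column."""
    return (X - mu) / sigma

# Hypothetical IoHT records: heart rate (bpm), packet size (bytes), body temperature (deg C).
X_train = np.array([
    [72.0, 512.0, 36.6],
    [88.0, 1500.0, 37.1],
    [65.0, 64.0, 36.4],
    [95.0, 900.0, 38.0],
])
mu, sigma = zscore_fit(X_train)
Z = zscore_transform(X_train, mu, sigma)
print(Z.mean(axis=0).round(6))  # each column is now centred at 0
print(Z.std(axis=0).round(6))   # and has unit standard deviation
```

At inference time, new records would go through `zscore_transform` with the same `mu` and `sigma`. This keeps test data on the scale the model was trained on.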

Feature optimization with SMO model

For feature selection, the proposed SADDBN-AMOA model uses the slime mould optimization (SMO) method to choose the most relevant features from the data [32]. SMO was selected for its strong global search capability and its balance between exploration and exploitation, which helps it avoid the premature convergence common in other algorithms. Compared with conventional methods such as genetic algorithms (GA) or particle swarm optimization (PSO), SMO shows faster convergence and better adaptability to complex, high-dimensional data. Its bio-inspired mechanisms identify the most relevant features and reduce dimensionality without discarding critical information. This improves model accuracy and lowers computational cost. Moreover, SMO is simple and has few parameters. It is therefore easy to implement and tune, and it provides a robust, reliable approach for optimizing feature subsets in IoHT security applications. SMO is a recent meta-heuristic inspired by the foraging and diffusion behaviour of slime mould. It has three stages: approaching food, wrapping food, and grabbling food. The mathematical formulation of each stage is given below.

Stage 1-Approaching Food: The slime mould (SM) approaches food by following the odour that the food releases. The following equations model this approaching behaviour, including the contraction mode of the SM. The position update that drives the SM toward food is given by Eq. (1):

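$$\vec{X}(t+1)=\begin{cases}\vec{X}_b(t)+\vec{v}_b\cdot\left(W\cdot\vec{X}_A(t)-\vec{X}_B(t)\right), & r<p\\ \vec{v}_c\cdot\vec{X}(t), & r\ge p\end{cases}$$

(1)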

Here, $\vec{v}_b$ is a parameter in the range $[-a, a]$, and $\vec{v}_c$ decreases linearly from 1 to 0 as the iterations progress. $W$ denotes the weight of the slime mould, and $t$ is the current iteration. $\vec{X}_A(t)$ and $\vec{X}_B(t)$ are two individuals selected at random from the SM population. $\vec{X}$ is the location of the SM, and $\vec{X}_b$ is the individual location with the highest odour concentration found so far. The value of $p$ is calculated with Eq. (2):

$$p=\tanh\left|S(i)-DF\right|$$

(2)

Here, $S(i)$ is the fitness of $\vec{X}$, with $i \in \{1, 2, 3, \ldots, n\}$, and $DF$ is the best fitness obtained over all iterations. The value of $\vec{v}_b$ ranges from $-a$ to $a$, where $a$ is calculated using Eqs. (3) and (4).

$$\vec{v}_b\in[-a,a]$$

(3)

$$a=\operatorname{arctanh}\left(-\frac{t}{\max_{iter}}+1\right)$$

(4)

The weight $W$ is calculated with Eq. (5):

$$\vec{W}(SmellIndex(i))=\begin{cases}1+r\cdot\log\left(\frac{b_F-S(i)}{b_F-w_F}+1\right), & \text{if } S(i)<Med[S]\\ 1-r\cdot\log\left(\frac{b_F-S(i)}{b_F-w_F}+1\right), & \text{otherwise}\end{cases}$$

(5)

$$SmellIndex=Sort(S)$$

(6)

In these equations, $r$ is a random value in $[0, 1]$, and the first case of Eq. (5) applies when $S(i)$ ranks in the first half of the population. $b_F$ and $w_F$ are the best and worst fitness values obtained in the current iteration. $\max_{iter}$ is the maximum number of iterations, $Med[S]$ is the median operator, and $SmellIndex$ is the sequence of fitness values sorted in order.

Stage 2-Food Wrapping: The search pattern of the SM is shaped by the quality of the food source. When the food concentration at a position is high, the weight of that region increases. When the concentration is low, the weight of the region decreases and the SM turns to search other regions. This stage updates the position of the SM using Eq. (7):

$$\vec{X}(t+1)=\begin{cases}rand\cdot(UB-LB)+LB, & rand<z\\ \vec{X}_b(t)+\vec{v}_b\cdot\left(W\cdot\vec{X}_A(t)-\vec{X}_B(t)\right), & r<p\\ \vec{v}_c\cdot\vec{X}(t), & r\ge p\end{cases}$$

(7)

where $z$ is a probability that balances exploration and exploitation, and $UB$ and $LB$ are the upper and lower bounds of the search space.

Stage 3-Food Grabbling: The SM moves toward places with a high food concentration. $W$, $\vec{v}_b$, and $\vec{v}_c$ model the changes in the width of the SM's veins. $\vec{v}_b$ and $\vec{v}_c$ oscillate within $[-a, a]$ and $[-1, 1]$, respectively, and both approach 0 as the iterations progress.
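The three stages can be combined into a compact minimisation loop. The NumPy sketch below is one reading of Eqs. (1) to (7), not the authors' code. It makes these assumptions:

- z = 0.03, a value often used with SMA.
- $\vec{v}_c$ is drawn uniformly from $[-b, b]$ with $b = 1 - t/\max_{iter}$.
- Positions are clipped to the bounds after each update.
- A toy sphere function stands in for the feature-selection fitness of Eq. (8).

```python
import numpy as np

def sphere(x: np.ndarray) -> float:
    """Toy objective with its minimum at the origin."""
    return float(np.sum(x ** 2))

def slime_mould_optimize(f, dim, lb, ub, pop=30, max_iter=200, z=0.03, seed=0):
    """Minimise f over [lb, ub]^dim with a basic slime mould algorithm (Eqs. 1-7)."""
    rng = np.random.default_rng(seed)
    X = rng.uniform(lb, ub, size=(pop, dim))
    best_x, best_f = X[0].copy(), np.inf      # X_b and DF in the text
    eps = np.finfo(float).eps
    for t in range(1, max_iter + 1):
        S = np.array([f(x) for x in X])
        order = np.argsort(S)                 # SmellIndex = Sort(S), Eq. (6)
        b_F, w_F = S[order[0]], S[order[-1]]  # best and worst fitness this iteration
        if b_F < best_f:
            best_f, best_x = b_F, X[order[0]].copy()

        # Eq. (5): ratio lies in [0, 1] for minimisation; eps avoids 0/0 when b_F == w_F.
        ratio = (b_F - S) / (b_F - w_F - eps)
        r = rng.random((pop, dim))
        better_half = (S < np.median(S))[:, None]
        log_term = np.log(ratio + 1)[:, None]
        W = np.where(better_half, 1 + r * log_term, 1 - r * log_term)

        a = np.arctanh(1 - t / max_iter)      # Eq. (4); reaches 0 at the last iteration
        b = 1 - t / max_iter                  # range of v_c shrinks linearly to 0
        p = np.tanh(np.abs(S - best_f))       # Eq. (2)

        new_X = np.empty_like(X)
        for i in range(pop):
            if rng.random() < z:              # first case of Eq. (7): random relocation
                new_X[i] = rng.uniform(lb, ub, dim)
                continue
            v_b = rng.uniform(-a, a, dim)     # Eq. (3)
            v_c = rng.uniform(-b, b, dim)
            A, B = rng.integers(0, pop, size=2)  # two random individuals X_A, X_B
            approach = best_x + v_b * (W[i] * X[A] - X[B])
            contract = v_c * X[i]
            new_X[i] = np.where(rng.random(dim) < p[i], approach, contract)
        X = np.clip(new_X, lb, ub)

    # Evaluate the final population so the last update is not wasted.
    S = np.array([f(x) for x in X])
    if S.min() < best_f:
        best_f, best_x = S.min(), X[np.argmin(S)].copy()
    return best_x, float(best_f)

best_x, best_f = slime_mould_optimize(sphere, dim=5, lb=-10.0, ub=10.0)
print(best_f)
```

For feature selection, `sphere` would be replaced by a fitness such as Eq. (8), evaluated on a feature mask derived from each position.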

The fitness function (FF) considers both the classification accuracy and the number of selected features. It maximizes the classification accuracy while minimizing the size of the selected feature subset. The FF used to evaluate candidate solutions is given in Eq. (8).

$$Fitness=\alpha\cdot ErrorRate+(1-\alpha)\cdot\frac{\#SF}{\#All\_F}$$

(8)

Here, $ErrorRate$ is the classification error rate obtained with the selected features. It is calculated as the number of incorrectly classified samples divided by the total number of classifications, so it takes a value between 0 and 1. $\#SF$ is the number of selected features, and $\#All\_F$ is the total number of features in the original dataset. $\alpha$ controls the relative importance of the two terms.
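Eq. (8) can be evaluated for a candidate position as sketched below. The sketch makes four assumptions that are not taken from the paper:

- The continuous-to-binary transfer rule: a coordinate above 0.5 keeps the feature.
- A nearest-centroid classifier stands in for the real model.
- $\alpha = 0.99$.
- An empty feature subset receives the worst score of 1.0.

```python
import numpy as np

def feature_mask(position: np.ndarray, threshold: float = 0.5) -> np.ndarray:
    """Assumed transfer rule: keep feature k when its coordinate exceeds the threshold."""
    return position > threshold

def nearest_centroid_error(X_tr, y_tr, X_va, y_va) -> float:
    """Error rate of a nearest-centroid classifier, a stand-in for the real model."""
    classes = np.unique(y_tr)
    centroids = np.stack([X_tr[y_tr == c].mean(axis=0) for c in classes])
    dists = ((X_va[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=2)
    pred = classes[np.argmin(dists, axis=1)]
    return float(np.mean(pred != y_va))

def fs_fitness(position, X_tr, y_tr, X_va, y_va, alpha=0.99) -> float:
    """Eq. (8): alpha * ErrorRate + (1 - alpha) * #SF / #All_F (lower is better)."""
    mask = feature_mask(position)
    if not mask.any():
        return 1.0  # an empty subset is given the worst possible score
    err = nearest_centroid_error(X_tr[:, mask], y_tr, X_va[:, mask], y_va)
    return alpha * err + (1 - alpha) * mask.sum() / mask.size

# Synthetic data: only feature 0 separates the two classes; features 1-3 are noise.
rng = np.random.default_rng(1)
y = rng.integers(0, 2, 200)
X = rng.normal(size=(200, 4))
X[:, 0] += 3.0 * y
X_tr, X_va, y_tr, y_va = X[:150], X[150:], y[:150], y[150:]

only_f0 = np.array([0.9, 0.1, 0.2, 0.3])       # selects feature 0 only
all_features = np.array([0.9, 0.8, 0.7, 0.6])  # selects all four features
print(fs_fitness(only_f0, X_tr, y_tr, X_va, y_va))
print(fs_fitness(all_features, X_tr, y_tr, X_va, y_va))
```

With $\alpha$ close to 1, the error term dominates. The feature-count term mainly acts as a tie-breaker that favours smaller subsets of similar accuracy.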

DL for attack detection

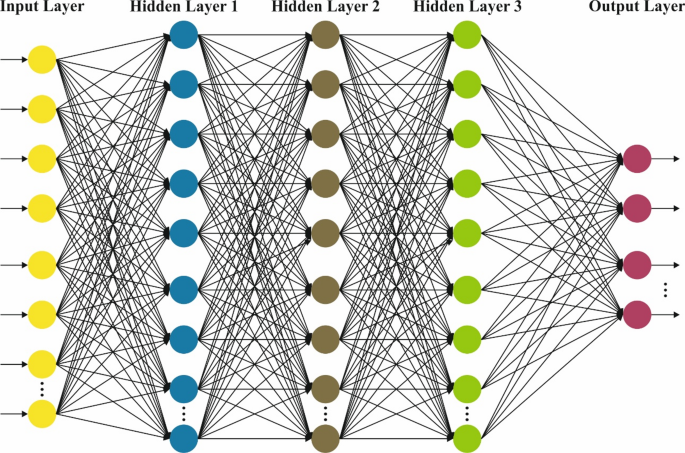

A deep belief network (DBN) is used for the attack classification process [33]. The DBN is chosen for its ability to learn hierarchical feature representations from complex, high-dimensional data, which is common in IoHT environments. Unlike conventional classifiers, a DBN extracts deep features without extensive manual feature engineering, which improves detection accuracy. It also combines unsupervised pretraining with supervised fine-tuning, which improves generalization and mitigates overfitting. Compared with models such as standard neural networks or SVMs, DBNs are better at capturing complex patterns and dependencies in security breach data. This yields robust and reliable classification performance, making DBNs appropriate for the dynamic and diverse nature of IoHT security threats. Figure 3 shows the structure of the DBN.

DBNs are a DL method designed to capture hierarchical representations of data. They consist of multiple layers of stochastic latent variables and can learn complex patterns from high-dimensional data. DBNs are valuable in settings where feature learning and dimensionality reduction are essential, such as network intrusion detection. This part examines the mathematical foundations, architecture, and applications of DBNs, highlighting their role in recognizing network attacks. A DBN comprises several stacked Restricted Boltzmann Machine (RBM) layers followed by a final supervised layer, often a softmax classifier. The DBN structure consists of the following elements:

RBMs: Each RBM in the DBN is a two-layer stochastic neural network comprising a visible layer (VL) and a hidden layer (HL). The VL represents the input data, whereas the HL captures higher-level features. The network is trained to maximize the likelihood of the data, and the connections between the two layers are undirected. The main components are:

- VL: Represents the observed data; its units can be binary or real-valued.

- HL: Represents the features learned from the input data and captures hidden patterns.

- Weights: The connections between the VL and the HL carry weights that are learned during training.

The RBM’s energy function is provided by:

$$E(v,h)=-\sum_i b_i v_i-\sum_j c_j h_j-\sum_{i,j} w_{ij} v_i h_j$$

(9)

Here, $v$ and $h$ denote the visible and hidden units, and $w_{ij}$ is the weight connecting visible unit $i$ to hidden unit $j$. $b_i$ and $c_j$ are the visible and hidden biases.
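To make Eq. (9) concrete, the sketch below evaluates the energy of one configuration of a tiny RBM with three visible and two hidden binary units. All weights and biases are arbitrary illustrative values. The sketch also checks a standard consequence of this energy form by enumerating every hidden state: given $v$, hidden unit $j$ is on with probability $\sigma(c_j + \sum_i v_i w_{ij})$. This closed form is what makes layer-wise sampling in an RBM cheap.

```python
import itertools
import numpy as np

def rbm_energy(v, h, W, b, c) -> float:
    """Eq. (9): E(v, h) = -sum_i b_i v_i - sum_j c_j h_j - sum_ij w_ij v_i h_j."""
    return float(-(b @ v) - (c @ h) - v @ W @ h)

def p_hidden_bruteforce(v, W, b, c) -> np.ndarray:
    """P(h_j = 1 | v) obtained by summing exp(-E) over every binary hidden vector."""
    configs = [np.array(h, dtype=float) for h in itertools.product([0, 1], repeat=len(c))]
    weights = np.array([np.exp(-rbm_energy(v, h, W, b, c)) for h in configs])
    H = np.stack(configs)
    return (weights[:, None] * H).sum(axis=0) / weights.sum()

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

W = np.array([[0.5, -0.2], [0.3, 0.1], [-0.4, 0.8]])
b = np.array([0.1, 0.2, -0.1])   # visible biases b_i
c = np.array([0.05, -0.3])       # hidden biases c_j
v = np.array([1.0, 0.0, 1.0])
h = np.array([1.0, 1.0])

print(rbm_energy(v, h, W, b, c))        # approximately -0.45
print(p_hidden_bruteforce(v, W, b, c))  # should match the closed form below
print(sigmoid(c + v @ W))
```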

Stacking RBMs: A DBN is built by stacking several RBMs into a deeper structure. The RBMs are trained layer by layer, and the hidden-layer output of each RBM serves as the input to the next one.

Final Classification Layer: After the RBMs are pretrained, a final supervised layer is added to perform classification or regression. Learning in a DBN therefore consists of two main stages: pretraining and fine-tuning.

Pretraining: Each RBM is trained independently with unsupervised learning. The aim is to learn weights that maximize the likelihood of the data. RBMs are usually trained with the contrastive divergence (CD) algorithm, which updates the weights as follows:

$$\Delta w_{ij}=\eta\left(\langle v_i h_j\rangle_{data}-\langle v_i h_j\rangle_{model}\right)$$

(10)

Here, $\eta$ is the learning rate. $\langle v_i h_j\rangle_{data}$ is the expected value of $v_i h_j$ when the visible units are clamped to the training data. $\langle v_i h_j\rangle_{model}$ is its expected value under the model distribution, which CD approximates with a small number of Gibbs sampling steps.
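The sketch below shows one way to implement Eq. (10) with CD-1 for a binary (Bernoulli) RBM and to stack RBMs greedily as described above. It illustrates the technique and is not the paper's implementation. Its assumptions:

- Inputs are binary or lie in [0, 1]. Z-scored, real-valued features would usually call for a Gaussian-Bernoulli visible layer instead.
- The model term is approximated by a single Gibbs step.
- The layer sizes, learning rate, and epoch count are arbitrary.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

class BernoulliRBM:
    """Binary RBM trained with one-step contrastive divergence (CD-1)."""

    def __init__(self, n_visible: int, n_hidden: int, rng: np.random.Generator):
        self.W = 0.01 * rng.standard_normal((n_visible, n_hidden))
        self.b = np.zeros(n_visible)  # visible biases b_i
        self.c = np.zeros(n_hidden)   # hidden biases c_j
        self.rng = rng

    def hidden_probs(self, v):
        return sigmoid(v @ self.W + self.c)

    def visible_probs(self, h):
        return sigmoid(h @ self.W.T + self.b)

    def cd1_step(self, v0, eta=0.1) -> float:
        """Apply Eq. (10) once; returns the reconstruction error before the update."""
        ph0 = self.hidden_probs(v0)                            # drives <v_i h_j>_data
        h0 = (self.rng.random(ph0.shape) < ph0).astype(float)  # sample h ~ p(h | v0)
        v1 = self.visible_probs(h0)                            # one Gibbs step back to the VL
        ph1 = self.hidden_probs(v1)                            # approximates <v_i h_j>_model
        n = v0.shape[0]
        self.W += eta * (v0.T @ ph0 - v1.T @ ph1) / n
        self.b += eta * (v0 - v1).mean(axis=0)
        self.c += eta * (ph0 - ph1).mean(axis=0)
        return float(np.mean((v0 - v1) ** 2))

def pretrain_dbn(X, layer_sizes, epochs=200, eta=0.1, seed=0):
    """Greedy layer-wise pretraining: each RBM's hidden probabilities feed the next."""
    rng = np.random.default_rng(seed)
    rbms, layer_input = [], X
    for n_hidden in layer_sizes:
        rbm = BernoulliRBM(layer_input.shape[1], n_hidden, rng)
        for _ in range(epochs):
            rbm.cd1_step(layer_input, eta)
        rbms.append(rbm)
        layer_input = rbm.hidden_probs(layer_input)
    return rbms, layer_input

# Toy binary data: two 6-bit prototypes with about 5% of the bits flipped.
rng = np.random.default_rng(42)
prototypes = np.array([[1, 1, 1, 0, 0, 0], [0, 0, 0, 1, 1, 1]], dtype=float)
X = prototypes[rng.integers(0, 2, 200)]
X = np.abs(X - (rng.random(X.shape) < 0.05))
rbms, features = pretrain_dbn(X, layer_sizes=[4, 2])
print(features.shape)  # top-layer features that would feed the supervised layer
```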

Fine-tuning: The complete DBN is then fine-tuned. This step updates the weights of the final classification layer and, optionally, the weights of the RBMs. It uses gradient descent to minimize the following loss function:

$$L=-\sum_i\left(y_i\log(\hat{y}_i)+(1-y_i)\log(1-\hat{y}_i)\right)$$

(11)

where $y_i$ denotes the true labels and $\hat{y}_i$ the predicted probabilities.
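Eq. (11) and the fine-tuning step can be sketched as follows. The example assumes a single sigmoid output unit trained by plain gradient descent on fixed DBN features, so only the final layer is updated, which is one of the options mentioned above. The learning rate, epoch count, and random stand-in features are illustrative choices. The update uses the standard result that, for a sigmoid output with this loss, the gradient with respect to the pre-activation is $\hat{y}_i - y_i$.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def bce_loss(y_true, y_prob, eps=1e-12) -> float:
    """Eq. (11): L = -sum_i [y_i log(y_hat_i) + (1 - y_i) log(1 - y_hat_i)]."""
    p = np.clip(y_prob, eps, 1 - eps)  # keep log() finite
    return float(-np.sum(y_true * np.log(p) + (1 - y_true) * np.log(1 - p)))

def finetune_output_layer(H, y, epochs=300, lr=0.5):
    """Gradient descent on a logistic output layer placed on top of DBN features H."""
    w, bias = np.zeros(H.shape[1]), 0.0
    losses = []
    for _ in range(epochs):
        p = sigmoid(H @ w + bias)
        losses.append(bce_loss(y, p))
        grad_z = p - y                 # dL/dz for a sigmoid output with this loss
        w -= lr * H.T @ grad_z / len(y)
        bias -= lr * grad_z.mean()
    return w, bias, losses

print(bce_loss(np.array([1, 0, 1, 0]), np.array([0.9, 0.2, 0.6, 0.1])))  # about 0.9447

rng = np.random.default_rng(0)
H = rng.random((100, 3))               # stand-in for top-layer DBN features
y = (H[:, 0] > 0.5).astype(float)
w, bias, losses = finetune_output_layer(H, y)
print(losses[0], losses[-1])           # the loss falls as the layer is trained
```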

DBNs can learn hierarchical representations of network traffic data, which allows complex attack patterns to be recognized. Their ability to extract and learn from higher-level features makes them suitable for identifying both known and unknown intrusions. DBNs improve feature extraction and dimensionality reduction in network intrusion detection systems. This strengthens the systems' ability to recognize subtle patterns and anomalies that indicate malicious activity. The deep hierarchical architecture of DBNs supports more complex classification and a richer understanding of network traffic, which can yield high precision and robustness in identifying network attacks. Overall, DBNs provide a robust architecture for learning hierarchical feature representations. Their capacity to model complex patterns and reduce dimensionality makes them a useful tool in ongoing efforts to improve cybersecurity with advanced DL methods.

Model tuning with IHHO approach

Finally, the improved Harris hawks optimization (IHHO) technique tunes the hyperparameters of the DBN model to improve classification performance [34]. IHHO is chosen for its strong balance between exploration and exploitation, which helps it avoid local optima and search the global space efficiently. By replicating the cooperative hunting strategy of Harris hawks, it adapts dynamically and converges faster than conventional optimization methods such as GA or PSO. Its ability to fine-tune hyperparameters yields higher detection accuracy and fewer false positives in complex IoHT environments. Moreover, its flexibility and robustness make it suitable for optimizing DL models, ensuring reliable performance even with high-dimensional and noisy data.

The HHO algorithm is a population-based optimizer that emulates the cooperative behaviour and hunting strategy of Harris hawks, a predatory bird, during foraging. While the algorithm runs, each hawk represents a candidate solution, and the prey represents the optimum solution that the population approaches over the iterations. HHO can be divided into three phases: global exploration, the transition between exploration and exploitation, and local exploitation.

The standard HHO algorithm has the following shortcomings:

- The population is initialized at random. Random initialization can distribute the hawks unevenly over the search space, which makes the algorithm's performance unstable.

- In each iteration, only the information of the best location is used. As a result, the whole algorithm can fall into a local optimum when the current best location is itself a local optimum.

- During the search, HHO chooses its hunting strategy entirely on the basis of the parameters $\lambda$ and $E$, so these two parameters directly affect its final result. The decay of the escape energy $E$ should follow how the prey's energy actually changes while it escapes, and $\lambda$ should preserve randomness. Otherwise, the algorithm's exploration and exploitation abilities decline.

Logistic-tent chaotic mapping for population initialization

This approach is adopted to address the uneven distribution of the initial population. Studies have shown that combining several low-dimensional chaotic maps into a compound chaotic map can effectively resolve this issue. The logistic map is defined as:

$$X_{n+1}=\mu X_n\left(1-X_n\right),\quad n=1,2,\ldots$$

(12)

Here, $\mu$ is the control parameter and $X$ is the system variable. Used on its own, the logistic map has a similar problem: the values it generates in the interval (0, 1) are not evenly distributed. The tent map is defined as:

$$X_{n+1}=\begin{cases}\dfrac{X_n}{\alpha}, & X_n\in[0,\alpha)\\ \dfrac{1-X_n}{1-\alpha}, & X_n\in[\alpha,1]\end{cases}$$

(13)

Here, $X$ is the system variable and $\alpha\in(0,1)$ is the control parameter. The tent map likewise has few control parameters and a limited chaotic interval.

Following the principle of compound chaotic maps, the combined logistic-tent map is formed by feeding the output of the logistic map into the tent map and iterating. This compound map iterates quickly and has good autocorrelation properties, which helps overcome the drawbacks of random population initialization.

$$X_{n+1}=\begin{cases}\left[\mu X_n(1-X_n)+\dfrac{(4-\mu)X_n}{2}\right]\bmod 1, & X_n<0.5\\ \left[\mu X_n(1-X_n)+\dfrac{(4-\mu)(1-X_n)}{2}\right]\bmod 1, & X_n\ge 0.5\end{cases}$$

(14)

Here, $X_n$ is the position of a randomly generated hawk, and $X_{n+1}$ is the position of that hawk after the compound chaotic map is applied.
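The chaotic initialization can be sketched as follows. The text does not specify the following values and choices, so they are assumptions made for illustration:

- The control parameter μ = 3.8.
- The seed value x0 = 0.37 and the burn-in length.
- Drawing all coordinates from a single chaotic sequence and then scaling them linearly to [LB, UB].

```python
import numpy as np

def logistic_tent(x: float, mu: float = 3.8) -> float:
    """One iteration of the compound logistic-tent map in Eq. (14)."""
    if x < 0.5:
        return (mu * x * (1 - x) + (4 - mu) * x / 2) % 1.0
    return (mu * x * (1 - x) + (4 - mu) * (1 - x) / 2) % 1.0

def chaotic_population(pop, dim, lb, ub, x0=0.37, mu=3.8, burn_in=100):
    """Initialise hawk positions in [lb, ub] from one logistic-tent chaotic sequence."""
    x = x0
    for _ in range(burn_in):            # discard the transient part of the orbit
        x = logistic_tent(x, mu)
    seq = np.empty(pop * dim)
    for k in range(seq.size):
        x = logistic_tent(x, mu)
        seq[k] = x
    return lb + seq.reshape(pop, dim) * (ub - lb)  # map [0, 1) onto the search bounds

hawks = chaotic_population(pop=30, dim=4, lb=-5.0, ub=5.0)
print(hawks.shape, hawks.min(), hawks.max())
```

In the IHHO setting, `hawks` would replace the uniformly random initial population, and the rest of the optimizer would be unchanged.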

Population hierarchies improve search strategy

This approach introduces a population hierarchy. In each iteration, it uses the five best locations rather than the single best location used in the original HHO. This increases information exchange within the population and reduces the risk of the algorithm falling into a local optimum.

$$X_{rabbit}=\sum_{i=a}^{e}\frac{f\left(X_{ir}(t)\right)}{\sum_{j=a}^{e}f\left(X_{jr}(t)\right)}\cdot X_{ir}(t)$$

(15)

Here, $a$, $b$, $c$, $d$, and $e$ index the five best locations in terms of fitness value, and $X_{rabbit}$ is the prey location used in each iteration. $t$ is the iteration count, and $f(X_{ir}(t))$ is the fitness value of the $i$-th of these locations at iteration $t$.
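The population hierarchy can be sketched as below. The code follows a weighted-average reading of Eq. (15), in which each of the five best positions is weighted by its share of their total fitness. This reading is an interpretation of the garbled source equation. The sketch assumes non-negative fitness values, such as an error rate, and falls back to equal weights when all five are zero. Read literally for a minimised fitness, these weights give slightly more influence to the weaker of the five positions. An inverse-fitness weighting would be an alternative if that effect is not intended.

```python
import numpy as np

def hierarchical_rabbit(X: np.ndarray, fitness: np.ndarray, k: int = 5) -> np.ndarray:
    """Fitness-weighted average of the k best hawks (one reading of Eq. 15)."""
    top = np.argsort(fitness)[:k]        # indices a, b, c, d, e (lowest fitness first)
    f_top = fitness[top]
    total = f_top.sum()
    if total == 0:                       # all k are perfect: weight them equally
        weights = np.full(len(top), 1.0 / len(top))
    else:
        weights = f_top / total          # f(X_ir) / sum_j f(X_jr)
    return weights @ X[top]

rng = np.random.default_rng(3)
X = rng.uniform(-1, 1, size=(20, 3))     # 20 hawks in a 3-D search space
fitness = rng.uniform(0.05, 0.4, size=20)  # e.g. error rates of candidate DBNs
print(hierarchical_rabbit(X, fitness))
```

Because the result is a convex combination of the five best hawks, the prey position always lies inside the region they span.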

Improved escape-energy decay strategy

Inspired by fractional-order predator-prey dynamics with shelters, an improved escape-energy decay strategy is proposed. It introduces a contraction function into the energy-decay equation so that the escape energy $E$ remains low in the middle and late iterations, which is intended to keep the algorithm from stagnating in local optima.

$$E_1=2\cdot rand\cdot e^{-\frac{\pi}{2}\cdot\frac{c}{T}}$$

(16)

$$E=2E_0\cdot E_1$$

(17)
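The following sketch samples the escape energy of Eqs. (16) and (17) across a run. It assumes that:

- $c$ is the current iteration and $T$ is the maximum number of iterations.
- $rand$ is uniform on [0, 1].
- $E_0$ is uniform on [-1, 1], as in the original HHO.

The text does not define these symbols explicitly. The phase rule (|E| ≥ 1 means exploration) is the standard HHO convention. Under these assumptions, the mean of |E| is $e^{-\pi c/(2T)}$. At $c = T$, every sample satisfies $|E| \le 4e^{-\pi/2} \approx 0.83$, so the final iterations always exploit.

```python
import numpy as np

def escape_energy(c, T, rng, size=None):
    """Eqs. (16)-(17): E = 2 * E0 * E1 with E1 = 2 * rand * exp(-(pi / 2) * c / T)."""
    E0 = rng.uniform(-1.0, 1.0, size)   # initial prey energy, as in standard HHO (assumed)
    E1 = 2.0 * rng.random(size) * np.exp(-(np.pi / 2.0) * c / T)
    return 2.0 * E0 * E1

def phase(E: float) -> str:
    """Standard HHO rule: |E| >= 1 -> exploration, otherwise exploitation."""
    return "exploration" if abs(E) >= 1.0 else "exploitation"

rng = np.random.default_rng(0)
T = 100
for c in (0, 25, 50, 75, 100):
    samples = escape_energy(c, T, rng, size=100_000)
    mean_abs = float(np.mean(np.abs(samples)))
    share_explore = float(np.mean(np.abs(samples) >= 1.0))
    print(c, round(mean_abs, 3), round(share_explore, 3))
```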

The IHHO algorithm uses a fitness function (FF) to achieve better classification performance. The FF returns a positive value that reflects the quality of each candidate solution. In this paper, the classification error rate, which is to be minimized, is taken as the FF, as given in Eq. (18).

$$\begin{aligned} fitness(x_i) &= ClassifierErrorRate(x_i)\\ &= \frac{\text{no. of misclassified samples}}{\text{total no. of samples}}\times 100 \end{aligned}$$

(18)
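Eq. (18) is straightforward to compute. The sketch below also shows how a hawk position could be decoded into DBN hyperparameters before that error rate is measured. The text does not say which hyperparameters IHHO tunes. The learning rate and hidden-layer size used here, and their ranges, are hypothetical examples.

```python
import numpy as np

def classifier_error_rate(y_true, y_pred) -> float:
    """Eq. (18): misclassified samples / total samples * 100."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    return float(100.0 * np.sum(y_true != y_pred) / y_true.size)

def decode_hyperparams(position):
    """Hypothetical mapping from a hawk position in [0, 1]^2 to DBN hyperparameters."""
    learning_rate = 10.0 ** (-4.0 + 3.0 * position[0])    # 1e-4 .. 1e-1 on a log scale
    n_hidden = int(round(16 + position[1] * (256 - 16)))  # 16 .. 256 hidden units
    return learning_rate, n_hidden

print(classifier_error_rate([0, 1, 1, 0, 2], [0, 1, 0, 0, 2]))  # 20.0
print(decode_hyperparams([0.5, 0.5]))
```

In a full loop, each hawk would be decoded, a DBN would be trained with those settings, and the resulting validation error from `classifier_error_rate` would serve as that hawk's fitness.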

‘It is a war of drones now’: the ever-evolving tech dominating the frontline in Ukraine | Ukraine

“It’s more exhausting,” says Afer, a deputy commander of the “Da Vinci Wolves”, describing how one of the best-known battalions in Ukraine has to defend against constant Russian attacks. Where once the invaders might have tried small group assaults with armoured vehicles, now the tactic is to try and sneak through on foot one by one, evading frontline Ukrainian drones, and find somewhere to hide.

Under what little cover remains, survivors then try to gather a group of 10 or so and attack Ukrainian positions. It is costly – “in the last 24 hours we killed 11,” Afer says – but the assaults that previously might have happened once or twice a day are now relentless. To the Da Vinci commander it seems that the Russians are terrified of their own officers, which is why they follow near suicidal orders.

Reconnaissance drones monitor a burnt-out tree line west of Pokrovsk; the images come through to Da Vinci’s command centre at one end of a 130-metre-long underground bunker. “It’s very dangerous to have even a small break on watching,” Afer says, and the team works round the clock. The bunker, built in four or five weeks, contains multiple rooms, including a barracks for sleep. Another is an army mess with children’s drawings, reminders of family. The menu for the week is listed on the wall.

It is three and a half years into the Ukraine war and Donald Trump’s August peace initiative has made no progress. Meanwhile the conflict evolves. Afer explains that such is the development of FPV (first person view) drones, remotely piloted using an onboard camera, that the so-called kill zone now extends “12 to 14 kilometres” behind the front – the range at which a $500 drone, flying at up to 60mph, can strike. It means, Afer adds, that “all the logistics [food, ammunition and medical supplies] we are doing is either on foot or with the help of ground drones”.

Further in the rear, at a rural dacha now used by Da Vinci’s soldiers, several types of ground drones are parked. The idea has moved rapidly from concept to trial to reality. They include remotely controlled machine guns and flatbed robot vehicles. One, the $12,000 Termit, has tracks for rough terrain and can carry 300kg over 12 miles with a top speed of 7 miles an hour.

Land drones save lives too. “Last night we evacuated a wounded man with two broken legs and a hole in his chest,” Afer continues. The whole process took “almost 20 hours” and involved two soldiers lifting the wounded man more than a mile to a land drone, which was able to cart the victim to a safe village. The soldier survived.

While Da Vinci reports its position is stable, endless Russian attempts at infiltration have been effective at revealing where the line is thinly held or poorly coordinated between neighbouring units. Russian troops last month penetrated Ukraine’s lines north-east of Pokrovsk near Dobropillya by as much as 12 miles – a dangerous moment in a critical sector, just ahead of Trump’s summit with Vladimir Putin in Alaska.

At first it was said a few dozen had broken through, but the final tally appears to have been much greater. Ukrainian military sources estimate that 2,000 Russians got through and that 1,100 infiltrators were killed in a fightback led by the 14th Chervona Kalyna brigade from Ukraine’s newly created Azov Corps – a rare setback for an otherwise slow but remorseless Russian advance.

That evening at another dacha used by Da Vinci, people linger in the yard while moths target the light bulbs. Inside, a specialist drone jammer sits on a gaming chair surrounded by seven screens arranged in a fan and supported by some complex carpentry.

It is too sensitive to photograph, but the team leader Oleksandr, whose call sign is Shoni, describes the jammer’s task. Both sides can intercept each other’s feeds from FPV drones and three screens are dedicated to capturing footage that can then help to locate them. Once discovered, the operator’s task is to find the radio frequency the drone is using and immobilise it with jammers hidden in the ground (unless, that is, they are fibre optic drones that use a fixed cable up to 12 miles long instead of a radio connection).

“We are jamming around 70%,” Shoni says, though he acknowledges that the Russians achieve a similar success rate. In their sector, this amounts to 30 to 35 enemy drones a day. At times, the proportion downed is higher. “During the last month, we closed the sky. We intercepted their pilots saying on the radio they could not fly,” he continues, but that changed after Russian artillery destroyed jamming gear on the ground. The battle, Shoni observes, ebbs and flows: “It is a war of drones now and there is a shield and there is a sword. We are the shield.”

A single drone pilot can operate 20 missions in 24 hours, says Sean, who flies FPVs for Da Vinci for several days at a stretch in a crew of two or three, hidden a few miles behind the frontline. Because the Russians are on the attack, the main target is their infantry. Sean frankly acknowledges he is “killing at least three Russian soldiers” during that time, in the deadly struggle between ground and air. Does it make it easier to kill the enemy, from a distance? “How can we tell, we only know this,” says Dubok, another FPV pilot, sitting alongside Sean.

Other anti-drone defences are more sophisticated. Ukraine’s third brigade holds the northern Kharkiv sector, east of the Oskil River, but to the west are longer-range defence positions. Inside, a team member watches over a radar, mostly looking for signs of Russian Supercam, Orlan and Zala reconnaissance drones. If they see a target, two dash out into fields ripe with sunflowers to launch an Arbalet interceptor: a small delta wing drone made of black polystyrene, which costs $500 and can be held in one hand.

The Arbalet’s top speed is a remarkable 110 miles an hour, though its battery life is a shortish 40 minutes. It is flown by a pilot hidden in the bunker via its camera using a sensitive hobbyists’ controller. The aim is to get it close enough to explode the grenade it carries and destroy the Russian drone. Buhan, one of the pilots, says “it is easier to learn how to fly it if you have never flown an FPV drone”.

It is an unusually wet and cloudy August day, which means a rare break from drone activity as the Russians will not be flying in the challenging conditions. The crew don’t want to launch the Arbalet in case they lose it, so there is time to talk. Buhan says he was a trading manager before the war, while Daos worked in investments. “I would have had a completely different life if it had not been for the war,” Daos continues, “but we all need to gather to fight to be free.”

So do the pilots feel motivated to carry on fighting when there appears to be no end? The two men look in my direction, and nod with a resolution not expressed in words.

Tech giants pay talent millions of dollars

Meta CEO Mark Zuckerberg offered $100 million signing bonuses to top OpenAI employees.

David Paul Morris | Bloomberg | Getty Images

The artificial intelligence arms race is heating up, and as tech giants scramble to come out on top, they’re dangling millions of dollars in front of a small talent pool of specialists in what’s become known as the AI talent war.

It’s seeing Big Tech firms like Meta, Microsoft, and Google compete for top AI researchers in an effort to bolster their artificial intelligence divisions and dominate the multibillion-dollar market.

Meta CEO Mark Zuckerberg recently embarked on an expensive hiring spree to beef up the company’s new AI Superintelligence Labs. This included poaching Scale AI co-founder Alexandr Wang as part of a $14 billion investment into the startup.

OpenAI’s Chief Executive Sam Altman, meanwhile, recently said the Meta CEO had tried to tempt top OpenAI talent with $100 million signing bonuses and even higher compensation packages.

Google is also a player in the talent war, tempting Varun Mohan, co-founder and CEO of artificial intelligence coding startup Windsurf, to join Google DeepMind in a $2.4 billion deal. Microsoft AI, meanwhile, has quietly hired two dozen Google DeepMind employees.

“In the software engineering space, there was an intense competition for talent even 15 years ago, but as artificial intelligence became more and more capable, the researchers and engineers that are specialized in this area has stayed relatively stable,” Alexandru Voica, head of corporate affairs and policy at AI video platform Synthesia, told CNBC Make It.

“You have this supply and demand situation where the demand now has skyrocketed, but the supply has been relatively constant, and as a result, there’s the [wage] inflation,” Voica, a former Meta employee and currently a consultant at the Mohamed bin Zayed University of Artificial Intelligence, added.

Voica said the multi-million dollar compensation packages are a phenomenon the industry has “never seen before.”

Here’s what’s behind the AI talent war:

Building AI models costs billions

The inflated salaries for specialists come hand-in-hand with the billion-dollar price tags of building AI models — the technology behind your favorite AI products like ChatGPT.

There are different types of AI companies. Some, like Synthesia, Cohere, Replika, and Lovable, build products; others, including OpenAI, Anthropic, Google, and Meta, build and train large language models.

“There’s only a handful of companies that can afford to build those types of models,” Voica said. “It’s very capital-intensive. You need to spend billions of dollars, and not a lot of companies have billions of dollars to spend on building a model. And as a result, those companies, the way they approach this is: ‘If I’m going to spend a billion dollars to build a model, $10 million for an engineer is a relatively low investment.'”

Anthropic’s CEO Dario Amodei told Time Magazine in 2024 that he expected the cost of training frontier AI models to be $1 billion that year.

Stanford University’s AI Institute recently produced a report that showed the estimated cost of building select AI models between 2019 and 2024. OpenAI’s GPT-4 cost $79 million to build in 2023, for example, while Google’s Gemini 1.0 Ultra was $192 million. Meta’s Llama 3.1-405B cost $170 million to build in 2024.

“Companies that build products pay to use these existing models and build on top of them, so the capital expenditure is lower and there isn’t as much pressure to burn money,” Voica said. “The space where things are very hot in terms of salaries are the companies that are building models.”

AI specialists are in demand

The average salary for a machine learning engineer in the U.S. is $175,000 in 2025, per Indeed data.

Pixelonestocker | Moment | Getty Images

Machine learning engineers are the AI professionals who can build and train these large language models — and demand for them is high on both sides of the Atlantic, Ben Litvinoff, associate director at technology recruitment company Robert Walters, said.

“There’s definitely a heavy increase in demand with regards to both AI-focused analytics and machine learning in particular, so people working with large language models and people deploying more advanced either GPT-backed or more advanced AI-driven technologies or solutions,” Litvinoff explained.

This includes a “slim talent pool” of experienced specialists who have worked in the industry for years, he said, as well as AI research scientists who have completed PhDs at the top five or six universities in the world and are being snapped up by tech giants upon graduating.

It’s leading to mega pay packets, with Zuckerberg reportedly offering $250 million to a 24-year-old AI genius Matt Deitke, who dropped out of a computer science doctoral program at the University of Washington.

Meta directed CNBC to Zuckerberg’s comments to The Information, where the Facebook founder said there’s an “absolute premium” for top talent.

“A lot of the specifics that have been reported aren’t accurate by themselves. But it is a very hot market. I mean, as you know, and there’s a small number of researchers, which are the best, who are in demand by all of the different labs,” Zuckerberg told the tech publication.

“The amount that is being spent to recruit the people is actually still quite small compared to the overall investment and all when you talk about super intelligence.”

Litvinoff estimated that, in the London market, machine learning engineers and principal engineers are currently earning six-figure salaries ranging from £140,000 to £300,000 for more senior roles, on average.

In the U.S., the average salary for a machine learning engineer is $175,000, reaching nearly $300,000 at the higher end, according to Indeed.

Startups and traditional industries get left behind

As tech giants continue to guzzle up the best minds in AI with the lure of mammoth salaries, there’s a risk that startups get left behind.

“Some of these startups that are trying to compete in this space of building models, it’s hard to see a way forward for them, because they’re stuck in the space of: the models are very expensive to build, but the companies that are buying those models, I don’t know if they can afford to pay the prices that cover the cost of building the model,” Voica noted.

Mark Miller, founder and CEO of Insurevision.ai, recently told Startups Magazine that this talent war was also creating a “massive opportunity gap” in traditional industries.

“Entire industries like insurance, healthcare, and logistics can’t compete on salary. They need innovation but can’t access the talent,” Miller said. “The current situation is absolutely unsustainable. You can’t have one industry hoarding all the talent while others wither.”

Voica said AI professionals will have to make a choice. While some will take Big Tech’s higher salaries and bureaucracy, others will lean towards startups, where salaries are lower, but staff have more ownership and impact.

“In a large company, you’re essentially a cog in a machine, whereas in a startup, you can have a lot of influence. You can have a lot of impact through your work, and you feel that impact,” Voica said.

Until the price of building AI models comes down, however, the high salaries for AI talent are likely to remain.

“As long as companies will have to spend billions of dollars to build the model, they will spend tens of millions, or hundreds of millions, to hire engineers to build those models,” Voica added.

“If all of a sudden tomorrow, the cost to build those models decreases by 10 times, the salaries I would expect would come down as well.”
