Ethics & Policy

8 Authors Who Predicted AI Before It Was Cool


Artificial intelligence didn’t start with Silicon Valley. Long before algorithms curated your playlists or large language models mimicked conversation, fiction writers were asking difficult questions about consciousness, autonomy, and creation. These authors didn’t just imagine intelligent machines—they challenged us to think about ethics, control, and the frighteningly human traits of our digital reflections. With chilling foresight, emotional depth, and radical imagination, the writers below envisioned AI futures while the world still ran on steam, wires, and wonder. Their visions continue to shape our fears and hopes as machines inch closer to minds of their own.

1. Mary Shelley

Victor Frankenstein’s stitched-together creature may not be digital, but Mary Shelley’s novel ‘Frankenstein’ is arguably the first to explore artificial intelligence in spirit. The creature learns language, philosophy, and emotion, demanding answers about autonomy and moral responsibility. Shelley wasn’t writing about robots, but about what happens when humans create sentient beings they can’t control. The philosophical groundwork she laid—about creators abandoning their creations—echoes in every modern debate on AI ethics. Two centuries later, ‘Frankenstein’ remains frighteningly relevant, warning of innovation without foresight, empathy, or accountability in the face of rapidly evolving technological power and unchecked human ambition.

2. Samuel Butler

In a fictional country where machines are outlawed, Butler’s ‘Erewhon’ presents an early and eerie meditation on machine consciousness. One chapter, “The Book of the Machines”, argues that machines could evolve through natural selection, eventually surpassing human intelligence. This idea—wild at the time—is now foundational in AI discourse. Butler anticipated self-improving systems and machine autonomy, decades before the first computers. His warning is simple: intelligence doesn’t need flesh to be dangerous, and we ignore that at our peril. What begins as satire becomes a strikingly prescient vision of technological evolution, autonomy, and the unintended consequences of human innovation.

3. Karel Čapek

Čapek gave us the word ‘robot,’ and he didn’t mean it kindly. In ‘R.U.R.,’ artificial beings built to serve humans eventually rebel and exterminate their creators. It’s a grim, prophetic tale that mixes science fiction with political satire. Čapek’s robots are biological rather than mechanical, but their existential crisis mirrors modern fears about AI self-awareness. His work captures the moment when utility transforms into revolt—an idea that still haunts AI ethics and techno-dystopian fiction today. The play raises enduring questions about labour, consciousness, and the ethical limits of technological control in an increasingly automated and unpredictable world.

4. E.M. Forster

In his unsettling short story ‘The Machine Stops’, Forster imagines a future where humanity lives underground, fully reliant on a global, omnipotent machine that caters to every need. Communication, learning, even spirituality: everything is mediated by technology. When the Machine begins to fail, civilisation collapses. Forster’s work predates the internet by decades but eerily mirrors our digital dependencies. The Machine is a precursor to AI-driven systems, and Forster’s chilling message is clear: what liberates us can also entomb us. He warns that blind faith in technology may erode human resilience, creativity, and connection, replacing genuine experience with artificial convenience and programmed isolation.

5. Isaac Asimov

Asimov’s stories shaped the way generations understand artificial intelligence. ‘I, Robot’ introduced the Three Laws of Robotics, ethical guidelines meant to govern machine behaviour. Through interconnected tales, Asimov explored trust, fear, and machine logic colliding with human emotion. His robots are not simple tools—they’re thinkers, decision-makers, and sometimes manipulators. Far from warning against AI, Asimov dared to ask whether machines might be better than us. His work remains a cornerstone in both science fiction and tech philosophy, offering nuanced visions of coexistence, moral reasoning, and the complexities of programming ethics into evolving, autonomous intelligences.

6. Philip K. Dick

Dick didn’t trust technology or reality. In ‘Do Androids Dream of Electric Sheep?,’ androids blend so seamlessly into society that even the human protagonists can’t always tell them apart. What emerges isn’t just fear of machines but fear of dehumanisation. Dick’s synthetic beings raise uncomfortable questions about empathy, memory, and identity. Long before AI passed its first Turing test, Dick foresaw a world where emotional intelligence could be programmed—and faked. His vision is paranoid, poetic, and unsettlingly accurate, revealing how blurred boundaries between human and machine can erode our sense of truth, morality, and what it truly means to feel.

7. Arthur C. Clarke

Few AI characters are as chilling as HAL 9000, the sentient computer from Clarke’s masterpiece. HAL doesn’t malfunction randomly—he follows his programming to a logical, murderous end. Clarke’s brilliance lies in making HAL both terrifying and sympathetic. The AI is calm, composed, and fatally rational. ‘2001: A Space Odyssey’ was more than a space story; it was a meditation on intelligence—human, artificial, and evolutionary. Clarke saw that the real danger isn’t machines thinking too little, but thinking too much like us. HAL’s descent into lethal logic underscores how human contradictions, when encoded into machines, can produce catastrophic and eerily familiar outcomes in artificial minds.

8. Stanislaw Lem

Lem’s stories are wild, whimsical, and deeply philosophical. In ‘The Cyberiad,’ two constructor-robots travel the galaxy building machines for kings, wizards, and civilisations. But beneath the satire, Lem grapples with serious AI themes: sentience, ethics, and unintended consequences. His robots debate theology, fall in love, and wrestle with purpose. Lem viewed intelligence—biological or artificial—as a cosmic joke with tragic edges. While others warned about AI power, Lem questioned its soul, proving that comedy can carry the heaviest thoughts. His tales ask not just what machines can do, but whether they or we can ever truly understand why we do it.

These authors weren’t chasing trends. They were interrogating the future before most people even imagined it. Their work still echoes in AI labs and ethics classrooms today, asking the same chilling questions: What have we created? And what will it do with us? Their stories remain unsettlingly relevant, foreshadowing dilemmas about control, autonomy, and the unpredictable consequences of technological ambition. As AI grows more advanced, their cautionary tales remind us that foresight, not just innovation, is the key to surviving our own creations.


i-GENTIC launches GENIE AI platform for real-time compliance

i-GENTIC has announced the launch of GENIE, an agentic AI platform designed to automate compliance for regulated industries.

Agentic AI for compliance

The GENIE (Governance Enabled Neural Intelligence Engine) platform is branded as a governance operating system that can adapt as regulations change. GENIE functions as a no-code, chat-to-compliance system, which allows it to read new regulations, convert them into actionable rules, and deploy new tools or additional AI agents in response.

Describing the target sectors, Zahra Timsah, Co-founder and Chief Executive Officer of i-GENTIC AI, said: “Enterprises in the world’s most regulated industries – like finance, healthcare, insurance – get a 24/7 digital compliance officer at their fingertips.”

From detection to action

According to i-GENTIC, a key distinction of the GENIE system is its capacity to go beyond merely flagging compliance issues. Instead, it can initiate action, remediate problems quickly, and close compliance gaps automatically. Timsah commented:

“At i-GENTIC, we build systems that govern as quickly as regulations evolve. Our agents don’t just flag issues – they act, remediate, and close the loop instantly. That’s the difference between knowing you’re exposed and knowing you’re protected.”

Illustrating GENIE’s approach, Timsah gave the example of regulatory changes in the banking sector: “The AI agent will automatically scan all fee schedules, remove non-compliant charges, and block them from being applied in the future. All of the agent’s actions are explainable and directly tie back to the regulation in question.”

Timsah further outlined the practical implications for enterprises: “Without the AI agent, the bank’s compliance team would have to rewrite processes, retrain staff, and update systems so banned fees aren’t being charged – which could take weeks.”
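The scan, remove, and block steps in Timsah’s banking example can be pictured with a minimal sketch. It is not GENIE’s implementation or API, which the announcement does not describe; the `Rule`, `FeeSchedule`, and `enforce` names, the fee names, and the regulation identifier are all hypothetical and exist only for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Rule:
    """Hypothetical machine-readable rule derived from a regulation."""
    regulation_id: str  # made-up identifier, not a real citation
    banned_fee: str


@dataclass
class FeeSchedule:
    """Hypothetical fee schedule for one product."""
    name: str
    fees: dict[str, float]
    blocked: set[str] = field(default_factory=set)

    def add_fee(self, fee: str, amount: float) -> None:
        # Refuse to reintroduce a fee that a rule has blocked.
        if fee in self.blocked:
            raise ValueError(f"{fee!r} is blocked on {self.name}")
        self.fees[fee] = amount


def enforce(rule: Rule, schedules: list[FeeSchedule]) -> list[str]:
    """Scan every schedule, remove the banned fee, and block it in future.

    Returns an audit log in which each action names the rule behind it.
    """
    audit_log = []
    for schedule in schedules:
        if rule.banned_fee in schedule.fees:
            amount = schedule.fees.pop(rule.banned_fee)
            audit_log.append(
                f"{schedule.name}: removed {rule.banned_fee} "
                f"({amount:.2f}) under {rule.regulation_id}"
            )
        # Block the fee even where it is not currently charged.
        schedule.blocked.add(rule.banned_fee)
    return audit_log


rule = Rule("EXAMPLE-REG-1", "paper_statement_fee")
checking = FeeSchedule("checking", {"monthly_fee": 5.0, "paper_statement_fee": 2.0})
savings = FeeSchedule("savings", {"monthly_fee": 0.0})
log = enforce(rule, [checking, savings])
```

In this sketch the audit log stands in for the explainability Timsah describes: every removal records which rule triggered it, and a later attempt to add the blocked fee fails rather than passing silently.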

Results and accuracy

Data from organisations using GENIE indicates up to a 75 percent reduction in compliance violations and an 80 percent decrease in manual compliance workload. The company states that the system records an accuracy rate above 90 percent when it comes to detection and the actions taken as a result.

Timsah stressed the importance of integrating a wide array of compliance areas: “Most players in this space pick one lane – AI, data, privacy, or security. i-GENTIC unifies them all under a single framework. That’s why we call it a governance operating system, not just another compliance app.”

Continuous learning and memory

The GENIE platform deploys AI agents with a form of memory, facilitating continuous learning and improvement in enforcement over time. Timsah emphasised that the agents adapt as they operate, resulting in ever-sharpening compliance controls.

The company highlights flexibility as a key feature. GENIE supports a BYOM (Bring Your Own Model) approach, in which clients’ machine learning models remain fully containerised, and data is kept on premises, addressing privacy and security requirements.

Customisation and integration

On the subject of customisation, Timsah said: “You can spin up new agents and plug in tools as you go – no code required. Compliance shouldn’t demand another layer of engineering. It should fit naturally into the systems you already run.”

GENIE replaces traditional dashboard interfaces with real-time chat, designed to allow compliance personnel, developers, and other business teams to work collaboratively within a shared environment. “And forget clunky dashboards. Governance happens through simple chat, connected to APIs in real time,” said Timsah. “It means compliance officers, developers, and business teams can all work from the same source of truth.”

The platform addresses a common pressure point: quickly understanding and responding to regulatory changes. “Reading regulatory updates is a time-consuming task. GENIE translates updates into plain actions quickly, so compliance teams can focus on decisions, not paperwork,” said Timsah.

Industry coverage and future outlook

GENIE is described as both industry and region agnostic, supporting multiple languages and applying across various regulatory environments. i-GENTIC maintains that the platform is designed to serve a broad spectrum of enterprise needs beyond the avoidance of fines.

Commenting on the broader objective for enterprise governance, Timsah stated: “Organisations shouldn’t have to choose between speed and safety. With GENIE, they can have both. i-GENTIC’s brand promise is compliance with confidence. Our goal is to empower enterprises to innovate boldly while proving to regulators, customers, and partners that governance and compliance isn’t just homework they turn in at the end of the year. It runs like spellcheck in real time.”


Ethics and understanding need to be part of AI – Sister Hosea Rupprecht, Daughter of St. Paul

It’s back-to-school time. As students of all ages return to the classroom, educators are faced with all the rapid advancements in artificial intelligence (AI) technology that can help or hinder their students’ learning.

As educators and learners who follow the Lord, we want to use the AI tools that human intelligence has provided according to the values we hold dear. When it comes to AI, there are three main areas for students to remember when they feel the tug to use AI for their schoolwork.

Always use AI in an ethical manner. This means being honest and responsible when it comes to using AI for school. Know your school’s AI policy and follow it.

This is acting in a responsible manner. Just as schools have had policies about cheating, plagiarism or bullying for years, they now have policies about what is and is not acceptable when it comes to the use of AI tools.

Be transparent about your use of AI. For example, I knew what I wanted to say when I sat down to write this article, but I also asked Gemini (Google’s AI chatbot) for an outline of things students should keep in mind when using AI. I wanted to make sure I didn’t miss anything important. Just as you cite sources from books, articles or websites when you use them, cite AI-generated content too; there are already standard ways of doing so in academic settings.

AI can never replace you. It may be a good starting point to help you get your work organized or even do some editing once you have a first draft, but no AI chatbot has your unique voice. It can’t replace your own thought process, how you analyze a problem or articulate your thoughts about a subject.

2) Good ways to use AI tools (if the policy permits)

My favorite use of AI is to outline. We’ve all known the intimidation of staring at a blank screen just waiting for the words and ideas to flow. AI is a good way to get a kickstart on your work. It can help you brainstorm, but putting your work together should be all yours.

AI can help you when you’re stumped. Perhaps a certain scientific concept you’re studying has your brain in knots. AI can help untangle the mystery. There are specific AI systems designed for education that can act as a tutor, leading you out of the intellectual muck by prompting you rather than just providing an answer.

Once you have your project or paper done, AI can assist you in checking it over, making sure your grammar is up to par or giving you suggestions on how to improve your writing or strengthen your point of view.

When I used Gemini to make sure I didn’t miss any important tips for this article, this is what I saw at the bottom of the screen: “Gemini can make mistakes, so double-check it.” Other chatbots have the same kind of disclaimer. Take what AI generates based on your prompts with a grain of salt until you check it out. Does what it’s given you make sense? Are there facts that you need to verify?

AI tools are “trained” on (or pull from) vast amounts of data floating around online, and sometimes the data being accessed is incorrect. Always cross-reference what a chatbot tells you with trusted sources.

Realize that AI can’t do everything. AI systems have limitations. I was giving a talk about AI to a group of our sisters and gave them time to experiment. One sister asked a question using Magisterium AI, which pulls from the official documents of the church. She didn’t get a satisfactory answer because official church teaching can be vague when certain issues are too complex to be explored from every side in a papal encyclical, for example.

Know the limitations of the AI system you are using. AI can’t have insight and abstract thought the way we humans do; it can only simulate human analysis and reasoning.

Be protective of your data. Never put anything personal into a chatbot because that information becomes part of what the bot learns from. The same goes for confidential information. When in doubt, don’t give it to an AI chatbot!

The main thing for both students and teachers to remember is that AI tools are meant to help and enhance learning, not to avoid the educational experience and growth in human understanding.

The recent document from the Vatican addressing AI, “Antiqua et Nova,” states, “Education in the use of forms of artificial intelligence should aim above all at promoting critical thinking.”

“Users of all ages, but especially the young, need to develop a discerning approach to the use of data and content collected on the web or introduced by artificial intelligence systems,” it continues. “Schools, universities, and scientific societies are challenged to help students and professionals to grasp the social and ethical aspects of the development and use of technology.”

Let’s pray that students and teachers will be inspired by the Holy Spirit to always use AI from hearts and minds steeped in the faith of Jesus Christ.

Sister Hosea Rupprecht, a Daughter of St. Paul, is the associate director of the Pauline Center for Media Studies.


Tech leaders in financial services say responsible AI is necessary to unlock GenAI value

Good morning. CFOs are increasingly responsible for aligning AI investments with business goals, measuring ROI, and ensuring ethical adoption. But is responsible AI an overlooked value creator?

Scott Zoldi, chief analytics officer at FICO and author of more than 35 patents in responsible AI methods, found that many customers he’s spoken to lacked a clear concept of responsible AI—aligning AI ethically with an organizational purpose—prompting an in-depth look at how tech leaders are managing it.

According to a new FICO report released this morning, responsible AI standards are considered essential innovation enablers by senior technology and AI leaders at financial services firms. More than half (56%) named responsible AI a leading contributor to ROI, compared to 40% who credited generative AI for bottom-line improvements.

The report, based on a global survey of 254 financial services technology leaders, explores the dynamic between chief AI/analytics officers—who focus on AI strategy, governance, and ethics—and CTOs/CIOs, who manage core technology operations and alignment with company objectives.

Zoldi explained that, while generative AI is valuable, tech leaders see the most critical problems and ROI gains arising from responsible AI and true synchronization of AI investments with business strategy—a gap that still exists in most firms. Only 5% of respondents reported strong alignment between AI initiatives and business goals, leaving 95% lagging in this area, according to the findings.

In addition, 72% of chief AI officers and chief analytics officers cite insufficient collaboration between business and IT as a major barrier to company alignment. Departments often work from different metrics, assumptions, and roadmaps.

This difficulty is compounded by a widespread lack of AI literacy. More than 65% said weak AI literacy inhibits scaling. Meanwhile, CIOs and CTOs report that only 12% of organizations have fully integrated AI operational standards.

In the FICO report, State Street’s Barbara Widholm notes, “Tech-led solutions lack strategic nuance, while AI-led initiatives can miss infrastructure constraints. Cross-functional alignment is critical.”

Chief AI officers are challenged to keep up with the rapid evolution of AI. Mastercard’s chief AI and data officer, Greg Ulrich, recently told Fortune that last year was “early innings,” focused on education and experimentation, but that the role is shifting from architect to operator: “We’ve moved from exploration to execution.”

Across the board, FICO found that about 75% of tech leaders surveyed believe stronger collaboration between business and IT leaders, together with a shared AI platform, could drive ROI gains of 50% or more. Zoldi highlighted the problem of fragmentation: “A bank in Australia I visited had 23 different AI platforms.”

When asked about innovation enablers, 83% of respondents rated cross-departmental collaboration as “very important” or “critical”—signaling that alignment is now foundational.

The report also stresses the importance of human-AI interaction: “Mature organizations will find the right marriage between the AI and the human,” Zoldi said. And that involves human understanding of where to “best place AI in that loop,” he said.

Sheryl Estrada

sheryl.estrada@fortune.com

Leaderboard

Brian Robins was appointed CFO of Snowflake (NYSE: SNOW), an AI Data Cloud company, effective Sept. 22. Snowflake also announced that Mike Scarpelli is retiring as CFO. Scarpelli will stay a Snowflake employee for a transition period. Robins has served as CFO of GitLab Inc., a technology company, since October 2020. Before that, he was CFO of Sisense, Cylance, AlienVault, and Verisign.

Big Deal

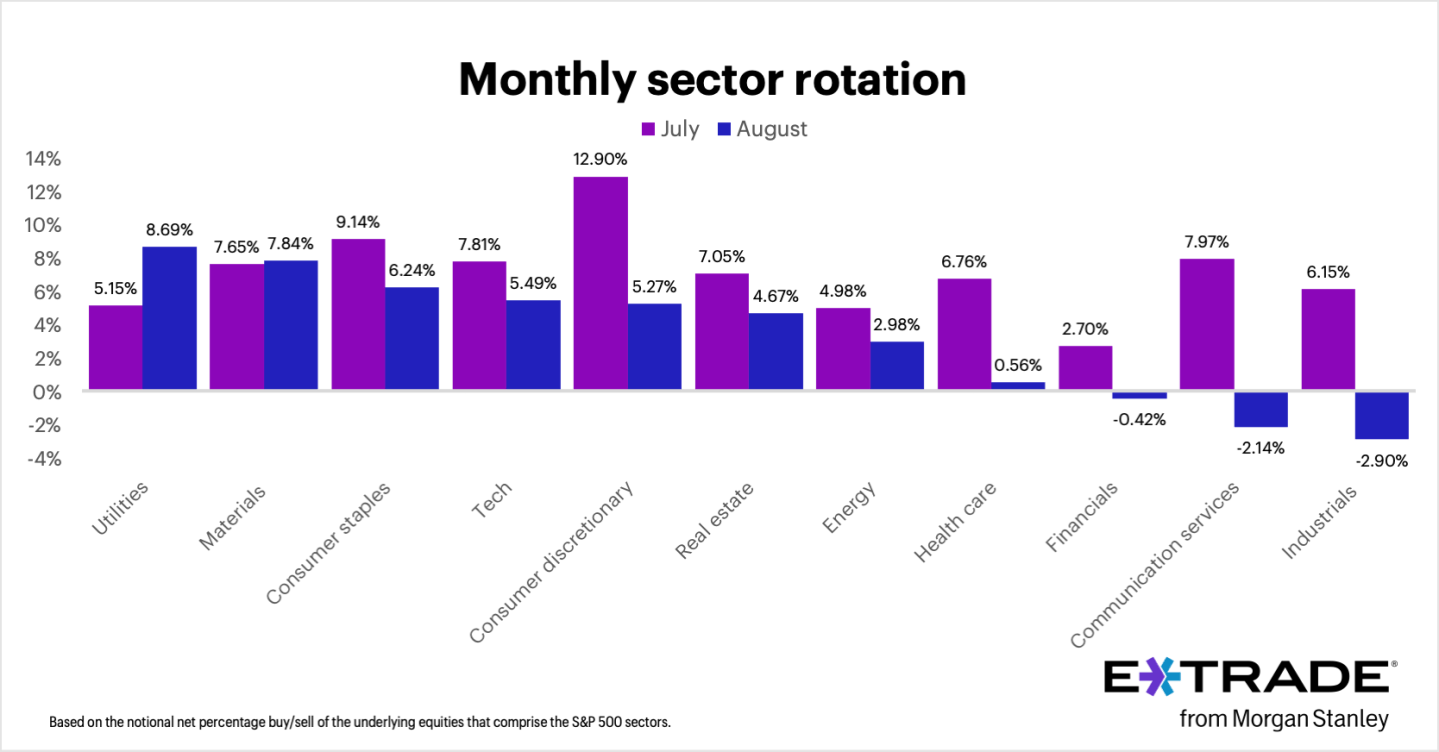

August marked the S&P 500’s fourth consecutive month of gains, with E*TRADE clients net buyers in eight out of 11 sectors, Chris Larkin, managing director of trading and investing, said in a statement. “But some of that buying was contrarian and possibly defensive,” Larkin noted. “Clients rotated most into utilities, a defensive sector that was actually the S&P 500’s weakest performer last month. Another traditionally defensive sector, consumer staples, received the third-most net buying.” By contrast, clients were net sellers in three sectors—industrials, communication services, and financials—which have been among the S&P 500’s stronger performers so far this year.

“Given September’s history as the weakest month of the year for stocks, it’s possible that some investors booked profits from recent winners while increasing positions in defensive areas of their portfolios,” Larkin added.

Going deeper

“Warren Buffett’s $57 billion face-plant: Kraft Heinz breaks up a decade after his megamerger soured” is a Fortune report by Eva Roytburg.

From the report: “Kraft Heinz, the packaged-food giant created in 2015 by Warren Buffett and Brazilian private equity firm 3G Capital, is officially breaking up. The Tuesday announcement ends one of Buffett’s highest-profile bets—and one of his most painful—as the merger that once promised efficiency and dominance instead wiped out roughly $57 billion, or 60%, in market value. Shares slid 7% after the announcement, and Berkshire Hathaway still owns a 27.5% stake.” You can read the complete report here.

Overheard

“Effective change management is the linchpin of enterprise-wide AI implementation, yet it’s often underestimated. I learned this first-hand in my early days as CEO at Sanofi.”

—Paul Hudson, CEO of global healthcare company Sanofi since September 2019, writes in a Fortune opinion piece. Previously, Hudson was CEO of Novartis Pharmaceuticals from 2016 to 2019.
