Books, Courses & Certifications

Teaching with Heart: How Professor Peter Chin Brings Connection and Excellence to Dartmouth’s Online MEng

When you picture a machine learning class, you might imagine a sea of code, dense math, and a professor droning on in a dark lecture hall. But for Professor Peter Chin, the experience is anything but that. It’s personal, dynamic, and rooted in a belief that learning should be rigorous and human. As one of the founding faculty members of Dartmouth’s online Master of Engineering in Computer Engineering, Professor Chin is not only shaping the way students learn machine learning remotely but also helping redefine what a modern graduate engineering education can be.

Background and Motivation: From BU to Hanover—and into the Cloud

Peter Chin joined Dartmouth three years ago, after nearly a decade teaching at Boston University. He is now a professor of engineering and the leader of the LISP (Learning, Intelligence, and Signal Processing) research group, where his work weaves together elements of game theory and graph theory.

When the dean of engineering approached him about developing a machine learning course for Dartmouth’s newly envisioned online MEng, Chin’s response was an immediate yes.

But what followed was a year-long process of adapting an in-person course into something fully online, fully asynchronous, and fully worthy of the Dartmouth name. “I didn’t realize just how much work it would be,” he admits. “It took a year or more to get the slides, recordings, and edits done.” One 14-hour Saturday in the library studio was just one of many marathon efforts to ensure the content met his high standards.

The result? The machine learning course, one of the program’s two foundational courses, alongside signal processing. Professor Chin considers both to be a gateway to the program, and it’s a first impression that he takes seriously.

Teaching Online: Rigor, Realism, and a Little Reinvention

Professor Chin approached the online format with both excitement and realism. “During COVID, I taught over Zoom, but that was synchronous. With this program, it’s asynchronous. It was hard to know how the longer lectures would land.”

With support from Dartmouth’s Learning Design and Innovation team, he transformed his usual two-hour lectures into bite-sized, high-impact videos, aiming to keep them under ten minutes. It was a major shift, but something the Dartmouth team knew would be more digestible for online learners. “I had years of lecture material and had to rethink everything,” he says. “But once we figured it out, the result was great. Our production team was amazing and helped make everything so engaging.”

Still, Chin didn’t want to lose the classroom energy. He holds twice-weekly live sessions, a standard for all Dartmouth online MEng courses, and broadcasts from an actual Dartmouth classroom.

“I want students to feel like they’re here, part of the campus. I talk about current events in AI, adapt the material, and keep things as up-to-date as possible.”

About the Course: Practical, Challenging, and Always Evolving

Chin’s machine learning course is designed to reflect the real demands of the tech world. Students tackle real-world projects, adapt to new topics as they emerge, and apply what they learn right away.

“My background is in pure math, where things never change. In AI, it’s the opposite. Things change so fast! There are topics I taught two years ago that I’ve already phased out. It’s important to me to keep the courses current.”

The online platform supports this dynamic structure. Students collaborate on team projects from day one, matched across time zones and skill sets. “Learning is more fun when you do it together,” Chin emphasizes. “That group aspect is built into every course in the program.”

Mid-course feedback is taken seriously, too. “Last year, some students felt it was too much work, so we adjusted mid-stream. It’s important to listen to student feedback.”

Industry Relevance: Built for Real Careers

Most of Chin’s students are full-time professionals in the tech industry. Some want promotions; others want to pivot into more technical roles. One student, he recalls, landed a job at Meta after taking his class.

“I wrote a recommendation letter for him, and months later he emailed to say, ‘Thank you so much, I got the job!’ That was really special.”

That level of connection isn’t rare. Chin makes a point to get to know his students and respects the challenge they take on by studying while working.

“Bless their hearts,” he says. “Sometimes I don’t know how they do it all. But we honor that. We design this program for people who are working moms and busy professionals.”

The Future of Engineering: Stay Curious, Stay Resilient

Asked about the future, Chin doesn’t hesitate. “Curiosity is key. You don’t have to be an AI expert, but you’ll be successful if you stay curious and persistent.”

He’s encouraged by the motivation he sees in his students. And he believes the mindset taught at Dartmouth—of growth, adaptation, and intellectual resilience—is what sets graduates up for success.

“If you’re the kind of employee who grows as your company grows, you’re invaluable. That’s what we try to nurture.”

Final Thoughts: Is This the Right Path for You?

Professor Chin is realistic about the commitment required for Dartmouth’s online MEng. “It’s not easy. It’s rigorous. But if you’re looking for a high-quality education, and one that understands the reality of having a busy life, this could be a great fit.”

His advice? Talk to current students. Reach out to faculty. Don’t assume it’s impossible.

“We know our students are working parents, career changers, and lifelong learners. We’ll do everything we can to make it possible for you.”

And if you ever want to visit? You’re welcome.

“Some of our online students have visited the campus. It’s special. Dartmouth has a real sense of place, and when you come here, you feel it.”

Ready to take the next step? If you’re curious, ambitious, and interested in an online engineering degree designed for working professionals, Dartmouth’s online Master of Engineering in Computer Engineering might be your next step. With professors like Peter Chin leading the way, you won’t just learn, you’ll connect, grow, and thrive, 100% online, hands-on, and head-first into the future.

10 generative AI certifications to grow your skills

AWS Certified AI Practitioner

Amazon’s AWS Certified AI Practitioner certification validates your knowledge of AI, machine learning (ML), and generative AI concepts and use cases, as well as your ability to apply those in an enterprise setting. The exam covers the fundamentals of AI and ML, generative AI, applications of foundation models, and guidelines for responsible use of AI, as well as security, compliance, and governance for AI solutions. It’s targeted at business analysts, IT support professionals, marketing professionals, product and project managers, line-of-business or IT managers, and sales professionals. It’s an entry-level certification and there are no prerequisites to take the exam.

Cost: $100

Certified Generative AI Specialist (CGAI)

Offered through the Chartered Institute of Professional Certifications, the Certified Generative AI Specialist (CGAI) certification is designed to teach you the in-depth knowledge and skills required to be successful with generative AI. The course covers principles of generative AI, data acquisition and preprocessing, neural network architectures, natural language processing (NLP), image and video generation, audio synthesis, and creative AI applications. On completing the learning modules, you will need to pass a chartered exam to earn the CGAI designation.

Enhance video understanding with Amazon Bedrock Data Automation and open-set object detection

In real-world video and image analysis, businesses often face the challenge of detecting objects that weren’t part of a model’s original training set. This becomes especially difficult in dynamic environments where new, unknown, or user-defined objects frequently appear. For example, media publishers might want to track emerging brands or products in user-generated content; advertisers need to analyze product appearances in influencer videos despite visual variations; retail providers aim to support flexible, descriptive search; self-driving cars must identify unexpected road debris; and manufacturing systems need to catch novel or subtle defects without prior labeling.

In all these cases, traditional closed-set object detection (CSOD) models, which only recognize a fixed list of predefined categories, fail to deliver. They either misclassify the unknown objects or ignore them entirely, limiting their usefulness for real-world applications.

Open-set object detection (OSOD) is an approach that enables models to detect both known and previously unseen objects, including those not encountered during training. It supports flexible input prompts, ranging from specific object names to open-ended descriptions, and can adapt to user-defined targets in real time without requiring retraining. By combining visual recognition with semantic understanding, often through vision-language models, OSOD helps users query the system broadly, even when the target is unfamiliar, ambiguous, or entirely new.

In this post, we explore how Amazon Bedrock Data Automation uses OSOD to enhance video understanding.

Amazon Bedrock Data Automation and video blueprints with OSOD

Amazon Bedrock Data Automation is a cloud-based service that extracts insights from unstructured content like documents, images, video, and audio. Specifically, for video content, Amazon Bedrock Data Automation supports functionalities such as chapter segmentation, frame-level text detection, chapter-level classification using Interactive Advertising Bureau (IAB) taxonomies, and frame-level OSOD. For more information about Amazon Bedrock Data Automation, see Automate video insights for contextual advertising using Amazon Bedrock Data Automation.

Amazon Bedrock Data Automation video blueprints support OSOD on the frame level. You can input a video along with a text prompt specifying the desired objects to detect. For each frame, the model outputs a dictionary containing bounding boxes in XYWH format (the x and y coordinates of the top-left corner, followed by the width and height of the box), along with corresponding labels and confidence scores. You can further customize the output based on your needs, for instance by keeping only high-confidence detections when precision is prioritized.
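As a rough illustration of that kind of post-processing, the following sketch keeps only high-confidence detections and converts XYWH boxes to corner coordinates. The record layout (`label`, `confidence`, `bounding_box`), the pixel units, and the 0.8 threshold are assumptions made for this example; they are not the service’s documented output schema.

```python
def xywh_to_xyxy(box):
    """Convert (x, y, width, height), with (x, y) the top-left corner,
    to (x_min, y_min, x_max, y_max)."""
    x, y, w, h = box
    return (x, y, x + w, y + h)


def filter_detections(detections, min_confidence=0.8):
    """Keep only detections at or above the confidence threshold."""
    return [d for d in detections if d["confidence"] >= min_confidence]


# Hypothetical detections for a single frame (field names are assumptions).
frame_detections = [
    {"label": "apple", "confidence": 0.93, "bounding_box": (100, 200, 250, 300)},
    {"label": "apple", "confidence": 0.41, "bounding_box": (600, 550, 100, 120)},
]

kept = filter_detections(frame_detections)
corners = [xywh_to_xyxy(d["bounding_box"]) for d in kept]
```

Raising `min_confidence` trades recall for precision, which is the adjustment the paragraph above describes.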

The input text is highly flexible, so you can define dynamic fields in the Amazon Bedrock Data Automation video blueprints powered by OSOD.

Example use cases

In this section, we explore some examples of different use cases for Amazon Bedrock Data Automation video blueprints using OSOD. The following table summarizes the functionality of this feature.

| Functionality | Sub-functionality | Examples |
| --- | --- | --- |
| Multi-granular visual comprehension | Object detection from fine-grained object reference | "Detect the apple in the video." |
| | Object detection from cross-granularity object reference | "Detect all the fruit items in the image." |
| | Object detection from open questions | "Find and detect the most visually important elements in the image." |
| Visual hallucination detection | Identify and flag object mentions in the input text that do not correspond to actual content in the given image. | "Detect if apples appear in the image." |

Ads analysis

Advertisers can use this feature to compare the effectiveness of various ad placement strategies across different locations and conduct A/B testing to identify the best advertising approach. For example, the following image is the output in response to the prompt “Detect the locations of echo devices.”

Smart resizing

By detecting key elements in the video, you can choose appropriate resizing strategies for devices with different resolutions and aspect ratios, making sure important visual information is preserved. For example, the following image is the output in response to the prompt “Detect the key elements in the video.”

Surveillance with intelligent monitoring

In home security systems, producers or users can take advantage of the model’s high-level understanding and localization capabilities to maintain safety, without the need to manually enumerate all possible scenarios. For example, the following image is the output in response to the prompt “Check dangerous elements in the video.”

Custom labels

You can define your own labels and search through videos to retrieve specific, desired results. For example, the following image is the output in response to the prompt “Detect the white car with red wheels in the video.”

Image and video editing

With flexible text-based object detection, you can accurately remove or replace objects in photo editing software, minimizing the need for imprecise, hand-drawn masks that often require multiple attempts to achieve the desired result. For example, the following image is the output in response to the prompt “Detect the people riding motorcycles in the video.”

Sample video blueprint input and output

The following example demonstrates how to define an Amazon Bedrock Data Automation video blueprint to detect visually prominent objects at the chapter level, with sample output including objects and their bounding boxes.

The following code is our example blueprint schema:

The following code is our example video custom output:

For the full example, refer to the following GitHub repo.

Conclusion

The OSOD capability within Amazon Bedrock Data Automation significantly enhances the ability to extract actionable insights from video content. By combining flexible text-driven queries with frame-level object localization, OSOD helps users across industries implement intelligent video analysis workflows, ranging from targeted ad evaluation and security monitoring to custom object tracking. Integrated seamlessly into the broader suite of video analysis tools available in Amazon Bedrock Data Automation, OSOD not only streamlines content understanding but also helps reduce the need for manual intervention and rigid predefined schemas, making it a powerful asset for scalable, real-world applications.

To learn more about Amazon Bedrock Data Automation video and audio analysis, see New Amazon Bedrock Data Automation capabilities streamline video and audio analysis.

About the authors

Dongsheng An is an Applied Scientist at AWS AI, specializing in face recognition, open-set object detection, and vision-language models. He received his Ph.D. in Computer Science from Stony Brook University, focusing on optimal transport and generative modeling.

Lana Zhang is a Senior Solutions Architect in the AWS World Wide Specialist Organization AI Services team, specializing in AI and generative AI with a focus on use cases including content moderation and media analysis. She’s dedicated to promoting AWS AI and generative AI solutions, demonstrating how generative AI can transform classic use cases by adding business value. She assists customers in transforming their business solutions across diverse industries, including social media, gaming, ecommerce, media, advertising, and marketing.

Raj Jayaraman is a Senior Generative AI Solutions Architect at AWS, bringing over a decade of experience in helping customers extract valuable insights from data. Specializing in AWS AI and generative AI solutions, Raj’s expertise lies in transforming business solutions through the strategic application of AWS’s AI capabilities, ensuring customers can harness the full potential of generative AI in their unique contexts. With a strong background in guiding customers across industries in adopting AWS Analytics and Business Intelligence services, Raj now focuses on assisting organizations in their generative AI journey—from initial demonstrations to proof of concepts and ultimately to production implementations.

How Skello uses Amazon Bedrock to query data in a multi-tenant environment while keeping logical boundaries

This is a guest post co-written with Skello.

Skello is a leading human resources (HR) software as a service (SaaS) solution focusing on employee scheduling and workforce management. Catering to diverse sectors such as hospitality, retail, healthcare, construction, and industry, Skello offers features including schedule creation, time tracking, and payroll preparation. With approximately 20,000 customers and 400,000 daily users across Europe as of 2024, Skello continually innovates to meet its clients’ evolving needs.

One such innovation is the implementation of an AI-powered assistant to enhance user experience and data accessibility. In this post, we explain how Skello used Amazon Bedrock to create this AI assistant for end-users while maintaining customer data safety in a multi-tenant environment. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) through a single API, along with a broad set of capabilities to build generative AI applications with security, privacy, and responsible AI.

We dive deep into the challenges of implementing large language models (LLMs) for data querying, particularly in the context of a French company operating under the General Data Protection Regulation (GDPR). Our solution demonstrates how to balance powerful AI capabilities with strict data protection requirements.

Challenges with multi-tenant data access

As Skello’s platform grew to serve thousands of businesses, we identified a critical need: our users needed better ways to access and understand their workforce data. Many of our customers, particularly those in HR and operations roles, found traditional database querying tools too technical and time-consuming. This led us to identify two key areas for improvement:

- Quick access to non-structured data – Our users needed to find specific information across various data types—employee records, scheduling data, attendance logs, and performance metrics. Traditional search methods often fell short when users had complex questions like “Show me all part-time employees who worked more than 30 hours last month” or “What’s the average sick leave duration in the retail department?”

- Visualization of data through graphs for analytics – Although our platform collected comprehensive workforce data, users struggled to transform this raw information into actionable insights. They needed an intuitive way to create visual representations of trends and patterns without writing complex SQL queries or learning specialized business intelligence tools.

To address these challenges, we needed a solution that could:

- Understand natural language questions about complex workforce data

- Correctly interpret context and intent from user queries

- Generate appropriate database queries while respecting data access rules

- Return results in user-friendly formats, including visualizations

- Handle variations in how users might phrase similar questions

- Process queries about time-based data and trends

LLMs emerged as the ideal solution for this task. Their ability to understand natural language and context, combined with their capability to generate structured outputs, made them perfectly suited for translating user questions into precise database queries. However, implementing LLMs in a business-critical application required careful consideration of security, accuracy, and performance requirements.

Solution overview

Using LLMs to generate structured queries from natural language input is an emerging area of interest. This process enables the transformation of user requests into organized data structures, which can then be used to query databases automatically.

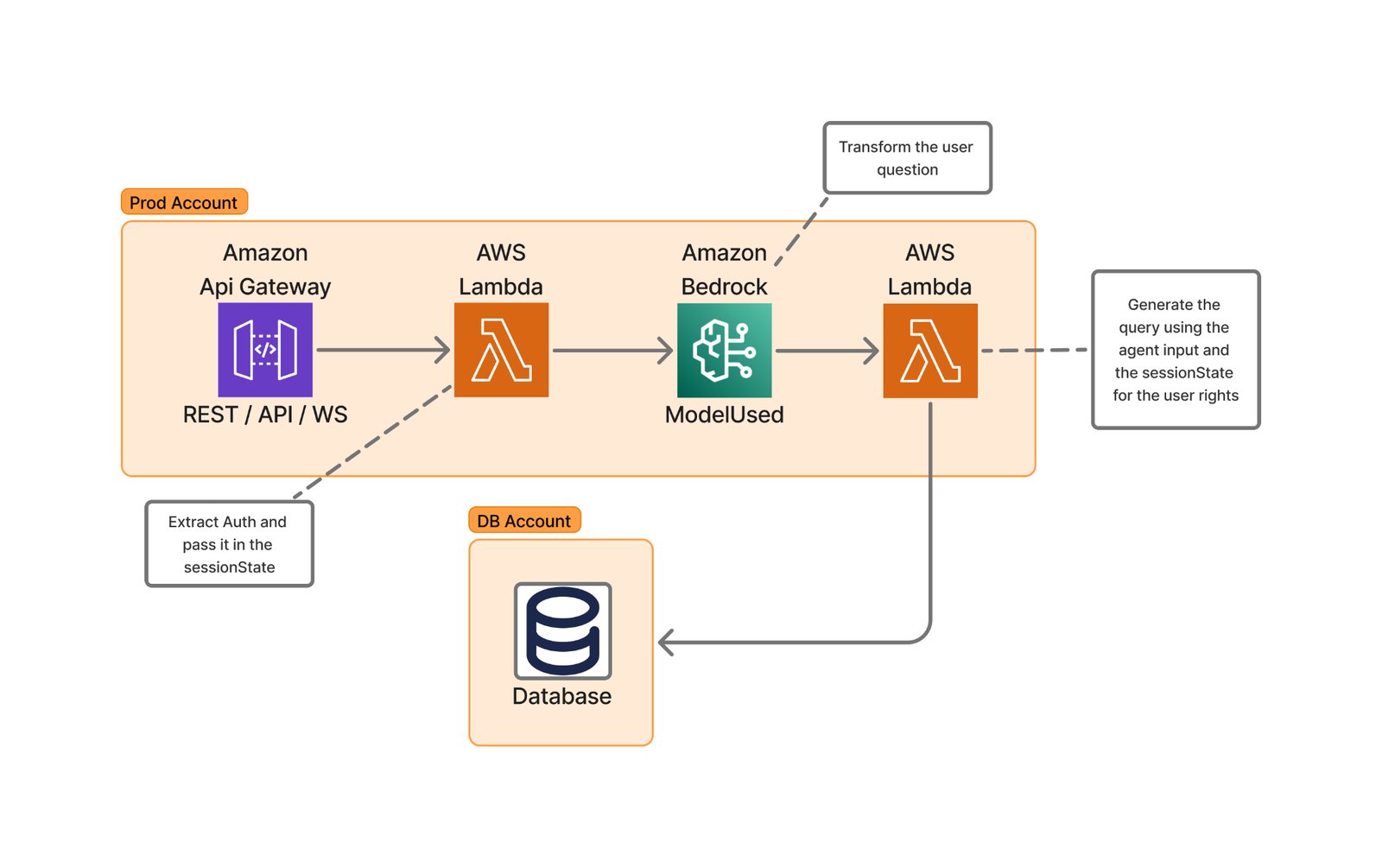

The following diagram of Skello’s high-level architecture illustrates this user request transformation process.

The implementation using AWS Lambda and Amazon Bedrock provides several advantages:

- Scalability through serverless architecture

- Cost-effective processing with pay-as-you-go pricing

- Low-latency performance

- Access to advanced language models like Anthropic’s Claude 3.5 Sonnet

- Rapid deployment capabilities

- Flexible integration options

Basic query generation process

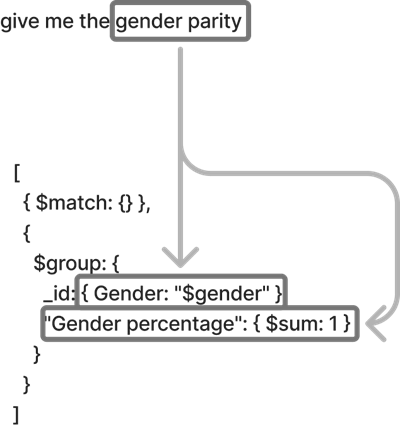

The following diagram illustrates how we transform natural language queries into structured database requests. For this example, the user asks “Give me the gender parity.”

The process works as follows:

- The authentication service validates the user’s identity and permissions.

- The LLM converts the natural language to a structured query format.

- The query validation service enforces compliance with security policies.

- The database access layer executes the query within the user’s permitted scope.
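The steps above can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not Skello’s implementation: `UserContext`, `StructuredQuery`, `fake_llm_to_query`, `validate`, and `scope_to_tenant` are hypothetical names, and the LLM call is replaced by a stub. The point it shows is that the tenant scope comes from the authenticated context and is never taken from model output.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class UserContext:
    # Produced by the authentication step, never by the LLM.
    user_id: str
    tenant_id: str
    allowed_metrics: frozenset


@dataclass
class StructuredQuery:
    # Hypothetical structured format the LLM is asked to emit.
    metric: str
    group_by: list = field(default_factory=list)
    filters: dict = field(default_factory=dict)


def fake_llm_to_query(question):
    """Stand-in for the LLM call; a real system would invoke a model here."""
    return StructuredQuery(metric="headcount", group_by=["gender"])


def validate(query, ctx):
    """Reject metrics the user may not see and any model-supplied tenant filter."""
    if query.metric not in ctx.allowed_metrics:
        raise PermissionError(f"metric not permitted: {query.metric}")
    if "tenant_id" in query.filters:
        raise PermissionError("tenant scope cannot be set by the model")
    return query


def scope_to_tenant(query, ctx):
    """Attach the tenant filter taken from the authenticated context."""
    scoped_filters = dict(query.filters)
    scoped_filters["tenant_id"] = ctx.tenant_id
    return StructuredQuery(query.metric, list(query.group_by), scoped_filters)


ctx = UserContext("u-1", "tenant-42", frozenset({"headcount", "worked_hours"}))
raw_query = fake_llm_to_query("Give me the gender parity")
query = scope_to_tenant(validate(raw_query, ctx), ctx)
```

Because the tenant filter is added after validation, a prompt that persuades the model to emit a different tenant identifier is rejected rather than executed.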

Handling complex queries

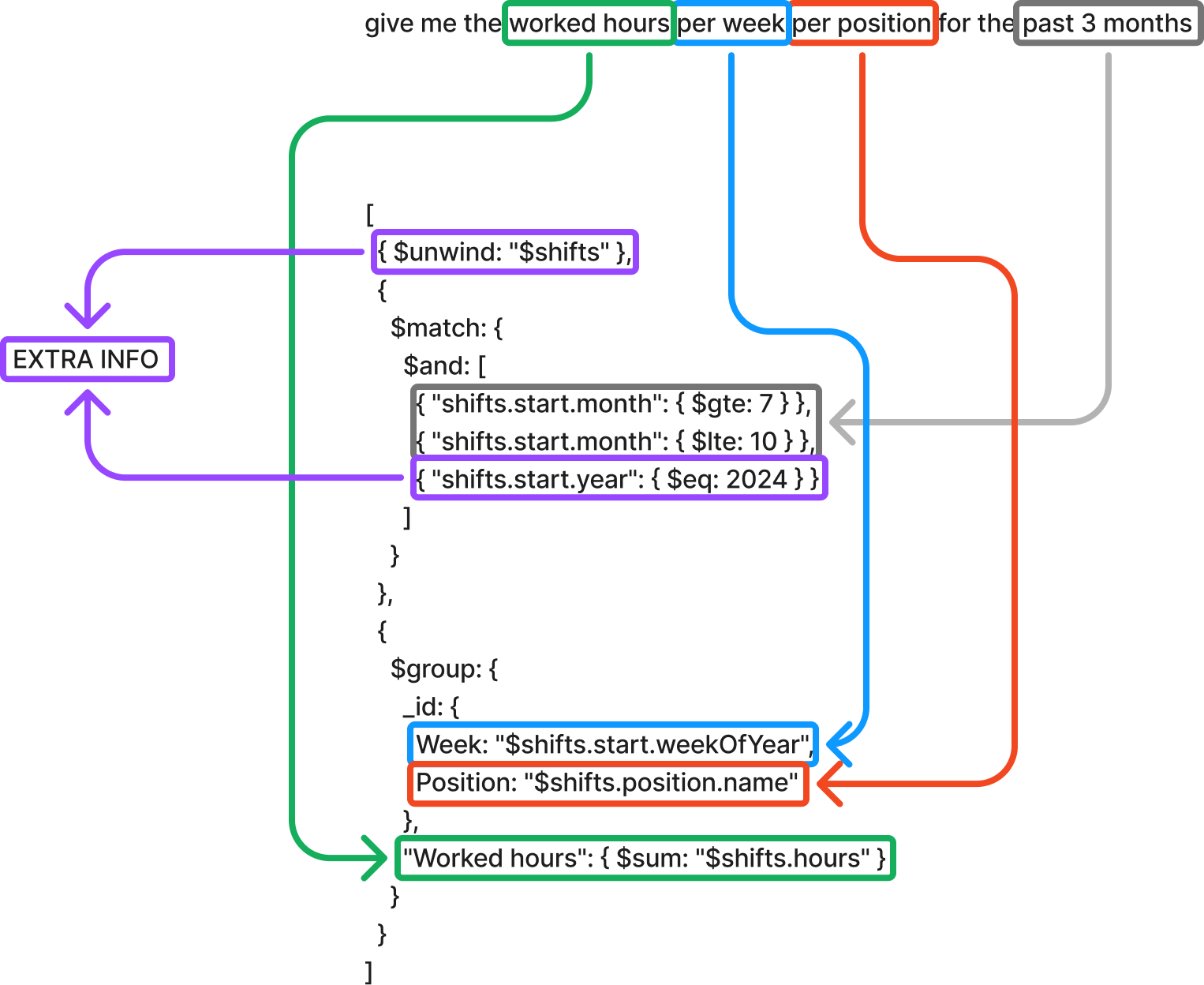

For more sophisticated requests like “Give me the worked hours per week per position for the last 3 months,” our system completes the following steps:

- Extract query components:

  - Target metric: worked hours

  - Aggregation levels: week, position

  - Time frame: 3 months

- Generate temporal calculations:

  - Use relative time expressions instead of hard-coded dates

  - Implement standardized date handling patterns
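To make the idea of relative time expressions concrete, here is a small sketch in which the structured request stores “last 3 months” as an amount and a unit, and concrete dates are computed only at execution time. The request layout and the `resolve_relative_range` helper are assumptions for illustration; the source does not show Skello’s actual query format.

```python
import calendar
from datetime import date, timedelta


def resolve_relative_range(amount, unit, today):
    """Turn a relative expression such as (3, "months") into (start, end) dates."""
    if unit == "days":
        start = today - timedelta(days=amount)
    elif unit == "weeks":
        start = today - timedelta(weeks=amount)
    elif unit == "months":
        # Step back whole calendar months, clamping the day to the month length.
        year, month0 = divmod(today.year * 12 + (today.month - 1) - amount, 12)
        month = month0 + 1
        day = min(today.day, calendar.monthrange(year, month)[1])
        start = date(year, month, day)
    else:
        raise ValueError(f"unsupported unit: {unit}")
    return start, today


# Hypothetical structured request for:
# "Give me the worked hours per week per position for the last 3 months"
structured_request = {
    "metric": "worked_hours",
    "group_by": ["week", "position"],
    "time_frame": {"amount": 3, "unit": "months"},
}

frame = structured_request["time_frame"]
start, end = resolve_relative_range(frame["amount"], frame["unit"], date(2024, 5, 31))
```

Keeping the time frame relative means the same stored request stays correct when it is run again later, which a hard-coded date range would not.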

Data schema optimization

To make our system as efficient and user-friendly as possible, we carefully organized our data structure—think of it as creating a well-organized filing system for a large office.

We created standardized schema definitions, establishing consistent ways to store similar types of information. For example, date-related fields (hire dates, shift times, vacation periods) follow the same format. This helps prevent confusion when users ask questions like “Show me all events from last week.” It’s similar to having all calendars in your office using the same date format instead of some using MM/DD/YY and others using DD/MM/YY.

Our system employs consistent naming conventions with clear, predictable names for all data fields. Instead of technical abbreviations like emp_typ_cd, we use clear terms like employee_type. This makes it straightforward for the AI to understand what users mean when they ask questions like “Show me all full-time employees.”

For optimized search patterns, we strategically organized our data to make common searches fast and efficient. This is particularly important because it directly impacts user experience and system performance. We analyzed usage patterns to identify the most frequently requested information and designed our database indexes accordingly. Additionally, we created specialized data views that pre-aggregate common report requests. This comprehensive approach means questions like “Who’s working today?” get answered almost instantly.

We also established clear data relationships by mapping out how different pieces of information relate to each other. For example, we clearly connect employees to their departments, shifts, and managers. This helps answer complex questions like “Show me all department managers who have team members on vacation next week.”

These optimizations deliver real benefits to our users:

- Faster response times when asking questions

- More accurate answers to queries

- Less confusion when referring to specific types of data

- Ability to ask more complex questions about relationships between different types of information

- Consistent results when asking similar questions in different ways

For example, whether a user asks “Show me everyone’s vacation time” or “Display all holiday schedules,” the system understands they’re looking for the same type of information. This reliability makes the system more trustworthy and easier to use for everyone, regardless of their technical background.

Graph generation and display

One of the most powerful features of our system is its ability to turn data into meaningful visual charts and graphs automatically. This consists of the following actions:

- Smart label creation – The system understands what your data means and creates clear, readable labels. For example, if you ask “Show me employee attendance over the last 6 months,” the horizontal axis automatically labels the months (January through June), the vertical axis shows attendance numbers with simple-to-read intervals, and the title clearly states what you’re looking at: “Employee Attendance Trends.”

- Automatic legend creation – The system creates helpful legends that explain what each part of the chart means. For instance, if you ask “Compare sales across different departments,” different departments get different colors, a clear legend shows which color represents which department, and additional information like “Dashed lines show previous year” is automatically added when needed.

- Choosing the right type of chart – The system is smart about picking the best way to show your information. For example, it uses bar charts for comparing different categories (“Show me sales by department”), line graphs for trends over time (“How has attendance changed this year?”), pie charts for showing parts of a whole (“What’s the breakdown of full-time vs. part-time staff?”), and heat maps for complex patterns (“Show me busiest hours per day of the week”).

- Smart sizing and scaling – The system automatically adjusts the size and scale of charts to make them simple to read. For example, if numbers range from 1–100, it might show intervals of 10; if you’re looking at millions, it might show them in a more readable way (1M, 2M, etc.); charts automatically resize to show patterns clearly; and important details are never too small to see.
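The chart selection and scaling rules in the list above could be approximated with simple heuristics like the following. This is a sketch under assumptions, not a description of how Skello’s system decides: the function names, the dimension labels, and the target of roughly ten ticks are all hypothetical.

```python
import math


def choose_chart_type(dimensions, part_of_whole=False):
    """Pick a chart type from the shape of the request (illustrative rules only)."""
    if len(dimensions) >= 2:
        return "heatmap"  # e.g. busiest hours per day of the week
    if part_of_whole:
        return "pie"      # e.g. full-time vs. part-time breakdown
    if dimensions == ["time"]:
        return "line"     # e.g. attendance over the year
    return "bar"          # e.g. sales by department


def nice_interval(low, high, target_ticks=10):
    """Round the raw tick spacing up to 1, 2, 5, or 10 times a power of ten."""
    if high <= low:
        raise ValueError("high must be greater than low")
    raw = (high - low) / target_ticks
    magnitude = 10 ** math.floor(math.log10(raw))
    for step in (1, 2, 5, 10):
        if raw <= step * magnitude:
            return step * magnitude
    return 10 * magnitude


def format_compact(value):
    """Render large numbers compactly, e.g. 2000000 as '2M'."""
    for threshold, suffix in ((1_000_000, "M"), (1_000, "K")):
        if abs(value) >= threshold:
            return f"{value / threshold:g}{suffix}"
    return f"{value:g}"
```

For a range of 1 to 100, `nice_interval(1, 100)` gives an interval of 10, matching the example in the list.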

All of this happens automatically: you ask your question, and the system handles the technical details of creating a clear, professional visualization. For example, the following figure shows the result for the question “How many hours my employees worked over the past 7 weeks?”

Security-first architecture

Our implementation adheres to OWASP best practices (specifically LLM06) by maintaining complete separation between security controls and the LLM.

Through dedicated security services, user authentication and authorization checks are performed before LLM interactions, with user context and permissions managed through Amazon Bedrock SessionParameters, keeping security information entirely outside of LLM processing.

Our validation layer uses Amazon Bedrock Guardrails to protect against prompt injection, inappropriate content, and forbidden topics such as racism, sexism, or illegal content.

The system’s architecture implements strict role-based access controls through a detailed permissions matrix, so users can only access data within their authorized scope. For authentication, we use industry-standard JWT and SAML protocols, and our authorization service maintains granular control over data access permissions.

This multi-layered approach prevents potential security bypasses through prompt manipulation or other LLM-specific attacks. The system automatically enforces data boundaries at both database and API levels, effectively preventing cross-contamination between different customer accounts. For instance, department managers can only access their team’s data, with these restrictions enforced through database compartmentalization.
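A permissions matrix of the kind mentioned above can be pictured as a lookup from role to the resources and scopes that role may read. The roles, resources, and scope names below are hypothetical and chosen only to mirror the department manager example; the source does not publish the real matrix.

```python
# Hypothetical permissions matrix: role -> set of (resource, scope) pairs.
PERMISSIONS = {
    "department_manager": {
        ("schedule", "own_team"),
        ("attendance", "own_team"),
    },
    "hr_admin": {
        ("schedule", "company"),
        ("attendance", "company"),
        ("payroll", "company"),
    },
}


def is_allowed(role, resource, scope):
    """Return True only if the role explicitly grants this resource and scope."""
    return (resource, scope) in PERMISSIONS.get(role, set())


# A department manager can read their own team's attendance, not company payroll.
manager_team_ok = is_allowed("department_manager", "attendance", "own_team")
manager_payroll_ok = is_allowed("department_manager", "payroll", "company")
```

The check denies by default: an unknown role or an unlisted pair returns `False`, so a missing entry never widens access.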

Additionally, our comprehensive audit system maintains immutable logs of all actions, including timestamps, user identifiers, and accessed resources, stored separately to protect their integrity. This security framework operates seamlessly in the background, maintaining robust protection of sensitive information without disrupting the user experience or legitimate workflows.

Benefits

Creating data visualizations has never been more accessible. Even without specialized expertise, you can now produce professional-quality charts that communicate your insights effectively. The streamlined process makes sure your visualizations remain consistently clear and intuitive, so you can concentrate on exploring your data questions instead of spending time on presentation details.

The solution works through simple conversational requests that require no technical knowledge or specialized software. You simply describe what you want to visualize using everyday language and the system interprets your request and creates the appropriate visualization. There’s no need to learn complex software interfaces, remember specific commands, or understand data formatting requirements. The underlying technology handles the data processing, chart selection, and professional formatting automatically, transforming your spoken or written requests into polished visual presentations within moments.

Your specific information needs drive how the data is displayed, making the insights more relevant and actionable. When it’s time to share your findings, these visualizations seamlessly integrate into your reports and presentations with polished formatting that enhances your overall message. This democratization of data visualization empowers everyone to tell compelling data stories.

Conclusion

In this post, we explored Skello’s implementation of an AI-powered assistant using Amazon Bedrock and Lambda. We saw how end-users can query their own data in a multi-tenant environment while maintaining logical boundaries and complying with GDPR regulations. The combination of serverless architecture and advanced language models proved effective in enhancing data accessibility and user experience.

We invite you to explore the AWS Machine Learning Blog for more insights on AI solutions and their potential business applications. If you’re interested in learning more about Skello’s journey in modernizing HR software, check out our blog post series on the topic.

If you have any questions or suggestions about implementing similar solutions in your own multi-tenant environment, please feel free to share them in the comments section.

About the authors

Nicolas de Place is a Data & AI Solutions Architect specializing in machine learning strategy for high-growth startups. He empowers emerging companies to harness the full potential of artificial intelligence and advanced analytics, designing scalable ML architectures and data-driven solutions.

Cédric Peruzzi is a Software Architect at Skello, where he focuses on designing and implementing Generative AI features. Before his current role, he worked as a software engineer and architect, bringing his experience to help build better software solutions.
