Previous work

Our paper builds on and extends recent research examining the impact of AI on labor market outcomes and worker well-being. Prior studies have largely found positive effects of AI exposure on employment and wages at the industry or occupational level. For example, Felten et al. (2019)22 document small wage gains in AI-exposed occupations in the US, while Gathmann and Grimm (2022)23 find a positive relationship between AI exposure and employment in Germany, particularly in the service sector. Acemoglu et al. (2022)20 analyze U.S. labor markets using establishment-level vacancy data from 2010 onward, finding rapid AI adoption, particularly in firms with tasks suited for automation. Their findings suggest that AI-exposed firms increase AI-related hiring while simultaneously reducing non-AI hiring and altering skill demands. Despite these firm-level shifts, they find no significant impact on overall employment or wage growth in AI-exposed industries and occupations, indicating that AI’s displacement effects may currently outweigh productivity-driven job creation. When examining the heterogeneity of results across occupations, Bonfiglioli et al. (2025)24 find evidence that AI exposure led to job losses across US commuting zones, particularly for low-skill and production workers, while benefiting high-wage and STEM occupations. Unlike other technologies, AI’s impact is driven by services rather than manufacturing, contributing to automation of jobs and rising inequality.

At the same time, researchers have raised concerns that AI could accelerate the erosion of middle-class job security by automating tasks without creating sufficient new roles for human workers25. Whether AI complements or displaces human labor depends not only on the extent of automation but also on which tasks are automated and which workers are affected26. In this regard, Brekelmans and Petropoulos (2020)27 highlight that mid-skilled occupations are among the most vulnerable to AI-driven disruption. However, some scholars suggest that AI can help reduce job performance inequalities by improving efficiency and decision-making support10,28.

While existing studies have extensively examined labor market effects of AI, relatively little attention has been paid to its broader impact on worker well-being. Our work addresses this gap, contributing to a growing body of research exploring the effects of automation technologies on workers’ health and psychological outcomes.

Previous research on the effects of automation on well-being has primarily examined industrial robots and mechanized systems13,29. However, AI represents a distinct form of automation, as it relies on computer-based learning and cognitive processing rather than physical manipulation9. Unlike traditional robotics, which primarily displaces routine manual tasks, AI has the potential to automate non-routine cognitive tasks that were once considered resistant to mechanization22,25,30. This shift suggests that AI may not only disrupt routine jobs but also reshape knowledge-based professions, placing even highly educated workers at risk of automation-driven changes. Moreover, as AI systems become more capable of complex reasoning, decision-making, and problem-solving, their impact on the labor market is likely to extend beyond simple task automation, influencing job design, skill requirements, and career trajectories across various industries.

The influence of AI on employee well-being may operate through multiple channels. On one hand, AI-driven automation can reduce physical strain in labor-intensive jobs, potentially improving physical health. On the other hand, AI adoption can increase cognitive and emotional demands in knowledge-intensive occupations, altering job content in ways that either enhance or undermine job satisfaction. Additionally, shifts in workplace dynamics—such as changes in perceived job security, workplace autonomy, and the sense of purpose derived from work—may further affect workers’ experiences with AI.

Worker attitudes toward AI remain mixed. Some global surveys indicate rising concerns about the consequences of AI for job opportunities31, yet a recent Pew study finds that US workers in AI-exposed industries do not perceive AI as an immediate threat32. AI has the potential to enhance productivity and complement human skills, but it can also displace workers in certain roles. As with past technological revolutions, the ultimate labor market impact of AI will depend on the evolving balance between complementarity and substitution between AI and human labor20,33,34. Moreover, AI alters the nature of work itself, influencing job satisfaction, professional identity, and the perceived dignity of labor35.

Whether the beneficial effects of AI on labor market outcomes offset or even outweigh its displacement effects remains an empirical question—particularly in the short term, as workers and labor markets undergo a period of transition and adaptation to this new general-purpose technology.

Conceptual framework

This study builds on task-based theories of technological change33,36, which conceptualize AI as a transformative force that reallocates tasks between humans and machines. Like robots, AI automates routine and physically demanding tasks, enabling workers to transition into higher-skill, cognitively demanding roles. However, unlike robots, AI can also automate non-routine tasks30. The dual role of AI, as both a complement and a substitute to human labor, is central to understanding its heterogeneous effects. In our context, complementarity arises when AI reduces physical strain and augments workers’ capabilities, enhancing productivity and job satisfaction. Conversely, substitutability occurs when AI displaces workers, increasing job insecurity and workplace anxiety.

AI adoption, as documented by Acemoglu et al. (2022)20, follows a pattern where firms with task structures conducive to AI integration experience substantial labor reconfigurations. While automation’s displacement effects have been widely studied, the role of AI in hazardous and physically strenuous occupations offers a distinct perspective on its labor market consequences. We hypothesize that AI’s integration into hazardous tasks—such as those involving exposure to toxic environments, heavy lifting, or repetitive strain—can reduce workplace injuries and long-term health risks. This hypothesis aligns with prior research on automation’s potential to enhance worker safety by shifting high-risk activities to machines, thereby improving physical well-being13.

Acemoglu et al. (2022)20 highlight that AI-exposed establishments tend to reduce overall hiring, indicating that productivity gains from AI do not necessarily lead to net job creation. This trend, coupled with AI’s ability to automate both routine and certain non-routine cognitive and abstract tasks, suggests that a broader range of workers—including those in knowledge-based professions—may experience job displacement pressures. Such uncertainty can have significant psychological consequences, as fears of automation-related job loss contribute to chronic stress, financial insecurity, and diminished workplace morale. The effects are likely to be unevenly distributed, disproportionately impacting low-wage workers while also altering career trajectories for higher-skilled professionals, ultimately reinforcing patterns of economic inequality across different skill levels.

Thus, while AI adoption holds promise for improving workplace safety and productivity, its net effect on worker well-being remains uncertain. Whether the benefits—such as reduced workplace hazards and improved job quality—outweigh the disruptions caused by job displacement and economic instability depends on how AI is integrated into labor markets. This duality underscores the need for policies that not only facilitate AI integration in ways that protect workers’ health but also address the economic and social ramifications of AI-driven labor shifts.

Furthermore, our study builds upon traditional job quality frameworks by examining AI-induced transformations in physical job intensity, cognitive demands, and health outcomes. While much of the public debate on digitalization has focused on job quantity (i.e., job creation vs. job loss), its effects on job quality are equally significant and warrant further attention37,38. Martin and Hauret (2022)37 identify six key dimensions of job quality commonly examined in the literature: labor income, workplace safety, working time and work-life balance, job security, skill development and training, and employment-related relationships and work motivation. Traditional models conceptualize job quality through physical, cognitive, and emotional dimensions. However, emerging technologies—especially AI—are reshaping these dimensions in profound ways. AI-driven workplace changes can lead to new forms of cognitive strain, shifts in workplace autonomy, and evolving skill demands, all of which have direct consequences for workers’ well-being. Further research is needed to fully understand how digitalization—particularly AI—modifies job demands, psychological stress, and long-term employment conditions.

AI technologies frequently automate repetitive, physically demanding, and hazardous tasks, thereby alleviating physical labor for workers. In manufacturing, AI-powered machinery has increasingly replaced tasks such as assembly-line work and heavy lifting, while in service industries, AI tools like virtual assistants reduce administrative workloads, complementing physical task reductions. As AI shifts job responsibilities from physical execution to supervisory or decision-making roles, the overall physical strain on workers declines. Empirical evidence supports this trend; for instance, Gihleb et al. (2022)13 document significant reductions in physically demanding work as automation becomes more prevalent. Using a physical burden metric from the SOEP, we examine the link between AI exposure and reduced physical job intensity.

Finally, our study engages with theories of institutional mediation13 to examine how labor market institutions shape AI’s effects on workers. Specifically, we hypothesize that Germany’s strong labor protections, high unionization rates, and employment legislation may moderate the adverse consequences of AI on worker well-being. Institutions that provide employment security, reskilling opportunities, and worker protections may buffer against the negative impacts of automation, reducing stress and job displacement fears. This contrasts with more flexible labor markets, where AI-induced disruptions may lead to greater economic precarity.

Our contribution

Our research highlights the importance of Germany’s unique institutional context, characterized by strong labor protections, extensive union representation, and comprehensive employment legislation. These structural safeguards, combined with Germany’s gradual adoption of AI technologies, create an environment where AI is more likely to complement rather than displace worker skills, mitigating some of the negative labor market effects observed in countries like the US. However, as Bonfiglioli et al. (2025)24 highlight, AI exposure has led to job losses in the US, particularly among low-skilled and production workers, while benefiting high-wage and STEM occupations. Given AI’s distinct impact on service industries rather than traditional manufacturing, workers in routine-intensive service sectors may still face heightened risks of displacement, even within Germany’s more protective labor market. These theoretically ambiguous effects make Germany a particularly interesting case for examining the interaction between AI adoption and labor market institutions, raising an important empirical question about the extent to which these protections can shield workers from displacement while enabling technological progress.

In addition, we explore heterogeneity in outcomes based on worker characteristics (e.g., gender, education, union membership) and regional differences (e.g., East vs. West Germany), offering a more granular perspective on the potential effects of AI on labor markets and worker well-being.

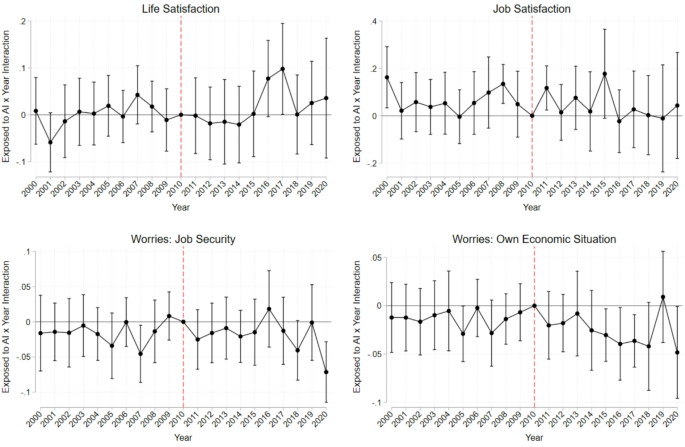

Unlike studies such as Nazareno and Schiff (2021) and Liu (2023)29,39, which rely on cross-sectional data and broad measures of automation exposure40, our analysis focuses specifically on AI. We leverage the Webb measure of exposure to AI alongside a self-reported metric from the SOEP, allowing a more precise assessment of the effects of AI on worker well-being and labor market dynamics. Using longitudinal data from the SOEP over the period 2000–2020, we employ an event study analysis and a difference-in-differences (DiD) design to address selection bias and control for individual fixed effects. This methodological approach enables us to capture long-term trends and examine nuanced outcomes such as life satisfaction, mental health, and physical health, dimensions that have often been overlooked in prior research.
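To make the logic of this design concrete, the following is a minimal sketch of a DiD and event-study comparison on a synthetic balanced panel. It is not the estimation code used in this paper. The binary exposure indicator, the simulated outcome, the effect size of -0.3, and all function names are hypothetical and chosen only for illustration; only the 2000–2020 window and the 2010 start of the treatment period are taken from the text. In a balanced panel with a common treatment date, the difference in mean within-worker changes computed here should coincide with the coefficient on the exposure-by-post interaction in a regression with worker and year fixed effects.

```python
import random
from statistics import mean

YEARS = range(2000, 2021)   # sample window named in the text
FIRST_TREATED_YEAR = 2010   # start of the treatment period named in the text


def simulate_panel(n_workers=1000, true_effect=-0.3, seed=0):
    """Synthetic balanced panel; every number here is made up for illustration."""
    rng = random.Random(seed)
    year_shock = {t: rng.gauss(0.0, 0.2) for t in YEARS}  # common year effects
    rows = []
    for worker in range(n_workers):
        exposed = worker % 2 == 0  # hypothetical binary AI-exposure indicator
        # Exposed workers differ in levels (selection); fixed effects absorb this.
        worker_effect = rng.gauss(0.0, 1.0) + (0.5 if exposed else 0.0)
        for year in YEARS:
            treated_now = exposed and year >= FIRST_TREATED_YEAR
            outcome = (worker_effect + year_shock[year]
                       + (true_effect if treated_now else 0.0)
                       + rng.gauss(0.0, 0.5))
            rows.append((worker, year, exposed, outcome))
    return rows


def did_estimate(rows):
    """Difference between exposed and unexposed workers in the mean
    within-worker change from the pre-period to the post-period."""
    pre, post, exposed_by_worker = {}, {}, {}
    for worker, year, exposed, outcome in rows:
        exposed_by_worker[worker] = exposed
        bucket = post if year >= FIRST_TREATED_YEAR else pre
        bucket.setdefault(worker, []).append(outcome)
    changes = {True: [], False: []}
    for worker, exposed in exposed_by_worker.items():
        # Differencing within worker removes the time-invariant worker effect.
        changes[exposed].append(mean(post[worker]) - mean(pre[worker]))
    return mean(changes[True]) - mean(changes[False])


def event_study(rows, reference_year=FIRST_TREATED_YEAR - 1):
    """Year-by-year exposed-minus-unexposed gap, relative to the reference year."""
    baseline = {w: y for w, t, _, y in rows if t == reference_year}
    gaps = {t: {True: [], False: []} for t in YEARS}
    for worker, year, exposed, outcome in rows:
        gaps[year][exposed].append(outcome - baseline[worker])
    return {t: mean(g[True]) - mean(g[False]) for t, g in gaps.items()}


if __name__ == "__main__":
    panel = simulate_panel()
    print("DiD estimate:", round(did_estimate(panel), 3))
    for year, coef in event_study(panel).items():
        print(year, round(coef, 3))
```

In this simulation, the event-study gaps before 2010 should fluctuate around zero, which is the pattern one would look for when assessing parallel pre-trends, while the gaps from 2010 onward should be close to the simulated effect.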

AI in Germany

The roll-out of AI in Germany accelerated only recently. As noted by Gathmann and Grimm (2022)23, patent applications for AI technologies started to grow strongly only after 2015, and more significantly in 2017 and 2018. The innovation survey conducted by the ZEW – Leibniz Centre for European Economic Research provides a consistent longitudinal perspective on AI adoption in Germany18. Specifically, the most recent wave of the innovation survey contains information on AI adoption percentages for 2021. AI use was not widespread before 2010, and the rate of AI adoption was extremely low before 2016. To be conservative, we chose 2010 as the beginning of our treatment period. AI adoption rates have increased substantially over the last few years. While only 2% of firms adopted AI before 2016, this number rose to 6% in 2019 and 10% in 202118. Regarding the diffusion of AI across industries, the leading adopters of AI technology in 2019 were finance (24%) and IT (21%), followed by skilled services (18%) such as legal, architecture, consulting, and research. Conversely, the laggards in AI adoption include mining (1.6%), miscellaneous business services (2.3%), and transportation (5.3%). These cross-sectoral differences in AI adoption are qualitatively reflected in our individual-level data on AI exposure from the SOEP, with IT and finance being the most exposed (see Figure A.1 in the Appendix). As detailed in Rammer et al. (2022), 75% of the firms in the finance sector that used AI technologies in 2019 began using AI in 2016. The corresponding share is 74% in the chemical and pharmaceutical sectors and 68% in electronics and machines.

Increasing rates of AI adoption across German firms were accompanied by the German government’s investment in AI. In 2018, the German Federal Government launched its Artificial Intelligence Strategy and pledged to invest approximately 5 billion euros by 2025 in AI development. For these reasons, Germany is an interesting country for analyzing the effects of rising exposure to AI on the well-being and health of workers.