AI Insights

Better Artificial Intelligence Stock: Nvidia vs. Meta Platforms

Artificial intelligence (AI) stocks have proven to be big winners for investors lately — particularly last year, when some leading players in the space delivered double- and even triple-digit percentage gains. Though these high-growth companies’ share prices tumbled earlier this year due to concerns about President Donald Trump’s tariff plans, investors have recently returned to this compelling story.

Trump’s trade talks and tentative agreements on the frameworks of deals with the U.K. and China have boosted optimism that his tariffs won’t result in drastically higher costs for U.S. consumers or major earnings pressure on U.S. companies — in contrast to the worst-case scenario that many had feared. As a result, investors feel more comfortable investing in companies that rely on a strong economic environment to thrive — such as AI sector players.

This means that many investors are once again asking themselves which AI players look like the best buys today. Nvidia (NVDA) and Meta Platforms (META) are both aiming to reshape the future with their aggressive AI plans. If you could only buy one, which would be the better AI bet now?


The case for Nvidia

Nvidia already has scored many AI victories. The company has built an empire of hardware and services that make it the go-to provider for any organization creating an AI platform or program. But the crown jewels of its portfolio are its graphics processing units (GPUs). It offers the top-performing parallel processors, and thanks to both its ecosystem and manufacturing lead, they’re also by far the best-sellers in their class. With demand from cloud infrastructure giants and other tech sector players still outstripping supply, Nvidia has been growing its sales at double- and triple-digit percentage rates, and setting new revenue records quarter after quarter.

In its fiscal 2025, which ended Jan. 26, Nvidia booked a 114% revenue gain to a record level of $130 billion. And the company isn’t just growing its top line — its net income surged by 145% to almost $73 billion as it continued to generate high levels of profitability on those sales.
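As a rough check on those growth figures, the prior-year numbers can be backed out from the percentages. The sketch below uses only the rounded values quoted above ($130 billion, "almost" $73 billion, 114%, 145%), so its results are approximations rather than reported figures, and the function and variable names are illustrative.

```python
def prior_value(current: float, growth_pct: float) -> float:
    """Back out the prior-period value from the current value and its percentage growth."""
    return current / (1 + growth_pct / 100)


# Rounded figures quoted in the article, in billions of U.S. dollars.
revenue_fy2025 = 130.0
net_income_fy2025 = 73.0  # the article says "almost $73 billion"

# Implied fiscal 2024 values, given 114% revenue growth and 145% net income growth.
revenue_fy2024 = prior_value(revenue_fy2025, 114)
net_income_fy2024 = prior_value(net_income_fy2025, 145)

# Net margin = net income / revenue, for each year.
net_margin_fy2025 = net_income_fy2025 / revenue_fy2025
net_margin_fy2024 = net_income_fy2024 / revenue_fy2024

print(f"Implied fiscal 2024 revenue:    ${revenue_fy2024:.1f}B")
print(f"Implied fiscal 2024 net income: ${net_income_fy2024:.1f}B")
print(f"Net margin, fiscal 2024: {net_margin_fy2024:.1%}")
print(f"Net margin, fiscal 2025: {net_margin_fy2025:.1%}")
```

Because net income grew faster than revenue, the implied net margin widens year over year, which is the arithmetic behind the "high levels of profitability" point.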

Nvidia’s clients today rely heavily on its hardware to power their projects, as its GPUs are some of the best chips available for the training of large language models (LLMs), as well as for inferencing — the technical term for when those trained models are used to process real data to solve actual problems or make predictions. And Nvidia is helping customers with so much more — from the design of AI agents to the powering of autonomous vehicle systems and drug-discovery platforms.

Nvidia also is innovating steadily to stay ahead of rivals. It recently shifted to an accelerated schedule that will have it releasing chips based on new and improved architectures every year; previously, it rolled out new architectures about once every two years. So this company is likely to keep playing a major role in the evolution of AI throughout its next chapters.

The case for Meta Platforms

You will know Meta best as an owner of social media apps, some of which you probably use every day — its core “family of apps” includes Facebook, Messenger, WhatsApp, and Instagram. And the sales of advertising space across those platforms have provided billions of dollars in revenue and profits for the company.

But today, Meta’s big focus is on AI. The company has built its own LLM, Llama, and made it open source so that anyone can contribute to its development. The open-source model can result in the faster creation of a better-quality product — and in this case, it could help Meta emerge as a leader in the field. The company has put its money where its mouth is: It plans as much as $72 billion in capital spending this year to boost its AI presence. And just recently, Meta has been hiring up a storm in its efforts to staff its newly launched Meta Superintelligence Labs. That business unit will work on foundation models like Llama as well as other AI research projects.

In a memo to employees regarding the new AI unit, Meta CEO Mark Zuckerberg highlighted why it’s well positioned to lead in AI development: “We have a strong business that supports building out significantly more compute than smaller labs. We have deeper experience building and growing products that reach billions of people,” he said.

Those points are true, and they could help Meta reach its goals — and deliver big wins to investors over time.

Which stock is the better buy?

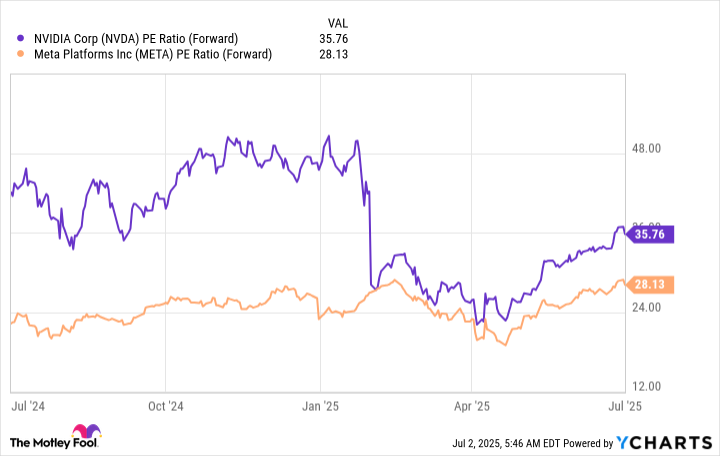

From a valuation perspective, you might choose Meta, as the stock is cheaper than Nvidia in relation to forward earnings estimates, which has generally been the case.

NVDA PE Ratio (Forward) data by YCharts.

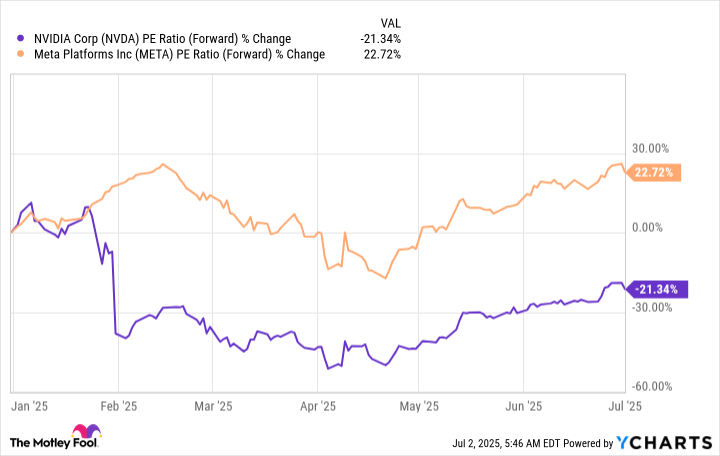

But a closer look shows that while Nvidia’s valuation is down since the start of the year, Meta’s has actually climbed.

NVDA PE Ratio (Forward) data by YCharts.

With that in mind, Nvidia looks like a more appealing buying opportunity, especially considering the company’s ongoing strong growth and its involvement in every area of AI development and application in real-world situations. Meta also could emerge as a major AI winner down the road, and the stock is still reasonably priced today in spite of its gains in valuation. But Nvidia remains the key player in this space — and at today’s valuation, it’s the better buy.

Randi Zuckerberg, a former director of market development and spokeswoman for Facebook and sister to Meta Platforms CEO Mark Zuckerberg, is a member of The Motley Fool’s board of directors. Adria Cimino has no position in any of the stocks mentioned. The Motley Fool has positions in and recommends Meta Platforms and Nvidia. The Motley Fool has a disclosure policy.


How Math Teachers Are Making Decisions About Using AI

Our Findings

Finding 1: Teachers valued many different criteria but placed highest importance on accuracy, inclusiveness, and utility.

We analyzed 61 rubrics that teachers created to evaluate AI. Teachers generated a diverse set of criteria, which we grouped into ten categories: accuracy, adaptability, contextual awareness, engagingness, fidelity, inclusiveness, output variety, pedagogical soundness, user agency, and utility. We asked teachers to rank their criteria in order of importance and found a relatively flat distribution, with no single criterion emerging as one that a majority assigned highest importance. Still, our results suggest that teachers placed highest importance on accuracy, inclusiveness, and utility.

Thirteen percent of teachers listed accuracy (which we defined as mathematically accurate, grounded in facts, and trustworthy) as their top evaluation criterion. Several teachers cited “trustworthiness” and “mathematical correctness” as their most important evaluation criteria, and another teacher described accuracy as a “gateway” for continuing evaluation; in other words, if the tool was not accurate, it would not even be worth further evaluation.

Another 13% ranked inclusiveness (which we defined as accessible to diverse cognitive and cultural needs of users) as their top evaluation criterion. Teachers required AI tools to be inclusive to both student and teacher users. With respect to student users, teachers suggested that AI tools must be “accessible,” free of “bias and stereotypes,” and “culturally relevant.” They also wanted AI tools to be adaptable for “all teachers.” One teacher wrote, “Different teachers/scenarios need different levels/styles of support. There is no ‘one size fits all’ when it comes to teacher support!”

Additionally, 11% of teachers reported utility as their top evaluation criterion (defined as benefits of using the tool significantly outweigh the costs). Teachers who cited this criterion valued “efficiency” and “feasibility.” One added that AI needed to be “directly useful to me and my students.”

In addition to accuracy, inclusiveness, and utility, teachers also valued tools that were relevant to their grade level or other context (10%), pedagogically sound (10%), and engaging (7%). Additionally, 8% reported that AI tools should be faithful to their own methods and voice. Several teachers listed “authentic,” “realistic,” and “sounds like me” as top evaluation criteria. One remarked that they wanted ChatGPT to generate questions for coaching colleagues, “in my voice,” adding, “I would only use ChatGPT-generated coaching questions if they felt like they were something I would actually say to that adult.”

| CODE | DESCRIPTION | EXAMPLES |
| --- | --- | --- |
| Accuracy | Tool outputs are mathematically accurate, grounded in fact, and trustworthy. | Grounded in actual research and sources (not hallucinations); mathematical correctness |
| Adaptability | Tool learns from data and can improve over time or with iterative prompting | Continue to prompt until it fits the needs of the given scenario; continue to tailor it! |
| Contextual Awareness | Tool is responsive and applicable to specific classroom contexts, including grade level, standards, or teacher-specified goals. | Ability to be specific to a context / grade-level / community |
| Engagingness | Tool evokes users’ interest, curiosity, or excitement. | A math problem should be interesting or motivate students to engage with the math |
| Fidelity | Tool outputs are faithful to users’ intent or voice. | In my voice - I would only use ChatGPT-generated coaching questions if they felt like they were something I would actually say to that adult |
| Inclusiveness | Tool is accessible to diverse cognitive and cultural needs of users. | I have to be able to adapt with regard to differentiation and cultural relevance. |
| Output Variety | Tool can provide a variety of output options for users to evaluate or enhance divergent thinking. | Multiple solutions, not all feedback from chat is useful so providing multiple options is beneficial |
| Pedagogically Sound | Tool adheres to established pedagogical best practices. | Knowledge about educational lingo and pedagogies |
| User Agency | Tool promotes users’ control over their own teaching and learning experience. | It is used as a tool that enables student curiosity and advocacy for learning rather than a source to find answers. |
| Utility | Benefits of using the tool significantly outweigh the costs (e.g., risks, resource and time investment). | Efficiency – will it actually help or is it something I already know |

Table 1. Codes for the top criteria, along with definitions and examples.

Teachers expressed criteria in their own words, which we categorized and quantified via inductive coding.

We have summarized teachers’ evaluation criteria on the chart below:

Finding 2: Teachers’ evaluation criteria revealed important tensions in AI edtech tool design.

In some cases, teachers listed two or more evaluation criteria that were in tension with one another. For example, many teachers emphasized the importance of AI tools that were relevant to their teaching context, grade level, and student population, while also being easy to learn and use. Yet, providing AI tools with adequate context would likely require teachers to invest significant time and effort, compromising efficiency and utility. Additionally, tools with high degrees of context awareness might also pose risks to student privacy, another evaluation criterion some teachers named as important. Teachers could input student demographics, Individualized Education Plans (IEPs), and health records into an AI tool to provide more personalized support for a student. However, the same data could be leaked or misused in a number of ways, including further training of AI models without consent.

Another tension apparent in our data was between accuracy and creativity. As mentioned above, teachers placed highest importance on mathematical correctness and trustworthiness, with one stating that they would not even consider other criteria if a tool was not reliably accurate or produced hallucinations. However, several teachers also listed creativity as a top criterion – a trait produced by LLMs’ stochasticity, which in turn also leads to hallucinations. The tension here is that while accuracy is paramount for fact-based queries, teachers may want to use AI tools as a creative thought-partner for generating novel, outside-the-box tasks – potentially with mathematical inaccuracies – that motivate student reasoning and discussion.

Finding 3: A collaborative approach helped teachers quickly arrive at nuanced criteria.

One important finding we observed is that, when provided time and structure to explore, critique, and design with AI tools in community with peers, teachers develop nuanced ways of evaluating AI – even without having received training in AI. Grounding the summit in both teachers’ own values and concrete problems of practice helped teachers develop specific evaluation criteria tied to realistic classroom scenarios. We used purposeful tactics to organize teachers into groups with peers who held different experiences with and attitudes toward AI than they did, exposing them to diverse perspectives they may not have otherwise considered. Juxtaposing different perspectives informed thoughtful, balanced evaluation criteria, such as, “Teaching students to use AI tools as a resource for curiosity and creativity, not for dependence.” One teacher reflected, “There is so much more to learn outside of where I’m from and it is encouraging to learn from other people from all over.”

Over the course of the summit, several of our facilitators observed that teachers – even those who arrived with strong positive or strong negative feelings about AI – adopted a stance toward AI that we characterized as “critical but curious.” They moved easily between optimism and pessimism about AI, often in the same sentence. One teacher wrote in her summit reflection, “I’m mostly skeptical about using AI as a teacher for lesson planning, but I’m really excited … it could be used to analyze classroom talk, give students feedback … and help teachers foster a greater sense of community.” Another summed it up well: “We need more people dreaming and creating positive tools to outweigh those that will create tools that will cause challenges to education and our society as a whole.”


Cisco’s WebexOne Event Spotlights Global AI Brands and Ryan Reynolds, Acclaimed Actor, Film Producer, and Entrepreneur

Customer speakers include CarShield Founder, President and COO Steve Proetz; Topgolf Director of Global Technology Delivery Doug Klausen; GetixHealth CTO David Stuart; HD Supply Vice President of IT Emil DiMotta III and more, along with Cisco partners and leaders

SAN JOSE, Calif., Sept. 15, 2025 — Cisco (NASDAQ: CSCO) today announced its luminary customers and partners headlining WebexOne, Cisco’s annual AI Collaboration and Customer Experience event, taking place from September 28 – October 1, 2025 in San Diego. This year, executives from top global brands will take the stage to highlight how Cisco is addressing today’s demands for AI-powered innovations for the employee and customer experience.

WHO: Webex by Cisco, a leader in powering employee and customer experience solutions with AI, is hosting its annual signature event, WebexOne.

WHAT: The multiday event will explore trending topics shaping today’s workforce across generative AI, customer experience, and conferencing and office tech. WebexOne will feature the latest innovations from Cisco, executive-led sessions on product and strategy news, and customer conversations with inspiring leaders from the world’s leading brands.

- Featured Brands and Customers: More than 50 Webex customers and partners will speak at WebexOne, including Conagra Brands, Kennedy Space Center, Brightli and more. All will address how they’re partnering with Cisco to revolutionize customer experiences and collaboration with AI.

- Luminary Speakers: Ryan Reynolds, acclaimed actor, film producer, and entrepreneur, will deliver the closing keynote. Ryan will explore the art of creative leadership, storytelling, and innovation across entertainment, business, and beyond. Deepu Talla, Vice President of Robotics and Edge AI at NVIDIA, will offer a visionary look at the new era of AI, highlighting the transformative possibilities ahead.

- Inspiring Cisco Leaders: Cisco executives, including Jeetu Patel, President and Chief Product Officer, Anurag Dhingra, SVP & GM of Cisco Collaboration, Aruna Ravichandran, SVP and Chief Marketing & Customer Officer, and others will take the stage to discuss Cisco’s vision for artificial intelligence, customer experience, and collaboration. They will also showcase the latest technology revolutionizing the future of work and customer experience, and discuss how it integrates with Cisco’s broader product portfolio.

All attendees will also have the option to attend a training program that offers hands-on demos, 200+ hours of learning from 82 classes and labs, and 100+ breakout sessions featuring top customers and Cisco speakers.

Cisco will also announce its fourth-annual Webex Customer Award winners at the event.

WHEN:

September 28 – October 1, 2025, beginning at 9 a.m. PT

WHERE:

In-person: Marriott Marquis, San Diego Marina

Broadcast virtually: Using the Webex Events app

For press interested in behind-the-scenes exclusive access onsite at WebexOne, please contact Webex PR at webexpr@external.cisco.com. For general registration, please visit the link here.


Darwin Awards For AI Celebrate Epic Artificial Intelligence Fails

As the AI Darwin Awards prove, some AI ideas turn out to be far less bright than they seem.


Not every artificial intelligence breakthrough is destined to change the world. Some are destined to make you wonder “With all this so-called intelligence flooding our lives, how could anyone think that was a smart idea?” That’s the spirit behind the AI Darwin Awards, which recognize the most spectacularly misguided uses of the technology. Submissions are open now.

An introduction to the growing list of nominees, which includes legal briefs replete with fictional court cases, fake books by real writers and an Airbnb host manipulating images with AI to make it appear a guest owed money for damages, reads:

“Behold, this year’s remarkable collection of visionaries who looked at the cutting edge of artificial intelligence and thought, ‘Hold my venture capital.’ Each nominee has demonstrated an extraordinary commitment to the principle that if something can go catastrophically wrong with AI, it probably will — and they’re here to prove it.”

A software developer named Pete — who asked that his last name not be used to protect his privacy — launched the AI Darwin Awards last month, mostly as a joke, but also as a cheeky reminder that humans ultimately decide how technology gets deployed.

Don’t Blame The Chainsaw

“Artificial intelligence is just a tool — like a chainsaw, nuclear reactor or particularly aggressive blender,” reads the website for the awards. “It’s not the chainsaw’s fault when someone decides to juggle it at a dinner party.

“We celebrate the humans who looked at powerful AI systems and thought, ‘You know what this needs? Less testing, more ambition, and definitely no safety protocols!’ These visionaries remind us that human creativity in finding new ways to endanger ourselves knows no bounds.”

The AI Darwin Awards are not affiliated with the original Darwin Awards, which famously call out people who, through extraordinarily foolish choices, “protect our gene pool by making the ultimate sacrifice of their own lives.” Now that we let machines make dumb decisions for us too, it’s only fair they get their own awards.

Who Will Take The Crown?

Among the contenders for the inaugural AI Darwin Awards winner are the lawyers who defended MyPillow CEO Mike Lindell in a defamation lawsuit. They submitted an AI-generated brief with almost 30 defective citations, misquotes and references to completely fictional court cases. A federal judge fined the attorneys for their misstep, saying they violated a federal law requiring that lawyers certify court filings are grounded in the actual law.

Another nominee: the AI-generated summer reading list published earlier this year by the Chicago Sun-Times and The Philadelphia Inquirer that contained fake books by real authors. “WTAF. I did not write a book called Boiling Point,” one of those authors, Rebecca Makkai, posted to Bluesky. Another writer, Min Jin Lee, also felt the need to issue a clarification.

“I have not written and will not be writing a novel called Nightshade Market,” the Pachinko author wrote on X. “Thank you.”

Then there’s the executive producer at Xbox Game Studios who suggested scores of newly laid-off employees should turn to chatbots for emotional support after losing their jobs, an idea that did not go over well.

“Suggesting that people process job loss trauma through chatbot conversations represents either breathtaking tone-deafness or groundbreaking faith in AI therapy — likely both,” the submission reads.

What Inspired The AI Darwin Awards?

The creator of the awards, who lives in Melbourne, Australia, and has worked in software for three decades, said he frequently uses large language models, including to craft the irreverent text for the AI Darwin Awards website. “It takes a lot of steering from myself to give it the desired tone, but the vast majority of actual content, probably 99%, is all the work of my LLM minions,” he said in an interview.

Pete got the idea for the awards as he and co-workers shared their experiences with AI on Slack. “Occasionally someone would post the latest AI blunder of the day and we’d all have either a good chuckle, or eye-roll or both,” he said.

The awards sit somewhere between reality and satire.

“AI will mean lots of good things for us all and it will mean lots of bad things,” the contest’s creator said. “We just need to work out how to try and increase the good and decrease the bad. In fact, our first task is to identify both the good and the bad. Hopefully the AI Darwin Awards can be a small part of that by highlighting some of the ‘bad.’”

He plans to invite the public to vote on candidates in January, with the winner to be announced in February.

For those who’d rather not win an AI Darwin Award, the site includes a handy guide for avoiding the dubious distinction. It includes these tips: “Test your AI systems in safe environments before deploying them globally,” “consider hiring humans for tasks that require empathy, creativity or basic common sense” and “ask ‘What’s the worst that could happen?’ and then actually think about the answer.”
