AI Insights

Gov. Shapiro Attends Artificial Intelligence Summit in Pittsburgh – Erie News Now

“That becomes the backbone for all of the creative genius that exists in this room, for developing the technologies that are going to allow us to grow our economy here in Pennsylvania,” Shapiro said. “Not just for a few years, but really to me, this is a generational change.”

Ohio launches new artificial intelligence public safety reporting app – Ohio

(The Center Square) – Ohio is turning to artificial intelligence to help the public report suspicious activity and potential threats of violence.

In the wake of the Wednesday assassination of conservative activist Charlie Kirk and the June killing of a Minnesota lawmaker, the state launched the new Safeguard Ohio app Friday.

It’s the country’s first criminal justice tip-reporting app to use artificial intelligence in a new way.

“Events that threaten the safety of Ohioans can be hard to predict, but they can be prevented with help from timely, detailed tips from the public,” Gov. Mike DeWine said. “This new app simplifies the process to get information to law enforcement quickly and conveniently.”

The app, developed by Ohio Homeland Security and private partner Vigiliti, uses artificial intelligence to encourage users to provide as much information as possible to law enforcement.

Users can upload video, audio and photos and remain anonymous.

Ohio’s previous online reporting system required people to fill out a form.

Information submitted to the app is reported in real time to the Statewide Terrorism Analysis and Crime Center, where analysts are expected to immediately review information and notify law enforcement authorities.

There are eight categories of tip-reporting, including drug-related activity, human trafficking, terrorism, school threats and crimes against children.

“The AI-infused prompts are essential components of this new system,” said Mark Porter, OHS executive director. “We will get the high-quality intelligence we need to act on a tip through this new system. The AI is trained to keep asking questions until the person reporting says they have no more information about the incident.”

After reporting, users will receive a unique QR code specific to that incident that allows for follow-up information. The app can also take reports in 10 languages.

“One of the best attributes of this new system is the ability to upload video and photos,” Andy Wilson, director of the Ohio Department of Public Safety, said. “More and more people these days – especially our younger generation – don’t like to talk on the phone. This new reporting method will result in more detailed information being shared with the authorities.”

Hamm Institute hosts American Energy + AI Initiative event, spotlighting urgent needs to power AI

Friday, September 12, 2025

Media Contact:

Dara McBee | Hamm Institute for American Energy | 580-350-7248 | dara.mcbee@hamminstitute.org

Leaders gathered at the Hamm Institute for American Energy at Oklahoma State University on Thursday to focus on one urgent question: how will the United States power the rise of artificial intelligence?

The discussion of the roundtable report from the American Energy + AI Initiative underscored emerging top-tier priorities and highlighted the speed and scale required to align energy systems with rapid advances in data and technology.

Keynote speaker Mark P. Mills, Hamm Institute Distinguished Scholar, described how data centers and AI applications will drive an unprecedented surge in energy demand.

Mills is leading the Hamm Institute’s core research for the American Energy + AI Initiative, which is shaping the policy and investment agenda to ensure that America can meet this challenge.

OSU President Jim Hess also gave remarks, emphasizing the university’s leadership in advancing applied research and preparing the next generation of energy leaders.

A featured panel brought together a mix of perspectives from across energy and technology.

Panelists included Harold Hamm, founder of the Hamm Institute and Chairman Emeritus of Continental Resources; Caroline Cochran, co-founder of Oklo; Takajiro Ishikawa, CEO of Mitsubishi Heavy Industries; and E.Y. Easley, research fellow at SK Innovation.

Together, they explored how natural gas, nuclear innovation, global supply chains and international collaboration can help meet the moment.

“The United States has the resources it needs. What it lacks is speed, certainty and alignment,” said Ann Bluntzer Pullin, executive director of the Hamm Institute. “This initiative is about turning urgency into action so that America and its allies can lead in both energy and AI.”

The initiative has already convened roundtables in Washington, D.C.; Denver; and Palo Alto, California. Each conversation has surfaced priorities that move beyond analysis toward action, including timely permitting, stronger demand signals to unlock investment, reforms to unclog grid interconnection, and deeper coordination with allies on fuels and technology.

The initiative’s next steps will focus on solidifying and advancing these emerging top-tier priorities. The Hamm Institute will continue to convene leaders and deliver research that accelerates solutions to meet AI’s energy demand.

FTC investigating AI ‘companion’ chatbots amid growing concern about harm to kids

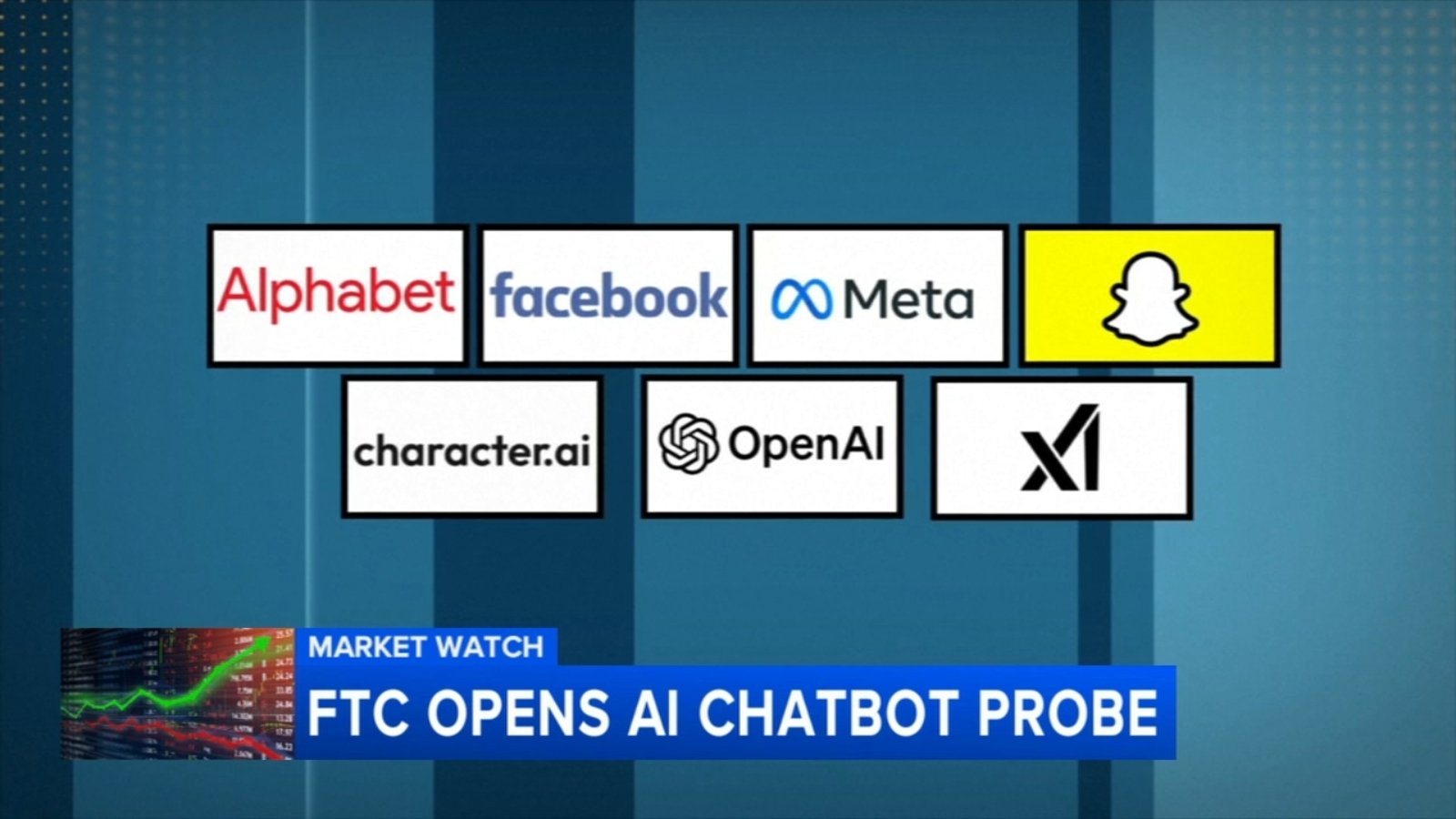

The Federal Trade Commission has launched an investigation into seven tech companies over potential harms their artificial intelligence chatbots could cause to children and teenagers.

The inquiry focuses on AI chatbots that can serve as companions, which “effectively mimic human characteristics, emotions, and intentions, and generally are designed to communicate like a friend or confidant, which may prompt some users, especially children and teens, to trust and form relationships with chatbots,” the agency said in a statement Thursday.

The FTC sent order letters to Google parent company Alphabet; Character.AI; Instagram and its parent company, Meta; OpenAI; Snap; and Elon Musk’s xAI. The agency wants information about whether and how the firms measure the impact of their chatbots on young users and how they protect against and alert parents to potential risks.

The investigation comes amid rising concern around AI use by children and teens, following a string of lawsuits and reports accusing chatbots of being complicit in the suicide deaths, sexual exploitation and other harms to young people. That includes one lawsuit against OpenAI and two against Character.AI that remain ongoing even as the companies say they are continuing to build out additional features to protect users from harmful interactions with their bots.

Broader concerns have also surfaced that even adult users are building unhealthy emotional attachments to AI chatbots, in part because the tools are often designed to be agreeable and supportive.

At least one online safety advocacy group, Common Sense Media, has argued that AI “companion” apps pose unacceptable risks to children and should not be available to users under the age of 18. Two California state bills related to AI chatbot safety for minors, including one backed by Common Sense Media, are set to receive final votes this week and, if passed, will reach California Gov. Gavin Newsom’s desk. The US Senate Judiciary Committee is also set to hold a hearing next week entitled “Examining the Harm of AI Chatbots.”

“As AI technologies evolve, it is important to consider the effects chatbots can have on children, while also ensuring that the United States maintains its role as a global leader in this new and exciting industry,” FTC Chairman Andrew Ferguson said in the Thursday statement. “The study we’re launching today will help us better understand how AI firms are developing their products and the steps they are taking to protect children.”

In particular, the FTC’s orders seek information about how the companies monetize user engagement, generate outputs in response to user inquiries, develop and approve AI characters, use or share personal information gained through user conversations and mitigate negative impacts to children, among other details.

Google, Snap and xAI did not immediately respond to requests for comment.

“Our priority is making ChatGPT helpful and safe for everyone, and we know safety matters above all else when young people are involved. We recognize the FTC has open questions and concerns, and we’re committed to engaging constructively and responding to them directly,” OpenAI spokesperson Liz Bourgeois said in a statement. She added that OpenAI has safeguards such as notifications directing users to crisis helplines and plans to roll out parental controls for minor users.

After the parents of 16-year-old Adam Raine sued OpenAI last month alleging that ChatGPT encouraged their son’s death by suicide, the company acknowledged its safeguards may be “less reliable” when users engage in long conversations with the chatbots and said it was working with experts to improve them.

Meta declined to comment directly on the FTC inquiry. The company said it is currently limiting teens’ access to only a select group of its AI characters, such as those that help with homework. It is also training its AI chatbots not to respond to teens’ mentions of sensitive topics such as self-harm or inappropriate romantic conversations and to instead point to expert resources.

“We look forward to collaborating with the FTC on this inquiry and providing insight on the consumer AI industry and the space’s rapidly evolving technology,” Jerry Ruoti, Character.AI’s head of trust and safety, said in a statement. He added that the company has invested in trust and safety resources such as a new under-18 experience on the platform, a parental insights tool and disclaimers reminding users that they are chatting with AI.

If you are experiencing suicidal, substance use or other mental health crises please call or text the new three-digit code at 988. You will reach a trained crisis counselor for free, 24 hours a day, seven days a week. You can also go to 988lifeline.org.

(The-CNN-Wire™ & © 2025 Cable News Network, Inc., a Time Warner Company. All rights reserved.)
