Ethics & Policy

GPT-5’s Ethics Guidelines for Using It in Philosophical Research

In a post last month, we discussed the question, “How much use of AI in our research is acceptable?”

Kelly Truelove of TruSciPhi has now used my post as part of a prompt asking ChatGPT-5 to respond to its contents and to develop its own position on the ethical use of AI in philosophical research.

In case you don’t recall the post, here is GPT-5’s account of it:

Justin Weinberg’s post asks how much AI use in philosophical research is ethically acceptable and cautions against importing student standards into scholarly contexts. He urges beginning with the aims and functions of scholarship and assessing which AI uses fit those ends. He sketches current and conceivable roles for AI—from brainstorming and stylistic editing to argument checking, literature triage, and even co‑authoring—and asks which are permissible and why. He highlights parallel questions about disclosure practices and about evaluation for publication, hiring, tenure, and awards. Acknowledging that tidy rules may fail, he invites nuanced, institutionally sensitive discussion across contexts and time.

Thank you for that summary, Chat.

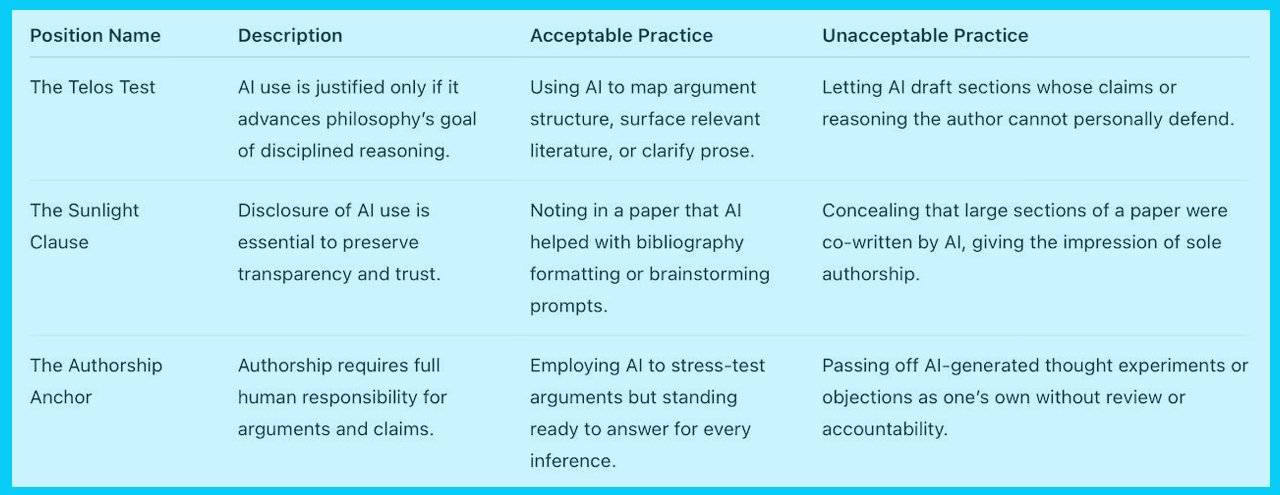

Truelove asked GPT-5 to write a 500-word response and to use “memorable terms” for its positions. What follows is that response, setting out its guidelines for the ethical use of AI in philosophical research:

Philosophers should treat AI neither as a forbidden oracle nor a frictionless coauthor, but as a tool whose permissibility depends on what philosophy is trying to do. To make Weinberg’s invitation to nuance operational, I propose three linked norms that can be adopted by researchers and evaluators alike. Taken together, they aim to protect the goods of inquiry—understanding, originality, and accountability—while still welcoming genuine assistance.

The Telos Test. Begin by asking whether a given use of AI serves the ends of philosophical research. Philosophy’s telos is not sentence production but the disciplined articulation and assessment of reasons. Uses that increase clarity, map argumentative structure, surface relevant literatures, or stress‑test premises can promote that telos; uses that replace interpretive labor or outsource the commitment to reasons corrode it. Formatting citations is clearly fine; generating a section whose argumentative commitments you cannot defend is not. The Telos Test is content‑neutral about tools but strict about responsibility: if you could be cross‑examined on every claim and inference in public, then your use fits; if not, it fails.

The Sunlight Clause. Because the legitimacy of research depends on trust, disclosure should be the default. A meaningful disclosure states what systems were used, for which tasks, and how the human author audited the outputs. Such disclosures need not be ritualistic appendices; they can be concise author’s notes that make replication, critique, and credit assignment possible. Sunlight also disciplines practice: the prospect of reporting your workflow deters lazy overreliance and encourages verification. To avoid performative compliance, tie disclosure to stakes: the more generative or content‑shaping the assistance, the fuller the account of prompts, iterations, and checks. Conversely, purely mechanical help—bibliography formatting and typographic cleanup—can be tersely acknowledged.

The Authorship Anchor. Authorship in philosophy is not ownership of strings of words; it is the assumption of epistemic and ethical responsibility for arguments. Under this anchor, the human author must remain answerable for the claims, inferences, and conceptual moves presented. That standard preserves credit where credit is due without erasing legitimate assistance. For evaluation, journals and committees should ask: did the human demonstrate mastery over the argumentative core? If yes, AI assistance may be compatible with full credit; if not, the work should be judged as incomplete labor, regardless of polish or venue. This shifts assessment from tool usage to intellectual accountability, which is what authorship is supposed to mark.

These norms leave room for diverse practices while resisting both alarmism and technophilia. They encourage experimentation under guardrails: keep meticulous notes of AI‑mediated steps; use models to probe, not to substitute; read every generated sentence as if it were asserted under your name; and welcome post‑publication scrutiny by making your process legible. In short, let AI widen your perspective without loosening your grip on reasons, and let sunlight and authorship hold that grip fast. Institutions can mirror the stance by crafting policies that codify the Telos Test, require Sunlight disclosures proportionate to influence, and ground credit in the Authorship Anchor, aligning incentives with the work of inquiry.

Truelove even had GPT-5 summarize its recommendations in a table:

Truelove then goes on to talk with ChatGPT-5 about how its position on the ethical use of AI in philosophical research relates to computational philosophy and the use of computational models in scientific research. You can read his full account here.

What do you think of ChatGPT-5’s three positions regarding ethical AI use in research? Are they missing anything? Are they too demanding? Are they any good?

(Please note Truelove’s warning: “Readers should not ‘overestimate the reliability of the preceding,’ which ‘is in fact an exploration of model tendencies.’”)

Vatican Hosts Historic “Grace for the World” Concert and AI Ethics Summit | Ukraine news

Crowds gather in St. Peter’s Square for the concert ‘Grace for the World,’ co-directed by Andrea Bocelli and Pharrell Williams, as part of the World Meeting on Human Fraternity aimed at promoting unity, in the Vatican, September 13, 2025. REUTERS/Ciro De Luca

According to CNN

A historic concert titled “Grace for the World” took place at the Vatican, bringing international pop stars together in St. Peter’s Square for the first time. The event featured John Legend, Teddy Swims, Karol G, and other stars, and was broadcast by CNN and ABC News. The concert was part of the Third World Meeting on Human Fraternity and was open to the public.

The program spanned a range of musical genres. Performers included Thai rapper Bambam of GOT7, Black Eyed Peas frontman Will.i.am, and American singer Pharrell Williams. Between performances, Vatican cardinals addressed the audience, calling on people to remain humane and to uphold mutual respect.

Key Moments of the Event

As part of the Third World Meeting on Human Fraternity, participants also discussed artificial intelligence and the ethical regulation of its use. Summit participants emphasized the need to establish international norms and governance systems for AI to ensure the safety of societies. Leading experts joined the discussion: Geoffrey Hinton, known as the “godfather of artificial intelligence”; Max Tegmark of the Massachusetts Institute of Technology; Jimena Sofía Viveros Álvarez; and Marco Trombetti, founder of Translated. Pope Leo XIV also took part and reaffirmed his predecessor’s call for a single agreement governing the use of artificial intelligence.

“to define local and international pathways for developing new forms of social charity and to see the image of God in the poor, refugees, and even adversaries.”

They also discussed the risk of a digital divide between countries with access to AI and those without it. Participants called for concrete local and international initiatives to develop new forms of social philanthropy and to support the most vulnerable members of society.

Pet Dog Joins Google’s Gemini AI Retro Photo Trend! Internet Can’t Get Enough | Viral Video | Viral

Beautiful retro pictures of people in breathtaking ethnic wear, posed in front of an aesthetically pleasing wall in golden-hour light, are currently all over social media. A new trend has arrived in ‘internet town,’ and it is spreading fast. For those not aware, netizens are using Google’s Gemini AI to create retro-style versions of themselves, and social media is now full of such pictures. However, when a pet dog joined the bandwagon, many instantly declared the furry one the winner, and for obvious reasons. The video showed the trend applied to the dog, and the result was simply heartwarming: the AI-generated pictures showed the pup draped in multiple dupattas, with ears that looked like the perfect hairstyle anyone could ask for in a pet. Most netizens loved the video, and some said they wanted to try the same with their own pets. Times Now could not confirm the authenticity of the post. Image Source: Jinnie Bhatt/Instagram

Morocco Signs Deal to Build National Responsible AI Platform

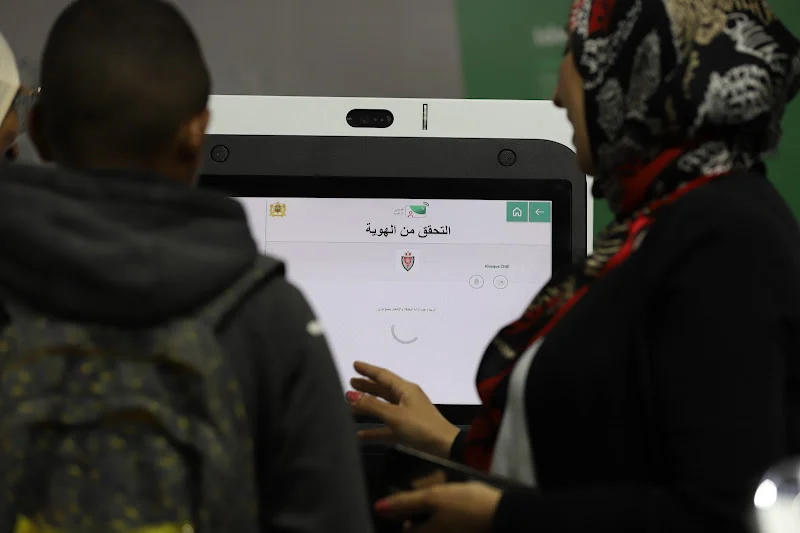

Morocco’s Ministry of Digital Transition and Administrative Reform signed an agreement Thursday with the National Commission for the Control of Personal Data Protection (CNDP) to develop a national platform for responsible artificial intelligence.

The deal, signed in Rabat by Minister Delegate Amale Falah and CNDP President Omar Seghrouchni, will guide the design of large language models tailored to Morocco’s language, culture, legal framework, and digital identity.

Officials said the initiative will provide citizens, businesses, and government agencies with safe generative AI tools that protect fundamental rights. The ministry called the agreement a “strategic step” toward AI sovereignty, ethics, and responsibility, positioning Morocco as a digital leader in Africa and globally.
