By Amy Miller (September 13, 2025, 00:43 GMT). California Gov. Gavin Newsom is facing a balancing act as more than a dozen bills aimed at regulating artificial intelligence tools in a wide range of settings head to his desk for approval. He could approve bills to push back on the Trump administration’s industry-friendly avoidance of AI regulation and make California a model for other states, or he could nix bills to please wealthy Silicon Valley companies and their lobbyists.

AI Insights

From Language Sovereignty to Ecological Stewardship – Intercontinental Cry

Last Updated on September 10, 2025

Artificial intelligence is often framed as a frontier that belongs to Silicon Valley, Beijing, or the halls of elite universities. Yet across the globe, Indigenous peoples are shaping AI in ways that reflect their own histories, values, and aspirations. These efforts are not simply about catching up with the latest technological wave—they are about protecting languages, reclaiming data sovereignty, and aligning computation with responsibilities to land and community.

From India’s tribal regions to the Māori homelands of Aotearoa New Zealand, Indigenous-led AI initiatives are emerging as powerful acts of cultural resilience and political assertion. They remind us that intelligence—whether artificial or human—must be grounded in relationship, reciprocity, and respect.

Giving Tribal Languages a Digital Voice

Just this week, researchers at IIIT Hyderabad, alongside IIT Delhi, BITS Pilani, and IIIT Naya Raipur, launched Adi Vaani, a suite of AI-powered tools designed for tribal languages such as Santali, Mundari, and Bhili.

At the heart of the project is a simple premise that technology should serve the people who need it most. Adi Vaani offers text-to-speech, translation, and optical character recognition (OCR) systems that allow speakers of marginalized languages to access education, healthcare, and public services in their mother tongues.

One of the project’s most promising outputs is a Gondi translator app that enables real-time communication between Gondi, Hindi, and English. For the nearly three million Gondi speakers who have long been excluded from India’s digital ecosystem, this tool is nothing less than transformative.

Speaking about the value of the app, research scholar Gopesh Kumar Bharti commented, “Like many tribal languages, Gondi faces several challenges due to its lack of representation in the official schedule, which hampers its preservation and development. The aim is to preserve and restore the Gondi language so that the next generation understands its cultural and historical significance.”

Latin America’s Open-Source Revolution

In Latin America, a similar wave of innovation is underway. Earlier this year, researchers at the Chilean National Center for Artificial Intelligence (CENIA) unveiled Latam-GPT, a free and open-source large language model trained not only on Spanish and Portuguese, but also incorporating Indigenous languages such as Mapuche, Rapanui, Guaraní, Nahuatl, and Quechua.

Unlike commercial AI systems that extract and commodify, Latam-GPT was designed with sovereignty and accessibility in mind.

To be successful, Latam-GPT needs to ensure the participation of “Indigenous peoples, migrant communities, and other historically marginalized groups in the model’s validation,” said Varinka Farren, chief executive officer of Hub APTA.

But as with most good things, it’s going to take time. Rodrigo Durán, CENIA’s general manager, told Rest of World that it will likely take at least a decade.

Māori Data Sovereignty: “Our Language, Our Algorithms”

Half a world away, the Māori broadcasting collective Te Hiku Media has become a global leader in Indigenous AI. In 2021, the organization released an automatic speech recognition (ASR) model for Te Reo Māori with an accuracy rate of 92%—outperforming international tech giants.

Their achievement was not the result of corporate investment or vast computing power, but of decades of community-led language revitalization. By combining archival recordings with new contributions from fluent speakers, Te Hiku demonstrated that Indigenous peoples can own not only their languages but also the algorithms that process them.

As co-director Peter-Lucas Jones explained, “In the digital world, data is like land. If we do not have control, governance, and ongoing guardianship of our data as indigenous people, we will be landless in the digital world, too.”

Indigenous Leadership at UNESCO

On the global policy front, leadership is also shifting. Earlier this year, UNESCO appointed Dr. Sonjharia Minz, an Oraon computer scientist from India’s Jharkhand state, as co-chair of the Indigenous Knowledge Research Governance and Rematriation program.

Her mandate is ambitious: to guide the development of AI-based systems that can securely store, share, and repatriate Indigenous cultural heritage. For communities who have seen their songs, rituals, and even sacred objects stolen and digitized without consent, this initiative signals a long-overdue turn toward justice.

As Dr. Minz told The Times of India, “We are on the brink of losing indigenous languages around the world. Indigenous languages are more than mere communication tools. They are repository of culture, knowledge and knowledge system. They are awaiting urgent attention for revitalization.”

AI and Environmental Co-Stewardship

Artificial intelligence is also being harnessed to care for the land and waters that sustain Indigenous peoples. In the Arctic, communities are blending traditional ecological knowledge with AI-driven satellite monitoring to guide adaptive mariculture practices—helping to ensure that changing seas still provide food for generations to come.

In the Pacific Northwest, Indigenous nations are deploying AI-powered sonar and video systems to monitor salmon runs, an effort vital not only to ecosystems but to cultural survival. Unlike conventional “black box” AI, these systems are validated by Indigenous experts, ensuring that machine predictions remain accountable to local governance and ecological ethics.

Such projects remind us that AI need not be extractive. It can be used to strengthen stewardship practices that have protected biodiversity for millennia.

The Hidden Toll of AI’s Appetite

As Indigenous communities lead the charge toward ethical and ecologically grounded AI, we must also confront the environmental realities underpinning the technology—especially the vast energy and water demands of large language models.

In Chile, the rapid proliferation of data centers—driven partly by AI demands—has sparked fierce opposition. Activists argue that facilities run by tech giants like Amazon, Google, and Microsoft exacerbate water scarcity in drought-stricken regions. As one local put it, “It’s turned into extractivism … We end up being everybody’s backyard.”

The energy hunger of LLMs compounds this strain further. According to researchers at MIT, training clusters for generative AI consume seven to eight times more energy than typical computing workloads, accelerating energy demands just as renewable capacity lags behind.

Globally, data centers consumed a staggering 460 terawatt-hours (TWh) of electricity in 2022, a scale comparable to the annual electricity use of an entire country such as France, and are projected to reach 1,050 TWh by 2026, which would place data centers among the top five global electricity users.
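For a rough sense of scale, the jump from 460 TWh to 1,050 TWh can be turned into an implied yearly growth rate. The sketch below assumes smooth compound growth over the four years from 2022 to 2026, which is a simplification that the projection itself does not state. The function name is illustrative only.

```python
def implied_annual_growth(start_twh: float, end_twh: float, years: int) -> float:
    """Compound annual growth rate that takes start_twh to end_twh over `years` years."""
    return (end_twh / start_twh) ** (1 / years) - 1


# Figures quoted in the article; the 4-year span (2022 to 2026) is an assumption.
growth_multiple = 1050 / 460
cagr = implied_annual_growth(460, 1050, 4)

print(f"Growth multiple: {growth_multiple:.2f}x")
print(f"Implied annual growth: {cagr:.1%}")
```

Under that assumption, the projection amounts to somewhat more than a doubling in four years.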

LLMs aren’t just energy-intensive; their environmental footprint also extends across their whole lifecycle. New modeling shows that inference—the use of pre-trained models—now contributes to more than half of total emissions. Meanwhile, Google’s own reporting suggests that AI operations have increased greenhouse gas emissions by roughly 48% over five years.

Communities hosting data centers often face additional challenges, including:

This environmental reckoning matters deeply to Indigenous-led AI initiatives—because AI should not replicate colonial patterns of extraction and dispossession. Instead, it must align with ecological reciprocity, sustainability, and respect for all forms of life.

Rethinking Intelligence

Together, these Indigenous-led initiatives compel us to rethink both what counts as intelligence and where AI should be heading. In the mainstream tech industry, intelligence is measured by processing power, speed, and predictive accuracy. But for Indigenous nations, intelligence is relational: it lives in languages that carry ancestral memory and in stories that guide communities toward balance and responsibility.

When these values shape artificial intelligence, the results look radically different from today’s extractive systems. AI becomes a tool for reciprocity instead of extraction. In other words, it becomes less about dominating the future and more about sustaining the conditions for life itself.

This vision matters because the current trajectory of AI, an arms race of ever-larger models, resource-hungry data centers, and escalating ecological costs, cannot be sustained.

The challenge is no longer technical but political and ethical. Will governments, institutions, and corporations make space for Indigenous leadership to shape AI’s future? Or will they repeat the same old colonial logics of extraction and exclusion? Time will tell.


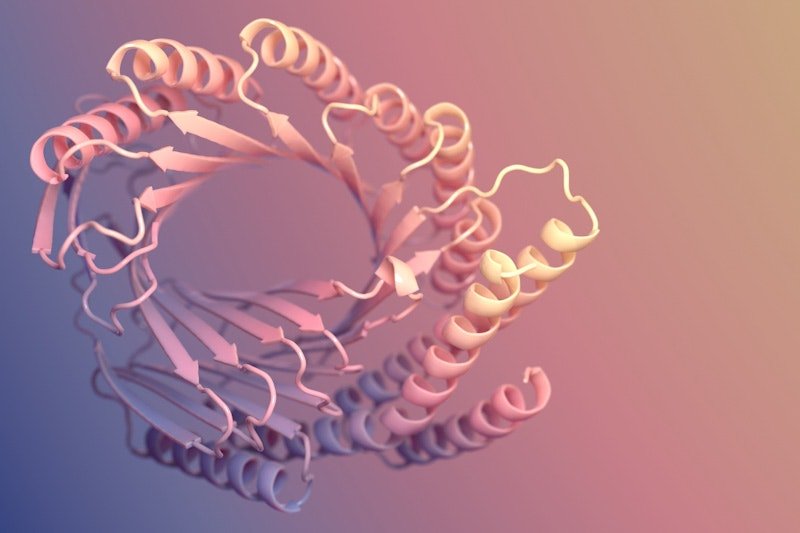

UW lab spinoff focused on AI-enabled protein design cancer treatments

A Seattle startup has inked a deal with Eli Lilly to develop AI-powered cancer treatments. The team at Lila Biologics says they’re pioneering the translation of AI-designed proteins for therapeutic applications. Anindya Roy is the company’s co-founder and chief scientist. He told KUOW’s Paige Browning about their work.

This interview has been edited for clarity.

Paige Browning: Tell us about Lila Biologics. You spun out of UW Professor David Baker’s protein design lab. What’s Lila’s origin story?

Anindya Roy: I moved to David Baker’s group as a postdoctoral scientist, where I was working on some of the molecules that we are currently developing at Lila. It is an absolutely fantastic place to work. It was one of the coolest experiences of my career.

The Institute for Protein Design has a program called the Translational Investigator Program, which incubates promising technologies before it spins them out. I was part of that program for four or five years where I was generating some of the translational data. I met Jake Kraft, the CEO of Lila Biologics, at IPD, and we decided to team up in 2023 to spin out Lila.

You got a huge boost recently, a collaboration with Eli Lilly, one of the world’s largest pharmaceutical companies. What are you hoping to achieve together, and what’s your timeline?

The current collaboration is one year, and then there are other targets that we can work on. We are really excited to be partnering with Lilly, mainly because, as you mentioned, it is one of the top pharma companies in the US. We are excited to learn from each other, as well as leverage their amazing clinical developmental team to actually develop medicine for patients who don’t have that many options currently.

You are using artificial intelligence and machine learning to create cancer treatments. What exactly are you developing?

Lila Biologics is a pre-clinical stage company. We use machine learning to design novel drugs. We have mainly two different interests. One is to develop targeted radiotherapy to treat solid tumors, and the second is developing long acting injectables for lung and heart diseases. What I mean by long acting injectables is something that you take every three or six months.

Tell me a little bit more about the type of tumors that you are focusing on.

We have a wide variety of solid tumors that we are going for, lung cancer, ovarian cancer, and pancreatic cancer. That’s something that we are really focused on.

And tell me a little bit about the partnership you have with Eli Lilly. What are you creating there when it comes to cancers?

The collaboration is mainly centered around targeted radiotherapy for treating solid tumors, and it’s a multi-target research collaboration. Lila Biologics is responsible for giving Lilly a development candidate, which is basically an optimized drug molecule that is ready for FDA filing. After we give them the optimized molecule, Lilly will take over clinical development and take those molecules through clinical trials.

Why use AI for this? What edge is that giving you, or what opportunities does it have that human intelligence can’t accomplish?

In the last couple of years, artificial intelligence has fundamentally changed how we actually design proteins. For example, in the last five years, the success rate of designing proteins on the computer has gone from around 1% to 2% to 10% or more. With that unprecedented success rate, we do believe we can bring a lot of needed drugs to patients, especially for cancer and cardiovascular diseases.

In general, drug design is a very, very difficult problem, and it has really, really high failure rates. So, for example, 90% of the drugs that actually enter the clinic fail, mainly because you cannot make them at scale or because of toxicity issues. When we first started Lila, we thought we could take a holistic approach, where we can actually include some of this downstream risk in the computational design part. So, we asked, can machine learning help us design proteins that scale well? Meaning, can we make them at large scale, and are they stable on the benchtop for months, so we don’t face those costly downstream failures? And so far, it’s looking really promising.

When did you realize you might be able to use machine learning and AI to treat cancer?

When we actually looked at this problem, we were thinking about whether we can actually increase the clinical success rate. That has been one of the main bottlenecks of drug design. As I mentioned before, 90% of the drugs that actually enter the clinic fail. So, we are really hoping we can actually change that in the next five to 10 years, where you can actually confidently predict the clinical properties of a molecule. In other words, what I’m asking is: can you predict how a molecule will behave in a living system? And if we can do that confidently, that will increase the success rate of drug development. And we are really optimistic, and we’ll see how it turns out in the next five to 10 years.

Beyond treating hard to tackle tumors at Lila, are there other challenges you hope to take on in the future?

Yeah. It is a really difficult problem to predict how a molecule will behave in a living system. Meaning, we are really good at designing molecules that behave in a certain way, bind to a protein in a certain way, but the moment you try to put that molecule in a human, it’s really hard to predict how that molecule will behave, or whether the molecule is going to the place of the disease, or the tissue of the disease. And that is one of the main reasons there is a 90% failure in drug development.

I think the whole field is moving towards this predictability of biological properties of a molecule, where you can actually predict how this molecule will behave in a human system, or how long it will stay in the body. I think when the computational tools become good enough, when we can predict these properties really well, I think that’s where the fun begins, and we can actually generate molecules with a really high success rate in a really short period of time.



California governor facing balancing act as AI bills head to his desk | MLex



Prediction: This Artificial Intelligence (AI) Player Could Be the Next Palantir in the 2030s

Becoming the next Palantir is a tough job.

Palantir (NASDAQ: PLTR) has already shown what it takes to be a successful enterprise artificial intelligence (AI) player: Become the core platform for customers to build their AI applications on, rapidly turn pilot projects into production-level deployments, cross-sell and upsell to existing clients, and focus on new client acquisition across industries and new verticals.


Innodata (INOD) is much smaller, but it seems to be on a similar growth trajectory. The company is moving beyond traditional data services and is now becoming an AI partner focused on the data and evaluation layer in the enterprise AI stack, something that Palantir is not focusing on.

Financial performance

Palantir’s second-quarter fiscal 2025 (ending June 30) earnings performance underscores the success of this business model. Revenues grew 48% year over year to over $1 billion, with U.S. commercial and U.S. government revenues soaring year over year by 93% and 53%, respectively. The company’s Rule of 40 score increased 11 percentage points sequentially to 94. Management raised its fiscal 2025 revenue guidance and ended Q2 with total contract value (TCV) of $2.3 billion.
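The Rule of 40 is commonly computed as revenue growth plus a profit margin, both in percentage points, and Palantir reports it using adjusted operating margin. As a back-of-the-envelope check, the reported score and growth rate imply the margin behind the figure. The function names below are illustrative and not taken from any company disclosure.

```python
def rule_of_40(revenue_growth_pct: float, margin_pct: float) -> float:
    """Rule of 40 score: revenue growth plus profit margin, in percentage points."""
    return revenue_growth_pct + margin_pct


def implied_margin(score: float, revenue_growth_pct: float) -> float:
    """Back out the margin implied by a reported score and growth rate."""
    return score - revenue_growth_pct


# Figures quoted in the article for Palantir's second quarter of 2025.
reported_score = 94
revenue_growth = 48

margin = implied_margin(reported_score, revenue_growth)
print(f"Implied margin: {margin} percentage points")
print(f"Clears the 40 threshold: {rule_of_40(revenue_growth, margin) >= 40}")
```

A score above 40 is the conventional bar for a healthy balance of growth and profitability in a software business.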

Innodata’s Q2 of fiscal 2025 (ending June 30) performance was also stellar. Revenues grew 79% year over year to $58.4 million, while adjusted EBITDA increased 375% to $13.2 million. Management raised full-year organic growth guidance to 45% or more, driven by a robust project pipeline, with several projects from large customers.

Data vendor to AI partner

Palantir differs from other AI giants by focusing not on large language models, but on its ability to leverage AI capabilities to resolve real-world problems. The company’s focus on ontology — a framework relating the company’s real assets to digital assets — helps its software properly understand context to deliver effective results.

Innodata also seems to be implementing a similar strategy. Instead of focusing on traditional data and workflows, it is providing “smart data,” or high-quality complex training data, to improve accuracy, safety, coherence, and reasoning in AI models of enterprise clients. It is also working closely with big technology customers to test models, find performance gaps, and deliver the data and evaluation needed to raise model performance. That shift will help Innodata’s offerings become entrenched in their clients’ ecosystems, thereby strengthening pricing power and creating a sticky customer base.

Vendor neutrality

Palantir has not built any proprietary foundational model. Plus, its Foundry and artificial intelligence platform (AIP) can run on any cloud and can be integrated with multiple large language models. By giving its clients the flexibility to choose their preferred cloud infrastructure and AI models, the company prevents vendor lock-in. This vendor neutrality has helped build trust among both government and commercial clients.

Innodata’s vendor-neutral stance is also becoming a competitive advantage. In its Q2 earnings call, an analyst noted that several big technology companies have said they would no longer work with Innodata’s largest competitor, Scale AI, after Meta Platforms’ large investment in the company. This is creating new opportunities for Innodata. Because it isn’t tied to any single platform, there is no conflict of interest involved in working with Innodata. This gives enterprises and hyperscalers confidence that their proprietary data and model development efforts will not be compromised.

Scaling efforts

Palantir’s business is seeing rapid traction, driven primarily by high-value clients. The company closed 157 deals worth $1 million or more, of which 42 deals were worth $10 million or more.

Innodata is scaling up revenues while also focusing on profitability. Management highlighted that it has won several new projects from its largest customer. The company has also expanded revenues from another big technology client, from $200,000 over the past year to an expected $10 million in the second half of 2025. Innodata’s adjusted EBITDA margins were 23% in the second quarter, up from 9% the same quarter of the prior year.
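The margin figure can be cross-checked against the second-quarter revenue and adjusted EBITDA quoted earlier in the article. This is a minimal sketch using only those two reported numbers; the function name is illustrative.

```python
def margin_pct(profit_musd: float, revenue_musd: float) -> float:
    """Profit as a percentage of revenue (both in millions of dollars)."""
    return 100 * profit_musd / revenue_musd


# Innodata Q2 2025 figures quoted in the article, in millions of dollars.
adjusted_ebitda = 13.2
revenue = 58.4

q2_margin = margin_pct(adjusted_ebitda, revenue)
print(f"Adjusted EBITDA margin: {q2_margin:.1f}%")
```

The result rounds to the 23% margin cited above.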

Agentic AI

Palantir has been focusing on the agentic AI opportunity by investing in its AI Forward Deployed Engineer (AI FDE) capability within AIP. AI FDE is expected to solve bigger and more complex problems for clients by autonomously executing a wide array of tasks, including building and changing ontology, building data flows, writing functions, fixing errors, and building applications. It also works in collaboration with humans and can help clients get results faster. Palantir is thus progressing toward developing AI systems that can plan, act, and improve inside enterprise setups.

Innodata is also advancing its agentic AI capabilities by helping enterprises build and manage AI that can act autonomously. The company aims to provide simulation training data to show how humans solve complex problems, and advanced trust and safety monitoring to guide these systems. Agentic AI is also expected to help the robotics field progress rapidly, and AI systems will run on edge devices used in daily life. Hence, Innodata plans to invest more in building data and evaluation services for these agentic AI and robotics projects, which it expects could become a market even larger than today’s post-training data work.

Valuation

Despite its many strengths, Innodata is still very much in the early stages of its AI journey. Shares have gained more than 315% in the last year. Yet, with a market cap of about $1.9 billion and trading at nearly 8.2 times sales, Innodata is priced like a data services company making inroads in the AI market, and not like an AI platform company with a significant competitive moat. Palantir stock, on the other hand, is expensive and trades at closer to 114 times sales. This shows how Wall Street rewards a category leader like Palantir, whose offerings act as an operating layer for enterprise AI companies.
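The valuation gap can be made concrete with simple arithmetic. A price-to-sales multiple is market capitalization divided by sales, so the quoted market cap and multiple imply a sales base. The sketch below uses only the rounded figures from the article, so its outputs are approximations; the function name is illustrative.

```python
def implied_sales(market_cap_usd: float, price_to_sales: float) -> float:
    """Sales implied by a market cap and a price-to-sales multiple."""
    return market_cap_usd / price_to_sales


# Rounded figures quoted in the article.
innodata_market_cap = 1.9e9
innodata_ps = 8.2
palantir_ps = 114

innodata_sales = implied_sales(innodata_market_cap, innodata_ps)
multiple_gap = palantir_ps / innodata_ps

print(f"Innodata implied sales: ${innodata_sales / 1e6:.0f} million")
print(f"Palantir's multiple is about {multiple_gap:.1f}x Innodata's")
```

Put differently, each dollar of Palantir sales is currently valued at roughly an order of magnitude more than a dollar of Innodata sales.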

Innodata also needs to dominate the AI performance market to reach such sky-high valuations. The company will need to expand its customer base, cross-sell and upsell to existing clients, and make it difficult to switch to the competition.

While this involves significant execution risk, there is definitely a chance — albeit a small one — that Innodata can become the next Palantir in the 2030s.
