AI Research

How London Stock Exchange Group is detecting market abuse with their AI-powered Surveillance Guide on Amazon Bedrock

London Stock Exchange Group (LSEG) is a global provider of financial markets data and infrastructure. It operates the London Stock Exchange and manages international equity, fixed income, and derivative markets. The group also develops capital markets software, offers real-time and reference data products, and provides extensive post-trade services. This post was co-authored with Charles Kellaway and Rasika Withanawasam of LSEG.

Financial markets are remarkably complex, hosting increasingly dynamic investment strategies across new asset classes and interconnected venues. Accordingly, regulators place great emphasis on the ability of market surveillance teams to keep pace with evolving risk profiles. However, the landscape is vast; London Stock Exchange alone facilitates the trading and reporting of over £1 trillion of securities by 400 members annually. Effective monitoring must cover all MiFID asset classes, markets, and jurisdictions to detect market abuse, while also giving weight to participant relationships, and market surveillance systems must scale with volumes and volatility. As a result, many systems are outdated, fall short of regulatory expectations, and require time-consuming manual work.

To address these challenges, London Stock Exchange Group (LSEG) has developed an innovative solution using Amazon Bedrock, a fully managed service that offers a choice of high-performing foundation models from leading AI companies, to automate and enhance their market surveillance capabilities. LSEG’s AI-powered Surveillance Guide helps analysts efficiently review trades flagged for potential market abuse by automatically analyzing news sensitivity and its impact on market behavior.

In this post, we explore how LSEG used Amazon Bedrock and Anthropic’s Claude foundation models to build an automated system that significantly improves the efficiency and accuracy of market surveillance operations.

The challenge

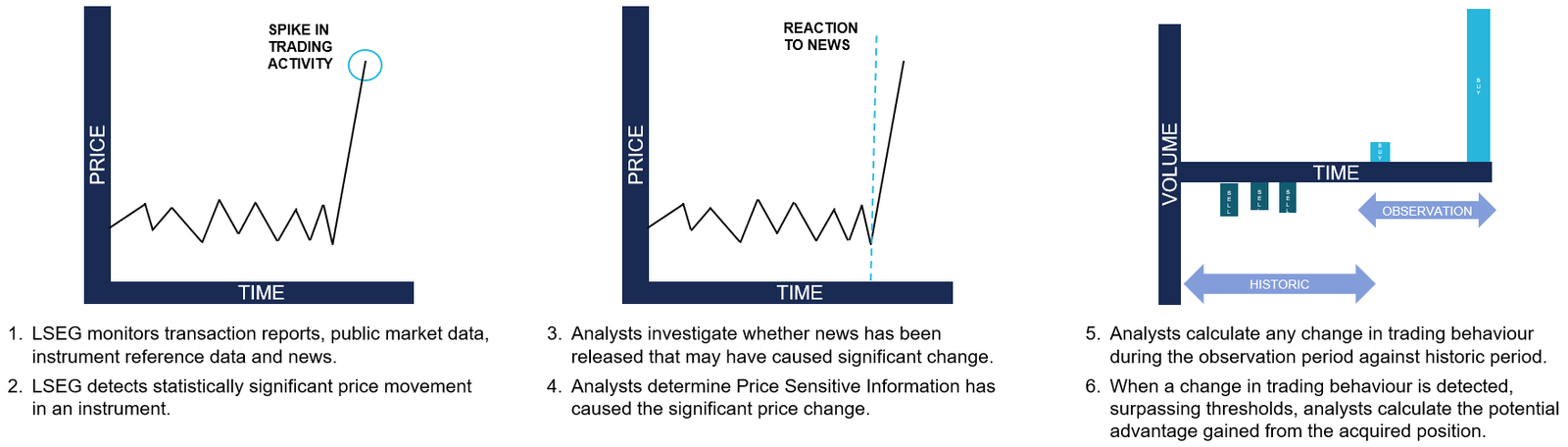

Currently, LSEG’s surveillance monitoring systems generate automated, customized alerts to flag suspicious trading activity to the Market Supervision team. Analysts then conduct initial triage assessments to determine whether the activity warrants further investigation, which might require differing levels of qualitative analysis. This could involve manually collating any evidence that might be relevant when methodically cross-referencing regulation, news, sentiment, and trading activity. For example, during an insider dealing investigation, analysts are alerted to statistically significant price movements. The analyst must then conduct an initial assessment of related news during the observation period to determine whether the highlighted price move was caused by specific news and how price sensitive that news is likely to be, as shown in the following figure. This initial assessment of the presence, or absence, of price-sensitive news guides the analyst’s subsequent actions on a possible case of market abuse.

Initial triaging can be a time-consuming and resource-intensive process and still necessitate a full investigation if the identified behavior remains potentially suspicious or abusive.

Moreover, the dynamic nature of financial markets and the evolving tactics and sophistication of bad actors demand that market facilitators revisit automated rules-based surveillance systems. The increasing frequency of alerts and the high number of false positives limit the time analysts can devote to the most meaningful cases, and the resulting strain on resources could cause operational delays.

Solution overview

To address these challenges, LSEG collaborated with AWS to improve insider dealing detection, developing a generative AI prototype that automatically predicts the probability of news articles being price sensitive. The system uses Anthropic’s Claude Sonnet 3.5 model, which offered the best price-performance at the time, through Amazon Bedrock to analyze news content from LSEG’s Regulatory News Service (RNS) and classify articles based on their potential market impact. The results help analysts determine more quickly whether highlighted trading activity can be mitigated during the observation period.

The architecture consists of three main components:

- A data ingestion and preprocessing pipeline for RNS articles

- Amazon Bedrock integration for news analysis using Claude Sonnet 3.5

- Inference application for visualising results and predictions

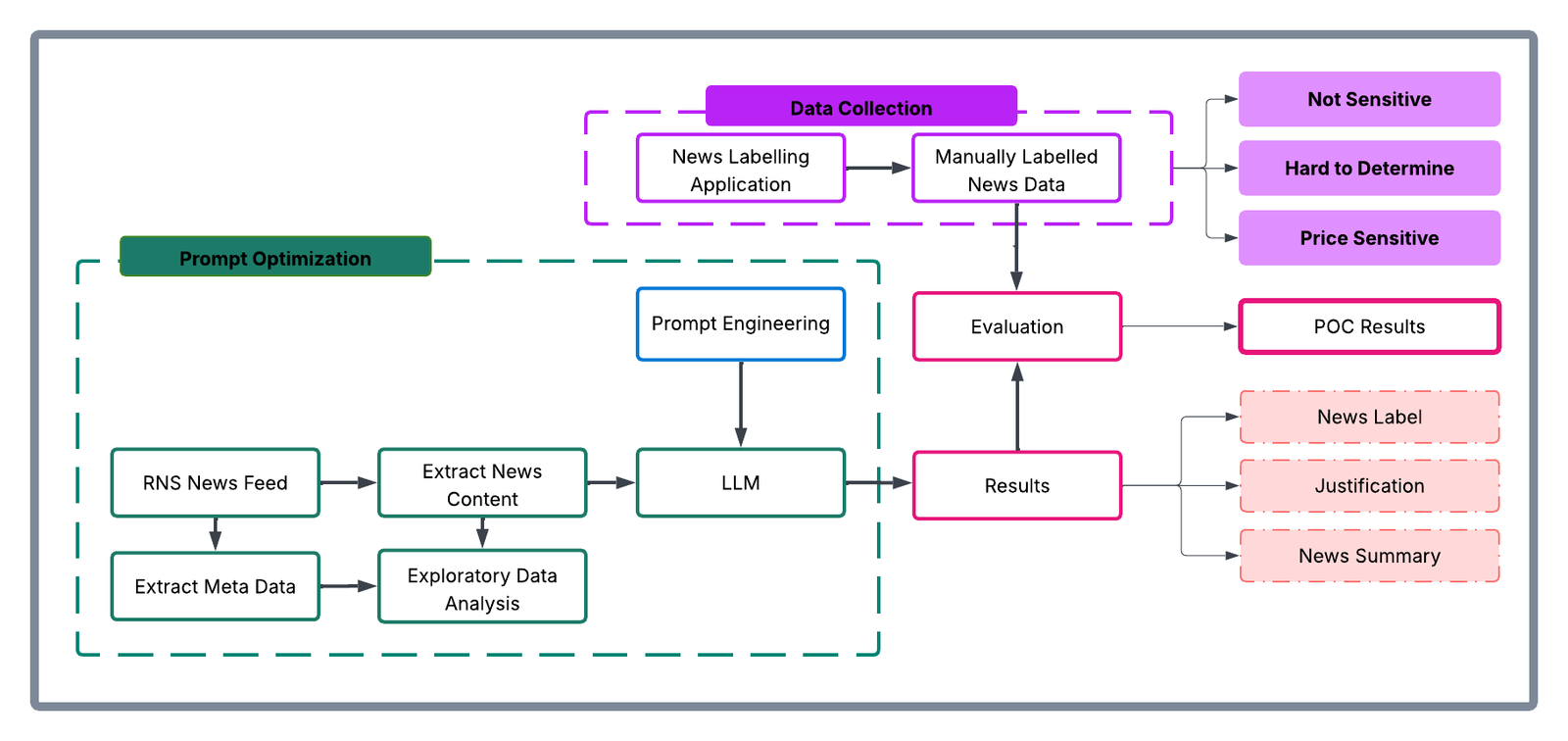

The following diagram illustrates the conceptual approach:

The workflow processes news articles through the following steps:

- Ingest raw RNS news documents in HTML format

- Preprocess and extract clean news text

- Fill the classification prompt template with text from the news documents

- Prompt Anthropic’s Claude Sonnet 3.5 through Amazon Bedrock

- Receive and process model predictions and justifications

- Present results through the visualization interface developed using Streamlit
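The first steps of this workflow, cleaning an RNS HTML document and filling the classification prompt template, can be sketched with the Python standard library alone. This is a minimal illustration under stated assumptions, not LSEG’s pipeline: the post doesn’t show its preprocessing code, and the template wording and the `clean_rns_html` and `build_prompt` names are hypothetical.

```python
from html.parser import HTMLParser


class _TextExtractor(HTMLParser):
    """Collects visible text nodes, skipping <script> and <style> contents."""

    def __init__(self):
        super().__init__()  # convert_charrefs=True by default, so &amp; becomes &
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Keep non-empty text that is not inside a script or style element.
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def clean_rns_html(raw_html: str) -> str:
    """Strip markup from a raw RNS HTML document and return plain text."""
    parser = _TextExtractor()
    parser.feed(raw_html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical template text; the post shows LSEG's real prompt only as an image.
PROMPT_TEMPLATE = (
    "You are a market surveillance analyst. Read the news article below and "
    "return a concise summary, a price sensitivity classification, and your "
    "reasoning.\n\n<article>\n{article}\n</article>"
)


def build_prompt(raw_html: str) -> str:
    """Clean one RNS document and fill the classification prompt template."""
    return PROMPT_TEMPLATE.format(article=clean_rns_html(raw_html))
```

A production pipeline would likely use a more robust HTML library and preserve paragraph breaks; joining text nodes with single spaces keeps the sketch short.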

Methodology

The team collated a comprehensive dataset of approximately 250,000 RNS articles spanning 6 consecutive months of trading activity in 2023. The raw data, HTML documents from RNS, was first preprocessed within the AWS environment by removing extraneous HTML elements and formatting the documents to extract clean textual content. Having isolated the substantive news content, the team then carried out exploratory data analysis to understand distribution patterns within the RNS corpus, focusing on three dimensions:

- News categories: Distribution of articles across different regulatory categories

- Instruments: Financial instruments referenced in the news articles

- Article length: Statistical distribution of document sizes
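An exploratory pass over these three dimensions reduces to counting and simple descriptive statistics. The sketch below assumes each cleaned article is a dict with hypothetical keys `category`, `instruments` (a list of identifiers), and `text`, and measures length in whitespace-separated words; the post doesn’t say which length unit or record layout LSEG used.

```python
from collections import Counter
from statistics import mean, median


def summarise_corpus(articles):
    """Summarise an RNS corpus by category, instrument, and article length.

    Each article is assumed to be a dict with the hypothetical keys
    'category', 'instruments' (a list of identifiers), and 'text'.
    """
    categories = Counter(a["category"] for a in articles)
    # An article can mention several instruments, so count every mention.
    instruments = Counter(i for a in articles for i in a["instruments"])
    lengths = [len(a["text"].split()) for a in articles]  # word counts
    return {
        "categories": categories,
        "instruments": instruments,
        "length": {
            "min": min(lengths),
            "median": median(lengths),
            "mean": mean(lengths),
            "max": max(lengths),
        },
    }
```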

Exploration provided contextual understanding of the news landscape and informed the sampling strategy for creating a representative evaluation dataset. A total of 110 articles were selected to cover the major news categories, and this curated subset was presented to market surveillance analysts who, as domain experts, rated each article’s price sensitivity on a nine-point scale, as shown in the following image:

- 1–3: PRICE_NOT_SENSITIVE – Low probability of price sensitivity

- 4–6: HARD_TO_DETERMINE – Uncertain price sensitivity

- 7–9: PRICE_SENSITIVE – High probability of price sensitivity
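The nine-point scale collapses into three labels in fixed bands of three. A small helper like the following (a hypothetical name, shown only to make the banding explicit) is one way to turn analyst scores into the labels used for evaluation:

```python
def label_from_score(score: int) -> str:
    """Map an analyst's 1-9 price-sensitivity score to its label band."""
    if not 1 <= score <= 9:
        raise ValueError(f"score must be between 1 and 9, got {score}")
    if score <= 3:
        return "PRICE_NOT_SENSITIVE"
    if score <= 6:
        return "HARD_TO_DETERMINE"
    return "PRICE_SENSITIVE"
```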

The experiment was executed within Amazon SageMaker using Jupyter Notebooks as the development environment. The technical stack consisted of:

- Instructor library: Provided integration capabilities with Anthropic’s Claude Sonnet 3.5 model in Amazon Bedrock

- Amazon Bedrock: Served as the API infrastructure for model access

- Custom data processing pipelines (Python): For data ingestion and preprocessing

This infrastructure enabled systematic experimentation with various algorithmic approaches, including traditional supervised learning methods, prompt engineering with foundation models, and fine-tuning scenarios.
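The post reports using the Instructor library to call Claude through Amazon Bedrock. As a lower-level alternative (a swapped-in approach, not the one LSEG describes), a single prompt can be sent with the boto3 `bedrock-runtime` client’s Converse API. The sketch takes the client as a parameter so it can be exercised without AWS credentials. The model ID is the Bedrock identifier for the June 2024 Claude 3.5 Sonnet release, and the inference settings and the `invoke_claude` name are illustrative assumptions.

```python
# Bedrock model ID for Claude 3.5 Sonnet (June 2024); check availability in your Region.
MODEL_ID = "anthropic.claude-3-5-sonnet-20240620-v1:0"


def invoke_claude(client, prompt: str, model_id: str = MODEL_ID) -> str:
    """Send one filled prompt through the Bedrock Converse API and return the reply text.

    `client` is expected to be a boto3 "bedrock-runtime" client. It is passed in
    rather than created here so the function can be tested without AWS access.
    """
    response = client.converse(
        modelId=model_id,
        messages=[{"role": "user", "content": [{"text": prompt}]}],
        # Illustrative settings: temperature 0 reduces run-to-run variation.
        inferenceConfig={"maxTokens": 1024, "temperature": 0.0},
    )
    # The assistant reply arrives as a list of content blocks; join the text ones.
    blocks = response["output"]["message"]["content"]
    return "".join(block.get("text", "") for block in blocks)
```

In practice the client would be created with something like `boto3.client("bedrock-runtime", region_name="us-east-1")`; the Region is an assumption, and model availability varies by Region.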

The evaluation framework established specific technical success metrics:

- Data pipeline implementation: Successful ingestion and preprocessing of RNS data

- Metric definition: Clear articulation of precision, recall, and F1 metrics

- Workflow completion: Execution of comprehensive exploratory data analysis (EDA) and experimental workflows
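For a single class treated as positive, precision is TP / (TP + FP), recall is TP / (TP + FN), and F1 is their harmonic mean. A minimal, dependency-free sketch of these per-class metrics (a library such as scikit-learn would normally be used instead) is:

```python
def per_class_metrics(y_true, y_pred, label):
    """Precision, recall, and F1 for one class, treating `label` as the positive class."""
    if len(y_true) != len(y_pred):
        raise ValueError("y_true and y_pred must be the same length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == label and p == label)  # correctly flagged
    fp = sum(1 for t, p in pairs if t != label and p == label)  # wrongly flagged
    fn = sum(1 for t, p in pairs if t == label and p != label)  # missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```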

The analytical approach was a two-step classification process, as shown in the following figure:

- Step 1: Classify news articles as potentially price sensitive or other

- Step 2: Classify news articles as potentially price not sensitive or other

This multi-stage architecture was designed to maximize classification accuracy by allowing analysts to focus on specific aspects of price sensitivity at each stage. The results from each step were then merged to produce the final output, which was compared with the human-labeled dataset to generate quantitative results.

To consolidate the results from both classification steps, the data merging rules followed were:

| Step 1 Classification | Step 2 Classification | Final Classification |
|---|---|---|
| Sensitive | Other | Sensitive |
| Other | Non-sensitive | Non-sensitive |
| Other | Other | Ambiguous (requires manual review, i.e., Hard to Determine) |
| Sensitive | Non-sensitive | Ambiguous (requires manual review, i.e., Hard to Determine) |
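Because the merge rules form a complete lookup over the two steps’ outputs, they can be encoded directly as a table. The sketch below uses the label strings from the merge table and a hypothetical `merge_steps` name, and raises on any combination the table doesn’t cover rather than guessing.

```python
# Final label for each (step 1, step 2) pair, taken from the merge table.
MERGE_RULES = {
    ("Sensitive", "Other"): "Sensitive",
    ("Other", "Non-sensitive"): "Non-sensitive",
    ("Other", "Other"): "Hard to Determine",  # ambiguous: manual review
    ("Sensitive", "Non-sensitive"): "Hard to Determine",  # conflicting: manual review
}


def merge_steps(step1: str, step2: str) -> str:
    """Combine the two classification passes into one final label."""
    try:
        return MERGE_RULES[(step1, step2)]
    except KeyError:
        raise ValueError(f"unexpected labels: step1={step1!r}, step2={step2!r}") from None
```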

Based on the insights gathered, prompts were optimized. The prompt templates elicited three key components from the model:

- A concise summary of the news article

- A price sensitivity classification

- A chain-of-thought explanation justifying the classification decision
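In the original stack, Instructor’s role is to turn the model’s reply into a validated, typed object. A dependency-free approximation is sketched below. It assumes the prompt asks Claude to reply with a JSON object whose keys are `summary`, `classification`, and `reasoning` (hypothetical field names), and that the caller passes the labels allowed for the current step.

```python
import json
from dataclasses import dataclass

REQUIRED_FIELDS = {"summary", "classification", "reasoning"}


@dataclass(frozen=True)
class NewsAssessment:
    summary: str
    classification: str
    reasoning: str  # the model's chain-of-thought justification


def parse_assessment(raw: str, allowed_labels) -> NewsAssessment:
    """Validate a JSON reply from the model and return a typed assessment."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) on bad JSON
    if not isinstance(data, dict):
        raise ValueError("model response must be a JSON object")
    missing = REQUIRED_FIELDS - set(data)
    if missing:
        raise ValueError(f"model response missing fields: {sorted(missing)}")
    if data["classification"] not in allowed_labels:
        raise ValueError(f"unexpected classification: {data['classification']!r}")
    return NewsAssessment(data["summary"], data["classification"], data["reasoning"])
```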

The following is an example prompt:

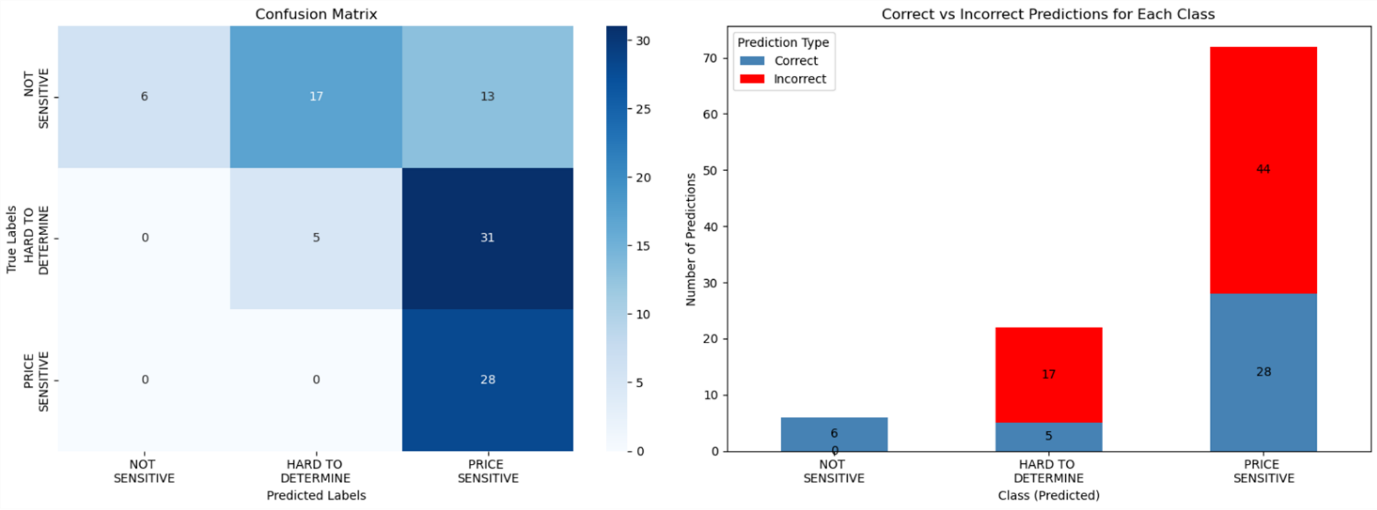

As shown in the following figure, the solution was optimized to maximize:

- Precision for the NOT SENSITIVE class

- Recall for the PRICE SENSITIVE class

This optimization strategy was deliberate, facilitating high confidence in non-sensitive classifications to reduce unnecessary escalations to human analysts (in other words, to reduce false positives). Through this methodical approach, prompts were iteratively refined while maintaining rigorous evaluation standards through comparison against the expert-annotated baseline data.

Key benefits and results

Over a 6-week period, Surveillance Guide demonstrated remarkable accuracy when evaluated on a representative sample dataset. Key achievements include the following:

- 100% precision in identifying non-sensitive news: the 6 articles assigned to this category were all confirmed by analysts as not price sensitive

- 100% recall in detecting price-sensitive content: all 36 hard-to-determine and 28 price-sensitive articles, as labelled by analysts, were assigned to one of these two categories (price-sensitive content was never misclassified)

- Automated analysis of complex financial news

- Detailed justifications for classification decisions

- Effective triaging of results by sensitivity level
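The two headline figures treat analyst-labelled hard-to-determine and price-sensitive articles together as content that should reach an analyst. Assuming the model’s final outputs are mapped onto the same three labels the analysts used, a minimal sketch of computing both figures (with a hypothetical `surveillance_targets` name) is:

```python
ESCALATE = {"HARD_TO_DETERMINE", "PRICE_SENSITIVE"}


def surveillance_targets(y_true, y_pred):
    """Return (precision of PRICE_NOT_SENSITIVE, recall of escalated articles)."""
    pairs = list(zip(y_true, y_pred))
    # Precision: of the articles predicted not sensitive, how many truly were?
    predicted_not = [t for t, p in pairs if p == "PRICE_NOT_SENSITIVE"]
    precision_not = (
        sum(t == "PRICE_NOT_SENSITIVE" for t in predicted_not) / len(predicted_not)
        if predicted_not else 0.0
    )
    # Recall: of the articles analysts would escalate, how many did the model escalate?
    truly_escalate = [p for t, p in pairs if t in ESCALATE]
    recall_escalate = (
        sum(p in ESCALATE for p in truly_escalate) / len(truly_escalate)
        if truly_escalate else 0.0
    )
    return precision_not, recall_escalate
```

With only these two groups, both figures reach 100% under the same condition: no article the analysts labelled hard to determine or price sensitive is predicted as not sensitive.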

In this implementation, LSEG uses Amazon Bedrock for secure, scalable access to foundation models through a unified API, minimizing the need for direct model management and reducing operational complexity. Because Amazon Bedrock is serverless, model inference capacity can scale dynamically with news volume while maintaining consistent performance during market-critical periods. Its built-in monitoring and governance features support reliable model performance and maintain audit trails for regulatory compliance.

Impact on market surveillance

This AI-powered solution transforms market surveillance operations by:

- Reducing manual review time for analysts

- Improving consistency in price-sensitivity assessment

- Providing detailed audit trails through automated justifications

- Enabling faster response to potential market abuse cases

- Scaling surveillance capabilities without proportional resource increases

The system’s ability to process news articles quickly and provide detailed justifications helps analysts focus their attention on the most critical cases while maintaining comprehensive market oversight.

Proposed next steps

LSEG plans first to enhance the solution for internal use by:

- Integrating additional data sources, including company financials and market data

- Implementing few-shot prompting and fine-tuning capabilities

- Expanding the evaluation dataset for continued accuracy improvements

- Deploying in live environments alongside manual processes for validation

- Adapting to additional market abuse typologies

Conclusion

LSEG’s Surveillance Guide demonstrates how generative AI can transform market surveillance operations. Powered by Amazon Bedrock, the solution improves efficiency and enhances the quality and consistency of market abuse detection.

As financial markets continue to evolve, AI-powered solutions architected along similar lines will become increasingly important for maintaining integrity and compliance. AWS and LSEG are intent on being at the forefront of this change.

The selection of Amazon Bedrock as the foundation model service provides LSEG with the flexibility to iterate on their solution while maintaining enterprise-grade security and scalability. To learn more about building similar solutions with Amazon Bedrock, visit the Amazon Bedrock documentation or explore other financial services use cases in the AWS Financial Services Blog.

About the authors

Charles Kellaway is a Senior Manager in the Equities Trading team at LSE plc, based in London. With a background spanning both Equity and Insurance markets, Charles specialises in deep market research and business strategy, with a focus on deploying technology to unlock liquidity and drive operational efficiency. His work bridges the gap between finance and engineering, and he always brings a cross-functional perspective to solving complex challenges.

Rasika Withanawasam is a seasoned technology leader with over two decades of experience architecting and developing mission-critical, scalable, low-latency software solutions. Rasika’s core expertise lies in big data and machine learning applications, focusing intently on FinTech and RegTech sectors. He has held several pivotal roles at LSEG, including Chief Product Architect for the flagship Millennium Surveillance and Millennium Analytics platforms, and currently serves as Manager of the Quantitative Surveillance & Technology team, where he leads AI/ML solution development.

Richard Chester is a Principal Solutions Architect at AWS, advising large Financial Services organisations. He has 25+ years’ experience across the Financial Services Industry where he has held leadership roles in transformation programs, DevOps engineering, and Development Tooling. Since moving across to AWS from being a customer, Richard is now focused on driving the execution of strategic initiatives, mitigating risks and tackling complex technical challenges for AWS customers.

California, New York could become first states to enact laws aiming to prevent catastrophic AI harm

This story was originally published by Stateline.

California and New York could become the first states to establish rules aiming to prevent the most advanced, large-scale artificial intelligence models — known as frontier AI models — from causing catastrophic harm involving dozens of casualties or billion-dollar damages.

The bill in California, which passed the state Senate earlier this year, would require large developers of frontier AI systems to implement and disclose certain safety protocols used by the company to mitigate the risk of incidents contributing to the deaths of 50 or more people or damages amounting to more than $1 billion.

The bill, which is under consideration in the state Assembly, would also require developers to create a frontier AI framework that includes best practices for using the models. Developers would have to publish a transparency report that discloses the risk assessments used while developing the model.

In June, New York state lawmakers approved a similar measure; Democratic Gov. Kathy Hochul has until the end of the year to decide whether to sign it into law.

Under the measure, before deploying a frontier AI model, large developers would be required to implement a safety policy to prevent the risk of critical harm — including the death or serious injury of more than 100 people or at least $1 billion in damages — caused or enabled by a frontier model through the creation or use of large-scale weapons systems or through AI committing criminal acts.

Frontier AI models are large-scale systems that exist at the forefront of artificial intelligence innovation. These models, such as OpenAI’s GPT-5, Google’s Gemini Ultra and others, are highly advanced and can perform a wide range of tasks by processing substantial amounts of data. These powerful models also have the potential to cause catastrophic harm.

California legislators last year attempted to pass stricter regulations on large developers to prevent the catastrophic harms of AI, but Democratic Gov. Gavin Newsom vetoed the bill. He said in his veto message that it would apply “stringent standards to even the most basic functions” of large AI systems. He wrote that small models could be “equally or even more dangerous” and worried about the bill curtailing innovation.

Over the following year, the Joint California Policy Working Group on AI Frontier Models wrote and published its report on how to approach frontier AI policy. The report emphasized the importance of empirical research, policy analyses and balance between the technology’s benefits and risks.

Tech developers and industry groups have opposed the bills in both states. Paul Lekas, the senior vice president of global public policy at the Software & Information Industry Association, wrote in an emailed statement to Stateline that California’s measure, while intended to promote responsible AI development, “is not the way to advance this goal, build trust in AI systems, and support consumer protection.”

The bill would create “an overly prescriptive and burdensome framework that risks stifling frontier model development without adequately improving safety,” he said, the same problems that led to last year’s veto. “The bill remains untethered to measurable standards, and its vague disclosure and reporting mandates create a new layer of operational burdens.”

NetChoice, a trade association of online businesses including Amazon, Google and Meta, sent a letter to Hochul in June, urging the governor to veto New York’s proposed legislation.

“While the goal of ensuring the safe development of artificial intelligence is laudable, this legislation is constructed in a way that would unfortunately undermine its very purpose, harming innovation, economic competitiveness, and the development of solutions to some of our most pressing problems, without effectively improving public safety,” wrote Patrick Hedger, the director of policy at NetChoice.

Stateline reporter Madyson Fitzgerald can be reached at mfitzgerald@stateline.org.

Stateline is part of States Newsroom, a nonprofit news network supported by grants and a coalition of donors as a 501c(3) public charity. Stateline maintains editorial independence. Contact Editor Scott S. Greenberger for questions: info@stateline.org.

Commanders vs. Packers props, SportsLine Machine Learning Model AI picks: Jordan Love Over 223.5 passing yards

The NFL Week 2 schedule gets underway with a Thursday Night Football matchup between NFC playoff teams from a year ago. The Washington Commanders battle the Green Bay Packers beginning at 8:15 p.m. ET from Lambeau Field in Green Bay. Second-year quarterback Jayden Daniels led the Commanders to a 21-6 opening-day win over the New York Giants, completing 19 of 30 passes for 233 yards and one touchdown. Jordan Love, meanwhile, helped propel the Packers to a dominating 27-13 win over the Detroit Lions in Week 1. He completed 16 of 22 passes for 188 yards and two touchdowns.

NFL prop bettors will likely target the two young quarterbacks with NFL prop picks, in addition to proven playmakers like Terry McLaurin, Deebo Samuel and Josh Jacobs. Green Bay’s Jayden Reed has been dealing with a foot injury, but still managed to haul in a touchdown pass in the opener. The Packers enter as a 3.5-point favorite with Green Bay at -187 on the money line. The over/under is 48.5 points. Before betting any Commanders vs. Packers props for Thursday Night Football, you need to see the Commanders vs. Packers prop predictions powered by SportsLine’s Machine Learning Model AI.

Built using cutting-edge artificial intelligence and machine learning techniques by SportsLine’s Data Science team, AI Predictions and AI Ratings are generated for each player prop.

For Commanders vs. Packers NFL betting on Thursday Night Football, the Machine Learning Model has evaluated the NFL player prop odds and provided Commanders vs. Packers prop picks. You can only see the Machine Learning Model player prop predictions for Washington vs. Green Bay at SportsLine.

Top NFL player prop bets for Commanders vs. Packers

After analyzing the Commanders vs. Packers props and examining the dozens of NFL player prop markets, SportsLine’s Machine Learning Model says Packers quarterback Love goes Over 223.5 passing yards (-112 at FanDuel). Love passed for 224 or more yards in eight games a year ago, despite an injury-filled season. In 15 regular-season games in 2024, he completed 63.1% of his passes for 3,389 yards and 25 touchdowns with 11 interceptions.

In a 30-13 win over the Seattle Seahawks on Dec. 15, he completed 20 of 27 passes for 229 yards and two touchdowns. Love completed 21 of 28 passes for 274 yards and two scores in a 30-17 victory over the Miami Dolphins on Nov. 28. The model projects Love to pass for 259.5 yards, giving this prop bet a 4.5 rating out of 5.
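Prices such as -112 (Love’s passing-yards Over) and -187 (Green Bay’s money line) are American odds. The standard conversion to the sportsbook’s implied probability, which still includes the book’s margin, is sketched below; it is a generic formula, not part of SportsLine’s model.

```python
def implied_probability(american_odds: int) -> float:
    """Convert American odds to the sportsbook's implied win probability (margin included)."""
    if -100 < american_odds < 100:
        raise ValueError("American odds must be <= -100 or >= +100")
    if american_odds < 0:
        # Favourite: risk |odds| to win 100.
        return -american_odds / (-american_odds + 100)
    # Underdog: risk 100 to win the quoted amount.
    return 100 / (american_odds + 100)
```

By this formula, -112 implies about 52.8% and -187 about 65.2%.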

How to make NFL player prop bets for Washington vs. Green Bay

In addition, the SportsLine Machine Learning Model says another star sails past his total and has nine additional NFL props that are rated four stars or better. You need to see the Machine Learning Model analysis before making any Commanders vs. Packers prop bets for Thursday Night Football.

Which Commanders vs. Packers prop bets should you target for Thursday Night Football? Visit SportsLine now to see the top Commanders vs. Packers props, all from the SportsLine Machine Learning Model.

Oklahoma considers a pitch from a private company to monitor parolees with artificial intelligence

Oklahoma lawmakers are considering investing in a new platform that aids in parole and probation check-ins through monitoring with artificial intelligence and fingerprint and facial scans.

The state could be the first in the nation to use the Montana-based company Global Accountability’s technology for parole and probation monitoring, said CEO Jim Kinsey.

Global Accountability is also pitching its Absolute ID platform to states to prevent fraud with food stamp benefits and track case workers and caregivers in the foster care system.

A pilot program for 300 parolees and 25 to 40 officers would cost Oklahoma around $2 million for one year, though the exact amount would depend on the number of programs the state wants to use the platform for, Kinsey said.

The Oklahoma Department of Corrections already uses an offender monitoring platform with the capability for check-ins using facial recognition, a spokesperson for the agency said in an email. Supervising officers can allow certain low-level offenders with smartphones to check in monthly through a mobile app instead of an office visit.

The state agency is “always interested in having conversations with companies that might be able to provide services that can create efficiencies in our practices,” the spokesperson said in a statement.

States like Illinois, Virginia and Idaho have adopted similar technology, though Global Accountability executives say their platform is unique because of its combination of biometrics, location identification and a feature creating virtual boundaries that send an alert to an officer when crossed.

The Absolute ID platform has the capacity to collect a range of data, including location and movement, but states would be able to set rules on what data actually gets captured, Kinsey said.

During an interim study at the Oklahoma House of Representatives in August, company representatives said their technology could monitor people on parole and probation through smartphones and smartwatches. Users would have to scan their face or fingerprint to access the platform for scheduled check-ins. The company could implement workarounds for certain offenders who can’t have access to a smartphone.

There are 428 people across the state using ankle monitors, an Oklahoma Department of Corrections spokesperson said. The agency uses the monitors for aggravated drug traffickers, sex offenders and prisoners participating in a GPS-monitored reentry program.

“That is a working technology,” said David Crist, lead compliance officer for Global Accountability. “It’s great in that it does what it should do, but it’s not keeping up with the needs.”

The Absolute ID platform uses artificial intelligence to find patterns in data, like changes in the places a prisoner visits or how often they charge their device, Crist said. It can also flag individuals for review by an officer based on behaviors like missing check-ins, visiting unauthorized areas or allowing their device to die.

Agencies would create policies that determine potential consequences, which could involve a call or visit from an officer, Crist said. He also said no action would be taken without a final decision from a supervising officer.

“Ultimately, what we’re trying to do is reduce some of the workload of officers because they can’t be doing this 24/7,” Crist said. “But some of our automation can. And it’s not necessarily taking any action, but it is providing assistance.”
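The article describes alerts for crossing virtual boundaries and flags for missed check-ins, unauthorized areas, or dead devices, but not how they are computed. The following is a hypothetical rule-based sketch of that kind of flagging, assuming a circular permitted zone and made-up field names. It is not Global Accountability’s implementation and leaves out the AI pattern detection Crist describes. Consistent with his description, it only returns reasons for an officer to review and takes no action itself.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000  # mean Earth radius in metres


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


@dataclass
class CheckIn:  # hypothetical device report
    lat: float
    lon: float
    battery_pct: int
    missed_scheduled: bool


def review_flags(check, zone_lat, zone_lon, zone_radius_m):
    """Return reasons to flag a check-in for officer review (no action is taken)."""
    flags = []
    if check.missed_scheduled:
        flags.append("missed check-in")
    if haversine_m(check.lat, check.lon, zone_lat, zone_lon) > zone_radius_m:
        flags.append("outside permitted zone")
    if check.battery_pct <= 0:
        flags.append("device battery depleted")
    return flags
```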

Parolees and probationers can also text message and call their supervising officers through the platform.

The state could provide smartphones or watches to people on parole or probation or require them to pay for the devices themselves, said Crist. He also said the state could make prisoners’ failure to carry their phone with them or pay their phone bill a violation of parole.

Rep. Ross Ford, R-Broken Arrow, who organized the study, said in an interview with the Frontier he first learned about Global Accountability several years ago and was impressed by their platform.

Ford said he doesn’t see the associated costs for parolees and probationers, like keeping up with phone bills, as a problem.

“I want to help them get back on their feet,” Ford said. “I want to do everything I can to make sure that they’re successful when they’re released from the penitentiary. But you have to also pay your debt to society too and part of that is paying fees.”

Ford said he thinks using the platform to monitor parole, probation and food stamp benefits could help the state save money. He’s requested another interim study on using the company’s technology for food stamp benefits, but a date hasn’t been posted yet.

Other legislators are more skeptical of the platform. Rep. Jim Olsen, R-Roland, said he thought the platform could be helpful, but he doesn’t see a benefit to Oklahoma being an early adopter. He said he’d like to let software companies work out some of the kinks first and then consider investing when the technology becomes less expensive.

Rep. David Hardin, R-Stilwell, said he remains unconvinced by Global Accountability’s presentation. He said the Department of Corrections would likely need to request a budget increase to fund the program, which would need legislative approval. Unless the company can alleviate some of his concerns, he said he doubts any related bill would pass the Public Safety committee that he chairs.

“You can tell me anything,” Hardin said. “I want to see what you’re doing. I want you to prove to me that it’s going to work before I start authorizing the sale of taxpayer money.”
