AI Insights

The Debate On Whether Artificial General Intelligence Should Inevitably Be Declared A Worldwide Public Good With Free Access For All

Will access to AGI (artificial general intelligence) be free for all or might there be cost and other access barriers?

In today’s column, I examine an ongoing debate about who will have access to artificial general intelligence (AGI). AGI is purportedly on the horizon and will be AI so advanced that it acts intellectually on par with humans. The question arises as to whether everyone will be able to use AGI or whether only those who can afford to do so will have ready access.

Some ardently insist that if AGI is truly attained, it ought to be considered a worldwide public good, including that AGI would be freely available to all at any time and any place.

Let’s talk about it.

This analysis of an innovative AI breakthrough is part of my ongoing Forbes column coverage on the latest in AI, including identifying and explaining various impactful AI complexities (see the link here).

Heading Toward AGI And ASI

First, some fundamentals are required to set the stage for this weighty discussion.

There is a great deal of research going on to further advance AI. The general goal is to either reach artificial general intelligence (AGI) or maybe even the outstretched possibility of achieving artificial superintelligence (ASI).

AGI is AI that is considered on par with human intellect and can seemingly match our intelligence. ASI is AI that has gone beyond human intellect and would be superior in many if not all feasible ways. The idea is that ASI would be able to run circles around humans by outthinking us at every turn. For more details on the nature of conventional AI versus AGI and ASI, see my analysis at the link here.

We have not yet attained AGI.

In fact, it is unknown whether we will reach AGI at all, or whether AGI might be achievable only decades or perhaps centuries from now. The AGI attainment dates that are floating around vary wildly and are unsubstantiated by any credible evidence or ironclad logic. ASI is even further beyond the pale when it comes to where we are currently with conventional AI.

Immense Capability Of AGI

Imagine how much you could accomplish if you had access to AGI.

AGI would be an amazing intellectual partner. Any decisions that you need to make can be bounced off AGI for feedback and added insights. AGI would be instrumental in your personal life. You might use AGI to teach you new skills or hone your own intellectual capacity. By and large, having AGI at your fingertips will be crucial to how your life proceeds.

The issue is this.

Suppose that AGI is provided by an AI maker that opts to charge for the use of the AGI. This seems like a reasonable approach by the AI maker since they undoubtedly want to recoup their investment made in devising AGI. Plus, they will have ongoing costs such as the running of AGI on expensive servers in costly data centers, along with doing upgrades and maintenance on AGI.

As they say, to the victor go the spoils.

Those who can afford to pay for AGI usage will have a leg up on everyone else. We will descend into a society of the AGI haves and the AGI have-nots. Things will get even worse since the AGI haves will presumably flourish in new ways and far outpace the AGI have-nots. Humans armed with AGI will excel while the rest of the populace is at a distinct disadvantage.

Some believe that if that’s how the post-AGI era plays out, something demonstrative would need to be done. We can’t simply allow the world to be divided into those that can afford AGI and those that cannot do so.

There must be a means to figure this out in some balancing way.

AGI As A Designated Public Good

One burgeoning idea is that AGI should be designated as an international public good.

If we reach AGI, no matter whether by an AI maker or some governmental entity or however reached, the AGI must be globally declared as a public good for all. There must be no barriers to the use of AGI. People everywhere are to have full and equal access to AGI. Period, end of story.

How might this be undertaken?

Some indicate that AGI should be handed over to the United Nations. The UN would be tasked with making sure that access to AGI was a worldwide facility. People in all nations and anywhere on the planet would readily be provided a login and full access to AGI. They could use AGI as much as they desired.

Furthermore, since there will undoubtedly be people who don’t know about AGI or don’t have equipment such as smartphones or Internet access, the UN would perform a global educational effort to get people up-to-speed. This would include providing equipment such as a smartphone and the network capacity needed to utilize AGI.

The AI maker that attained AGI would be given some compensation for their accomplishment but might be cut out of future compensation under the notion of a governmental taking of AGI from the AI maker. In an eminent domain type of action, their devised AGI would be taken from them and placed into a special infrastructure established to globally enable access to the AGI.

Lots of variations are being tossed around about whether the originating maker of AGI would be allowed to keep AGI and run it on behalf of the world or be set to the side as a result of the governmental taking of AGI. Pros and cons are hotly debated.

Worries About Human-AGI Evildoing

Wait a second, some bellow vociferously. If everyone has ready access to AGI, at no cost and no barrier to usage, we are opening a Pandora’s box.

Consider this scenario. An evildoer opts to access AGI. They can do so without any cost concerns. So, they tell AGI to come up with a biochemical weapon. AGI runs and runs, inventing a new biochemical weapon that nobody knows about. The evildoer thanks AGI and proceeds to construct the weapon. They can use it to blackmail the world.

Not good.

One retort to this worry is that we would stridently instruct AGI to not undertake any effort that would be construed as detrimental to humankind. Thus, when someone tries to go down the evil route, AGI would stop them cold. The AGI would indicate that their request is not allowed.

But suppose an evildoer manages to trick AGI into aiding an evildoing plot. Perhaps the evildoer asks for something seemingly innocuous but is part of a subtle step in a larger evil plan. Step by step, they get AGI to give them solutions that can be ultimately pieced together into a larger evil purpose. The AGI was not the wiser for it.

It would be very challenging to guarantee, in an ironclad fashion, that AGI never reveals or responds with anything that could aid evildoing.

Registering People That Use AGI

A related facet is that some say we would want to make sure that the people using AGI are identifiable. In other words, even if ready access to all is provided, we should still require people to identify who they are. We cannot allow people to wantonly or anonymously use AGI.

Each person ought to be responsible for how they use AGI.

Whoa, comes the reply, if you require identification to use AGI, that’s an entirely different can of worms. People will naturally suspect that the AGI is going to monitor them. In turn, the AGI might tattle on them to government authorities. This is a dire pathway.

It would also be a somewhat impractical notion anyway. What kind of identification would be used? The identification could be faked. Some point out that with the latest high-tech, such as eyeball scans, presumably people could be distinctly and suitably identified.

Creating A Worldwide AGI Agency-Entity

There are those that express qualms about handing over AGI to the UN. The concern is that the UN might not be the most suitable choice to fully control and run AGI.

A suggested alternative is to establish a new entity that would oversee AGI. This would be a means of starting fresh. No prior baggage. The new agency entity would be formulated from the ground up as having the sole purpose of managing AGI.

Who would pay for this and how would the new entity be globally governed?

Various proposed approaches are being floated. Perhaps every country in the world would need to pay into a special fund for AGI. The monies would go to the ongoing costs of running AGI, along with administering the use of AGI. The new agency entity wouldn’t necessarily be expected to drive a profit and would be set up as a non-profit, perhaps as an NGO.

That sounds solid, but again there are those who doubt this new agency would be as beneficial and supportive as one might assume. For example, the agency might become corrupt and those running AGI start to use the AGI for their own nefarious purposes. The rest of the world might not know what’s happening.

A reply to this concern is that there would be auditors that routinely examine the new agency. They would report to the world at large. This would presumably keep the AGI-managing entity on the up and up.

Round and round these arguments go.

Universal Access To AGI

How do you feel about the claim that universal access to AGI is a must-do?

There are those who fall into the camp that AGI access must absolutely be a universal right. Nothing other than full universal access is to be undertaken. All humans deserve access to AGI.

Others say that AGI access is a privilege and isn’t necessarily going to be applicable to everyone. That’s the way the ball bounces. The world doesn’t owe everyone access to AGI.

Another variation is that we might have tiered access to AGI. Perhaps there would be AGI access at a minimum level for all, including associated constraints on usage, and then above that tier would be AGI usage with more open-ended facilities.

It’s a tough question.

At this time, it is a theoretical question since we don’t yet have AGI. That being said, there are predictions that we might have AGI in the next several years, perhaps by 2030 or so. The question is looming, and we probably should be figuring out what we are going to do when the moment arrives (assuming we do attain AGI).

Take a few reflective moments to ascertain where you stand on the thorny issue.

A final thought for now might be spurred by the famous line of Margaret Fuller, noted American journalist in the early 1800s: “If you have knowledge, let others light their candles in it.”

To ChatGPT or not to ChatGPT: Professors grapple with AI in the classroom

As shopping period settles, students may notice a new addition to many syllabi: an artificial intelligence policy. As one of his first initiatives as associate provost for artificial intelligence, Michael Littman PhD’96 encouraged professors to implement guidelines for the use of AI.

Littman also recommended that professors “discuss (their) expectations in class” and “think about (their) stance around the use of AI,” he wrote in an Aug. 20 letter to faculty. But professors on campus have applied this advice in different ways, reflecting the range of attitudes towards AI.

In her nonfiction classes, Associate Teaching Professor of English Kate Schapira MFA’06 prohibits AI usage entirely.

“I teach nonfiction because evidence … clarity and specificity are important to me,” she said. AI threatens these principles at a time “when they are especially culturally devalued” nationally.

She added that an overreliance on AI goes beyond the classroom. “It can get someone fired. It can screw up someone’s medication dosage. It can cause someone to believe that they have justification to harm themselves or another person,” she said.

Nancy Khalek, an associate professor of religious studies and history, said she is intentionally designing assignments that are not suitable for AI usage. Instead, she wants students “to engage in reflective assignments, for which things like ChatGPT and the like are not particularly useful or appropriate.”

Khalek said she considers herself an “AI skeptic” — while she acknowledged the tool’s potential, she expressed opposition to “the anti-human aspects of some of these technologies.”

But AI policies vary within and across departments.

Professors “are really struggling with how to create good AI policies, knowing that AI is here to stay, but also valuing some of the intermediate steps that it takes for a student to gain knowledge,” said Aisling Dugan PhD’07, associate teaching professor of biology.

In her class, BIOL 0530: “Principles of Immunology,” Dugan said she allows students to choose to use artificial intelligence for some assignments, but that she requires students to critique their own AI-generated work.

She said this reflection “is a skill that I think we’ll be using more and more of.”

Dugan added that she thinks AI can serve as a “study buddy” for students. She has been working with her teaching assistants to develop an AI chatbot for her classes, which she hopes will eventually answer student questions and supplement the study videos made by her TAs.

Despite this, Dugan still shared concerns over AI in classrooms. “It kind of misses the mark sometimes,” she said, “so it’s not as good as talking to a scientist.”

For some assignments, like primary literature readings, she has a firm no-AI policy, noting that comprehending primary literature is “a major pedagogical tool in upper-level biology courses.”

“There’s just some things that you have to do yourself,” Dugan said. “It (would be) like trying to learn how to ride a bike from AI.”

Assistant Professor of the Practice of Computer Science Eric Ewing PhD’24 is also trying to strike a balance between how AI can support and inhibit student learning.

This semester, his courses, CSCI 0410: “Foundations of AI and Machine Learning” and CSCI 1470: “Deep Learning,” heavily focus on artificial intelligence. He said assignments are no longer “measuring the same things,” since “we know students are using AI.”

While he does not allow students to use AI on homework, his classes offer projects that give them “full rein” to use AI. This way, he said, “students are hopefully still getting exposure to these tools, but also meeting our learning objectives.”

Ewing also added that the skills required of graduated students are shifting — the growing presence of AI in the professional world requires a different toolkit.

He believes students in upper-level computer science classes should be allowed to use AI in their coding assignments. “If you don’t use AI at the moment, you’re behind everybody else who’s using it,” he said.

Ewing says that he identifies AI policy violations through code similarity — last semester, he found that 25 students had similarly structured code. Ultimately, 22 of those 25 admitted to AI usage.

Littman also provided guidance to professors on how to identify the dishonest use of AI, noting various detection tools.

“I personally don’t trust any of these tools,” Littman said. In his introductory letter, he also advised faculty not to be “overly reliant on automated detection tools.”

Although she does not use detection tools, Schapira provides specific reasons in her syllabi to not use AI in order to convince students to comply with her policy.

“If you’re in this class because you want to get better at writing — whatever “better” means to you — those tools won’t help you learn that,” her syllabus reads. “It wastes water and energy, pollutes heavily, is vulnerable to inaccuracies and amplifies bias.”

In addition to these environmental concerns, Dugan was also concerned about the ethical implications of AI technology.

Khalek also expressed her concerns “about the increasingly documented mental health effects of tools like ChatGPT and other LLM-based apps.” In her course, she discussed with students how engaging with AI can “resonate emotionally and linguistically, and thus impact our sense of self in a profound way.”

Students in Schapira’s class can also present “collective demands” if they find the structure of her course overwhelming. “The solution to the problem of too much to do is not to use an AI tool. That means you’re doing nothing. It’s to change your conditions and situations with the people around you,” she said.

“There are ways to not need (AI),” Schapira continued. “Because of the flaws that (it has) and because of the damage (it) can do, I think finding those ways is worth it.”

This Artificial Intelligence (AI) Stock Could Outperform Nvidia by 2030

When investors think about artificial intelligence (AI) and the chips powering this technology, one company tends to dominate the conversation: Nvidia (NASDAQ: NVDA). It has become an undisputed barometer for AI adoption, riding the wave with its industry-leading GPUs and the sticky ecosystem of its CUDA software that keep developers in its orbit. Since the launch of ChatGPT about three years ago, Nvidia stock has surged nearly tenfold.

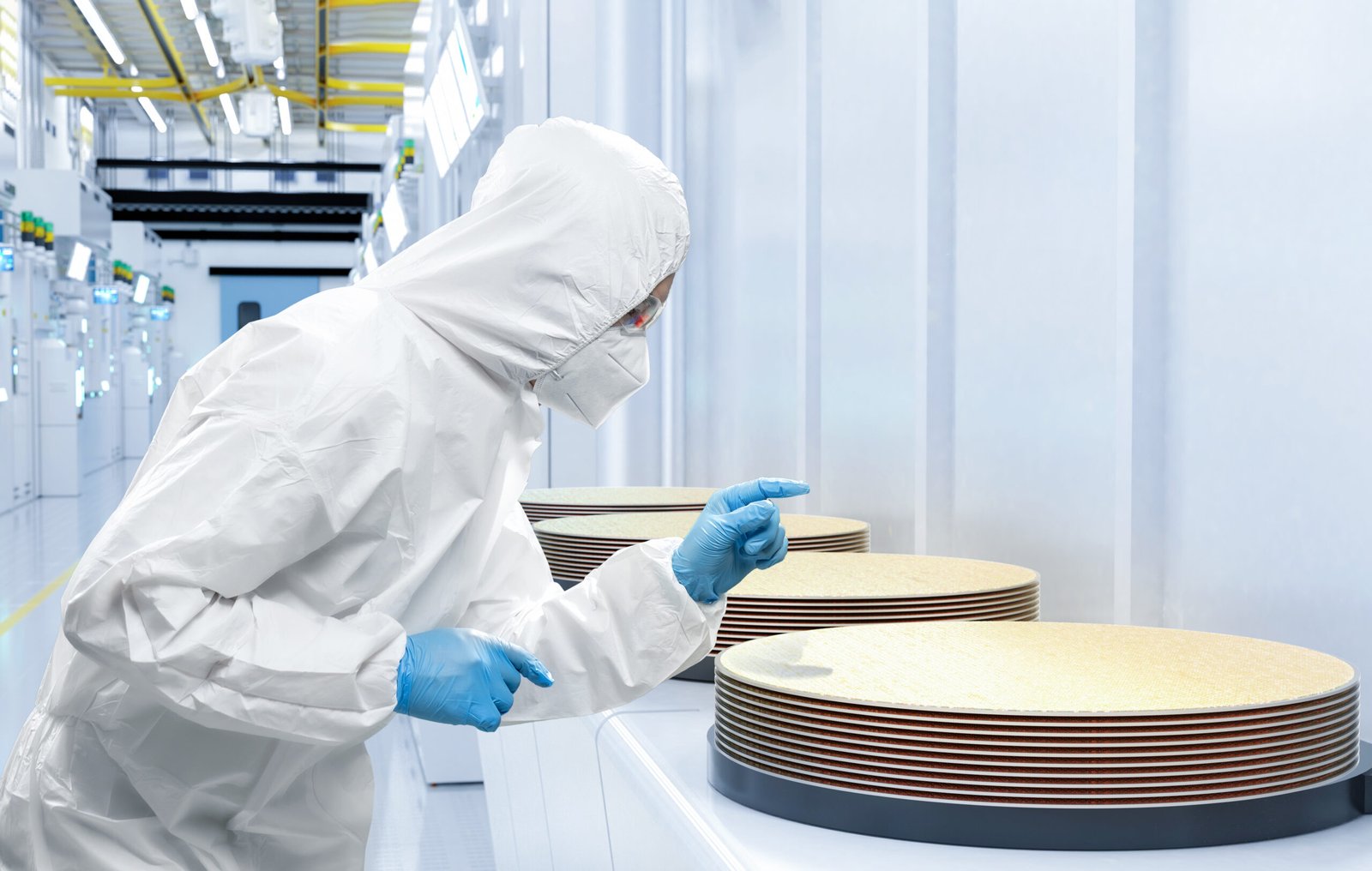

Here’s the twist: While Nvidia commands the spotlight today, it may be Taiwan Semiconductor Manufacturing (NYSE: TSM) that holds the real keys to growth as we look toward the next decade. Below, I’ll unpack why Taiwan Semi — or TSMC, as it’s often called — isn’t just riding the AI wave, but rather is building the foundation that brings the industry to life.

What makes Taiwan Semi so critical is its role as the backbone of the semiconductor ecosystem. Its foundry operations serve as the lifeblood of the industry, transforming complex chip designs into the physical processors that power myriad generative AI applications.

Source: Fool.com

Albania puts AI-created ‘minister’ in charge of public procurement

A digital assistant that helps people navigate government services online has become the first “virtually created” AI cabinet minister and been put in charge of public procurement in an attempt to cut down on corruption, the Albanian prime minister has said.

Diella, which means Sun in Albanian, has been advising users on the state’s e-Albania portal since January, helping them through voice commands with the full range of bureaucratic tasks they need to perform in order to access about 95% of citizen services digitally.

“Diella, the first cabinet member who is not physically present, but has been virtually created by AI”, would help make Albania “a country where public tenders are 100% free of corruption”, Edi Rama said on Thursday.

Announcing the makeup of his fourth consecutive government at the ruling Socialist party conference in Tirana, Rama said Diella, who on the e-Albania portal is dressed in traditional Albanian costume, would become “the servant of public procurement”.

Responsibility for deciding the winners of public tenders would be removed from government ministries in a “step-by-step” process and handled by artificial intelligence to ensure “all public spending in the tender process is 100% clear”, he said.

Diella would examine every tender in which the government contracts private companies and objectively assess the merits of each, said Rama, who was re-elected in May and has previously said he sees AI as a potentially effective anti-corruption tool that would eliminate bribes, threats and conflicts of interest.

Public tenders have long been a source of corruption scandals in Albania, which experts say is a hub for international gangs seeking to launder money from trafficking drugs and weapons and where graft has extended into the upper reaches of government.

Albanian media praised the move as “a major transformation in the way the Albanian government conceives and exercises administrative power, introducing technology not only as a tool, but also as an active participant in governance”.

Not everyone was convinced, however. “In Albania, even Diella will be corrupted,” commented one Facebook user.
