AI Research

Clozd announces expanded platform and AI interviewer to power the future of qualitative research

LEHI, Utah, Sept. 9, 2025 /PRNewswire/ — Clozd, the category leader in win-loss analysis, today announced two major innovations: the launch of its evolved Clozd Platform—built to deliver rich qualitative feedback across the customer journey—and the debut of the Clozd AI Interviewer, a tool shaped by more than 50,000 interviews that combines the depth of human research with the scale modern teams demand.

Trusted by leaders including Microsoft, Toast, Gong, and Blue Cross Blue Shield, Clozd is redefining how go-to-market, product, and customer success teams capture and act on voice-of-customer insights.

“Clozd has become the world’s leading platform for win-loss analysis by transforming rich, conversational buyer feedback into actionable insights,” said Andrew Peterson, Clozd co-founder and co-CEO. “Our customers know that no survey or dashboard compares to the depth of understanding that comes from real buyer conversations. That’s why they’re asking us to expand Clozd beyond purchase decisions—to power qualitative research workflows across the entire customer journey, so they can understand their customers more deeply at every critical moment.”

A unified platform for continuous customer insights

The evolved Clozd Platform now supports any type of rich qualitative feedback—including win-loss analysis, post-implementation feedback, customer experience check-ins, and renewal-stage interviews—helping organizations capture richer insights across the customer journey.

Key new capabilities include:

- Built for your business: Easily customize labels and workflows so programs match your company’s terminology and processes.

- Launch when it matters most: Run interviews at any stage—onboarding, renewal, or anywhere in between—with an updated system.

- See the full customer journey: Manage multiple programs in one workspace—and get complete visibility across accounts.

Beyond these core use cases, Clozd customers can run virtually any type of qualitative research—from product feedback to market validation—all in one platform.

Meet Clozd’s AI Interviewer: Human-quality insights, now at scale

Embedded in Clozd’s Flex Interview product, the AI Interviewer behaves like a trained human researcher—probing for depth, listening for nuance, and adapting in real time.

“For years, teams have had to choose between a handful of deep interviews or a higher number of broad, shallow surveys. The AI Interviewer eliminates that choice,” said Spencer Dent, Clozd co-CEO and co-founder. “It feels like talking to a seasoned researcher—only now there’s no limit to how many conversations you can have.”

Designed as an intelligent extension of the user’s team, Clozd’s AI Interviewer:

- Responds dynamically to what participants say

- Explores with relevant, unscripted follow-ups

- Adapts questioning to context

- Is available in all Flex Interview programs, with no additional license or fee

Shaped by more than 50,000 live interviews, Clozd’s AI Interviewer captures the instincts and professionalism of expert qualitative researchers while enabling organizations to hear from more voices, more often.

Customers are already expanding what’s possible

Clozd customers are already using the platform to collect rich qualitative feedback across the customer journey—well beyond traditional win-loss analysis. These programs include mid-POC buyer feedback, strategic temperature checks, new market validation, and renewal insights.

With the evolved Clozd Platform and new AI Interviewer, teams can now scale these initiatives with greater depth, speed, and confidence.

“These aren’t just interviews—they’re opportunities to intervene earlier, adapt faster, and win more often,” Peterson said. “We’re not just making rich customer feedback more accessible—we’re setting a new standard for how it’s captured and put to work.”


About Clozd

Clozd is the leading qualitative research platform for enterprise use cases like win-loss analysis, onboarding feedback, mid-cycle check-ins, and churn prevention, offering world-class technology with unmatched expertise to deliver insights that drive action. Clozd has conducted more than 50,000 customer interviews for clients in a wide range of industries, including enterprise software, business services, healthcare, financial services, manufacturing, transportation, telecom, and more. For more information, visit www.clozd.com.

CONTACT:

Danielle Talbot


SOURCE Clozd

Artificial Intelligence (AI) Unicorn Anthropic Just Hit a $183 Billion Valuation. Here’s What It Means for Amazon Investors

Anthropic just closed on a $13 billion Series F funding round.

It’s been about a month since OpenAI unveiled its latest model, GPT-5. In that time, rival platforms have made bold moves of their own.

Perplexity, for instance, drew headlines with a $34.5 billion unsolicited bid for Alphabet's Google Chrome, while Anthropic, backed by both Alphabet and Amazon, closed a $13 billion Series F funding round that propelled its valuation to an eye-popping $183 billion.

Since debuting its AI chatbot, Claude, in March 2023, Anthropic has experienced explosive growth. The company’s run-rate revenue surged from $1 billion at the start of this year to $5 billion by the end of August.


While these gains are a clear win for the venture capital firms that backed Anthropic early on, the company’s trajectory carries even greater strategic weight for Amazon.

Let’s explore how Amazon is integrating Anthropic into its broader artificial intelligence (AI) ecosystem — and what this deepening alliance could mean for investors.

AWS + Anthropic: Amazon’s secret weapon in the AI arms race

Beyond its e-commerce dominance, Amazon's most profitable business is its cloud computing arm, Amazon Web Services (AWS).

Much like Microsoft's integration of OpenAI's models into its Azure platform, Amazon is positioning Anthropic's Claude as a marquee offering within AWS. Through its Bedrock service, AWS customers can access a variety of large language models (LLMs), with Claude among the most prominent, to build and deploy generative AI applications.

In effect, Anthropic acts as both a differentiator and a distribution channel for AWS, giving enterprise customers the flexibility to test different models while keeping them within Amazon's ecosystem. This expands AWS's value proposition because it creates stickiness in a fiercely competitive cloud computing landscape.

Cutting Nvidia and AMD out of the loop

Another strategic benefit of Amazon's partnership with Anthropic is the opportunity to accelerate adoption of its custom silicon, Trainium and Inferentia. These chips were engineered specifically to reduce dependence on Nvidia's GPUs and to lower the cost of both training AI models and running inference.

The bet is that if Anthropic can successfully scale Claude on Trainium and Inferentia, it will serve as a proof point to the broader market that Amazon’s hardware offers a viable, cost-efficient alternative to premium GPUs from Nvidia and Advanced Micro Devices.

By steering more AI compute toward its in-house silicon, Amazon improves its unit economics — capturing more of the value chain and ultimately enhancing AWS’s profitability over time.

From Claude to cash flow

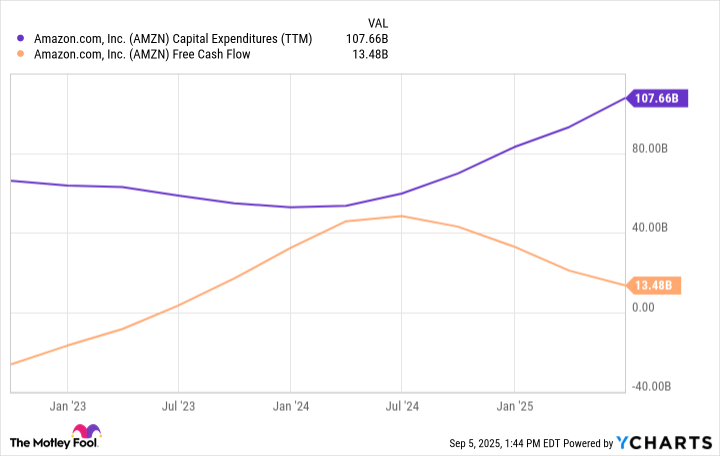

For investors, the central question is how Anthropic translates into tangible financial impact for Amazon. As the figures below illustrate, Amazon has not hesitated to deploy unprecedented sums into AI-related capital expenditures (capex) over the past few years. While this acceleration in spending has temporarily weighed on free cash flow, such investments are part of a deliberate long-term strategy rather than a short-term playbook.

AMZN Capital Expenditures (TTM) data by YCharts

Partnerships of this scale rarely yield immediate results. Working with Anthropic is not about incremental wins — it’s about laying the foundation for transformative outcomes.

In practice, Anthropic enhances AWS’s ability to secure long-term enterprise contracts — reinforcing Amazon’s position as an indispensable backbone of AI infrastructure. Once embedded, the switching costs for customers considering alternative models or rival cloud providers like Microsoft Azure or Google Cloud Platform (GCP) become prohibitively high.

Over time, these dynamics should enable Amazon to capture a larger share of AI workloads and generate durable, high-margin recurring fees. As profitability scales alongside revenue growth, Amazon is well-positioned to experience meaningful valuation expansion relative to its peers — making the stock a compelling opportunity to buy and hold for long-term investors right now.

Adam Spatacco has positions in Alphabet, Amazon, Microsoft, and Nvidia. The Motley Fool has positions in and recommends Advanced Micro Devices, Alphabet, Amazon, Microsoft, and Nvidia. The Motley Fool recommends the following options: long January 2026 $395 calls on Microsoft and short January 2026 $405 calls on Microsoft. The Motley Fool has a disclosure policy.

AI co-pilot boosts noninvasive brain-computer interface by interpreting user intent, UCLA study finds

Key takeaways:

- A wearable, noninvasive brain-computer interface system that utilizes artificial intelligence as a co-pilot to help infer user intent and complete tasks has been developed by UCLA engineers.

- The team developed custom algorithms to decode electroencephalography, or EEG — a method of recording the brain’s electrical activity — and extract signals that reflect movement intentions.

- All participants completed both tasks significantly faster with AI assistance.

UCLA engineers have developed a wearable, noninvasive brain-computer interface system that utilizes artificial intelligence as a co-pilot to help infer user intent and complete tasks by moving a robotic arm or a computer cursor.

The study, published in Nature Machine Intelligence, shows that the interface achieves a new level of performance for noninvasive brain-computer interface, or BCI, systems. This could lead to a range of technologies to help people with limited physical capabilities, such as those with paralysis or neurological conditions, handle and move objects more easily and precisely.

The team developed custom algorithms to decode electroencephalography, or EEG — a method of recording the brain’s electrical activity — and extract signals that reflect movement intentions. They paired the decoded signals with a camera-based artificial intelligence platform that interprets user direction and intent in real time. The system allows individuals to complete tasks significantly faster than without AI assistance.

“By using artificial intelligence to complement brain-computer interface systems, we’re aiming for much less risky and invasive avenues,” said study leader Jonathan Kao, an associate professor of electrical and computer engineering at the UCLA Samueli School of Engineering. “Ultimately, we want to develop AI-BCI systems that offer shared autonomy, allowing people with movement disorders, such as paralysis or ALS, to regain some independence for everyday tasks.”

State-of-the-art, surgically implanted BCI devices can translate brain signals into commands, but the benefits they currently offer are outweighed by the risks and costs associated with neurosurgery to implant them. More than two decades after they were first demonstrated, such devices are still limited to small pilot clinical trials. Meanwhile, wearable and other external BCIs have demonstrated a lower level of performance in detecting brain signals reliably.

To address these limitations, the researchers tested their new noninvasive AI-assisted BCI with four participants — three without motor impairments and a fourth who was paralyzed from the waist down. Participants wore a head cap to record EEG, and the researchers used custom decoder algorithms to translate these brain signals into movements of a computer cursor and robotic arm. Simultaneously, an AI system with a built-in camera observed the decoded movements and helped participants complete two tasks.

In the first task, participants were instructed to move a cursor on a computer screen to hit eight targets, holding the cursor in place at each for at least half a second. In the second task, they were asked to control a robotic arm to move four blocks on a table from their original spots to designated positions.
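The cursor task's success criterion (reach a target, then hold there for at least half a second) can be made concrete with a short dwell-time check. This is a minimal Python sketch, not the study's code: the `Target` class, the circular target shape and its radius, and the `(t, x, y)` sample format are all assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Optional, Sequence, Tuple


@dataclass
class Target:
    x: float
    y: float
    radius: float  # hypothetical acquisition radius; the study's value is not given here


def acquisition_time(
    samples: Sequence[Tuple[float, float, float]],
    target: Target,
    hold_s: float = 0.5,
) -> Optional[float]:
    """Return the time at which `target` counts as hit, or None if it never is.

    `samples` is a time-ordered sequence of (t_seconds, x, y) cursor positions.
    The target counts as hit once the cursor has stayed inside its radius
    continuously for at least `hold_s` seconds; leaving the radius resets the timer.
    """
    entered_at: Optional[float] = None
    for t, x, y in samples:
        inside = (x - target.x) ** 2 + (y - target.y) ** 2 <= target.radius ** 2
        if not inside:
            entered_at = None  # dwell broken: restart the hold timer
            continue
        if entered_at is None:
            entered_at = t  # cursor has just entered the target
        if t - entered_at >= hold_s:
            return t
    return None


target = Target(x=0.0, y=0.0, radius=1.0)
steady = [(0.0, 0.0, 0.0), (0.25, 0.1, 0.0), (0.5, 0.0, 0.1)]
print(acquisition_time(steady, target))  # 0.5
```

Resetting the timer whenever the cursor leaves the target means a decoder that merely sweeps across a target does not count as hitting it, which is what makes a hold requirement a stricter test of control than simple contact.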

All participants completed both tasks significantly faster with AI assistance. Notably, the paralyzed participant completed the robotic arm task in approximately six and a half minutes with AI assistance, whereas without it, he was unable to complete the task.

The BCI deciphered electrical brain signals that encoded the participants’ intended actions. Using a computer vision system, the custom-built AI inferred the users’ intent — not their eye movements — to guide the cursor and position the blocks.
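The division of labor described here, in which the EEG decoder supplies a noisy movement command and a vision-based co-pilot infers which target the user is heading for, resembles a family of techniques usually called shared autonomy. The Python sketch below shows one generic version of that idea (goal inference from directional agreement, followed by linear policy blending); it is an illustrative assumption, not the algorithm from the Nature Machine Intelligence paper. The candidate goal positions stand in for objects a camera might detect, and `beta`, `max_alpha` and `assist_speed` are hypothetical tuning parameters.

```python
import math
from typing import List, Sequence, Tuple

Vec = Tuple[float, float]


def goal_probabilities(pos: Vec, user_vel: Vec, goals: Sequence[Vec], beta: float = 5.0) -> List[float]:
    """Score each candidate goal by how well the decoded velocity points at it.

    Applies a softmax over the cosine similarity between the decoded velocity and
    the direction to each goal; `beta` sets how sharply the best match is preferred.
    """
    speed = math.hypot(user_vel[0], user_vel[1])
    scores = []
    for gx, gy in goals:
        dx, dy = gx - pos[0], gy - pos[1]
        dist = math.hypot(dx, dy)
        if speed == 0 or dist == 0:
            cos = 0.0  # no usable direction information for this goal
        else:
            cos = (user_vel[0] * dx + user_vel[1] * dy) / (speed * dist)
        scores.append(beta * cos)
    top = max(scores)
    weights = [math.exp(s - top) for s in scores]  # subtract max for numerical stability
    total = sum(weights)
    return [w / total for w in weights]


def blended_velocity(
    pos: Vec, user_vel: Vec, goals: Sequence[Vec], max_alpha: float = 0.7, assist_speed: float = 1.0
) -> Tuple[Vec, int, float]:
    """Blend the decoded velocity with an assistive velocity toward the likeliest goal.

    The assist weight alpha scales with confidence in the goal estimate, so when
    intent is ambiguous most of the command still comes from the user.
    """
    probs = goal_probabilities(pos, user_vel, goals)
    best = max(range(len(goals)), key=lambda i: probs[i])
    dx, dy = goals[best][0] - pos[0], goals[best][1] - pos[1]
    dist = math.hypot(dx, dy)
    assist = (0.0, 0.0) if dist == 0 else (assist_speed * dx / dist, assist_speed * dy / dist)
    alpha = max_alpha * probs[best]
    vel = ((1 - alpha) * user_vel[0] + alpha * assist[0],
           (1 - alpha) * user_vel[1] + alpha * assist[1])
    return vel, best, probs[best]


goals = [(10.0, 0.0), (0.0, 10.0)]  # e.g., object positions reported by a camera
vel, goal, conf = blended_velocity((0.0, 0.0), (1.0, 0.1), goals)
print(goal)  # 0
```

Capping the assist weight below 1 is a deliberate choice in this sketch: the user's decoded command always contributes to the final motion, which keeps the person in control rather than handing the task entirely to the co-pilot.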

“Next steps for AI-BCI systems could include the development of more advanced co-pilots that move robotic arms with more speed and precision, and offer a deft touch that adapts to the object the user wants to grasp,” said co-lead author Johannes Lee, a UCLA electrical and computer engineering doctoral candidate advised by Kao. “And adding in larger-scale training data could also help the AI collaborate on more complex tasks, as well as improve EEG decoding itself.”

The paper’s authors are all members of Kao’s Neural Engineering and Computation Lab, including Sangjoon Lee, Abhishek Mishra, Xu Yan, Brandon McMahan, Brent Gaisford, Charles Kobashigawa, Mike Qu and Chang Xie. A member of the UCLA Brain Research Institute, Kao also holds faculty appointments in the computer science department and the Interdepartmental Ph.D. program in neuroscience.

The research was funded by the National Institutes of Health and the Science Hub for Humanity and Artificial Intelligence, a collaboration between UCLA and Amazon. The UCLA Technology Development Group has applied for a patent related to the AI-BCI technology.
