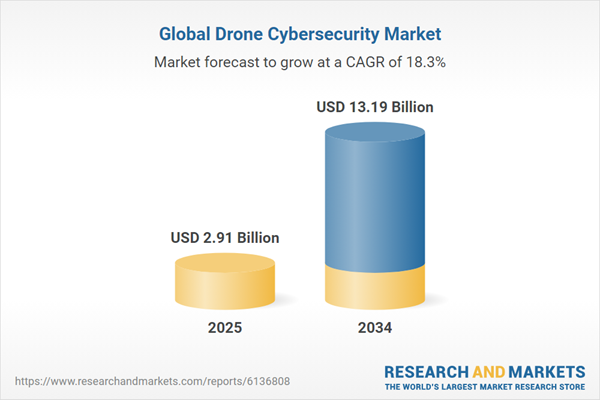

Dublin, Sept. 12, 2025 (GLOBE NEWSWIRE) — The “Drone Cybersecurity Market – A Global and Regional Analysis: Focus on Components, Drone Type, Application, and Regional Analysis – Analysis and Forecast, 2025-2034” report has been added to ResearchAndMarkets.com’s offering.

The drone cybersecurity market forms a critical segment of the broader unmanned aerial vehicle (UAV) and cybersecurity ecosystem. Advances in sensor technology, encrypted communication, AI-driven analytics, and blockchain integration are reshaping how drones mitigate cyber risks. Drone cybersecurity solutions encompass software, hardware, and managed services that together protect UAV operations against GPS spoofing, signal jamming, data breaches, and unauthorized control.

The market benefits from substantial investment in research and development aimed at improving threat detection accuracy, minimizing latency, and securing over-the-air firmware updates. Regulatory frameworks, particularly in the U.S., Europe, and Asia-Pacific, compel manufacturers and operators to meet stringent cybersecurity standards, which in turn drives adoption of cybersecurity measures. This regulatory emphasis fuels innovation in product offerings, including autonomous defense features and comprehensive incident response services.

Global Drone Cybersecurity Market Lifecycle Stage

The drone cybersecurity market is currently in a high-growth phase, propelled by accelerating UAV deployments in agriculture, defense, infrastructure inspection, and logistics. Key technologies have reached advanced readiness levels, supporting broad deployment. North America holds a significant market share owing to substantial defense spending and proactive regulatory policies, while Asia-Pacific shows rapid adoption driven by commercial applications and government initiatives.

Collaboration among cybersecurity firms, drone manufacturers, and government agencies is essential to delivering integrated security solutions. Market dynamics are shaped by evolving cyber threats, emerging drone use cases, and advances in AI and machine learning. The market is forecast to maintain strong momentum through 2034, supported by continuous technological innovation and the growing priority placed on UAV security in drone operations worldwide.

Drone Cybersecurity Market Key Players and Competition Synopsis

The drone cybersecurity market is dynamic and competitive, shaped by leading technology firms and specialized cybersecurity providers focused on unmanned aerial vehicle (UAV) security. Major global players such as Airbus Defence and Space, DroneShield, and Raytheon Technologies are central to advancing drone cybersecurity technologies. These companies develop threat detection systems, secure communication protocols, anti-jamming hardware, and AI-powered anomaly detection tools designed to protect drones from evolving cyber threats.

Alongside established leaders, emerging startups contribute solutions that address niche vulnerabilities and enable real-time response. Competition is intensified by strategic partnerships, continuous innovation, regulatory compliance demands, and increasing drone adoption across defense, commercial, and government sectors. As the market expands, players prioritize scalable, interoperable, and cost-effective security solutions that meet diverse operational requirements worldwide.

Demand Drivers and Limitations

The following are the demand drivers for the drone cybersecurity market:

- Growing drone use in critical applications

- Increasing sophistication of cyberattacks on UAVs

- Strict regulatory cybersecurity requirements

The drone cybersecurity market is also expected to face the following challenges:

- High implementation costs

- Drone technology evolving faster than security solutions

Prominent companies established in the drone cybersecurity market include:

- Airbus Defence and Space

- Palo Alto Networks

- Airspace Systems

- Boeing Defense, Space & Security

- BAE Systems plc

- DroneShield

- DroneSec

- Fortem Technologies

- Raytheon Technologies

- Israel Aerospace Industries Ltd. (IAI)

- General Dynamics Corporation

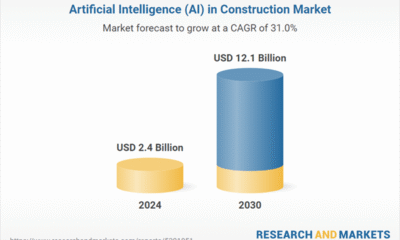

Key Attributes:

| Report Attribute | Details |
| --- | --- |
| No. of Pages | 140 |
| Forecast Period | 2025 – 2034 |
| Estimated Market Value (USD) in 2025 | $2.91 Billion |
| Forecasted Market Value (USD) by 2034 | $13.19 Billion |
| Compound Annual Growth Rate | 18.2% |
| Regions Covered | Global |
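As a quick consistency check on the Key Attributes table, the growth rate implied by the 2025 and 2034 market values can be computed with the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) - 1. The sketch below is illustrative only: it assumes nine compounding periods (2025 to 2034), uses only the two dollar values from the table, and the function name `implied_cagr` is hypothetical rather than anything taken from the report.

```python
def implied_cagr(start_value: float, end_value: float, years: int) -> float:
    """Return the compound annual growth rate as a fraction (0.18 means 18%)."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Market values from the Key Attributes table, in USD billions.
start_2025 = 2.91
end_2034 = 13.19
years = 2034 - 2025  # assumption: nine compounding periods

rate = implied_cagr(start_2025, end_2034, years)
print(f"Implied CAGR: {rate:.1%}")  # prints "Implied CAGR: 18.3%"

# Forward check: grow the 2025 value at the reported 18.2% for the same nine years.
projected = start_2025 * (1 + 0.182) ** years
# Lands at roughly $13.1 billion, slightly below the table's $13.19 billion.
print(f"2034 value at 18.2%: ${projected:.2f} billion")
```

The implied rate of about 18.3% is close to the reported 18.2%; the small gap may reflect rounding in the published dollar figures or a slightly different base-year convention in the underlying report.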

Key Topics Covered:

1. Markets: Industry Outlook

1.1 Trends: Current and Future Impact Assessment

1.2 Market Dynamics Overview

1.2.1 Market Drivers

1.2.2 Market Restraints

1.2.3 Market Opportunities

1.3 Impact of Regulatory and Environmental Policies

1.4 Patent Analysis

1.4.1 By Year

1.4.2 By Region

1.5 Technology Trends and Innovations

1.6 Cyber Threats and Risk Assessment

1.7 Investment Landscape and R&D Trends

1.8 Value Chain Analysis

1.9 Industry Attractiveness

2. Global Drone Cybersecurity Market (by Components)

2.1 Software

2.2 Hardware

2.3 Services

3. Global Drone Cybersecurity Market (by Drone Type)

3.1 Fixed Wing

3.2 Rotary Wing

3.3 Hybrid

4. Global Drone Cybersecurity Market (by Application)

4.1 Manufacturing

4.2 Military and Defense

4.3 Agriculture

4.4 Logistics and Transportation

4.5 Surveillance and Monitoring

4.6 Others

5. Global Drone Cybersecurity Market (by Region)

5.1 Global Drone Cybersecurity Market (by Region)

5.2 North America

5.2.1 Regional Overview

5.2.2 Driving Factors for Market Growth

5.2.3 Factors Challenging the Market

5.2.4 Key Companies

5.2.5 Components

5.2.6 Drone Type

5.2.7 Application

5.2.8 North America (by Country)

5.2.8.1 U.S.

5.2.8.1.1 Market by Components

5.2.8.1.2 Market by Drone Type

5.2.8.1.3 Market by Application

5.2.8.2 Canada

5.2.8.2.1 Market by Components

5.2.8.2.2 Market by Drone Type

5.2.8.2.3 Market by Application

5.2.8.3 Mexico

5.2.8.3.1 Market by Components

5.2.8.3.2 Market by Drone Type

5.2.8.3.3 Market by Application

5.3 Europe

5.4 Asia-Pacific

5.5 Rest-of-the-World

6. Competitive Benchmarking & Company Profiles

6.1 Next Frontiers

6.2 Geographic Assessment

6.3 Company Profiles

6.3.1 Overview

6.3.2 Top Products/Product Portfolio

6.3.3 Top Competitors

6.3.4 Target Customers

6.3.5 Key Personnel

6.3.6 Analyst View

6.3.7 Market Share

For more information about this report, visit https://www.researchandmarkets.com/r/mhm1qg

About ResearchAndMarkets.com

ResearchAndMarkets.com is the world’s leading source for international market research reports and market data. We provide you with the latest data on international and regional markets, key industries, the top companies, new products and the latest trends.