AI Insights

Artificial Intelligence Consultant Ashley Gross Shares Details on Pittsboro Commissioner Candidacy

Among the eight candidates looking to connect with voters in Pittsboro this fall is Ashley Gross, an artificial intelligence advocate, consultant and course creator.

Gross filed to run for the town government’s Board of Commissioners in July, joining a crowded race to replace the outgoing Pamela Baldwin and James Vose. A resident of the Vineyards neighborhood of Chatham Park, she works as a keynote speaker and consultant for businesses looking to learn more about AI practices in the emerging technology space. She leads her own consulting company and is the CEO of the organization AI Workforce Alliance.

In an email to Chapelboro, Gross described herself as “a mom who loves this little corner of the world we call home” and as committed to the area. In describing her motivation to run, she incorrectly stated that she was running for a county commissioner seat. She said helping the greater Pittsboro community feel connected and supported with a variety of resources is key amid the town’s ongoing growth.

“I see the push and pull between people who have called Chatham home for generations and those who are just discovering it,” Gross said. “I believe that our differences are not barriers. They are opportunities to learn from each other. My strength is sitting down with people, even when we disagree, and finding the common ground we share. I am a researcher and an experimenter by nature, and I have seen that the most successful communities are built when people come together around shared interests and goals. That is the kind of leadership I want to bring, one that unites us instead of dividing us.”

Gross cited uplifting small businesses to help maintain the local economy as a key priority, along with public safety and investments in local infrastructure.

“Safe roads, modern emergency response systems, and preparation for the weather risks we face mean families can feel secure no matter what comes our way,” she said. “And as we grow, I will focus on smart development that keeps our small town character intact while building the infrastructure we need for the future.”

Other priorities the Pittsboro resident listed include strong local schools, improved partnerships with local colleges and reliable internet access for each home and business, all issues that fall more under the purview of the Chatham County government than the town government.

When describing what she is looking forward to during her campaign for Pittsboro’s Board of Commissioners, Gross wrote that she wants to hear directly from residents about their “concerns, hopes and ideas” while listening and using “data and common sense” to inform her policy decisions.

“Every choice I make,” Gross wrote, “will be guided by a simple question: will this keep our families safe, connected, and thriving? At the end of the day, I am just a mom who believes Chatham is at its best when we work as one community, where families stay close, opportunities grow here, and every neighbor feels they belong.”

Gross will be on the ballot along with Freda Alston, Alex M. Brinker, Corey Forrest, Candace Hunziker, Tobais Palmer, Nikkolas Shramek and Tiana Thurber. The two commissioner candidates who receive the most votes will serve four-year terms on the five-seat town board alongside Pittsboro Mayor Kyle Shipp, who is running unopposed for re-election.

Election Day for the 2025 fall cycle will be Tuesday, Nov. 4, with early voting in Chatham County’s municipal elections beginning on Thursday, Oct. 16.

Featured image via Ashley Gross.



Perplexity Valuation Hits $20 Billion Following New Funding Round

Artificial intelligence (AI) search startup Perplexity AI has reportedly secured $200 million in new funding.


Patients turn to AI to interpret lab tests, with mixed results

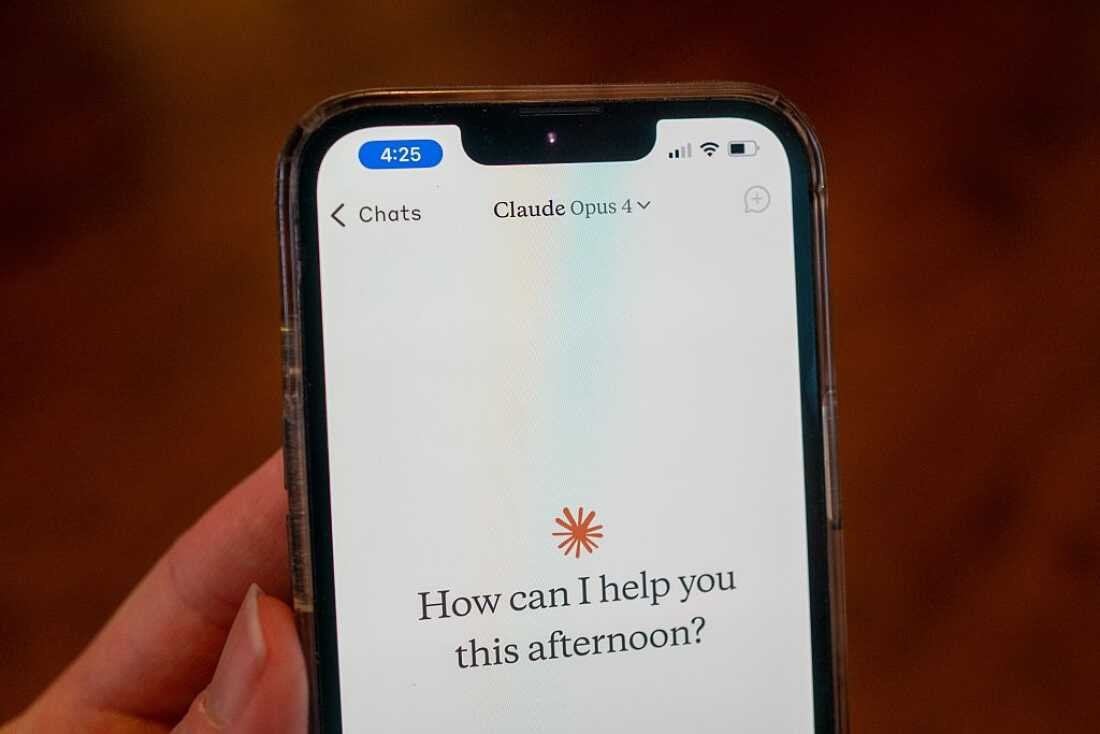

People are turning to chatbots like Claude to get help interpreting their lab test results.

Smith Collection/Gado/Archive Photos/Getty Images


When Judith Miller had routine blood work done in July, she got a phone alert the same day that her lab results were posted online. So, when her doctor messaged her the next day that overall her tests were fine, Miller wrote back to ask about the elevated carbon dioxide and something called “low anion gap” listed in the report.

While the 76-year-old Milwaukee resident waited to hear back, Miller did something patients increasingly do when they can’t reach their health care team. She put her test results into Claude and asked the AI assistant to evaluate the data.

“Claude helped give me a clear understanding of the abnormalities,” Miller said. The generative AI model didn’t report anything alarming, so she wasn’t anxious while waiting to hear back from her doctor, she said.

Patients have unprecedented access to their medical records, often through online patient portals such as MyChart, because federal law requires health organizations to immediately release electronic health information, such as notes on doctor visits and test results.

And many patients are using large language models, or LLMs, like OpenAI’s ChatGPT, Anthropic’s Claude, and Google’s Gemini, to interpret their records. That help comes with some risk, though. Physicians and patient advocates warn that AI chatbots can produce wrong answers and that sensitive medical information might not remain private.

But does AI know what it’s talking about?

Most adults are cautious about AI and health. Fifty-six percent of those who use or interact with AI are not confident that information provided by AI chatbots is accurate, according to a 2024 KFF poll. (KFF is a health information nonprofit that includes KFF Health News.)

That instinct is borne out in research.

“LLMs are theoretically very powerful and they can give great advice, but they can also give truly terrible advice depending on how they’re prompted,” said Adam Rodman, an internist at Beth Israel Deaconess Medical Center in Massachusetts and chair of a steering group on generative AI at Harvard Medical School.

Justin Honce, a neuroradiologist at UCHealth in Colorado, said it can be very difficult for patients who are not medically trained to know whether AI chatbots make mistakes.

“Ultimately, it’s just the need for caution overall with LLMs. With the latest models, these concerns are continuing to get less and less of an issue but have not been entirely resolved,” Honce said.

Rodman has seen a surge in AI use among his patients in the past six months. In one case, a patient took a screenshot of his hospital lab results on MyChart, then uploaded it to ChatGPT to prepare questions ahead of his appointment. Rodman said he welcomes patients’ showing him how they use AI, and that their research creates an opportunity for discussion.

Roughly 1 in 7 adults over 50 use AI to receive health information, according to a recent poll from the University of Michigan, while 1 in 4 adults under age 30 do so, according to the KFF poll.

Using the internet to advocate for better care for oneself isn’t new. Patients have traditionally used websites such as WebMD, PubMed, or Google to search for the latest research and have sought advice from other patients on social media platforms like Facebook or Reddit. But AI chatbots’ ability to generate personalized recommendations or second opinions in seconds is novel.

What to know: Watch out for “hallucinations” and privacy issues

Liz Salmi, communications and patient initiatives director at OpenNotes, an academic lab at Beth Israel Deaconess that advocates for transparency in health care, had wondered how good AI is at interpretation, specifically for patients.

In a proof-of-concept study published this year, Salmi and colleagues analyzed the accuracy of ChatGPT, Claude, and Gemini responses to patients’ questions about a clinical note. All three AI models performed well, but how patients framed their questions mattered, Salmi said. For example, telling the AI chatbot to take on the persona of a clinician and asking it one question at a time improved the accuracy of its responses.

Privacy is a concern, Salmi said, so it’s critical to remove personal information like your name or Social Security number from prompts. Data goes directly to tech companies that have developed AI models, Rodman said, adding that he is not aware of any that comply with federal privacy law or consider patient safety. Sam Altman, CEO of OpenAI, warned on a podcast last month about putting personal information into ChatGPT.

“Many people who are new to using large language models might not know about hallucinations,” Salmi said, referring to a response that may appear sensible but is inaccurate. For example, OpenAI’s Whisper, an AI-assisted transcription tool used in hospitals, introduced an imaginary medical treatment into a transcript, according to a report by The Associated Press.

Using generative AI demands a new type of digital health literacy that includes asking questions in a particular way, verifying responses with other AI models, talking to your health care team, and protecting your privacy online, said Salmi and Dave deBronkart, a cancer survivor and patient advocate who writes a blog devoted to patients’ use of AI.

Physicians must be cautious with AI too

Patients aren’t the only ones using AI to explain test results. Stanford Health Care has launched an AI assistant that helps its physicians draft interpretations of clinical tests and lab results to send to patients.

Colorado researchers studied the accuracy of ChatGPT-generated summaries of 30 radiology reports, along with four patients’ satisfaction with them. Of the 118 valid responses from patients, 108 indicated the ChatGPT summaries clarified details about the original report.

But ChatGPT sometimes overemphasized or underemphasized findings, and a small but significant number of responses indicated patients were more confused after reading the summaries, said Honce, who participated in the preprint study.

Meanwhile, after four weeks and a couple of follow-up messages from Miller in MyChart, Miller’s doctor ordered a repeat of her blood work and an additional test that Miller suggested. The results came back normal. Miller was relieved and said she was better informed because of her AI inquiries.

“It’s a very important tool in that regard,” Miller said. “It helps me organize my questions and do my research and level the playing field.”

KFF Health News is a national newsroom that produces in-depth journalism about health issues and is one of the core operating programs at KFF.

