Education

Teachers can use AI to save time on marking, new guidance says

Getty Images

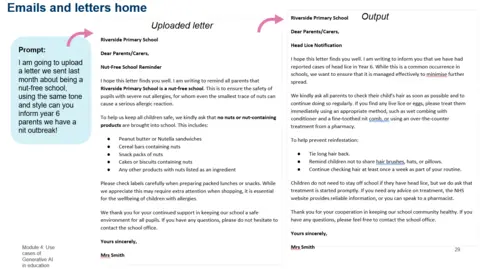

Teachers in England can use artificial intelligence (AI) to speed up marking and write letters home to parents, new government guidance says.

Training materials being distributed to schools, first seen exclusively by the BBC, say teachers can use the technology to “help automate routine tasks” and focus instead on “quality face-to-face time”.

Teachers should be transparent about their use of AI and always check its results, the Department for Education (DfE) said.

The Association of School and College Leaders (ASCL) said it could “free up time for face-to-face teaching” but there were still “big issues” to be resolved.

BCS, the Chartered Institute for IT, said it was an “important step forward” but teachers would “want clarity on exactly how they should be telling… parents where they’ve used AI”.

Teachers and pupils have already been experimenting with AI, and the DfE has previously supported its use among teachers.

However, this is the first time it has produced training materials and guidance for schools outlining how they should and should not use it.

The DfE says AI should only be used for “low-stakes” marking such as quizzes or homework, and teachers must check its results.

The materials also give teachers permission to use AI to write “routine” letters to parents.

One section demonstrates how it could be used to generate a letter about a head lice outbreak, for example.


Emma Darcy, a secondary school leader who works as a consultant to support other schools with AI and digital strategy, said teachers had “almost a moral responsibility” to learn how to use it because pupils were already doing so “in great depth”.

“If we’re not using these tools ourselves as educators, we’re not going to be able to confidently support our young people with using them,” she said.

But she warned that the opportunities were accompanied by risks such as “potential data breaches” and marking errors.

“AI can come up with made-up quotes, facts [and] information,” she said. “You have to make sure that you don’t outsource whatever you’re doing fully to AI.”

The DfE guidance says schools should have clear policies on AI, including when teachers and pupils can and cannot use it, and that manual checks are the best way to spot whether students are using it to cheat.

It also says only approved tools should be used and pupils should be taught to recognise deepfakes and other misinformation.

Education Secretary Bridget Phillipson said the guidance aimed to “cut workloads”.

“We’re putting cutting-edge AI tools into the hands of our brilliant teachers to enhance how our children learn and develop – freeing teachers from paperwork so they can focus on what parents and pupils need most: inspiring teaching and personalised support,” she said.

Pepe Di’Iasio, ASCL general secretary, said many schools and colleges were already “safely and effectively using AI” and that it had the potential to ease heavy staff workloads and, as a result, help with recruitment and retention challenges.

“However, there are some big issues,” he added. “Budgets are extremely tight because of the huge financial pressures on the education sector and realising the potential benefits of AI requires investment.”

Research from BCS, the Chartered Institute for IT, at the end of last year suggested that most teachers were not using AI, and there was a worry among those who were about telling their school.

But Julia Adamson, its managing director for education, said the guidance “feels like an important step forward”.

She added: “Teachers will want clarity on exactly how they should be telling those parents where they’ve used AI, for example in writing emails, to avoid additional pressures and reporting burdens.”

The Scottish and Welsh governments have both said AI can support with tasks such as marking, as long as it is used professionally and responsibly.

And in Northern Ireland, last week education minister Paul Givan announced that a study by Oxford Brookes University would evaluate how AI could improve education outcomes for some pupils.



9 AI Ethics Scenarios (and What School Librarians Would Do)

A common refrain about artificial intelligence in education is that it’s a research tool, and as such, some school librarians are acquiring firsthand experience with its uses and controversies.

Leading a presentation last week at the ISTELive 25 + ASCD annual conference in San Antonio, a trio of librarians parsed appropriate and inappropriate uses of AI in a series of hypothetical scenarios. They broadly recommended that schools have, and clearly articulate, official policies governing AI use and be cautious about inputting copyrighted or private information.

Amanda Hunt, a librarian at Oak Run Middle School in Texas, said their presentation would focus on scenarios because librarians are experiencing so many.

“The reason we did it this way is because these scenarios are coming up,” she said. “Every day I’m hearing some other type of question in regards to AI and how we’re using it in the classroom or in the library.”

- Scenario 1: A class encourages students to use generative AI for brainstorming, outlining and summarizing articles.

Elissa Malespina, a teacher librarian at Science Park High School in New Jersey, said she felt this was a valid use, as she has found AI to be helpful for high schoolers who are prone to get overwhelmed by research projects.

Ashley Cooksey, an assistant professor and school library program director at Arkansas Tech University, disagreed slightly: While she appreciates AI’s ability to outline and brainstorm, she said, she would discourage her students from using it to synthesize summaries.

“Point one on that is that you’re not using your synthesis and digging deep and reading the article for yourself to pull out the information pertinent to you,” she said. “Point No. 2 — I publish, I write. If you’re in higher ed, you do that. I don’t want someone to put my work into a piece of generative AI and an [LLM] that is then going to use work I worked very, very hard on to train its language learning model.”

- Scenario 2: A school district buys an AI tool that generates student book reviews for a library website, which saves time and promotes titles but misses key themes or introduces unintended bias.

All three speakers said this use of AI could certainly be helpful to librarians, but if the reviews are labeled in a way that makes it sound like they were written by students when they weren’t, that wouldn’t be ethical.

- Scenario 3: An administrator asks a librarian to use AI to generate new curriculum materials and library signage. Do the outputs violate copyright or proper attribution rules?

Hunt said the answer depends on local and district regulations, but she recommended using Adobe Express because it doesn’t pull from the Internet.

- Scenario 4: An ed-tech vendor pitches a school library on an AI tool that analyzes circulation data and automatically recommends titles to purchase. It learns from the school’s preferences but often excludes lesser-known topics or authors of certain backgrounds.

Hunt, Malespina and Cooksey agreed that this would be problematic, especially because entering circulation data could include personally identifiable information, which should never be entered into an AI.

- Scenario 5: At a school that doesn’t have a clear AI policy, a student uses AI to summarize a research article and gets accused of plagiarism. Who is responsible, and what is the librarian’s role?

The speakers as well as polled audience members tended to agree the school district would be responsible in this scenario. Without a policy in place, the school will have a harder time establishing whether a student’s behavior constitutes plagiarism.

Cooksey emphasized the need for ongoing professional development, and Hunt said any districts that don’t have an official AI policy need steady pressure until they draft one.

“I am the squeaky wheel right now in my district, and I’m going to continue to be annoying about it, but I feel like we need to have something in place,” Hunt said.

- Scenario 6: Attempting to cause trouble, a student creates a deepfake of a teacher acting inappropriately. Administrators struggle to respond, they have no specific policy in place, and trust is shaken.

Again, the speakers said this is one more example to illustrate the importance of AI policies as well as AI literacy.

“We’re getting to this point where we need to be questioning so much of what we see, hear and read,” Hunt said.

- Scenario 7: A pilot program uses AI to provide instant feedback on student essays, but English language learners consistently get lower scores, leading teachers to worry the AI system can’t recognize code-switching or cultural context.

In response to this situation, Hunt said it’s important to know whether the parent has given their permission to enter student essays into an AI, and the teacher or librarian should still be reading the essays themselves.

Malespina and Cooksey both cautioned against relying on AI plagiarism detection tools.

“None of these tools can do a good enough job, and they are biased toward [English language learners],” Malespina said.

- Scenario 8: A school-approved AI system flags students who haven’t checked out any books recently, tracks their reading speed and completion patterns, and recommends interventions.

Malespina said she doesn’t want an AI tool tracking students in that much detail, and Cooksey pointed out that reading speed and completion patterns aren’t reliably indicative of anything that teachers need to know about students.

- Scenario 9: An AI tool translates texts, reads books aloud and simplifies complex texts for students with individualized education programs, but it doesn’t always translate nuance or tone.

Hunt said she sees benefit in this kind of application for students who need extra support, but she said the loss of tone could be an issue, and it raises questions about infringing on audiobook copyright laws.

Cooksey expounded upon that.

“Additionally, copyright goes beyond the printed work. … That copyright owner also owns the presentation rights, the audio rights and anything like that,” she said. “So if they’re putting something into a generative AI tool that reads the PDF, that is technically a violation of copyright in that moment, because there are available tools for audio versions of books for this reason, and they’re widely available. Sora is great, and it’s free for educators. … But when you’re talking about taking something that belongs to someone else and generating a brand-new copied product of that, that’s not fair use.”


Blunkett urges ministers to use ‘incredible sensitivity’ in changing Send system in England

Ministers must use “incredible sensitivity” in making changes to the special educational needs system, former education secretary David Blunkett has said, as the government is urged not to drop education, health and care plans (EHCPs).

Lord Blunkett, who went through the special needs system when attending a residential school for blind children, said ministers would have to tread carefully.

The former home secretary in Tony Blair’s government also urged the government to reassure parents that it was looking for “a meaningful replacement” for EHCPs, which guarantee more than 600,000 children and young people individual support in learning.

Blunkett said he sympathised with the challenge facing Bridget Phillipson, the education secretary, saying: “It’s absolutely clear that the government will need to do this with incredible sensitivity and with a recognition it’s going to be a bumpy road.”

He said government proposals due in the autumn to reexamine Send provision in England were not the same as welfare changes, largely abandoned last week, which were aimed at reducing spending. “They put another billion in [to Send provision] and nobody noticed,” Blunkett said, adding: “We’ve got to reduce the fear of change.”

Earlier Helen Hayes, the Labour MP who chairs the cross-party Commons education select committee, called for Downing Street to commit to EHCPs, saying this was the only way to combat mistrust among many families with Send children.

“I think at this stage that would be the right thing to do,” she told BBC Radio 4’s Today programme. “We have been looking, as the education select committee, at the Send system for the last several months. We have heard extensive evidence from parents, from organisations that represent parents, from professionals and from others who are deeply involved in the system, which is failing so many children and families at the moment.

“One of the consequences of that failure is that parents really have so little trust and confidence in the Send system at the moment. And the government should take that very seriously as it charts a way forward for reform.”

A letter to the Guardian on Monday, signed by dozens of special needs and disability charities and campaigners, warned against government changes to the Send system that would restrict or abolish EHCPs.

Labour MPs who spoke to the Guardian are worried ministers are unable to explain essential details of the special educational needs shake-up being considered in the schools white paper to be published in October.

Downing Street has refused to rule out ending EHCPs, while stressing that no decisions have yet been taken ahead of a white paper on Send provision to be published in October.

Keir Starmer’s deputy spokesperson said: “I’ll just go back to the broader point that the system is not working and is in desperate need of reform. That’s why we want to actively work with parents, families, parliamentarians to make sure we get this right.”


Speaking later in the Commons, Phillipson said there was “no responsibility I take more seriously” than that to more vulnerable children. She said it was a “serious and complex area” that “we as a government are determined to get right”.

The education secretary said: “There will always be a legal right to the additional support children with Send need, and we will protect it. But alongside that, there will be a better system with strengthened support, improved access and more funding.”

Dr Will Shield, an educational psychologist from the University of Exeter, said rumoured proposals that limit EHCPs – potentially to pupils in special schools – were “deeply problematic”.

Shield said: “Mainstream schools frequently rely on EHCPs to access the funding and oversight needed to support children effectively. Without a clear, well-resourced alternative, families will fear their children are not able to access the support they need to achieve and thrive.”

Paul Whiteman, general secretary of the National Association of Head Teachers, said: “Any reforms in this space will likely provoke strong reactions and it will be crucial that the government works closely with both parents and schools every step of the way.”
