Tools & Platforms

AI Apocalypse? Why language surrounding tech is sounding increasingly religious

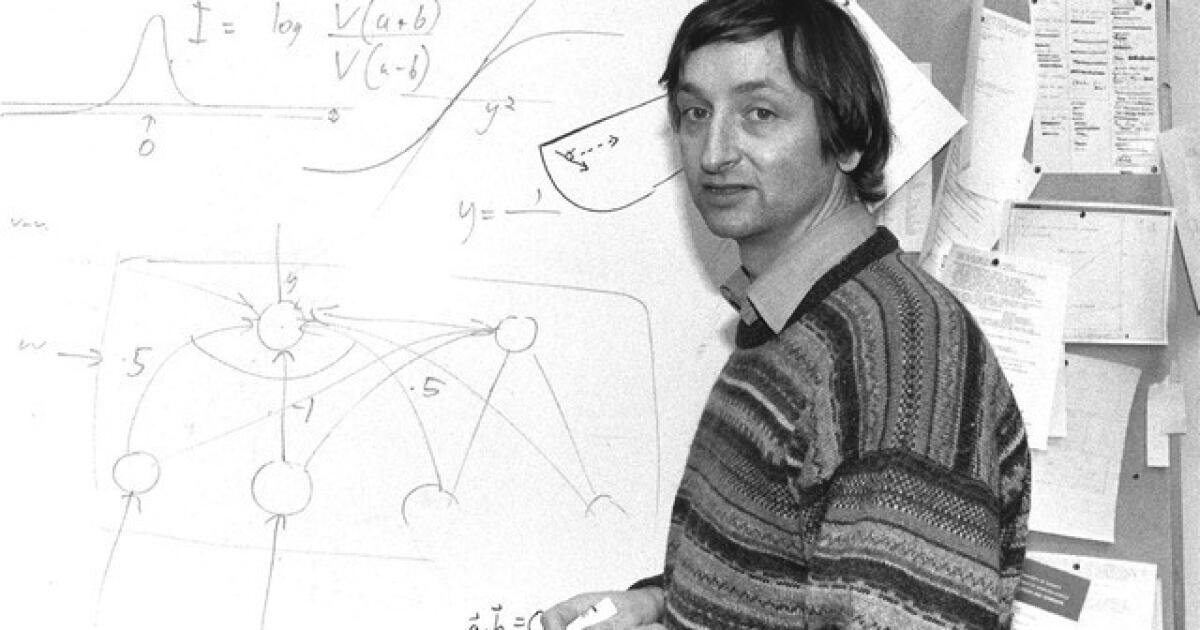

At 77 years old, Geoffrey Hinton has a new calling in life. Like a modern-day prophet, the Nobel Prize winner is raising alarms about the dangers of uncontrolled and unregulated artificial intelligence.

Frequently dubbed the “Godfather of AI,” Hinton is known for his pioneering work on deep learning and neural networks, which helped lay the foundation for the AI technology often used today. Feeling “somewhat responsible,” he began speaking publicly about his concerns in 2023 after he left his job at Google, where he worked for more than a decade.

As the technology — and investment dollars — powering AI have advanced in recent years, so too have the stakes behind it.

“It really is godlike,” Hinton said.

Hinton is among a growing number of prominent tech figures who speak of AI using language once reserved for the divine. OpenAI CEO Sam Altman has referred to his company’s technology as a “magic intelligence in the sky,” while Peter Thiel, the co-founder of PayPal and Palantir, has even argued that AI could help bring about the Antichrist.

Will AI bring condemnation or salvation?

There are plenty of skeptics who doubt the technology merits this kind of fear, including Dylan Baker, a former Google employee and lead research engineer at the Distributed AI Research Institute, which studies the harmful impacts of AI.

“I think oftentimes they’re operating from magical fantastical thinking informed by a lot of sci-fi that presumably they got in their formative years,” Baker said. “They’re really detached from reality.”

Although chatbots like ChatGPT only recently penetrated the zeitgeist, certain Silicon Valley circles have prophesied of AI’s power for decades.

“We’re trying to wake people up,” Hinton said. “To get the public to understand the risks so that the public pressures politicians to do something about it.”

While researchers like Hinton are warning about the existential threat they believe AI poses to humanity, there are CEOs and theorists on the other side of the spectrum who argue we are approaching a kind of technological apocalypse that will usher in a new age of human evolution.

In an essay published last year titled “Machines of Loving Grace: How AI Could Transform the World for the Better,” Anthropic CEO Dario Amodei lays out his vision for a future “if everything goes right with AI.”

The AI entrepreneur predicts “the defeat of most diseases, the growth in biological and cognitive freedom, the lifting of billions of people out of poverty to share in the new technologies, a renaissance of liberal democracy and human rights.”

While Amodei opts for the phrase “powerful AI,” others use terms like “the singularity” or “artificial general intelligence (AGI).” Though proponents of these concepts don’t often agree on how to define them, they refer broadly to a hypothetical future point at which AI will surpass human-level intelligence, potentially triggering rapid, irreversible changes to society.

Computer scientist and author Ray Kurzweil has been predicting since the 1990s that humans will one day merge with technology, a concept often called transhumanism.

“We’re not going to actually tell what comes from our own brain versus what comes from AI. It’s all going to be embedded within ourselves. And it’s going to make ourselves more intelligent,” Kurzweil said.

In his latest book, “The Singularity Is Nearer: When We Merge with AI,” Kurzweil doubles down on his earlier predictions. He believes that by 2045 we will have “multiplied our own intelligence a millionfold.”

“Yes,” he eventually conceded when asked if he considers AI to be his religion. It informs his sense of purpose.

“My thoughts about the future and the future of technology and how quickly it’s coming definitely affects my attitudes towards being here and what I’m doing and how I can influence other people,” he said.

Visions of the apocalypse bubble up

Despite Thiel’s explicit invocation of language from the Book of Revelation, the positive visions of an AI future are more “apocalyptic” in the historical sense of the word.

“In the ancient world, apocalyptic is not negative,” explains Domenico Agostini, a professor at the University of Naples L’Orientale who studies ancient apocalyptic literature. “We’ve completely changed the semantics of this word.”

The term “apocalypse” comes from the Greek word “apokalypsis,” meaning “revelation.” Although often associated today with the end of the world, apocalypses in ancient Jewish and Christian thought were a source of encouragement in times of hardship or persecution.

“God is promising a new world,” said Professor Robert Geraci, who studies religion and technology at Knox College. “In order to occupy that new world, you have to have a glorious new body that triumphs over the evil we all experience.”

Geraci first noticed apocalyptic language being used to describe AI’s potential in the early 2000s. Kurzweil and other theorists eventually inspired him to write his 2010 book, “Apocalyptic AI: Visions of Heaven in Robotics, Artificial Intelligence, and Virtual Reality.”

The language reminded him of early Christianity. “Only we’re gonna slide out God and slide in … your pick of cosmic science laws that supposedly do this and then we were going to have the same kind of glorious future to come,” he said.

Geraci argues this kind of language hasn’t changed much since he began studying it. What surprises him is how pervasive it has become.

“What was once very weird is kind of everywhere,” he said.

Has Silicon Valley finally found its God?

One factor in the growing cult of AI is profitability.

“Twenty years ago, that fantasy, true or not, wasn’t really generating a lot of money,” Geraci said. Now, though, “there’s a financial incentive to Sam Altman saying AGI is right around the corner.”

But Geraci, who argues ChatGPT “isn’t even remotely, vaguely, plausibly conscious,” believes there may be more driving this phenomenon.

Historically, the tech world has been notoriously devoid of religion. Its secular reputation had so preceded it that one episode of the satirical HBO comedy series, “Silicon Valley,” revolves around “outing” a co-worker as Christian.

Rather than viewing the skeptical tech world’s veneration of AI as ironic, Geraci believes they’re causally linked.

“We human beings are deeply, profoundly, inherently religious,” he said, adding that the impressive technologies behind AI might appeal to people in Silicon Valley who have already pushed aside “ordinary approaches to transcendence and meaning.”

No religion is without skeptics

Not every Silicon Valley CEO has been converted — even if they want in on the tech.

“When people in the tech industry talk about building this one true AI, it’s almost as if they think they’re creating God or something,” Meta CEO Mark Zuckerberg said on a podcast last year as he promoted his company’s own venture into AI.

Although transhumanist theories like Kurzweil’s have become more widespread, they are still not ubiquitous within Silicon Valley.

“The scientific case for that is in no way stronger than the case for a religious afterlife,” argues Max Tegmark, a physicist and machine learning researcher at the Massachusetts Institute of Technology.

Like Hinton, Tegmark has been outspoken about the potential risks of unregulated AI. In 2023, as president of the Future of Life Institute, Tegmark helped spearhead an open letter calling for powerful AI labs to “immediately pause” the training of their systems.

The letter collected more than 33,000 signatures, including from Elon Musk and Apple co-founder Steve Wozniak. Tegmark considers the letter to have been successful because it helped “mainstream the conversation” about AI safety, but believes his work is far from over.

With regulations and safeguards, Tegmark thinks AI can be used as a tool to do things like cure diseases and increase human productivity. But it is imperative, he argues, to stay away from the “quite fringe” race that some companies are running — “the pseudoreligious pursuit to try to build an alternative God.”

“There are a lot of stories, both in religious texts and in, for example, ancient Greek mythology, about how when we humans start playing gods, it ends badly,” he said. “And I feel there’s a lot of hubris in San Francisco right now.”

Fauria writes for the Associated Press.


Gen AI: Faster Than a Speeding Bullet, Not So Great at Leaping Tall Buildings


by Christine Carmichael

This article was going to be a recap of my March 2025 presentation at the Computers in Libraries conference (computersinlibraries.infotoday.com).

However, AI technology and the industry are experiencing not just rapid growth, but also rapid change. And as we info pros know, not all change is good. Sprinkled throughout this article are mentions of changes since March, some in the speeding-bullet category, others in the not-leaping-tall-buildings one.

Getting Started

I admit to being biased—after all, Creighton University is in my hometown of Omaha—but what makes Creighton special is how the university community lives out its Jesuit values. That commitment to caring for the whole person, caring for our common home, educating men and women to serve with and for others, and educating students to be agents of change has never been more evident in my 20 years here than in the last 2–3 years.

An adherence to those purposes has allowed people from across the campus to come together easily and move forward quickly. Not in a “Move fast and break things” manner, but with an attitude of “We have to face this together if we are going to teach our students effectively.” The libraries’ interest in AI officially started after OpenAI launched ChatGPT, built on GPT-3.5, in November 2022.

Confronted with this new technology, my library colleagues and I gorged ourselves on AI-related information, attending workshops from different disciplines, ferreting out article “hallucinations,” and experimenting with ways to include information about generative AI (gen AI) tools in information literacy instruction sessions. Elon University shared its checklist, which we then included in our “Guide to Artificial Intelligence” (culibraries.creighton.edu/AI).

(Fun fact: Since August 2024, this guide has received approximately 1,184 hits—it’s our fourth-highest-ranked guide.)


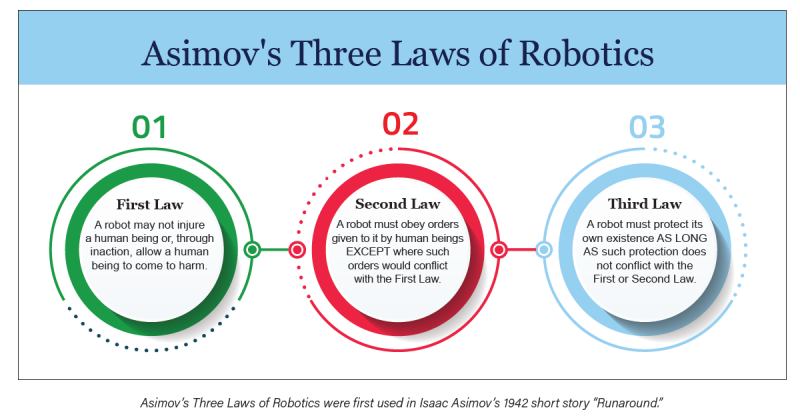

Two things inspired and convinced me to do more and take a lead role with AI at Creighton. First was my lifelong love affair with science fiction literature. Anyone familiar with classic science fiction should recognize Asimov’s Three Laws of Robotics. First used in Isaac Asimov’s 1942 short story “Runaround,” they are said to have come from the Handbook of Robotics, 56th Edition, published in 2058. Librarians should certainly appreciate this scenario of an author of fiction citing a fictional source. In our current environment, where gen AI “hallucinates” citations, it doesn’t get more AI than that!

Whether or not these three laws/concepts really work in a scientific sense, you could extrapolate some further guidance for regulating development and deployment of these new technologies to mitigate harm. Essentially, it’s applying the Hippocratic oath to AI.


The second inspiration came from a February 2023 CNN article by Samantha Murphy Kelly (“The Way We Search for Information Online Is About to Change”; cnn.com/2023/02/09/tech/ai-search/index.html), which claims that the way we search for information is never going to be the same. I promptly thought to myself, “Au contraire, mon frère, we have been here before,” and posted a response on Medium (“ChatGPT Won’t Fix the Fight Against Misinformation”; medium.com/@christine.carmichael/chatgpt-wont-fix-the-fight-against-misinformation-15c1505851c3).

What Happened Next

Having a library director whose research passion is AI and ethics enabled us to act as a central hub for convening meetings and to keep track of what is happening with AI throughout the campus. We are becoming a clearinghouse for sharing information about projects, learning opportunities, student engagement, and faculty development, as well as pursuing library-specific projects.

After classes started in fall 2024, the director of Magis Core Curriculum and the program director for COM 101, a co-curricular course requirement that meets oral communication and information literacy learning objectives, approached two research and instruction librarians, one being me. They asked us to create a module for our Library Encounter Online course (required in COM 101) to explain some background of AI and how large language models (LLMs) work. The new module was in place for spring 2025 classes.

In November 2024, the library received a $250,000 Google grant, which allowed us to subscribe to and test three different AI research assistant tools—Scopus, Keenious, and Scite. We split into three teams, each one responsible for becoming the experts on a particular tool. After presenting train-the-trainer sessions to each other, we restructured our teams to discuss the kinds of instruction options we could use: long, short, online (bite-sized).

We turned on Scopus AI in late December 2024. Team Scopus learned the tool’s ins and outs and describes it as “suitable for general researchers, upper-level undergraduates, graduate/professional students, medical residents, and faculty.” Aspects of the tool are particularly helpful for discovering trends and gaps in research areas. The team’s one remaining concern is the generation of irrelevant references, which it noticed particularly when comparing the same types of searches in “regular” Scopus vs. Scopus AI.

Team Keenious was very enthusiastic and used an evaluation rubric from Rutgers University (credit where it’s due!). The librarians’ thoughts currently are that this tool is doing fairly well at “being a research recommendation tool” for advanced researchers. It has also successfully expanded the “universe” of materials used in systematic reviews. However, reservations remain as to its usefulness for undergraduate assignments, particularly when that group of students may not possess the critical thinking skills necessary to effectively use traditional library resources.

Scite is the third tool in our pilot. The platform is designed to help researchers evaluate the reliability of research papers using three categories: citations supporting claims, citations contrasting claims, and citations without either. Team Scite.ai liked the tool but recognized that it has a sizable learning curve. One Team Scite.ai member summed up their evaluation like this: “Much like many libraries, Scite.ai tries to be everything to everyone, but it lacks the helpful personal guide-on-the-side that libraries provide.”

The official launch and pilot of these tools began in April 2025 for faculty. We will introduce them to students in the fall 2025 semester. However, because the Scopus AI tool is already integrated and sports a prominent position on the search page, we anticipate folks using that tool before we get an opportunity to market it.

The Google funding also enabled the Creighton University Libraries to partner with several departments across campus in support of a full-day faculty development workshop in June. “Educating the Whole Person in an AI-Driven World” brought almost 150 faculty and staff together for the common purpose of discerning (another key component of Jesuit education) where and how we will integrate AI literacies into the curriculum. In addition to breakout sessions, we were introduced to a gen AI SharePoint site, the result of a library collaboration with the Center for Faculty Excellence.

ETHICS AND AI

Our director is developing a Jesuit AI Ethics Framework. Based on Jesuit values and Catholic Social Teaching, the framework also addresses questions asked by Pope Francis and the Papal Encyclical, Laudato si’, which asks us to care for our common home by practicing sustainability. Because of our extensive health sciences programs, a good portion of this ethical framework will focus on how to maintain patient and student privacy when using AI tools.

Ethics is a huge umbrella. These are things we think bear deeper dives and reflection:

The labor question: AI as a form of automation

We had some concerns and questions about AI as it applies to the workforce. Is it good if AI takes people’s jobs or starts producing art? Where does that leave the people affected? Note that the same concerns were raised about automation in the Industrial Revolution and when digital art tablets hit the market.

Garbage in, garbage out (GIGO): AIs are largely trained on internet content, and much of that content is awful.

We were particularly concerned about the lack of transparency: If we don’t know what an AI model is trained on, can we trust it, especially in fields like healthcare and law, where there are very serious real-world implications? In the legal field, AI has reinforced existing biases, generally assuming People of Color (POCs) to be guilty.

Availability bias is also part of GIGO. AIs are biased by the nature of their training data and the nature of the technology itself (pattern recognition). Any AI trained on the internet is biased toward information from the modern era. Scholarly content is biased toward OA, and OA is biased by different field/author demographics that indicate who is more or less likely to publish OA.

AI cannot detect sarcasm, which may contribute to misunderstanding about meaning. AI chatbots and LLMs have repeatedly shown sexist, racist, and even white supremacist responses due to the nature of people online. What perspectives are excluded from training data (non-English content)? What about the lack of developer diversity (largely white Americans and those from India and East Asia)?

Privacy, illegal content sourcing, financial trust issues

Kashmir Hill’s 2023 book, Your Face Belongs to Us: A Secretive Startup’s Quest to End Privacy (Penguin Random House), provides an in-depth exposé on how facial recognition technology is being (mis)used. PayPal founder Peter Thiel invested $200,000 into a company called SmartCheckr, which eventually became Clearview AI, specializing in facial recognition. Thiel was a Facebook board member with a fiduciary responsibility at the same time that he was investing money in a company that was illegally scraping Facebook content. There is more to the Clearview AI story, including algorithmic bias issues with facial recognition. Stories like this raise questions about how to regulate this technology.

Environmental impacts

What about energy and water use and their climate impacts? This is from Scientific American: “A continuation of the current trends in AI capacity and adoption are set to lead to NVIDIA shipping 1.5 million AI server units per year by 2027. These 1.5 million servers, running at full capacity, would consume at least 85.4 terawatt-hours of electricity annually, more than what many small countries use in a year, according to the new assessment” (scientificamerican.com/article/the-ai-boom-could-use-a-shocking-amount-of-electricity).
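As a rough sanity check on the figures quoted above, the short Python sketch below divides the projected annual consumption by the number of servers to get an implied per-server draw. The variable names are ours, and the calculation assumes every server runs at full capacity all year (8,760 hours), as the quote describes; it is a back-of-the-envelope illustration, not part of the cited assessment.

```python
# Figures quoted from the Scientific American article.
servers = 1_500_000           # AI server units shipped per year by 2027
annual_twh = 85.4             # terawatt-hours per year at full capacity

KWH_PER_TWH = 1_000_000_000   # 1 TWh = 1e9 kWh
HOURS_PER_YEAR = 24 * 365     # ASSUMPTION: non-leap year, running nonstop

# Energy per server per year, then the average continuous power draw.
kwh_per_server = annual_twh * KWH_PER_TWH / servers
avg_kw_per_server = kwh_per_server / HOURS_PER_YEAR

print(f"{kwh_per_server:,.0f} kWh per server per year")
print(f"{avg_kw_per_server:.1f} kW average draw per server")
```

Under these assumptions, each server works out to roughly 57,000 kWh a year, or a continuous draw of about 6.5 kW.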

In May 2025, MIT Technology Review released a new report, “Power Hungry: AI and Our Energy Future” (technologyreview.com/supertopic/ai-energy-package), which states, “The rising energy cost of data centers is a vital test case for how we deal with the broader electrification of the economy.”

It’s Not All Bad News

There are days I do not even want to open my newsfeed because I know I will find another article detailing some new AI-infused horror, like this one from Futurism talking about people asking ChatGPT how to administer their own facial fillers (futurism.com/neoscope/chatgpt-advice-cosmetic-procedure-medicine). Then there is this yet-to-be-peer-reviewed article about what happens to your brain over time when using an AI writing assistant (“Your Brain on ChatGPT: Accumulation of Cognitive Debt When Using an AI Assistant for Essay Writing Task”; arxiv.org/pdf/2506.08872v1). It is reassuring to know that there are many, many people who are not invested in the hype, who are paying attention to how gen AI is or is not performing.

There are good things happening. For instance, ResearchRabbit and Elicit, two well-known AI research assistant tools, use the corpus of Semantic Scholar’s metadata as their training data. Keenious, one of the tools we are piloting, uses OpenAlex for its training corpus.

On either coast of the U.S. are two outstanding academic research centers focusing on ethics: Santa Clara University’s Markkula Center for Applied Ethics (scu.edu/ethics/focus-areas/internet-ethics) and the Berkman Klein Center for Internet and Society at Harvard University (cyber.harvard.edu).

FUTURE PLANS

We have projects and events planned for the next 2 years:

- Library director and School of Medicine faculty hosting a book club using Teaching With AI: Navigating the Future of Education

- Organizing an “unconference” called Research From All Angles Focusing on AI

- Hosting a Future of Work conference with Google

- Facilitating communities of practice around different aspects of AI: teaching, research, medical diagnostics, computing, patient interaction, etc.

As we get further immersed, our expectations of ourselves need to change. Keeping up with how fast the technology changes will be nigh on impossible. We must give ourselves and each other the grace to accept that we will never “catch up.” Instead, we will continue to do what we excel at—separating the truth from the hype, trying the tools for ourselves, chasing erroneous citations, teaching people to use their critical thinking skills every time they encounter AI, learning from each other, and above all, sharing what we know.


Is AI ‘The New Encyclopedia’? As The School Year Begins, Teachers Debate Using The Technology In The Classroom

As the school year gets underway, teachers across the country are dealing with a pressing quandary: whether or not to use AI in the classroom.

Ludrick Cooper, an eighth-grade teacher in South Carolina, told CNN that he’s been against the use of AI inside and outside the classroom for years, but is starting to change his tune.

“This is the new encyclopedia,” he said of AI.


There are certainly benefits to using AI in the classroom. It can make lessons more engaging, make access to information easier, and help with accessibility for those with visual impairments or conditions like dyslexia.

However, experts also have concerns about the negative impacts AI can have on students. Widening education inequalities, mental health impacts, and easier methods of cheating are among the main downsides of the technology.

“AI is a little bit like fire. When cavemen first discovered fire, a lot of people said, ‘Ooh, look what it can do,’” University of Maine Associate Professor of Special Education Sarah Howorth told CNN. “And other people are like, ‘Ah, it could kill us.’ You know, it’s the same with AI.”

Several existing platforms have developed specific AI tools to be used in the classroom. In July, OpenAI launched “Study Mode,” which offers students step-by-step guidance on classwork instead of just giving them an answer.


The company has also partnered with Instructure, the company behind the learning platform Canvas, to create a new tool called the LLM-Enabled Assignment. The tool will allow teachers to create AI-powered lessons while simultaneously tracking student progress.

“Now is the time to ensure AI benefits students, educators, and institutions, and partnerships like this are critical to making that happen,” OpenAI Vice President of Education Leah Belsky said in a statement.

While some teachers are excited by these advancements and the possibilities they create, others aren’t quite sold.

Stanford University Vice Provost for Digital Education Matthew Rascoff worries that tools like this one remove the social aspect of education. By helping just one person at a time, AI tools don’t allow opportunities for students to work on things like collaboration skills, which will be vital in their success as productive members of society.


AI Growth Overshadowed by Market Panic

This article first appeared on GuruFocus.

Sep 1 – Marvell Technology (NASDAQ:MRVL) saw its shares tumble nearly 18% on Friday after the company reported second-quarter results that came in slightly below Wall Street expectations. While the semiconductor maker missed revenue estimates by a narrow margin, the broader story shows a company still riding powerful growth trends in the data center and artificial intelligence markets.

The chipmaker posted quarterly revenue above $2 billion for the first time, representing 58% growth from a year earlier. Data center sales made up $1.5 billion, or roughly three-quarters of total revenue, underscoring Marvell’s reliance on AI-focused infrastructure spending by hyperscale customers such as Microsoft (NASDAQ:MSFT) and Alphabet (NASDAQ:GOOG). Other business lines, including enterprise networking, carrier infrastructure, consumer, and automotive, accounted for the remaining share.

On the profitability side, Marvell delivered $1.2 billion in gross profit with a non-GAAP margin of 59.4%. Adjusted net income more than doubled year over year to $585.5 million, reflecting the company’s improving operating leverage. Management also guided for third-quarter revenue of about $2.06 billion, which at the midpoint implies 36% growth.
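The ratios behind these figures can be reproduced with a few lines of Python. This is only an illustrative sketch: it assumes quarterly revenue of exactly $2.0 billion (the article says only "above $2 billion"), and the variable names are hypothetical.

```python
# Figures quoted in the article, in billions of US dollars.
# ASSUMPTION: "above $2 billion" is rounded to 2.0 for illustration.
revenue = 2.0
data_center_sales = 1.5
yoy_growth = 0.58             # 58% growth from a year earlier
q3_guidance = 2.06            # guided third-quarter revenue
q3_implied_growth = 0.36      # growth implied at the guidance midpoint

data_center_share = data_center_sales / revenue         # fraction of revenue
prior_year_revenue = revenue / (1 + yoy_growth)         # implied year-ago quarter
prior_year_q3 = q3_guidance / (1 + q3_implied_growth)   # implied year-ago Q3

print(f"Data center share of revenue: {data_center_share:.0%}")
print(f"Implied year-ago quarterly revenue: ${prior_year_revenue:.2f}B")
print(f"Implied year-ago Q3 revenue: ${prior_year_q3:.2f}B")
```

With these inputs, data center sales come to three-quarters of revenue, and the growth rates imply year-ago revenue of roughly $1.27 billion for the second quarter and $1.51 billion for the third.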

Despite the strong numbers, investors reacted negatively to the revenue miss and concerns over the foundry cycle, triggering the sharp sell-off. Analysts, however, note that Marvell trades below both its historical valuation multiples and the broader industry average. With data center capital spending accelerating and AI demand surging, many see the pullback as an opportunity rather than a red flag.

Marvell’s outlook suggests annualized revenue could surpass $10 billion by the end of fiscal 2026. While risks remain if hyperscalers slow AI spending or if margins weaken, the company’s positioning in semiconductors for cloud, AI, and networking continues to attract long-term investors.

Based on the one-year price targets offered by 36 analysts, the average target price for Marvell Technology Inc is $88.42, with a high estimate of $135.00 and a low estimate of $64.31. The average target implies an upside of 40.65% from the current price of $62.87.

Based on GuruFocus estimates, the estimated GF Value for Marvell Technology Inc in one year is $98.65, suggesting an upside of 56.92% from the current price of $62.87.
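For readers who want to check the upside percentages, a minimal Python sketch follows. The helper name `implied_upside` is hypothetical, and the inputs are the rounded prices quoted above, so the results may differ from the published percentages by about a hundredth of a percentage point.

```python
def implied_upside(target: float, current: float) -> float:
    """Percentage gain from the current price to the target price."""
    return (target - current) / current * 100.0


current_price = 62.87   # current share price quoted in the article
avg_target = 88.42      # average analyst one-year price target
gf_value = 98.65        # GuruFocus one-year GF Value estimate

analyst_upside = implied_upside(avg_target, current_price)
gf_upside = implied_upside(gf_value, current_price)

print(f"Analyst target upside: {analyst_upside:.2f}%")
print(f"GF Value upside: {gf_upside:.2f}%")
```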
