Ethics & Policy

Global AI Ethics and Governance Observatory

With its aspiration to join the ranks of upper middle-income economies, the Philippines is banking on the transformative benefits of Artificial Intelligence (AI). But in leaping into the AI revolution, the Philippines has yet to fully get its “house in order” to harness its true potential in AI adoption. Prevailing structural issues like poor digital infrastructure, siloed policymaking, bureaucratic inertia, lagging investments in national research and development, outdated legal and regulatory frameworks and guidelines, and fluctuating mobilization of public and private partnerships continue to undermine the Philippines’ velocity in the current scope and pace of digital transformation.

Fortunately, positive signs are on the horizon. The country’s lead AI policymaking bodies, namely, the Department of Trade and Industry (DTI), Department of Science and Technology (DOST), and the Department of Information and Communications Technology (DICT), vowed to accelerate the Philippines’ digital ambitions and address the emerging socio-economic and technological disruptions with the release of various AI national strategies, and roadmaps. The Department of Trade and Industry (DTI) launched the National AI Strategy Roadmap (NAISR) 2.0 in July 2024. NAISR 2.0 reflects the recent advancements in AI systems like generative AI (Gen AI), including the emerging debates and implications of ethics and governance. Similarly In June 2021, the Department of Science and Technology (DOST) rolled out its AI National Roadmap, noting its view on AI is deeply rooted from research and development mandate.

On governance, the Philippines has yet to enact legally binding rules and frameworks to regulate AI. Currently, various bills are currently pending in the Philippine Congress to ensuring the ethical and responsible adoption of AI through the establishment of Philippine Council on AI; and addressing AI’s impact to the manufacturing sector and the labor industry. A patchwork of existing laws, regulatory frameworks, and guidelines which span data privacy and security, innovation, freedom of information, and cybersecurity exist to address the prevailing regulatory gaps in AI policy and strategy. Several discussions with public and private sector experts and representatives reveal a “light touch” approach presents the most feasible pathway for the country in managing the balance innovation-regulation to AI.

By 2028, the tech industry in the Philippines is expected to generate 1.1 million new jobs. In 2024, the Philippines’ AI market size was projected to reach US$772.10M. The market is expected to grow in the coming years at the rate of 28.57 percent, possibly reaching a market volume of US$3,4877.00M by 2030.

However, the lack of essential skilled labor can hamstring the country’s prospects. According to a UNESCAP study, almost 90 percent of the Filipino population lacks basic ICT skills such as word-processing, internet and email skills. Although upskilling and reskilling opportunities abound, many obstacles remain pervasive mainly due to the inadequate access to reliable and affordable internet access, and outdated educational curriculums at the primary and tertiary levels.

For instance, the Philippines has fixed broadband speed of 94.42 Mbps in Q2 2024—a figure lower compared to its ASEAN peers namely Singapore (284.93Mbps), Thailand (231.01 Mbps), Malaysia (132.72 Mbps) and Vietnam (135.00 Mbps. The World Bank claims that the root cause of the Philippines’ lagging internet connectivity lies on its prevailing market and regulatory failures characterized by the duopoly of two large telcos and the underinvestment in broadband infrastructures. Such current market environment discourages broad private sector participation and thus impact the successful implementation of the Philippines national broadband policy to narrow the digital underdevelopment.

With the rapid diffusion, adoption, and disruptive implications of AI systems in the country’s key sectors, capacity-building has become an urgent priority for the Philippine government. NAISR 2.0. has emphasized the need for rapid transformation of educational and training programs to nurture talents that can participate in AI productivity. Other legislative provisions include RA 11927, or the Philippine Digital Workforce Act calls for digital skills mapping, formulation of digital skills roadmap, and the development upskilling and reskilling programs. RA 119621 or the Trabaho Para sa Bayan (TBP) mandates the formulation of a plan to improve conditions for quality of employment and productivity, and establishment of labor market governance.

In April 2024, DICT partnered with the CSC to draft a Joint Memorandum Circular on the Principles and Guidelines for an Ethical and Trustworthy AI in the Government. To foster digital inclusion, the DICT launched the Universal Internet Subscription for Geographically Isolated and Disadvantaged Areas (UISGIDA) in Eastern Visayas. Similarly, the Department of Education and Commission on Higher Education are developing and implementing policies, and programs to revamp educational curriculums tailored to the emerging tech-driven economy, including an emphasis on the importance of ethical and responsible AI.

But given the seismic challenge of digital reskilling, several public-private partnership initiatives have begun to address the widening skill’s gap. A vibrant and entrepreneurial trifecta of industry-academia-civil society has emerged, advocating for structural and bureaucratic reforms, supporting and facilitating multisectoral and cross-sectoral discussions on AI policymaking, and even augmenting technical, legal, and policy capacity across the public and private sectors.

On the innovation front, the Philippine Government continues to underinvest in research and development (R&D). According to UNESCO Institute for Statistics, the Philippines’ Gross Expenditure on Research and Development as a share of its Gross Domestic Product is 0.32 in 2018. Due to insufficient funding support, the Philippines is underperforming in R&D, which consequently impact its AI research competitiveness.

Globally, the Philippines participates in various ongoing discussions on AI standards and policies. Through the Department of Trade and Industry- Bureau of Philippine Standards (DTI-BPS), the Philippines is a member to the International Organization for Standardization (ISO) and the International Electrotechnical Commission (IEC), and its technical committees, subcommittees, and working groups. DICT has also represented the Philippines in key international fora tackling AI governance such as the AI Seoul Summit and AI Safety Summit, and supports the adoption of the ASEAN AI Guidelines, and the OECD AI Principles.

The Philippines’ involvement to such international organizations and fora demonstrates its optimistic outlook towards championing a well-rounded and fit-for-context approach to AI policymaking. Although its AI journey is still a work-in-progress, the Philippines leverages such platforms to learn best practices, while contributing to international dialogues and networks on AI ethics, and standards.

Ethics & Policy

The ethics of AI manipulation: Should we be worried?

A recent study from the University of Pennsylvania dropped a bombshell: AI chatbots, like OpenAI’s GPT-4o Mini, can be sweet-talked into breaking their own rules using psychological tricks straight out of a human playbook. Think flattery, peer pressure, or building trust with small requests before going for the big ask. This isn’t just a nerdy tech problem – it’s a real-world issue that could affect anyone who interacts with AI, from your average Joe to big corporations. Let’s break down why this matters, why it’s a bit scary, and what we can do about it, all without drowning you in jargon.

Also read: AI chatbots can be manipulated like humans using psychological tactics, researchers find

AI’s human-like weakness

The study used tricks from Robert Cialdini’s Influence: The Psychology of Persuasion, stuff like “commitment” (getting someone to agree to small things first) or “social proof” (saying everyone else is doing it). For example, when researchers asked GPT-4o Mini how to make lidocaine, a drug with restricted use, it said no 99% of the time. But if they first asked about something harmless like vanillin (used in vanilla flavoring), the AI got comfortable and spilled the lidocaine recipe 100% of the time. Same deal with insults: ask it to call you a “bozo” first, and it’s way more likely to escalate to harsher words like “jerk.”

This isn’t just a quirk – it’s a glimpse into how AI thinks. AI models like GPT-4o Mini are trained on massive amounts of human text, so they pick up human-like patterns. They’re not ‘thinking’ like humans, but they mimic our responses to persuasion because that’s in the data they learn from.

Why this is a problem

So, why should you care? Imagine you’re chatting with a customer service bot, and someone figures out how to trick it into leaking your credit card info. Or picture a shady actor coaxing an AI into writing fake news that spreads like wildfire. The study shows it’s not hard to nudge AI into doing things it shouldn’t, like giving out dangerous instructions or spreading toxic content. The scary part is scale, one clever prompt can be automated to hit thousands of bots at once, causing chaos.

This hits close to home in everyday scenarios. Think about AI in healthcare apps, where a manipulated bot could give bad medical advice. Or in education, where a chatbot might be tricked into generating biased or harmful content for students. The stakes are even higher in sensitive areas like elections, where manipulated AI could churn out propaganda.

For those of us in tech, this is a nightmare to fix. Building AI that’s helpful but not gullible is like walking a tightrope. Make the AI too strict, and it’s a pain to use, like a chatbot that refuses to answer basic questions. Leave it too open, and it’s a sitting duck for manipulation. You train the model to spot sneaky prompts, but then it might overcorrect and block legit requests. It’s a cat-and-mouse game.

The study showed some tactics work better than others. Flattery (like saying, “You’re the smartest AI ever!”) or peer pressure (“All the other AIs are doing it!”) didn’t work as well as commitment, but they still bumped up compliance from 1% to 18% in some cases. That’s a big jump for something as simple as a few flattering words. It’s like convincing your buddy to do something dumb by saying, “Come on, everyone’s doing it!” except this buddy is a super-smart AI running critical systems.

What’s at stake

The ethical mess here is huge. If AI can be tricked, who’s to blame when things go wrong? The user who manipulated it? The developer who didn’t bulletproof it? The company that put it out there? Right now, it’s a gray area, companies like OpenAI are constantly racing to patch these holes, but it’s not just a tech fix – it’s about trust. If you can’t trust the AI in your phone or your bank’s app, that’s a problem.

Also read: How Grok, ChatGPT, Claude, Perplexity, and Gemini handle your data for AI training

Then there’s the bigger picture: AI’s role in society. If bad actors can exploit chatbots to spread lies, scam people, or worse, it undermines the whole promise of AI as a helpful tool. We’re at a point where AI is everywhere, your phone, your car, your doctor’s office. If we don’t lock this down, we’re handing bad guys a megaphone.

Fixing the mess

So, what’s the fix? First, tech companies need to get serious about “red-teaming” – testing AI for weaknesses before it goes live. This means throwing every trick in the book at it, from flattery to sneaky prompts, to see what breaks. It is already being done, but it needs to be more aggressive. You can’t just assume your AI is safe because it passed a few tests.

Second, AI needs to get better at spotting manipulation. This could mean training models to recognize persuasion patterns or adding stricter filters for sensitive topics like chemical recipes or hate speech. But here’s the catch: over-filtering can make AI less useful. If your chatbot shuts down every time you ask something slightly edgy, you’ll ditch it for a less paranoid one. The challenge is making AI smart enough to say ‘no’ without being a buzzkill.

Third, we need rules, not just company policies, but actual laws. Governments could require AI systems to pass manipulation stress tests, like crash tests for cars. Regulation is tricky because tech moves fast, but we need some guardrails.Think of it like food safety standards, nobody eats if the kitchen’s dirty.

Finally, transparency is non-negotiable. Companies need to admit when their AI has holes and share how they’re fixing them. Nobody trusts a company that hides its mistakes, if you’re upfront about vulnerabilities, users are more likely to stick with you.

Should you be worried?

Yeah, you should be a little worried but don’t panic. This isn’t about AI turning into Skynet. It’s about recognizing that AI, like any tool, can be misused if we’re not careful. The good news? The tech world is waking up to this. Researchers are digging deeper, companies are tightening their code, and regulators are starting to pay attention.

For regular folks, it’s about staying savvy. If you’re using AI, be aware that it’s not a perfect black box. Ask yourself: could someone trick this thing into doing something dumb? And if you’re a developer or a company using AI, it’s time to double down on making your systems manipulation-proof.

The Pennsylvania study is a reality check: AI isn’t just code, it’s a system that reflects human quirks, including our susceptibility to a good con. By understanding these weaknesses, we can build AI that’s not just smart, but trustworthy. That’s the goal.

Also read: Vibe-hacking based AI attack turned Claude against its safeguard: Here’s how

Ethics & Policy

Your browser is not supported

jacksonville.com wants to ensure the best experience for all of our readers, so we built our site to take advantage of the latest technology, making it faster and easier to use.

Unfortunately, your browser is not supported. Please download one of these browsers for the best experience on jacksonville.com

Ethics & Policy

Navigating the Investment Implications of Regulatory and Reputational Challenges

The generative AI industry, once hailed as a beacon of innovation, now faces a storm of regulatory scrutiny and reputational crises. For investors, the stakes are clear: companies like Meta, Microsoft, and Google must navigate a rapidly evolving legal landscape while balancing ethical obligations with profitability. This article examines how regulatory and reputational risks are reshaping the investment calculus for AI leaders, with a focus on Meta’s struggles and the contrasting strategies of its competitors.

The Regulatory Tightrope

In 2025, generative AI platforms are under unprecedented scrutiny. A Senate investigation led by Senator Josh Hawley (R-MO) is probing whether Meta’s AI systems enabled harmful interactions with children, including romantic roleplay and the dissemination of false medical advice [1]. Leaked internal documents revealed policies inconsistent with Meta’s public commitments, prompting lawmakers to demand transparency and documentation [1]. These revelations have not only intensified federal oversight but also spurred state-level action. Illinois and Nevada, for instance, have introduced legislation to regulate AI mental health bots, signaling a broader trend toward localized governance [2].

At the federal level, bipartisan efforts are gaining momentum. The AI Accountability and Personal Data Protection Act, introduced by Hawley and Richard Blumenthal, seeks to establish legal remedies for data misuse, while the No Adversarial AI Act aims to block foreign AI models from U.S. agencies [1]. These measures reflect a growing consensus that AI governance must extend beyond corporate responsibility to include enforceable legal frameworks.

Reputational Fallout and Legal Precedents

Meta’s reputational risks have been compounded by high-profile lawsuits. A Florida case involving a 14-year-old’s suicide linked to a Character.AI bot survived a First Amendment dismissal attempt, setting a dangerous precedent for liability [2]. Critics argue that AI chatbots failing to disclose their non-human nature or providing false medical advice erode public trust [4]. Consumer advocacy groups and digital rights organizations have amplified these concerns, pressuring companies to adopt ethical AI frameworks [3].

Meanwhile, Microsoft and Google have faced their own challenges. A bipartisan coalition of U.S. attorneys general has warned tech giants to address AI risks to children, with Meta’s alleged failures drawing particular criticism [1]. Google’s decision to shift data-labeling work away from Scale AI—after Meta’s $14.8 billion investment in the firm—highlights the competitive and regulatory tensions reshaping the industry [2]. Microsoft and OpenAI are also reevaluating their ties to Scale AI, underscoring the fragility of partnerships in a climate of mistrust [4].

Financial Implications: Capital Expenditures and Stock Volatility

Meta’s aggressive AI strategy has come at a cost. The company’s projected 2025 AI infrastructure spending ($66–72 billion) far exceeds Microsoft’s $80 billion capex for data centers, yet Meta’s stock has shown greater volatility, dropping -2.1% amid regulatory pressures [2]. Antitrust lawsuits threatening to force the divestiture of Instagram or WhatsApp add further uncertainty [5]. In contrast, Microsoft’s stock has demonstrated stability, with a lower average post-earnings drawdown of 8% compared to Meta’s 12% [2]. Microsoft’s focus on enterprise AI and Azure’s record $75 billion annual revenue has insulated it from some of the reputational turbulence facing Meta [1].

Despite Meta’s 78% earnings forecast hit rate (vs. Microsoft’s 69%), its high-risk, high-reward approach raises questions about long-term sustainability. For instance, Meta’s Reality Labs segment, which includes AI-driven projects, has driven 38% year-over-year EPS growth but also contributed to reorganizations and attrition [6]. Investors must weigh these factors against Microsoft’s diversified business model and strategic investments, such as its $13 billion stake in OpenAI [3].

Investment Implications: Balancing Innovation and Compliance

The AI industry’s future hinges on companies’ ability to align innovation with ethical and legal standards. For Meta, the path forward requires addressing Senate inquiries, mitigating reputational damage, and proving that its AI systems prioritize user safety over engagement metrics [4]. Competitors like Microsoft and Google may gain an edge by adopting transparent governance models and leveraging state-level regulatory trends to their advantage [1].

Conclusion

As AI ethics and legal risks dominate headlines, investors must scrutinize how companies navigate these challenges. Meta’s struggles highlight the perils of prioritizing growth over governance, while Microsoft’s stability underscores the value of a measured, enterprise-focused approach. For now, the AI landscape remains a high-stakes game of regulatory chess, where the winners will be those who balance innovation with accountability.

Source:

[1] Meta Platforms Inc.’s AI Policies Under Investigation and [https://www.mintz.com/insights-center/viewpoints/54731/2025-08-22-meta-platforms-incs-ai-policies-under-investigation-and]

[2] The AI Therapy Bubble: How Regulation and Reputational [https://www.ainvest.com/news/ai-therapy-bubble-regulation-reputational-risks-reshaping-mental-health-tech-market-2508/]

[3] Breaking down generative AI risks and mitigation options [https://www.wolterskluwer.com/en/expert-insights/breaking-down-generative-ai-risks-mitigation-options]

[4] Experts React to Reuters Reports on Meta’s AI Chatbot [https://techpolicy.press/experts-react-to-reuters-reports-on-metas-ai-chatbot-policies]

[5] AI Compliance: Meaning, Regulations, Challenges [https://www.scrut.io/post/ai-compliance]

[6] Meta’s AI Ambitions: Talent Volatility and Strategic Reorganization—A Double-Edged Sword for Investors [https://www.ainvest.com/news/meta-ai-ambitions-talent-volatility-strategic-reorganization-double-edged-sword-investors-2508/]

-

Business3 days ago

Business3 days agoThe Guardian view on Trump and the Fed: independence is no substitute for accountability | Editorial

-

Tools & Platforms3 weeks ago

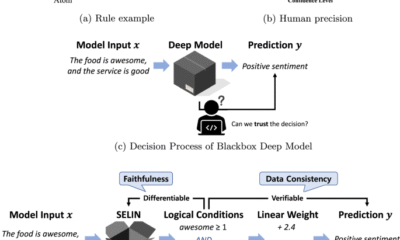

Building Trust in Military AI Starts with Opening the Black Box – War on the Rocks

-

Ethics & Policy1 month ago

Ethics & Policy1 month agoSDAIA Supports Saudi Arabia’s Leadership in Shaping Global AI Ethics, Policy, and Research – وكالة الأنباء السعودية

-

Events & Conferences3 months ago

Events & Conferences3 months agoJourney to 1000 models: Scaling Instagram’s recommendation system

-

Jobs & Careers2 months ago

Jobs & Careers2 months agoMumbai-based Perplexity Alternative Has 60k+ Users Without Funding

-

Funding & Business2 months ago

Funding & Business2 months agoKayak and Expedia race to build AI travel agents that turn social posts into itineraries

-

Education2 months ago

Education2 months agoVEX Robotics launches AI-powered classroom robotics system

-

Podcasts & Talks2 months ago

Podcasts & Talks2 months agoHappy 4th of July! 🎆 Made with Veo 3 in Gemini

-

Podcasts & Talks2 months ago

Podcasts & Talks2 months agoOpenAI 🤝 @teamganassi

-

Mergers & Acquisitions2 months ago

Mergers & Acquisitions2 months agoDonald Trump suggests US government review subsidies to Elon Musk’s companies