A new research framework helps AI agents explore three-dimensional spaces they can’t directly detect. Called MindJourney, the approach addresses a key limitation in vision-language models (VLMs), which give AI agents their ability to interpret and describe visual scenes.

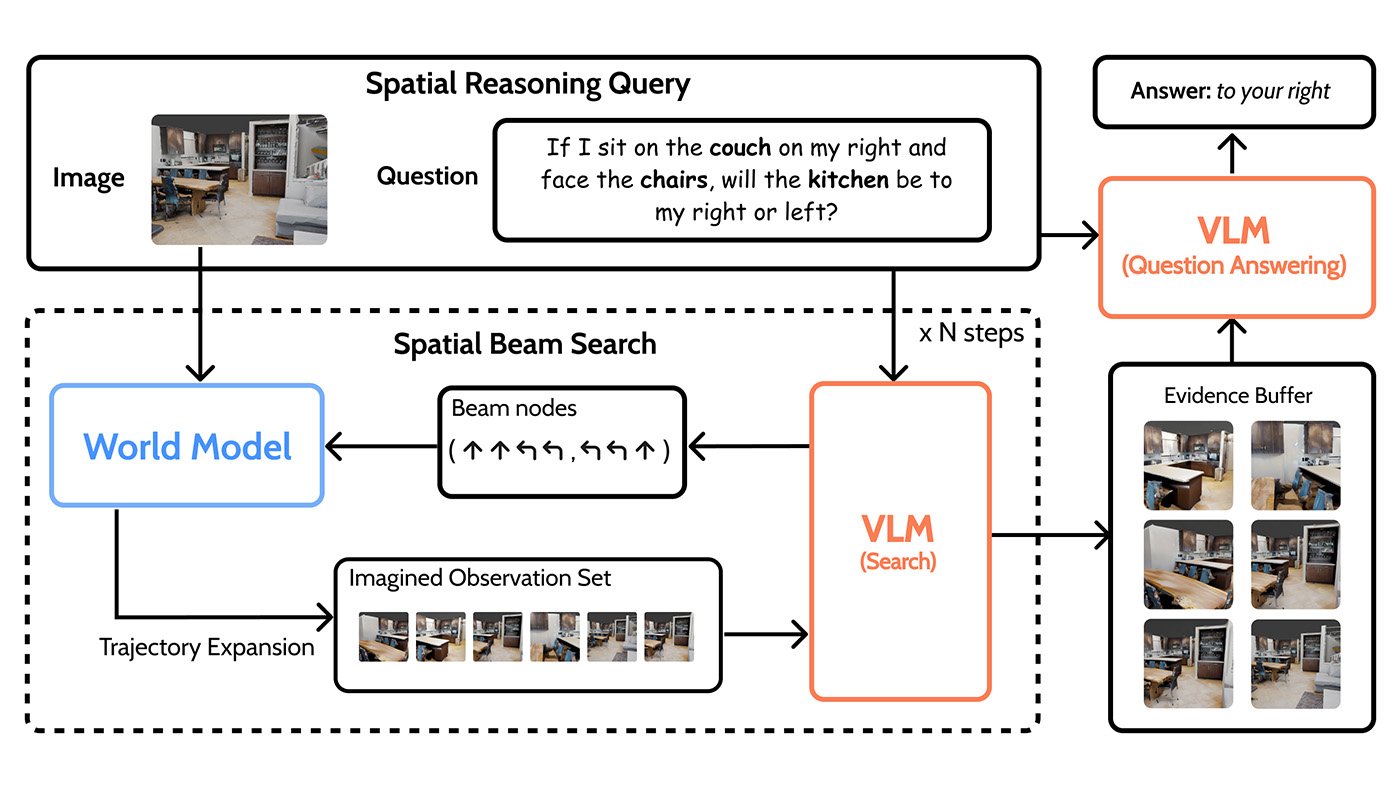

While VLMs are strong at identifying objects in static images, they struggle to interpret the interactive 3D world behind 2D images. This gap shows up in spatial questions like “If I sit on the couch that is on my right and face the chairs, will the kitchen be to my right or left?”—tasks that require an agent to interpret its position and movement through space.

People overcome this challenge by mentally exploring a space, imagining moving through it and combining those mental snapshots to work out where objects are. MindJourney applies the same process to AI agents, letting them roam a virtual space before answering spatial questions.

How MindJourney navigates 3D space

To perform this type of spatial navigation, MindJourney uses a world model, in this case a video generation system trained on a large collection of videos captured from a single moving viewpoint, showing actions such as going forward and turning left or right, much like a 3D cinematographer. From this, it learns to predict how a new scene would appear from different perspectives.

At inference time, the model can generate photo-realistic images of a scene based on possible movements from the agent’s current position. It generates multiple possible views of a scene while the VLM acts as a filter, selecting the constructed perspectives that are most likely to answer the user’s question.

The selected views are kept and expanded in the next iteration, while less promising paths are discarded. This process, shown in Figure 1, avoids the need to generate and evaluate thousands of possible movement sequences by focusing only on the most informative perspectives.

To make its search through a simulated space both effective and efficient, MindJourney uses a spatial beam search—an algorithm that prioritizes the most promising paths. It works within a fixed number of steps, each representing a movement. By balancing breadth with depth, spatial beam search enables MindJourney to gather strong supporting evidence. This process is illustrated in Figure 2.
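The search described above can be illustrated with a minimal sketch. This is not MindJourney's implementation. The names `spatial_beam_search`, `imagine`, `score`, `ACTIONS`, `beam_width`, and `max_steps` are assumptions made for illustration, and the toy functions at the bottom stand in for the video world model and the VLM. In the toy setup, a "view" is just a heading in degrees, and the score rewards headings close to a target direction.

```python
from dataclasses import dataclass
from typing import Any, Callable, List, Tuple

# Hypothetical action set; the article mentions going forward and turning left or right.
ACTIONS = ("forward", "turn_left", "turn_right")


@dataclass(frozen=True)
class Path:
    actions: Tuple[str, ...]  # movement sequence from the starting viewpoint
    score: float              # how helpful the resulting view looks for the question


def spatial_beam_search(
    imagine: Callable[[Tuple[str, ...]], Any],  # stand-in for the world model
    score: Callable[[Any], float],              # stand-in for the VLM filter
    beam_width: int = 2,
    max_steps: int = 3,
) -> List[Path]:
    """Return every kept path (the gathered evidence), best first."""
    beam = [Path((), score(imagine(())))]  # start from the original view
    kept = list(beam)
    for _ in range(max_steps):  # fixed number of steps, one movement each
        candidates = []
        for path in beam:
            for action in ACTIONS:
                actions = path.actions + (action,)
                candidates.append(Path(actions, score(imagine(actions))))
        # Keep only the most promising paths; the rest are discarded.
        candidates.sort(key=lambda p: p.score, reverse=True)
        beam = candidates[:beam_width]
        kept.extend(beam)
    return sorted(kept, key=lambda p: p.score, reverse=True)


# Toy stand-ins (assumptions): each turn rotates the heading by 30 degrees.
def toy_imagine(actions: Tuple[str, ...]) -> int:
    heading = 0
    for action in actions:
        if action == "turn_left":
            heading += 30
        elif action == "turn_right":
            heading -= 30
    return heading % 360


def toy_score(heading: int, target: int = 90) -> int:
    # Negative angular distance to the target heading; 0 is the best score.
    return -abs((heading - target + 180) % 360 - 180)


if __name__ == "__main__":
    for path in spatial_beam_search(toy_imagine, toy_score)[:3]:
        print(path.actions, path.score)
```

With three actions, exhaustive search over `max_steps` movements would evaluate `3 ** max_steps` final sequences, while this sketch scores at most `3 * beam_width` candidates per step. That is the trade-off between breadth and depth that the paragraph above describes.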

By iterating through simulation, evaluation, and integration, MindJourney can reason about spatial relationships far beyond what any single 2D image can convey, all without the need for additional training. On the Spatial Aptitude Training (SAT) benchmark, it improved the accuracy of VLMs by 8% over their baseline performance.

Building smarter agents

MindJourney showed strong performance on multiple 3D spatial-reasoning benchmarks, and even advanced VLMs improved when paired with its imagination loop. This suggests that the spatial patterns that world models learn from raw images, combined with the symbolic capabilities of VLMs, create a more complete spatial capability for agents. Together, they enable agents to infer what lies beyond the visible frame and interpret the physical world more accurately.

It also demonstrates that pretrained VLMs and trainable world models can work together in 3D without retraining either one—pointing toward general-purpose agents capable of interpreting and acting in real-world environments. This opens the way to possible applications in autonomous robotics, smart home technologies, and accessibility tools for people with visual impairments.

By converting systems that simply describe static images into active agents that continually evaluate where to look next, MindJourney connects computer vision with planning. Because exploration occurs entirely within the model’s latent space—its internal representation of the scene—robots would be able to test multiple viewpoints before determining their next move, potentially reducing wear, energy use, and collision risk.

Looking ahead, we plan to extend the framework to use world models that not only predict new viewpoints but also forecast how the scene might change over time. We envision MindJourney working alongside VLMs that interpret those predictions and use them to plan what to do next. This enhancement could enable agents to interpret spatial relationships and physical dynamics more accurately, helping them operate effectively in changing environments.