AI Insights

Startup’s Technique Brings Mathematical Precision to Gen AI

Generative artificial intelligence (AI) can astound with human-like conversation and research superpowers, but it can also be disastrously wrong. That unreliability is a major barrier to adoption as AI begins to control physical machines, payments and other high-stakes domains.


AI creates fear, intrigue for Bay Area small businesses – NBC Bay Area

Companies in the Bay Area are both embracing and increasingly fearful of artificial intelligence.

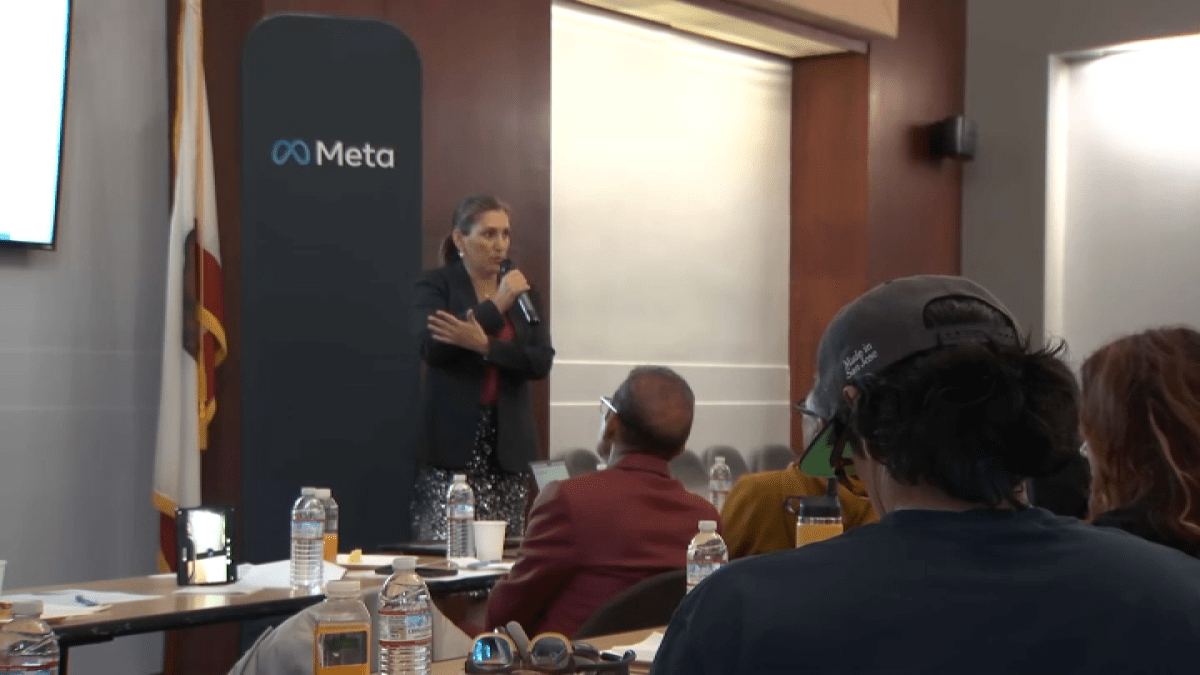

On Wednesday, Meta, one of the world’s biggest tech companies, met with small businesses at the San Jose Chamber of Commerce to talk about how AI can help them.

For many small businesses, AI has been something they just don’t yet have time for, but they say they’re curious.

“I think small businesses start with confusion,” San Jose Chamber of Commerce CEO Leah Toeniskoetter said. “What is it? It means so many things. It’s too big of a word. It’s like the web or like the internet. So it starts with let’s offer a course like this, an opportunity like this, to share what AI is in relation to your business.”

Meta said discussions like Wednesday’s, which brought in about 30 owners, help bridge the gap between big tech and small business.

“So getting them to adopt and use AI, even in small ways right now, is a great step forward to keep them engaged as AI is really transforming our economy,” said Jim Cullinan with Meta.


America’s 2025 AI Action Plan: Deregulation and Global Leadership

In July 2025, the White House released America’s AI Action Plan, a sweeping policy framework asserting that “the United States is in a race to achieve global dominance in artificial intelligence,” and that whoever controls the largest AI hub “will set global AI standards and reap broad economic and military benefits” (see Introduction). The Plan, following a January 2025 executive order, underscores the Trump administration’s vision of a deregulated, innovation-driven AI ecosystem designed and optimized to accelerate technological progress, expand workforce opportunities, and assert U.S. leadership internationally.

“America is the country that started the AI race. And as President of the United States, I’m here today to declare that America is going to win it.” –President Donald J. Trump 🇺🇸🦅

— The White House (@WhiteHouse) July 24, 2025

This article outlines the Plan’s development, key pillars, associated executive orders, and the legislative and regulatory context that frames its implementation. It also situates the Plan within ongoing legal debates about state versus federal authority in regulating AI, workforce adaptation, AI literacy, and cybersecurity.

Laying the Groundwork for AI Dominance

January 2025: Executive Order Calling for Deregulation

The first major AI-focused executive action of Trump’s second term was the January 23, 2025, order titled “Removing Barriers to American Leadership in Artificial Intelligence.” This Executive Order (EO) formally rescinded policies the prior administration had adopted that were deemed obstacles to AI innovation, particularly those regulating AI. Its stated purpose was to consolidate U.S. leadership by ensuring that AI systems are “free from ideological bias or engineered social agendas,” and that federal policies actively foster innovation.

The EO emphasized three broad goals:

- Promoting human flourishing and economic competitiveness: AI development was framed as central to national prosperity, with the federal government creating conditions for private-sector-led growth.

- National security: Leadership in AI was explicitly tied to the United States’ global strategic position.

- Deregulation: Existing federal regulations, guidance, and directives perceived as constraining AI innovation were revoked, streamlining federal involvement and eliminating bureaucratic barriers.

The January order set the stage for the July 2025 Action Plan, signaling a decisive break from the prior administration’s cautious, regulatory stance.


April 2025: Office of Management and Budget Memoranda

Prior to the release of America’s AI Action Plan, the Trump administration issued key guidance to facilitate federal adoption and procurement of AI technologies. This guidance focused on streamlining agency operations, promoting responsible innovation, and ensuring that federal AI use aligns with broader strategic objectives.

Two memoranda issued by the Office of Management and Budget (OMB) on April 3, 2025, provided a framework for this shift:

- “Accelerating Federal Use of AI through Innovation, Governance, and Public Trust” (M-25-21): Empowers Chief AI Officers to serve as change agents who promote agency-wide AI adoption and remove barriers to AI innovation. The memorandum also requires federal agencies to track AI adoption through maturity assessments and to identify high-impact use cases that necessitate heightened oversight, balancing the rapid deployment of AI with privacy, civil rights, and civil liberties protections.

- “Driving Efficient Acquisition of Artificial Intelligence in Government” (M-25-22): Provides agencies with tools and concise, effective guidance on acquiring “best-in-class” AI systems quickly and responsibly while promoting innovation across the federal government. It streamlines procurement, emphasizing competitive acquisition and the prioritization of American AI technologies, and reduces reporting burdens while maintaining accountability for lawful and responsible AI use.

These April memoranda laid the procedural foundation for federal AI adoption, ensuring agencies could implement emerging AI technologies responsibly while aligning with strategic U.S. objectives.

July 2025: America’s AI Action Plan

Released on July 23, 2025, the AI Action Plan builds on the April memoranda by articulating clear principles for government procurement of AI systems, particularly Large Language Models (LLMs), to ensure federal adoption aligns with American values:

- Truth-seeking: LLMs must respond accurately to factual inquiries, prioritize historical accuracy and scientific inquiry, and acknowledge uncertainty.

- Ideological neutrality: LLMs should remain neutral and nonpartisan, avoiding the encoding of ideological agendas such as DEI unless explicitly prompted by users.

The Plan emphasizes that these principles are central to federal adoption, establishing expectations that agencies procure AI systems responsibly and in accordance with national priorities. OMB guidance, to be issued by November 20, 2025, will operationalize these principles by requiring federal contracts to include compliance terms and decommissioning costs for noncompliant vendors. Unlike the April memoranda, which focused narrowly on agency adoption and contracting, the July Plan sets broad national objectives designed to accelerate U.S. leadership in artificial intelligence across sectors. These foundational principles inform the broader strategic vision outlined in the Plan, which is organized into three primary pillars:

- Accelerating AI Innovation

- Building American AI Infrastructure

- Leading in International AI Diplomacy and Security

📃The White House’s AI Action Plan sets a bold vision for innovation, infrastructure & global AI leadership. 🇺🇸🤖

In our episode [linked below], we unpack its 3 pillars, the mixed reactions around it, and what it means for practitioners. #AI #AIActionPlan #PracticalAI

— Practical AI 🤖 (@PracticalAIFM) August 26, 2025

Across its three pillars, the Plan identifies more than 90 federal policy actions. It highlights the Trump administration’s objective of achieving “unquestioned and unchallenged global technological dominance,” positioning AI as a driver of economic growth, job creation, and scientific advancement.

Pillar 1: Accelerating AI Innovation

The Plan emphasizes that the United States must have the “most powerful AI systems in the world” while ensuring these technologies create broad economic and scientific benefits. The U.S. should lead not only in the most powerful systems but also in the most transformative applications.

The pillar covers topics in AI adoption, regulation, and federal investment.

- Removing bureaucratic “red tape and onerous regulation”: The administration argued that AI innovation should not be slowed by regulation, whether federal rules or, particularly, state-level rules considered “burdensome.” Funding for AI projects is directed toward states with favorable regulatory climates, potentially pressuring states to align with federal deregulatory priorities.

- Encouraging open-source and open-weight AI: Expanding access to AI systems for researchers and startups is intended to catalyze rapid innovation. In particular, the administration is looking to invest in breakthroughs in AI interpretability, control, and robustness and to create an “AI evaluations ecosystem.”

- Federal adoption: Federal agencies are instructed to accelerate AI adoption, particularly in defense and national security applications.

- Workforce development: AI should ultimately drive economic growth, new jobs, and scientific advancement. Policies also support workforce retraining to ensure that American workers thrive in an AI-driven economy, including pre-apprenticeship programs and high-demand occupation initiatives.

- Advancing protections: Frontier AI should protect free speech and American values. Notably, the pillar includes measures to “combat synthetic media in the legal system,” including deepfakes and fake AI-generated evidence.

Within the innovation pillar, the Plan also emphasizes AI literacy, recognizing that training and oversight are essential to AI accountability. This parallels the EU AI Act, which requires providers and deployers of AI systems to ensure a sufficient level of AI literacy among the staff who operate and use those systems. The administration proposes tax-free reimbursement for private-sector AI training and skills development programs to incentivize adoption and upskilling.

Pillar 2: Building American AI Infrastructure

AI’s computational demands require unprecedented energy and infrastructure. The Plan identifies infrastructure development as critical to sustaining global leadership, demonstrating the Administration’s pursuit of large-scale industrial plans. It contains provisions for the following:

- Data center expansion: Federal agencies are directed to expedite permitting for large-scale data centers, which a July 23, 2025 EO titled “Accelerating Federal Permitting of Data Center Infrastructure” defines as facilities “requiring 100 megawatts (MW) of new load dedicated to AI inference, training, simulation, or synthetic data generation.” These policies ease federal regulatory burdens to facilitate the rapid and efficient buildout of infrastructure. The EO revokes the Biden Administration’s January 2025 Executive Order on “Advancing United States Leadership in Artificial Intelligence Infrastructure,” but maintains an emphasis on expediting permits and leasing federal lands for AI infrastructure development.

- Energy and workforce development: To meet AI power requirements, the Plan calls for streamlined permitting for semiconductor manufacturing facilities and energy infrastructure, for example, strengthening and growing the electric grid. The Plan also calls for the development of covered components, defined by the July 23, 2025 EO as “materials, products, and infrastructure that are required to build Data Center Projects or otherwise upon which Data Center Projects depend.” Additionally, investments will be made in training workers to operate these high-demand systems, in parallel with a new national initiative to expand the workforce in high-demand occupations such as electricians and HVAC technicians.

- Cybersecurity and secure-by-design AI: Recognizing AI systems as both defensive tools and potential security risks, the Administration directs the public and private sectors to share information on AI threats and calls for updating incident response plans to account for AI-specific threats.

Pillar 3: Leading in International AI Diplomacy and Security

The Plan extends beyond domestic priorities to assert U.S. leadership globally. The following measures illustrate a dual focus of fostering innovation while strategically leveraging American technological dominance:

- Exporting American AI: The Plan reflects efforts to drive the adoption of American AI systems, computer hardware, and standards. The Departments of Commerce and State are tasked with partnering with industry to deliver “secure full-stack AI export packages… to America’s friends and allies,” including hardware, software, and applications (see “White House Unveils America’s AI Action Plan”).

- Countering foreign influence: The Plan explicitly seeks to restrict access to advanced AI technologies by adversaries, including China, while promoting the adoption of American standards abroad.

- Global coordination: Strategic initiatives are proposed to align protection measures internationally and ensure the U.S. leads in evaluating national security risks associated with frontier AI models.

[Learn more about the pillars at ai.gov]

California’s Reception and Industry Response

The Plan addresses the interplay between federal and state authority, emphasizing that states may legislate AI provided their regulations are not “unduly restrictive to innovation.” Federal funding is explicitly conditioned on state regulatory climates, incentivizing alignment with the Plan’s deregulatory priorities. For California, this creates a favorable environment for the state’s robust tech sector, encouraging continued innovation while aligning with federal objectives. Simultaneously, the Federal Trade Commission (FTC) is directed to review its AI investigations to avoid burdening innovation, a policy reflected in the removal of prior AI guidance from the FTC website in March 2025, further supporting California’s leading role in AI development.

.@POTUS launched America’s AI Action Plan to lead in AI diplomacy and cement U.S. dominance in artificial intelligence.

AI is here now, and the USA will lead a new spirit of innovation. More on America’s action plan for AI: https://t.co/5lY6ktLDri

— Department of State (@StateDept) August 27, 2025

The White House released an article showcasing acclaim for the Plan. Among the supporters are the AI Innovation Association, Center for Data Innovation, Consumer Technology Association, and the US Chamber of Commerce. Leading tech companies—including California-based companies Meta, Anthropic, xAI, and Zoom—praised the Plan’s focus on federal adoption, infrastructure buildout, and innovation acceleration.

In a published reflection on the Plan, California-based Anthropic highlighted its alignment with the company’s own policy priorities, including safety testing, AI interpretability, and secure deployment. The reflection discusses how to accelerate AI infrastructure and adoption, promote secure AI development, democratize AI’s benefits, and establish a national standard by proposing a framework for frontier model transparency. The Plan’s recommendations to increase federal adoption of AI include proposals aligned with policy priorities and recommendations Anthropic submitted to the White House in response to the Office of Science and Technology Policy’s “Request for Information on the Development of an AI Action Plan.” Anthropic also released a “Build AI in America” report detailing steps the Administration can take to accelerate the buildout of the nation’s AI infrastructure, and the company is looking to work with the administration on measures to expand domestic energy capacity.

California’s tech industry has not only embraced the Action Plan but positioned itself as a key partner in shaping its implementation. With companies like Anthropic, Meta, and xAI already aligning their priorities to federal policy, California has an opportunity to set a national precedent for constructive collaboration between industry and government. By fostering accountability principles grounded in truth-seeking and ideological neutrality, and by maintaining a regulatory climate favorable to innovation, the state can both strengthen its relationship with Washington and serve as a model for other states seeking to balance growth, safety, and public trust in the AI era.

As America’s AI Action Plan moves from policy articulation to implementation, coordination between federal guidance and state-level innovation will be critical. California’s tech industry is already demonstrating how strategic alignment with national priorities can accelerate adoption, build infrastructure, and set standards for responsible AI development. The Plan offers an opportunity for states to serve as models of effective governance, showing how deregulation, accountability principles, and public-private collaboration can advance technological leadership while safeguarding public trust. By continuing to harmonize innovation with ethical oversight, the United States can solidify its position as the global leader in artificial intelligence.


Job seekers, HR professionals grapple with use of artificial intelligence

RALEIGH, N.C. (WTVD) — The conversation surrounding the use of generative artificial intelligence, such as OpenAI’s ChatGPT, Microsoft Copilot, Google Gemini, and others, is rapidly evolving and continues to raise questions.

The debate comes as North Carolina Governor Josh Stein signed an executive order focused on artificial intelligence.

It’s a space that is transforming at a pace much quicker than many people can adapt to, and is finding its way more and more into everyday use.

One of those spaces is the job market.

“I’ll even share with my experience yesterday. So I had gotten a completely generative AI-written resume, and my first reaction was, ‘Oh, I don’t love this.’ And then my second reaction was, ‘But why?’ I’m going to want them doing this at work. So why wouldn’t I want them doing it in the application process?” said human resources executive Steve O’Brien.

O’Brien’s comments caught the attention of colleagues internally and externally.

“I think what we need to do is ask ourselves, how do we interview in a world where generative AI is involved. Not how do we exclude generative AI from the interview process,” added O’Brien.

According to the 2025 Job Seeker Nation Report by Employ, 69% of applicants say they use artificial intelligence to find job listings or match their work history to relevant ones, up one percentage point from 2024. By contrast, Employ found that 52% of applicants used artificial intelligence to write or review resumes in 2025, down from 58% in 2024.

“I think recruiters are getting very good at spotting this AI-generated content. Every resume sounds the same, every line sounds the same, and the resume is missing the stories that. I mean, humans love stories,” said resume and career coaching expert Mir Garvy.


Meanwhile, career website Zety found that 58% of HR managers believe it’s ethical for candidates to use AI during their job search.

“Now those applicant tracking systems are AI-informed. But when all of us have access to tools like ChatGPT, in a sense, we have now a more level playing field,” Garvy said.

“If you had asked me six months ago, I’d have said that I was disappointed that generative AI had made the resume. But I don’t think that I have that opinion anymore,” said O’Brien. “So I don’t fault the candidates who are being asked to write 75 resumes and reply to 100 jobs before they get an interview for trying to figure out an efficient way to engage in that marketplace.”

The pair, along with job seekers, agree that AI is a tool that is best used to aid and assist, but not replace.

“(Artificial intelligence) should tell your story. It should highlight the things that are most important and downplay or eliminate the things that aren’t,” said Garvy.

O’Brien added, “If you completely outsource the creative process to ChatGPT, that’s probably not great, right? You are sort of erasing yourself from the equation. But if there’s something in there that you need help articulating, you need a different perspective on how to visualize, I have found it to be an extraordinary partner.”

Copyright © 2025 WTVD-TV. All Rights Reserved.
