Ethics & Policy

We Already Have an Ethics Framework for AI (opinion)

For the third time in my career as an academic librarian, we are facing a digital revolution that is radically and rapidly transforming our information ecosystem. The first was when the internet became broadly available by virtue of browsers. The second was the emergence of Web 2.0 with mobile and social media. The third—and current—results from the increasing ubiquity of AI, especially generative AI.

Once again, I am hearing fear-based thinking alongside a rhetoric of inevitability, with AI proponents scolding critics they portray as “resistant to change.” I wish I were hearing more voices advocating for the benefits of specific uses of AI alongside clearheaded acknowledgment of the risks of AI in specific circumstances and an emphasis on risk mitigation. Academics should approach AI as a tool for specific interventions and then assess the ethics of those interventions.

Caution is warranted. The burden of building trust should be on the AI developers and corporations. While Web 2.0 delivered on its promise of a more interactive, collaborative experience on the web that centered user-generated content, the fulfillment of that promise was not without societal costs.

In retrospect, Web 2.0 arguably fails to meet the basic standard of beneficence. It is implicated in the global rise of authoritarianism, in the undermining of truth as a value, in promoting both polarization and extremism, in degrading the quality of our attention and thinking, in a growing and serious mental health crisis, and in the spread of an epidemic of loneliness. The information technology sector has earned our deep skepticism. We should do everything in our power to learn from the mistakes of our past and do what we can to prevent similar outcomes in the future.

We need to develop an ethical framework for assessing uses of new information technology, and specifically AI, that can guide individuals and institutions as they consider employing, promoting and licensing these tools for various functions. Two main factors complicate ethical analysis of AI. The first is that an interaction with AI frequently continues past the initial user-AI transaction; information from that transaction can become part of the system’s training set. The second is that there is often a significant lack of transparency about what the AI model is doing under the surface, making it difficult to assess. We should demand as much transparency as possible from tool providers.

Academia already has an agreed-upon set of ethical principles and processes for assessing potential interventions. The principles in “The Belmont Report: Ethical Principles and Guidelines for the Protection of Human Subjects of Research” govern our approach to research with humans and can fruitfully be applied if we think of potential uses of AI as interventions. These principles not only benefit academia in making assessments about using AI but also provide a framework for technology developers thinking through their design requirements.

The Belmont Report articulates three primary ethical principles:

- Respect for persons

- Beneficence

- Justice

“Respect for persons,” as it’s been translated into U.S. code and practiced by IRBs, has several facets, including autonomy, informed consent and privacy. Autonomy means that individuals should have the power to control their engagement and should not be coerced to engage. Informed consent requires that people should have clear information so that they understand what they are consenting to. Privacy means a person should have control and choice about how their personal information is collected, stored, used and shared.

Following are some questions we might ask to assess whether a particular AI intervention honors autonomy.

- Is it obvious to users that they are interacting with AI? This becomes increasingly important as AI is integrated into other tools.

- Is it obvious when something was generated by AI?

- Can users control how their information is harvested by AI, or is the only option to not use the tool?

- Can users access essential services without engaging with AI? If not, that may be coercive.

- Can users control how information they produce is used by AI? This includes whether their content is used to train AI models.

- Is there a risk of overreliance, especially if there are design elements that encourage psychological dependency? From an educational perspective, is using an AI tool for a particular purpose likely to prevent users from learning foundational skills so that they become dependent on the model?

In relation to informed consent, is the information provided about what the model is doing both sufficient and in a form that a person who is neither a lawyer nor a technology developer can understand? It is imperative that users be given information about what data is going to be collected from which sources and what will happen to that data.

Privacy infringement happens either when someone’s personal data is revealed or used in an unintended way or when information thought private is correctly inferred. When there is sufficient data and computing power, re-identification of research subjects is a danger. Given that “de-identification of data” is one of the most common strategies for risk mitigation in human subjects research, and there is an increasing emphasis on publishing data sets for the purposes of research reproducibility, this is an area of ethical concern that demands attention. Privacy emphasizes that individuals should have control over their private information, but how that private information is used should also be assessed in relation to the second major principle: beneficence.

Beneficence is the general principle that says that the benefits should outweigh the risks of harm and that risks should be mitigated as much as possible. Beneficence should be assessed on multiple levels—both the individual and the systemic. The principle of beneficence demands that we pay particularly careful attention to those who are vulnerable because they lack full autonomy, such as minors.

Even when making personal decisions, we need to think about potential systemic harms. For example, some vendors offer tools that allow researchers to share their personal information in order to generate highly personalized search results—increasing research efficiency. As the tool builds a picture of the researcher, it will presumably continue to refine results with the goal of not showing things that it does not believe are useful to the researcher. This may benefit the individual researcher. However, on a systemic level, if such practices become ubiquitous, will the boundaries between various discourses harden? Will researchers doing similar scholarship get shown an increasingly narrow view of the world, focused on research and outlooks that are similar to each other, while researchers in a different discourse are shown a separate view of the world? If so, would this disempower interdisciplinary or radically novel research or exacerbate disciplinary confirmation bias? Can such risks be mitigated? We need to develop a habit of thinking about potential impacts beyond the individual in order to create mitigations.

There are many potential benefits to certain uses of AI. There are real possibilities it can rapidly advance medicine and science; see, for example, the stunning successes of the protein structure prediction system AlphaFold. There are corresponding potentialities for swift advances in technology that can serve the common good, including in our fight against the climate crisis. The potential benefits are transformative, and a good ethical framework should encourage them. The principle of beneficence does not demand that there be no risks, but that we identify uses where the benefits are significant and that we mitigate the risks, both individual and systemic. Risks can be minimized by improving the tools, such as through work to prevent them from hallucinating, propagating toxic or misleading content, or delivering inappropriate advice.

Questions of beneficence also require attention to environmental impacts of generative AI models. Because the models require vast amounts of computing power and, therefore, electricity, using them taxes our collective infrastructure and contributes to pollution. When analyzing a particular use through the ethical lens of beneficence, we should ask whether the proposed use provides enough likely benefit to justify the environmental harm. Use of AI for trivial purposes arguably fails the test for beneficence.

The principle of justice demands that the people and populations who bear the risks should also receive the benefits. With AI, there are significant equity concerns. For example, generative AI may be trained on data that includes our biases, both current and historic. Models must be rigorously tested to see if they create prejudicial or misleading content. Similarly, AI tools should be closely interrogated to ensure that they do not work better for some groups than for others. Inequities impact the calculations of beneficence and, depending on the stakes of the use case, could make the use unethical.

Another consideration in relation to the principle of justice and AI is the issue of fair compensation and attribution. It is important that AI does not undermine creative economies. Additionally, scholars are important content producers, and the academic coin of the realm is citations. Content creators have a right to expect that their work will be used with integrity, will be cited and that they will be remunerated appropriately. As part of autonomy, content creators should also be able to control whether their material is used in a training set, and this should, at least going forward, be part of author negotiations. Similarly, the use of AI tools in research should be cited in the scholarly product; we need to develop standards about what is appropriate to include in methodology sections and citations, and possibly when an AI model should be granted co-authorial status.

The principles outlined above from the Belmont Report are, I believe, sufficiently flexible to allow for further and rapid developments in the field. Academia has a long history of using them as guidance to make ethical assessments. They give us a shared foundation from which we can ethically promote the use of AI to be of benefit to the world while simultaneously avoiding the types of harms that can poison the promise.


Tech leaders in financial services say responsible AI is necessary to unlock GenAI value

Good morning. CFOs are increasingly responsible for aligning AI investments with business goals, measuring ROI, and ensuring ethical adoption. But is responsible AI an overlooked value creator?

Scott Zoldi, chief analytics officer at FICO and author of more than 35 patents in responsible AI methods, found that many customers he’s spoken to lacked a clear concept of responsible AI—aligning AI ethically with an organizational purpose—prompting an in-depth look at how tech leaders are managing it.

According to a new FICO report released this morning, responsible AI standards are considered essential innovation enablers by senior technology and AI leaders at financial services firms. More than half (56%) named responsible AI a leading contributor to ROI, compared to 40% who credited generative AI for bottom-line improvements.

The report, based on a global survey of 254 financial services technology leaders, explores the dynamic between chief AI/analytics officers—who focus on AI strategy, governance, and ethics—and CTOs/CIOs, who manage core technology operations and alignment with company objectives.

Zoldi explained that, while generative AI is valuable, tech leaders see the most critical problems and ROI gains arising from responsible AI and true synchronization of AI investments with business strategy—a gap that still exists in most firms. Only 5% of respondents reported strong alignment between AI initiatives and business goals, leaving 95% lagging in this area, according to the findings.

In addition, 72% of chief AI officers and chief analytics officers cite insufficient collaboration between business and IT as a major barrier to company alignment. Departments often work from different metrics, assumptions, and roadmaps.

This difficulty is compounded by a widespread lack of AI literacy. More than 65% said weak AI literacy inhibits scaling. Meanwhile, CIOs and CTOs report that only 12% of organizations have fully integrated AI operational standards.

In the FICO report, State Street’s Barbara Widholm notes, “Tech-led solutions lack strategic nuance, while AI-led initiatives can miss infrastructure constraints. Cross-functional alignment is critical.”

Chief AI officers are challenged to keep up with the rapid evolution of AI. Mastercard’s chief AI and data officer, Greg Ulrich, recently told Fortune that last year was “early innings,” focused on education and experimentation, but that the role is shifting from architect to operator: “We’ve moved from exploration to execution.”

Across the board, FICO found that about 75% of tech leaders surveyed believe stronger collaboration between business and IT leaders, together with a shared AI platform, could drive ROI gains of 50% or more. Zoldi highlighted the problem of fragmentation: “A bank in Australia I visited had 23 different AI platforms.”

When asked about innovation enablers, 83% of respondents rated cross-departmental collaboration as “very important” or “critical”—signaling that alignment is now foundational.

The report also stresses the importance of human-AI interaction: “Mature organizations will find the right marriage between the AI and the human,” Zoldi said. That involves human understanding of where to “best place AI in that loop,” he said.

Sheryl Estrada

sheryl.estrada@fortune.com

Leaderboard

Brian Robins was appointed CFO of Snowflake (NYSE: SNOW), an AI Data Cloud company, effective Sept. 22. Snowflake also announced that Mike Scarpelli is retiring as CFO. Scarpelli will stay a Snowflake employee for a transition period. Robins has served as CFO of GitLab Inc., a technology company, since October 2020. Before that, he was CFO of Sisense, Cylance, AlienVault, and Verisign.

Big Deal

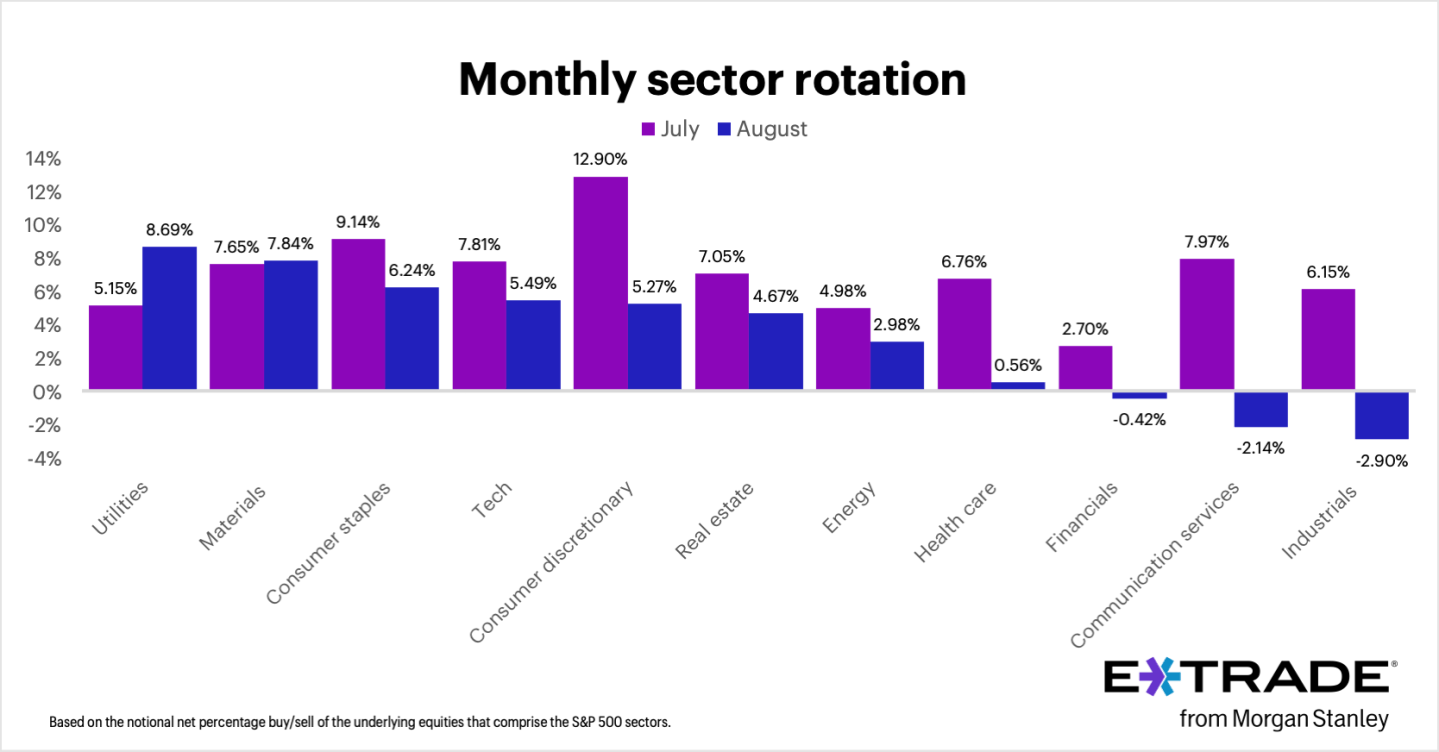

August marked the S&P 500’s fourth consecutive month of gains, with E*TRADE clients net buyers in eight out of 11 sectors, Chris Larkin, managing director of trading and investing, said in a statement. “But some of that buying was contrarian and possibly defensive,” Larkin noted. “Clients rotated most into utilities, a defensive sector that was actually the S&P 500’s weakest performer last month. Another traditionally defensive sector, consumer staples, received the third-most net buying.” By contrast, clients were net sellers in three sectors—industrials, communication services, and financials—which have been among the S&P 500’s stronger performers so far this year.

“Given September’s history as the weakest month of the year for stocks, it’s possible that some investors booked profits from recent winners while increasing positions in defensive areas of their portfolios,” Larkin added.

Going deeper

“Warren Buffett’s $57 billion face-plant: Kraft Heinz breaks up a decade after his megamerger soured” is a Fortune report by Eva Roytburg.

From the report: “Kraft Heinz, the packaged-food giant created in 2015 by Warren Buffett and Brazilian private equity firm 3G Capital, is officially breaking up. The Tuesday announcement ends one of Buffett’s highest-profile bets—and one of his most painful—as the merger that once promised efficiency and dominance instead wiped out roughly $57 billion, or 60%, in market value. Shares slid 7% after the announcement, and Berkshire Hathaway still owns a 27.5% stake.” You can read the complete report here.

Overheard

“Effective change management is the linchpin of enterprise-wide AI implementation, yet it’s often underestimated. I learned this first-hand in my early days as CEO at Sanofi.”

—Paul Hudson, CEO of global healthcare company Sanofi since September 2019, writes in a Fortune opinion piece. Previously, Hudson was CEO of Novartis Pharmaceuticals from 2016 to 2019.


Humans at Core: Navigating AI Ethics and Leadership

Hyderabad recently hosted a vital dialogue on ‘Human at Core: Conversations on AI, Ethics and Future,’ co-organized by IILM University and The Dr Pritam Singh Foundation at Tech Mahindra, Cyberabad. Gathering prominent figures from academia, government, and industry, the event delved into the ethical imperatives of AI and human-centric leadership in a tech-driven future.

The event commenced with Sri Gaddam Prasad Kumar advocating for technology as a servant to humanity, followed by a keynote from Sri Padmanabhaiah Kantipudi, who addressed the friction between rapid technological growth and ethical governance. Two panels explored the choice AI poses between progress and principle and the critical role of leadership in AI development.

Key insights emerged around empathy and foresight in AI’s evolution, as leaders like Manoj Jha and Rajesh Dhuddu emphasized. Dr. Ravi Kumar Jain highlighted the collective responsibility to steer innovation wisely, aligning technological advancement with human values. The event reinforced the importance of cross-sector collaboration to ensure technology enhances equity and dignity globally.


IILM University and The Dr Pritam Singh Foundation Host Round Table Conference on “Human at Core” Exploring AI, Ethics, and the Future

Hyderabad (Telangana) [India], September 4: IILM University, in collaboration with The Dr Pritam Singh Foundation, hosted a high-level round table discussion on the theme “Human at Core: Conversations on AI, Ethics and Future” at Tech Mahindra, Cyberabad, on 29th August 2025. The event brought together distinguished leaders from academia, government, and industry to engage in a timely and thought-provoking dialogue on the ethical imperatives of artificial intelligence and the crucial role of human-centric leadership in shaping a responsible technological future. The proceedings began with an opening address by Sri Gaddam Prasad Kumar, Speaker, Telangana Legislative Assembly, who emphasised the need to ensure that technology remains a tool in the service of humanity. This was followed by a keynote address delivered by Sri Padmanabhaiah Kantipudi, IAS (Retd.), Chairman of the Administrative Staff College of India (ASCI), who highlighted the growing tension between technological acceleration and ethical oversight.

The event featured two significant panel discussions, each addressing the complex intersections between technology, ethics, and leadership. The first panel, moderated by Mamata Vegunta, Executive Director and Head of HR at DBS Tech India, examined the question, “AI’s Crossroads: The Choice Between Progress and Principle.” The discussion reflected on the critical junctures at which leaders must make choices that balance innovation with responsibility. Panellists, including Deepak Gowda of the Union Learning Academy, Dr. Deepak Kumar of IDRBT, Srini Vudumula of Novelis Consulting, and Gaurav Maheshwari of Signode India Limited, shared their insights on the pressing need for robust ethical frameworks that evolve alongside AI.

The second panel, moderated by Vinay Agrawal, Global Head of Business HR at Tech Mahindra, focused on the theme “Human-Centred AI: Why Leadership Matters More Than Ever.” This session brought to light the growing expectation of leaders to act not just as enablers of technological progress, but as custodians of its impact. Panellists Manoj Jha from Makeen Energy, Dr Anadi Pande from Mahindra University, Rajesh Dhuddu of PwC, and Kiranmai Pendyala, investor and former UWH Chairperson, collectively underlined the importance of empathy, accountability, and foresight in guiding AI development.

Speaking at the event, Dr Ravi Kumar Jain, Director, School of Management – IILM University Gurugram, remarked, “We are at a defining moment in human history, where the question is not merely about how fast we can innovate, but how wisely we choose to do so. At IILM, we believe in nurturing leaders who are not only competent but also conscious of their responsibilities to society.” His sentiments were echoed by Prof Harivansh Chaturvedi, Director General at IILM Lodhi Road, who affirmed the university’s continued commitment to promoting responsible leadership through dialogue, collaboration, and critical inquiry. Across both panels, there was a shared recognition that ethical leadership must keep pace with the rapid transformations driven by AI, and that collaborative efforts across sectors will be essential to ensure that innovation serves the broader goals of equity, dignity, and humanity.

The discussions concluded with a renewed call to action for academic institutions, industry leaders, and policymakers to work together in shaping a future where technology empowers without eroding core human values. In doing so, the event reaffirmed the central message behind its theme that in an increasingly digital world, it is important now more than ever to keep it human at the core.

(Disclaimer: The above press release comes to you under an arrangement with PNN and PTI takes no editorial responsibility for the same.) PTI PWR

(This content is sourced from a syndicated feed and is published as received. The Tribune assumes no responsibility or liability for its accuracy, completeness, or content.)
