AI Insights

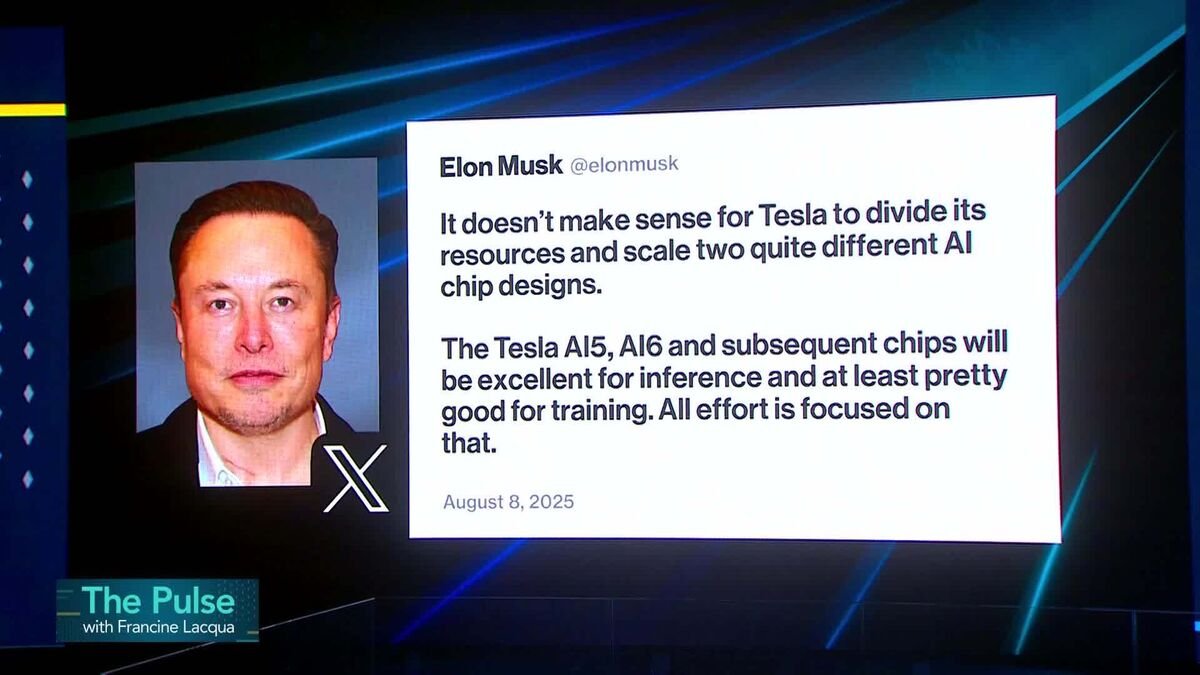

Tesla Disbands Dojo Supercomputer Team, Upending AI Work

Tesla Inc. is disbanding its Dojo team, upending the automaker’s effort to build an in-house supercomputer for developing driverless-vehicle technology. The team has lost about 20 workers recently to newly formed DensityAI, and remaining Dojo workers are being reassigned to other data center and compute projects within Tesla. Craig Trudell reports on Bloomberg Television.


Top Artificial Intelligence Stocks To Watch Now – September 15th – MarketBeat


Full Slate of Hearings This Week on Broadband, Artificial Intelligence and Energy

Congress tackles broadband, AI and energy in a busy week of hearings. Broadband Breakfast’s Resilient Critical Infrastructure Summit is on Thursday.


How Math Teachers Are Making Decisions About Using AI

Our Findings

Finding 1: Teachers valued many different criteria but placed highest importance on accuracy, inclusiveness, and utility.

We analyzed 61 rubrics that teachers created to evaluate AI. Teachers generated a diverse set of criteria, which we grouped into ten categories: accuracy, adaptability, contextual awareness, engagingness, fidelity, inclusiveness, output variety, pedagogical soundness, user agency, and utility. We asked teachers to rank their criteria in order of importance and found a relatively flat distribution: no single criterion was ranked most important by a majority of teachers. Still, our results suggest that teachers placed the highest importance on accuracy, inclusiveness, and utility.

Thirteen percent of teachers listed accuracy (which we defined as mathematically accurate, grounded in facts, and trustworthy) as their top evaluation criterion. Several teachers cited “trustworthiness” and “mathematical correctness” as their most important evaluation criteria, and another described accuracy as a “gateway” for continuing evaluation; in other words, if a tool was not accurate, it would not be worth evaluating further.

Another 13% ranked inclusiveness (which we defined as accessible to the diverse cognitive and cultural needs of users) as their top evaluation criterion. Teachers required AI tools to be inclusive of both student and teacher users. With respect to students, teachers suggested that AI tools must be “accessible,” free of “bias and stereotypes,” and “culturally relevant.” They also wanted AI tools to be adaptable for “all teachers.” One teacher wrote, “Different teachers/scenarios need different levels/styles of support. There is no ‘one size fits all’ when it comes to teacher support!”

Finally, 11% of teachers reported utility as their top evaluation criterion (defined as the benefits of using the tool significantly outweighing the costs). Teachers who cited this criterion valued “efficiency” and “feasibility.” One added that AI needed to be “directly useful to me and my students.”

In addition to accuracy, inclusiveness, and utility, teachers also valued tools that were relevant to their grade level or other context (10%), pedagogically sound (10%), and engaging (7%). Additionally, 8% reported that AI tools should be faithful to their own methods and voice. Several teachers listed “authentic,” “realistic,” and “sounds like me” as top evaluation criteria. One remarked that they wanted ChatGPT to generate questions for coaching colleagues, “in my voice,” adding, “I would only use ChatGPT-generated coaching questions if they felt like they were something I would actually say to that adult.”

| Code | Description | Examples |
|---|---|---|
| Accuracy | Tool outputs are mathematically accurate, grounded in fact, and trustworthy. | Grounded in actual research and sources (not hallucinations); mathematical correctness |
| Adaptability | Tool learns from data and can improve over time or with iterative prompting. | Continue to prompt until it fits the needs of the given scenario; continue to tailor it! |
| Contextual Awareness | Tool is responsive and applicable to specific classroom contexts, including grade level, standards, or teacher-specified goals. | Ability to be specific to a context / grade level / community |
| Engagingness | Tool evokes users’ interest, curiosity, or excitement. | A math problem should be interesting or motivate students to engage with the math |
| Fidelity | Tool outputs are faithful to users’ intent or voice. | In my voice: I would only use ChatGPT-generated coaching questions if they felt like they were something I would actually say to that adult |
| Inclusiveness | Tool is accessible to diverse cognitive and cultural needs of users. | I have to be able to adapt with regard to differentiation and cultural relevance. |
| Output Variety | Tool can provide a variety of output options for users to evaluate or enhance divergent thinking. | Multiple solutions; not all feedback from chat is useful, so providing multiple options is beneficial |
| Pedagogically Sound | Tool adheres to established pedagogical best practices. | Knowledge about educational lingo and pedagogies |
| User Agency | Tool promotes users’ control over their own teaching and learning experience. | It is used as a tool that enables student curiosity and advocacy for learning rather than a source to find answers. |
| Utility | Benefits of using the tool significantly outweigh the costs (e.g., risks, resource and time investment). | Efficiency – will it actually help or is it something I already know |

Table 1. Codes for the top criteria, along with definitions and examples.

Teachers expressed criteria in their own words, which we categorized and quantified via inductive coding.

We have summarized teachers’ evaluation criteria on the chart below:

Finding 2: Teachers’ evaluation criteria revealed important tensions in AI edtech tool design.

In some cases, teachers listed two or more evaluation criteria that were in tension with one another. For example, many teachers emphasized the importance of AI tools that were relevant to their teaching context, grade level, and student population, while also being easy to learn and use. Yet, providing AI tools with adequate context would likely require teachers to invest significant time and effort, compromising efficiency and utility. Additionally, tools with high degrees of context awareness might also pose risks to student privacy, another evaluation criterion some teachers named as important. Teachers could input student demographics, Individualized Education Plans (IEPs), and health records into an AI tool to provide more personalized support for a student. However, the same data could be leaked or misused in a number of ways, including further training of AI models without consent.

Another tension apparent in our data was between accuracy and creativity. As mentioned above, teachers placed the highest importance on mathematical correctness and trustworthiness, with one stating that they would not even consider other criteria if a tool was not reliably accurate or produced hallucinations. However, several teachers also listed creativity as a top criterion – a trait that stems from LLMs’ stochasticity, which is also a source of hallucinations. The tension is that while accuracy is paramount for fact-based queries, teachers may want to use AI tools as creative thought partners for generating novel, outside-the-box tasks – potentially containing mathematical inaccuracies – that motivate student reasoning and discussion.

Finding 3: A collaborative approach helped teachers quickly arrive at nuanced criteria.

One important finding is that, when given time and structure to explore, critique, and design with AI tools in community with peers, teachers developed nuanced ways of evaluating AI, even without prior training in AI. Grounding the summit in both teachers’ own values and concrete problems of practice helped teachers develop specific evaluation criteria tied to realistic classroom scenarios. We used purposeful tactics to organize teachers into groups with peers whose experiences with and attitudes toward AI differed from their own, exposing them to perspectives they might not otherwise have considered. Juxtaposing different perspectives informed thoughtful, balanced evaluation criteria, such as, “Teaching students to use AI tools as a resource for curiosity and creativity, not for dependence.” One teacher reflected, “There is so much more to learn outside of where I’m from and it is encouraging to learn from other people from all over.”

Over the course of the summit, several of our facilitators observed that teachers – even those who arrived with strong positive or strong negative feelings about AI – adopted a stance toward AI that we characterized as “critical but curious.” They moved easily between optimism and pessimism about AI, often in the same sentence. One teacher wrote in her summit reflection, “I’m mostly skeptical about using AI as a teacher for lesson planning, but I’m really excited … it could be used to analyze classroom talk, give students feedback … and help teachers foster a greater sense of community.” Another summed it up well: “We need more people dreaming and creating positive tools to outweigh those that will create tools that will cause challenges to education and our society as a whole.”
