Jobs & Careers

NVIDIA H20 Chip Shortage Delays DeepSeek R2 Launch

The launch of DeepSeek’s upcoming model, R2, could face significant setbacks in China as US export restrictions choke the supply of NVIDIA’s H20 chips, critical for running the company’s AI models, reported The Information.

R2, the successor to DeepSeek’s widely used R1, has yet to receive a release date. The report added that CEO Liang Wenfeng is unsatisfied with its performance, and engineers continue to work on improvements before it is cleared for launch.

Cloud providers that host and distribute DeepSeek’s models warn that existing inventories of NVIDIA chips will likely fall short of meeting the demand R2 could generate, particularly if it performs better than current open-source alternatives.

These concerns have intensified following the April ban on NVIDIA’s H20 chip, which was specifically built for the Chinese market after earlier export restrictions barred the sale of more powerful Hopper series GPUs.

During the recent earnings call, NVIDIA CFO Colette Kress said the company’s outlook reflects a loss of approximately $8 billion in H20 revenue for the second quarter.

R1 and R2 are tightly optimised to run on NVIDIA’s architecture, making substitution with Chinese-developed chips difficult and inefficient.

According to the report, employees at Chinese cloud companies said DeepSeek’s models “are so completely optimised for NVIDIA’s hardware and software” that deploying them on domestic alternatives is not viable at scale.

Despite the ban, some Chinese companies have found a workaround to obtain NVIDIA hardware.

According to The Wall Street Journal, engineers from Chinese AI companies are heading to Kuala Lumpur, Malaysia, with hard drives packed with instructions and data to train AI models. They then utilise the NVIDIA chips available at Malaysian data centres to train the model and return it to China.

Meanwhile, to cope with chip shortages, some Chinese firms have resorted to using gaming GPUs like NVIDIA’s RTX 5090 and 4090, which are also under export restrictions but easier to obtain through grey markets.

DeepSeek, backed by hedge fund firm High-Flyer Capital Management, made headlines for training R1 with less compute than US competitors like OpenAI.

In response to the surge in R1 usage, major Chinese tech firms, including ByteDance, Alibaba, and Tencent, placed $16 billion worth of orders for 1.2 million H20 chips in early 2025, according to SemiAnalysis estimates. That compares with the 1 million chips shipped to China by NVIDIA last year.

Despite these efforts, the scalability of R2 in China could be limited. Companies outside China, not constrained by US chip curbs, may find it easier to deploy the model at full scale.

Jobs & Careers

TCS Launches Chiplet-Based Engineering Services to Boost Semiconductor Innovation

Tata Consultancy Services (TCS) has launched its chiplet-based system engineering services to help semiconductor companies design next-generation chips. TCS aims to enable faster, more efficient and powerful processors at a time when demand for advanced semiconductors is rising.

The company said that the new services are designed to support chipmakers as the industry shifts from traditional chip design to chiplet-based systems.

“TCS Chiplet-based System Engineering services will help semiconductor enterprises accelerate chiplets tapeout, driving flexibility, scalability and faster time to market,” said V Rajanna, president for technology, software and services at TCS.

Why Chiplet-Based Design Matters

The semiconductor industry faces bottlenecks in scaling and making chiplet-based design a preferred approach. Smaller chips can be mixed and matched to meet varied needs, enabling faster product launches and cost reduction.

With demand driven by AI, cloud computing, smartphones, electric vehicles and connected devices, this shift comes at a critical time.

India’s semiconductor market, valued at $45–50 billion in 2024–2025, is projected to grow to $100–110 billion by 2030. Backed by the India Semiconductor Mission, the country is aiming to become a global hub for chip design and manufacturing.

TCS’s new services are expected to strengthen this momentum by giving companies access to chip-to-system engineering expertise.

TCS in the Semiconductor Industry

TCS has over 20 years of experience in the semiconductor sector and offers a portfolio of chip-to-system engineering services. Its offerings include design and verification of UCIe and HBM standards, as well as advanced package design such as 2.5D and 3D interposers. The company has also worked with a North American semiconductor firm to integrate chiplets into AI processors, reducing delivery timelines.

In February this year, the Indian IT giant also announced a collaboration with Salesforce to enhance the use of AI in the manufacturing and semiconductor sectors. As part of this partnership, TCS launched three key initiatives to improve sales and service efficiency. The Semiconductor Sales Accelerator was expected to help businesses increase sales by providing data-driven insights.

The post TCS Launches Chiplet-Based Engineering Services to Boost Semiconductor Innovation appeared first on Analytics India Magazine.

Jobs & Careers

12 Essential Lessons for Building AI Agents

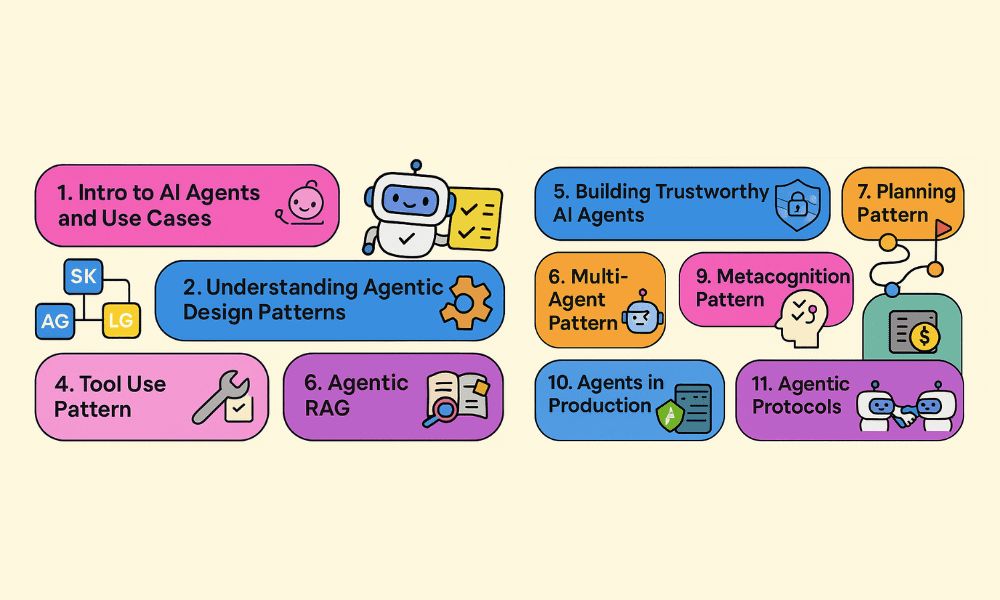

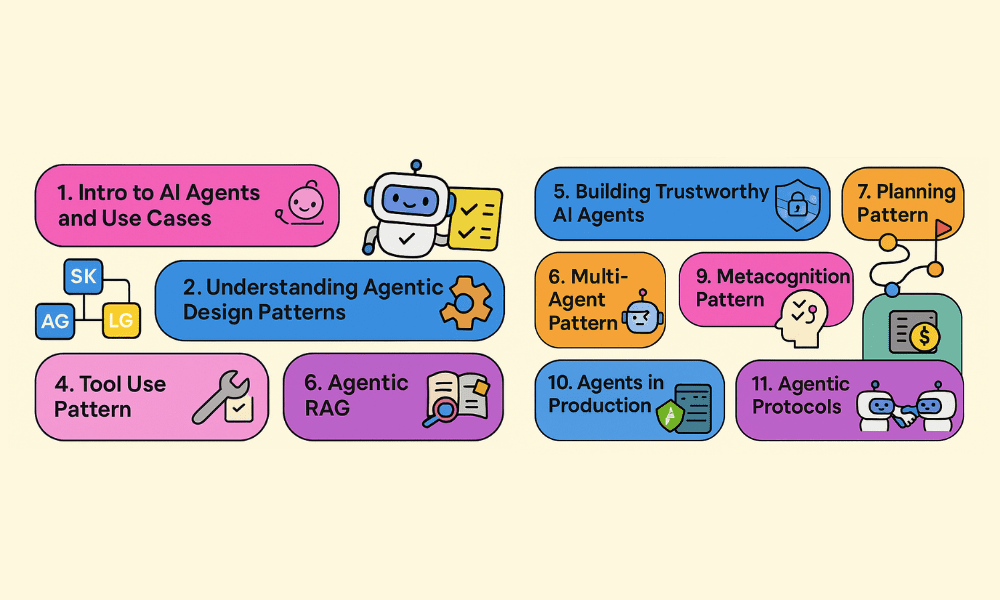

Image by Author | Canva & ChatGPT

# Introduction

GitHub has become the go-to platform for beginners eager to learn new programming languages, concepts, and skills. With the growing interest in agentic AI, the platform is increasingly showcasing real projects that focus on “agentic workflows,” making it an ideal environment to learn and build.

One notable resource is microsoft/ai-agents-for-beginners, which features a 12-lesson course covering the fundamentals of building AI agents. Each lesson is designed to stand on its own, allowing you to start at any point that suits your needs. This repository also offers multi-language support, ensuring broader accessibility for learners. Each lesson in this course includes code examples, which can be found in the code_samples folder.

Moreover, this course uses Azure AI Foundry and GitHub Model Catalogs for interacting with language models. It also incorporates several AI agent frameworks and services like Azure AI Agent Service, Semantic Kernel, and AutoGen.

To facilitate your decision-making process and provide a clear overview of what you will learn, we will review each lesson in detail. This guide serves as a helpful resource for beginners who might feel uncertain about choosing a starting point.

# 1. Intro to AI Agents and Agent Use Cases

This lesson introduces AI agents — systems powered by large language models (LLMs) that sense their environment, reason over tools and knowledge, and act — and surveys key agent types (simple/model-based reflex, goal/utility-based, learning, hierarchical, and multi-agent systems (MAS)) through travel-booking examples.

You will learn when to apply agents to open-ended, multi-step, and improvable tasks, and the foundational building blocks of agentic solutions: defining tools, actions, and behaviors.

# 2. Exploring AI Agentic Frameworks

This lesson explores AI agent frameworks with pre-built components and abstractions that let you prototype, iterate, and deploy agents faster by standardizing common challenges and boosting scalability and developer efficiency.

You will compare Microsoft AutoGen, Semantic Kernel, and the managed Azure AI Agent Service, and learn when to integrate with your existing Azure ecosystem versus using standalone tools.

# 3. Understanding AI Agentic Design Patterns

This lesson introduces AI agentic design principles, a human-centric user experience (UX) approach for building customer-focused agent experiences amid the inherent ambiguity of generative AI.

You will learn what the principles are, practical guidelines for applying them, and examples of their use, with an emphasis on agents that broaden and scale human capacities, fill knowledge gaps, facilitate collaboration, and help people become better versions of themselves through supportive, goal-aligned interactions.

# 4. Tool Use Design Pattern

This lesson introduces the tool-use design pattern, which allows LLM-powered agents to have controlled access to external tools such as functions and APIs, enabling them to take actions beyond just generating text.

You will learn about key use cases, including dynamic data retrieval, code execution, workflow automation, customer support integrations, and content generation/editing. Additionally, the lesson will cover the essential building blocks of this design pattern, such as well-defined tool schemas, routing and selection logic, execution sandboxing, memory and observations, and error handling (including timeout and retry mechanisms).

# 5. Agentic RAG

This lesson explains agentic retrieval-augmented generation (RAG), a multi-step retrieval-and-reasoning approach driven by large language models (LLMs). In this approach, the model plans actions, alternates between tool/function calls and structured outputs, evaluates results, refines queries, and repeats the process until achieving a satisfactory answer. It often uses a maker-checker loop to enhance correctness and recover from malformed queries.

You will learn about the situations where agentic RAG excels, particularly in correctness-first scenarios and extended tool-integrated workflows, such as API calls. Additionally, you will discover how taking ownership of the reasoning process and using iterative loops can enhance reliability and outcomes.

# 6. Building Trustworthy AI Agents

This lesson teaches you how to build trustworthy AI agents by designing a robust system message framework (meta prompts, basic prompts, and iterative refinement), enforcing security and privacy best practices, and delivering a quality user experience.

You will learn to identify and mitigate risks, such as prompt/goal injection, unauthorized system access, service overloading, knowledge-base poisoning, and cascading errors.

# 7. Planning Design Pattern

This lesson focuses on planning design for AI agents. Start by defining a clear overall goal and establishing success criteria. Then, break down complex tasks into ordered and manageable subtasks.

Use structured output formats to ensure reliable, machine-readable responses, and implement event-driven orchestration to address dynamic tasks and unexpected inputs. Equip agents with the appropriate tools and guidelines for when and how to use them.

Continuously evaluate the outcomes of the subtasks, measure performance, and iterate to improve the final results.

# 8. Multi-Agent Design Pattern

This lesson explains the multi-agent design pattern, which involves coordinating multiple specialized agents to collaborate toward a shared goal. This approach is particularly effective for complex, cross-domain, or parallelizable tasks that benefit from the division of labor and coordinated handoffs.

In this lesson, you will learn about the core building blocks of this design pattern: an orchestrator/controller, role-defined agents, shared memory/state, communication protocols, and routing/hand-off strategies, including sequential, concurrent, and group chat patterns.

# 9. Metacognition Design Pattern

This lesson introduces metacognition, which can be understood as “thinking about thinking,” for AI agents. Metacognition allows these agents to monitor their own reasoning processes, explain their decisions, and adapt based on feedback and past experiences.

You will learn planning and evaluation techniques, such as reflection, critique, and maker-checker patterns. These methods promote self-correction, help identify errors, and prevent endless reasoning loops. Additionally, these techniques will enhance transparency, improve the quality of reasoning, and support better adaptation and perception.

# 10. AI Agents in Production

This lesson demonstrates how to transform “black box” agents into “glass box” systems by implementing robust observability and evaluation techniques. You will model runs as traces (representing end-to-end tasks) and spans (petitions for specific steps involving language models or tools) using platforms like Langfuse and Azure AI Foundry. This approach will enable you to perform debugging and root-cause analysis, manage latency and costs, and conduct trust, safety, and compliance audits.

You will learn what aspects to evaluate, such as output quality, safety, tool-call success, latency, and costs, and apply strategies to enhance performance and effectiveness.

# 11. Using Agentic Protocols

This lesson introduces agentic protocols that standardize the ways AI agents connect and collaborate. We will explore three key protocols:

Model Context Protocol (MCP), which provides consistent, client-server access to tools, resources, and prompts, functioning as a “universal adapter” for context and capabilities.

Agent-to-Agent Protocol (A2A), which ensures secure, interoperable communication and task delegation between agents, complementing the MCP.

Natural Language Web Protocol (NLWeb), which enables natural-language interfaces for websites, allowing agents to discover and interact with web content.

In this lesson, you will learn about the purpose and benefits of each protocol, how they enable large language models (LLMs) to communicate with tools and other agents, and where each fits into larger architectures.

# 12. Context Engineering for AI Agents

This lesson introduces context engineering, which is the disciplined practice of providing agents with the right information, in the right format, and at the right time. This approach enables them to plan their next steps effectively, moving beyond one-time prompt writing.

You will learn how context engineering differs from prompt engineering, as it involves ongoing, dynamic curation rather than static instructions. Additionally, you will understand why strategies such as writing, selecting, compressing, and isolating information are essential for reliability, especially given the limitations of constrained context windows.

# Final Thoughts

This GitHub course provides everything you need to start building AI agents. It includes comprehensive lessons, short videos, and runnable Python code. You can explore topics in any order and run samples using GitHub Models (available for free) or Azure AI Foundry.

Additionally, you will have the opportunity to work with Microsoft’s Azure AI Agent Service, Semantic Kernel, and AutoGen. This course is community-driven and open source; contributions are welcome, issues are encouraged, and it is licensed for you to fork and extend.

Abid Ali Awan (@1abidaliawan) is a certified data scientist professional who loves building machine learning models. Currently, he is focusing on content creation and writing technical blogs on machine learning and data science technologies. Abid holds a Master’s degree in technology management and a bachelor’s degree in telecommunication engineering. His vision is to build an AI product using a graph neural network for students struggling with mental illness.

Jobs & Careers

Uber Users Can Soon Book Electric Air Taxis Through the App

Uber is preparing to expand its travel options by adding air mobility services to its app, through a new partnership with Joby Aviation. The move follows Joby’s acquisition of Blade Air Mobility’s passenger business in August, paving the way for Blade flights to be integrated into Uber as soon as next year.

Blade claims that it has been a key player in short-distance air travel, flying more than 50,000 passengers in 2024 across routes in New York and Southern Europe. By combining this network with Uber’s global platform, the companies aim to deliver a seamless experience for customers looking to move quickly between airports, city centres and other high-traffic destinations.

Andrew Macdonald, president and COO of Uber, said in a statement that the deal builds on a long-standing belief at the company.

“Since Uber’s earliest days, we’ve believed in the power of advanced air mobility to deliver safe, quiet and sustainable transportation to cities around the world,” he said.

“By harnessing the scale of the Uber platform and partnering with Joby, we’re excited to bring to our customers the next generation of travel.”

Uber’s ties to the sector date back to 2019, when it began collaborating with Joby to shape urban air mobility. In 2021, Joby acquired Uber’s Elevate division, which helped create tools for market selection and multi-modal transport.

Joby now plans to use Blade’s infrastructure and decade of operational experience to accelerate the launch of its own electric air taxi service in global markets, including Dubai, New York, Los Angeles, the United Kingdom and Japan.

The integration of Blade now should set the stage for the eventual rollout of Joby’s own zero-emissions air taxi, designed to carry four passengers and a pilot at speeds of up to 200 miles per hour (321 kmph).

For Uber, the deal extends its multi-modal vision, placing helicopters and electric air taxis alongside cars, bikes and deliveries.

The post Uber Users Can Soon Book Electric Air Taxis Through the App appeared first on Analytics India Magazine.

-

Business2 weeks ago

Business2 weeks agoThe Guardian view on Trump and the Fed: independence is no substitute for accountability | Editorial

-

Tools & Platforms1 month ago

Building Trust in Military AI Starts with Opening the Black Box – War on the Rocks

-

Ethics & Policy2 months ago

Ethics & Policy2 months agoSDAIA Supports Saudi Arabia’s Leadership in Shaping Global AI Ethics, Policy, and Research – وكالة الأنباء السعودية

-

Events & Conferences4 months ago

Events & Conferences4 months agoJourney to 1000 models: Scaling Instagram’s recommendation system

-

Jobs & Careers2 months ago

Jobs & Careers2 months agoMumbai-based Perplexity Alternative Has 60k+ Users Without Funding

-

Podcasts & Talks2 months ago

Podcasts & Talks2 months agoHappy 4th of July! 🎆 Made with Veo 3 in Gemini

-

Education2 months ago

Education2 months agoVEX Robotics launches AI-powered classroom robotics system

-

Education2 months ago

Education2 months agoMacron says UK and France have duty to tackle illegal migration ‘with humanity, solidarity and firmness’ – UK politics live | Politics

-

Funding & Business2 months ago

Funding & Business2 months agoKayak and Expedia race to build AI travel agents that turn social posts into itineraries

-

Podcasts & Talks2 months ago

Podcasts & Talks2 months agoOpenAI 🤝 @teamganassi