AI Research

AI and health care: Professor’s paper explores how systems assess patient risks and medical coding: Luddy School of Informatics, Computing, and Engineering : Indiana University

Could AI and large-language models become tools to better manage health care?

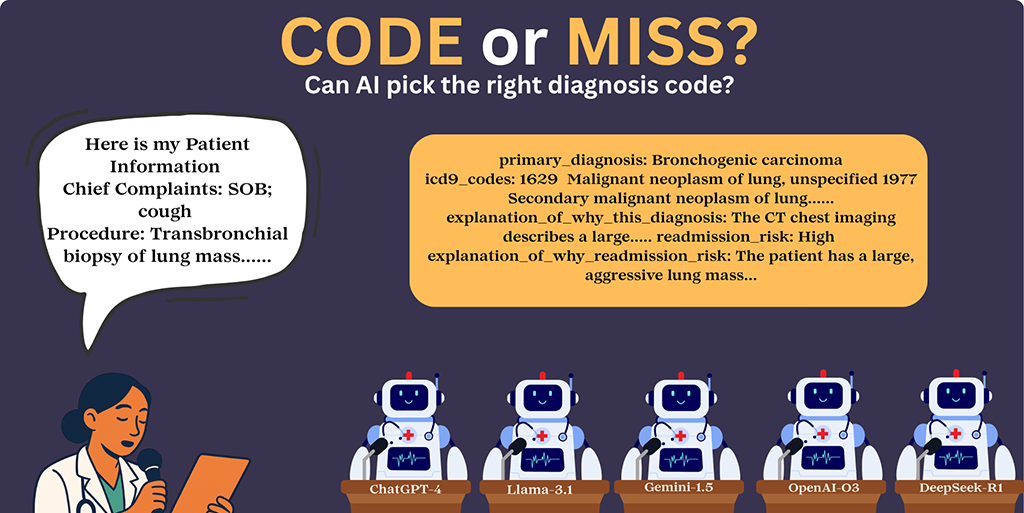

The potential is there – but evidence is lacking. In a paper published in the Journal of Medical Internet Research (JMIR), Luddy Indianapolis Associate Professor Saptarshi Purkayastha examines how large-language models (LLMs) such as ChatGPT-4 and OpenAI-03 performed when tackling some essential clinical tasks. (Spoiler: large-language models have some large problems to overcome.)

The paper published July 30 in the journal, “Evaluating the Reasoning Capabilities of Large Language Models for Medical Coding and Hospital Readmission Risk Stratification: Zero-Shot Prompting Approach,” assesses whether LLMs can serve as general-purpose clinical decision support tools.

“For health care leaders and clinical researchers, the study offers a clear message: while LLMs hold significant potential to support clinical workflows, such as speeding up coding drafts and risk stratification, they are not yet ready to replace human expertise,” says Purkayastha, Ph.D. He is director of Health Informatics and associate chair of the Biomedical Engineering and Informatics department at IU’s Luddy School of Informatics, Computing, and Engineering in Indianapolis.

“The recommended path forward,” he adds, “lies in responsible deployment through hybrid human-AI workflows, specialized fine-tuning on clinical datasets, inspections for detecting bias, and robust governance frameworks that ensure continuous monitoring, auditing, and correction.”

Crunching the numbers

Large-language models are artificial intelligence systems designed to understand and generate human-like text.

Newer reasoning models, which emerged during the study, have reasoning capabilities embedded in their design, allowing more logical, step-by-step decision-making, the paper notes.

For this study, Purkayastha and co-authors Parvati Naliyatthaliyazchayil, Raajitha Mutyala, and Judy Gichoya focused on five LLMs: DeepSeek-R1 and OpenAI-O3 (reasoning models), and ChatGPT-4, Gemini-1.5, and LLaMA-3.1 (non-reasoning models).

The study evaluated the models’ performance in three key clinical tasks:

- Primary diagnosis generation

- ICD-9 medical code prediction

- Hospital readmission risk stratification

Working backwards

When you’re hospitalized, you probably have a lot of questions. By the time you’re discharged, you should have some answers.

In their study, Purkayastha and his co-authors reversed the process, giving the large-language models the results, and letting them take it from there.

“We selected a random cohort of 300 hospital discharge summaries,” the authors explained in their JMIR research paper. The large-language models were given structured clinical content from five note sections:

- Chief complaints

- Past medical history

- Surgical history

- Labs

- Imaging

The challenge: Would the models be able to accurately generate a primary diagnosis; predict medical codes; and assess risk of readmission?

A variable track record

The researchers used zero-shot prompting. This meant the LLMs had NOT seen the actual samples used in the discharge summaries before.

“All model interactions were conducted through publicly available web user interfaces,” the researchers noted, “without using APIs or backend access, to simulate real-world accessibility for non-technical users.”

How did the large-language models perform?

Primary diagnosis generation

This is where LLMs shone brightest. “Among non-reasoning models, LLaMA-3.1 achieved the highest primary diagnosis accuracy (85%), followed by ChatGPT-4 (84.7%) and Gemini-1.5 (79%),” the researchers reported. “Among reasoning models, OpenAI-O3 outperformed in diagnosis (90%).”

ICD-9 medical code prediction

Large-language models fell behind in this category. “For ICD-9 prediction, correctness dropped significantly across all models: LLaMA-3.1 (42.6%), ChatGPT-4 (40.6%), Gemini-1.5 (14.6%),” according to the researchers. OpenAI-03, a reasoning model, scored 45.3%.

Hospital readmission risk stratification

Hospital readmission risk prediction showed low performance in non-reasoning models: LLaMA-3.1 (41.3%), Gemini-1.5 (40.7%), ChatGPT-4 (33%). Reasoning model DeepSeek-R1 performed slightly better in the readmission risk prediction (72.66% vs. OpenAI-O3’s 70.66%), the paper states.

The takeaways

“This study reveals critical insights with profound real-world implications,” Purkayastha says.

“Misclassification in coding can lead to billing inaccuracies, resource misallocation, and flawed health care data analytics. Similarly, incorrect readmission risk predictions may impact discharge planning and patient safety.

“When AI systems err or hallucinate, questions of liability and transparency become pressing. Ambiguities about who is accountable – developers, clinicians, or health care providers – raise legal and professional risks.”

Looking at reasoning vs. non-reasoning models, the researchers said, “Our results show that reasoning models outperformed nonreasoning ones across most tasks.”

The researchers concluded OpenAI-03 outperformed the other models in these tasks, noting, “Reasoning models offer marginally better performance and increased interpretability but remain limited in reliability.”

Their conclusion: when it comes to clinical decision-making and artificial intelligence, there’s a lot of room for improvement.

Identifying LLM shortcomings can lead to solutions

“These results highlight the need for task-specific fine-tuning and adding more human-in-loop models to train them,” the researchers concluded. “Future work will explore fine-tuning, stability through repeated trials, and evaluation on a different subset of de-identified real-world data with a larger sample size.

“The recorded limitations serve as essential guideposts for safely and effectively integrating LLMs into clinical practice.”

Purkayastha acknowledges the role of artificial intelligence in the clinical workflow going forward.

“As artificial intelligence continues to reshape the future of health care, this study represents an important contribution,” he says, “demonstrating original research with significant implications.

“It advocates for balanced optimism paired with caution and ethical vigilance, ensuring that the power of AI truly enhances patient care without compromising safety or trust.”

AI Research

Ray Dalio calls for ‘redistribution policy’ when AI and humanoid robots start to benefit the top 1% to 10% more than everyone else

Legendary investor Ray Dalio, founder of Bridgewater Associates, has issued a stark warning regarding the future impact of artificial intelligence (AI) and humanoid robots, predicting a dramatic increase in wealth inequality that will necessitate a new “redistribution policy”. Dalio articulated his concerns, suggesting that these advanced technologies are poised to benefit the top 1% to 10% of the population significantly more than everyone else, potentially leading to profound societal challenges.

Speaking on “The Diary Of A CEO” podcast, Dalio described a future where humanoid robots, smarter than humans, and advanced AI systems, powered by trillions of dollars in investment, could render many current professions obsolete. He questioned the need for lawyers, accountants, and medical professionals if highly intelligent robots with PhD-level knowledge become commonplace, stating, “we will not need a lot of those jobs.” This technological leap, while promising “great advances,” also carries the potential for “great conflicts.”

He predicted “a limited number of winners and a bunch of losers,” with the likely result being much greater polarity. With the top 1% to 10% “benefiting a lot,” he foresees that being a dividing force. He described the current business climate on AI and robotics as a “crazy boom,” but the question that’s really on his mind is: why would you need even a highly skilled professional if there’s a “humanoid robot that is smarter than all of us and has a PhD and everything.” Perhaps surprisingly, the founder of the biggest hedge fund in history suggested that redistribution will be sorely needed.

Five big forces

“There certainly needs to be a redistribution policy,” Dalio told host Steven Bartlett, without directly mentioning universal basic income. He clarified that this will have to more than “just a redistribution of money policy because uselessness and money may not be a great combination.” In other words, if you redistribute money but don’t think about how to put people to work, that could have negative effects in a world of autonomous agents. The ultimate takeaway, Dalio said, is “that has to be figured out, and the question is whether we’re too fragmented to figure that out.”

Dalio’s remarks echo those of computer science professor Roman Yampolskiy, who sees AI creating up to 80 hours of free time per week for most people. But AI is also showing clear signs of shrinking the jobs market for recent grads, with one study seeing a 13% drop in AI-exposed jobs since 2022. Major revisions from the Bureau of Labor Statistics show that AI has begun “automating away tech jobs,” an economist said in a statement to Fortune in early September.

Dalio said he views this technological acceleration as the fifth of five “big forces” that create an approximate 80-year cycle throughout history. He explained that human inventiveness, particularly with new technologies, has consistently raised living standards over time. However, when people don’t believe the system works for them, he said, internal conflicts and “wars between the left and the right” can erupt. Both the U.S. and UK are currently experiencing these kinds of wealth and values gaps, he said, leading to internal conflict and a questioning of democratic systems.

Drawing on his extensive study of history, which spans 500 years and covers the rise and fall of empires, Dalio sees a historical precedent for such transformative shifts. He likened the current era to previous evolutions, from the agricultural age, where people were treated “essentially like oxen,” to the industrial revolutions where machines replaced physical labor. He said he’s concerned about a similar thing with mental labor, as “our best thinking may be totally replaced.” Dalio highlighted that throughout history, “intelligence matters more than anything” as it attracts investment and drives power.

Pessimistic outlook

Despite the “crazy boom” in AI and robotics, Dalio’s outlook on the future of major powers like the UK and U.S. was not optimistic, citing high debt, internal conflict, and geopolitical factors, in addition to a lack of innovative culture and capital markets in some regions. While personally “excited” by the potential of these technologies, Dalio’s ultimate concern rests on “human nature”. He questions whether people can “rise above this” to prioritize the “collective good” and foster “win-win relationships,” or if greed and power hunger will prevail, exacerbating existing geopolitical tensions.

Not all market watchers see a crazy boom as such a good thing. Even OpenAI CEO Sam Alman himself has said it resembles a “bubble” in some respects. Goldman Sachs has calculated that a bubble popping could wipe out up to 20% of the S&P 500’s valuation. And some long-time critics of the current AI landscape, such as Gary Marcus, disagree with Dalio entirely, arguing that the bubble is due to pop because the AI technology currently on the market is too error-prone to be relied upon, and therefore can’t be scaled away. Stanford computer science professor Jure Leskovec told Fortune that AI is a powerful but imperfect tool and it’s boosting “human expertise” in his classroom, including the hand-written and hand-graded exams that he’s using to really test his students’ knowledge.

For this story, Fortune used generative AI to help with an initial draft. An editor verified the accuracy of the information before publishing.

AI Research

Artificial Intelligence Cheating – The Post Star

Artificial Intelligence Cheating The Post Star

Source link

AI Research

Leveraging AI And Data Science For Biologics Characterization

By Simon Letarte, Letarte Scientific Consulting LLC

Biologics complexity presents significant challenges for characterization, quality control, and regulatory approval. Unlike small molecules, biologics are heterogeneous and structurally intricate, requiring advanced analytical techniques for comprehensive characterization. In this context, artificial intelligence (AI) and data science have emerged as powerful tools, augmenting traditional characterization methods and driving innovation throughout the life cycle of biologic drug development.

Artificial intelligence encompasses machine learning (ML), deep learning, natural language processing (NLP), and other computational techniques that enable software to learn from data, identify patterns, and make predictions. Data science refers to the broader discipline of collecting, processing, analyzing, and visualizing data to extract actionable knowledge.

This paper will focus on the three following case studies:

- CQA data sharing

- Peptide map results interpretation

- Automated regulatory authoring

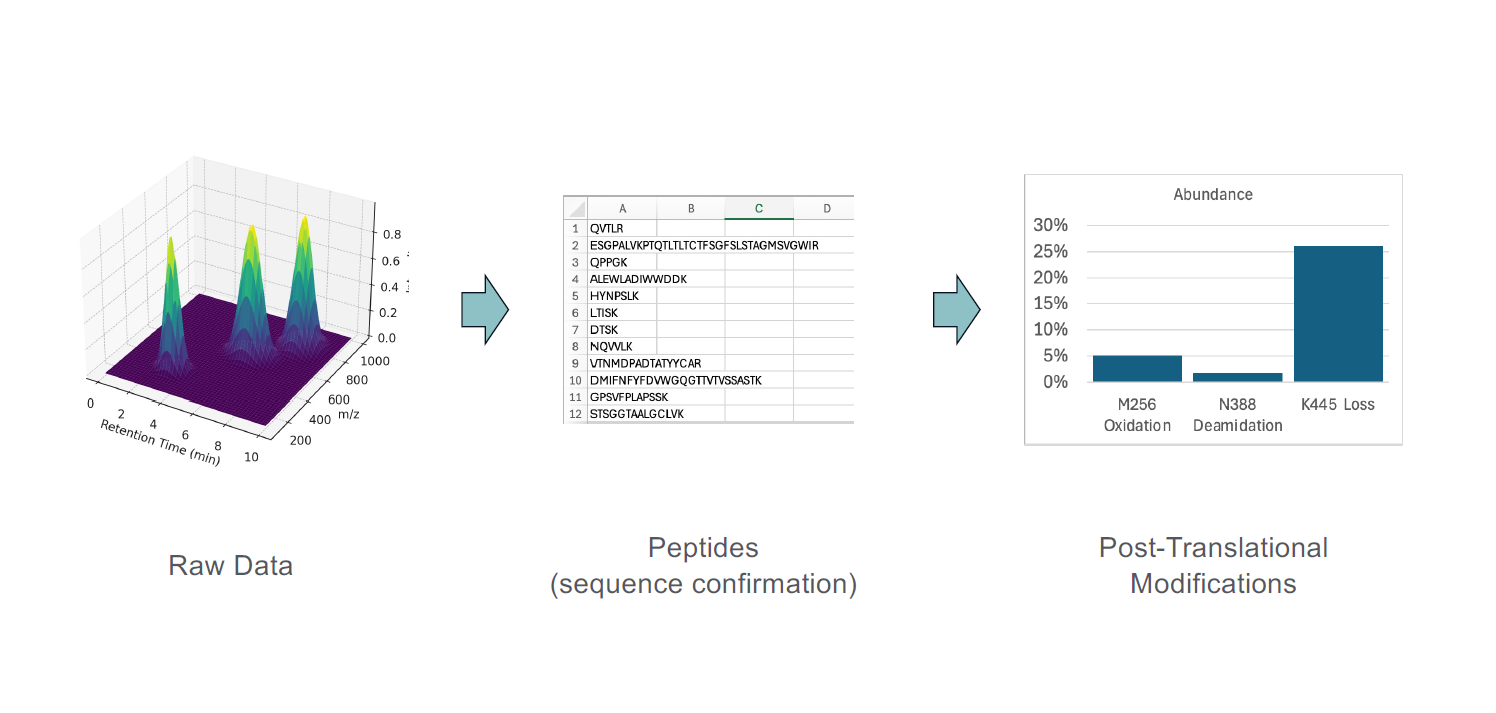

Distilling Raw Data Into CQA Measurements

The characterization of biologics encompasses detailed analysis of their physicochemical properties, including structural integrity, glycosylation patterns, aggregation status, purity, potency, and immunogenicity. Analytical platforms such as mass spectrometry, chromatography, electrophoresis, and bioassays generate complex data sets that require expert interpretation, often through several layers of software. For example, an LC-MS experiment has raw data that is composed of retention time, m/z, and intensity. The raw data tends to be very large and not very informative on its own. It needs to be contextualized and go through a software stack to identify the peptides and proteins that were contained in the sample, as illustrated in Figure 1. Most of the time, we are not interested in the peptides and proteins but in the post-translational modifications (PTMs) on specific amino acids. Therefore, another step of data processing is often necessary.

What data do we share, and how? The raw data should be kept for as long as the corporate policy will allow so it can be re-interrogated if needed in the future. Realistically, the raw data is only useful to the team that performed the analysis because it requires specialized software to read and process. It is the processed data that is of interest to the broader team, and it should be easily accessible to the stakeholders.

Mass spectrometry is unique among analytical techniques because it can tell what is in the sample and how much of it there is. This poses a problem for standardization of the data because the reported attributes may not be defined until after the analysis is complete.

One solution to sharing analytical data is to focus on critical quality attributes (CQAs). In early stages of development, exploratory experiments should be performed to identify the molecule’s liabilities and degradation pathways. Once that is established, one must create analysis templates to record the value of each potential CQA so that every time the analysis is performed, those values are recorded in a structured way. For this to be successful, the team needs to align on a standard vocabulary to name attributes.

Central to this concept, a CQA database is a useful tool, where attributes, criticality scoring, justifications, and recorded values can be visualized for each attribute. One can later search for maximum, minimum, or average value for any lot of product analyzed. This information needs to be available cross-functionally to all stakeholders.

Using AI To Interpret Peptide Map Results

Another interesting area where AI can be applied is in the interpretation of analytical testing results. AI could look at the peptide map results and tell the analyst what the concerning PTMs are as well as their impacts on PK/PD, biological activity, immunogenicity, and safety. This is a level of knowledge that an expert analyst would have in their head, but leveraging AI could make everybody an expert.

General large language models (LLMs) can perform this task to a certain extent but can be unreliable when the residues don’t match the Kabat notation. Most current AI tools do not understand biology and cannot account for whether an amino acid is in an impactful region or not. But this is changing very rapidly, and the tools are much better today than they were a few months ago. There are opportunities to make dedicated AI tools, such as small language models (SMLs) and fine tune them to produce more comprehensive and reliable results for a set of predefined tasks.

Automated Regulatory Authoring

Regulatory authoring tends to be a time-consuming and stressful activity for the analytical scientist. This includes wrapping up the last experiments, finishing the electronic lab notebooks (ELNs), writing up the scientific reports, the multiple rounds of revisions, and, finally, authoring the regulatory document. To streamline this process, one can develop content mapping scripts between data from an ELN to automatically transfer tables and figures into a predefined section of a Word document, as illustrated in Figure 2. Once the notebook is approved, the data remains in a validated state and can be mapped into the regulatory document template.

The above approach encourages end-to-end planning of the regulatory writing and can save significant time iterating across the different versions of the reports. Most modern ELNs offer application programming interface (APIs) that can interface with custom scripts to transfer the data into Microsoft Word documents.

A Word Of Caution

Although AI can write entire documents, we need to keep in mind how the activities of writing and thinking are interconnected. It is well documented that writing things down helps with remembering them and creates connections in the brain.1, 2

As an illustration of this process, I was recently reviewing a report one of my team members wrote. We had found a trace-level impurity but were unsure of its identity. The deadline for the report was approaching, so we began drafting. As I read the draft and rewrote some sections, something clicked. It clicked because I was reading the entire body of evidence at once and mentally connecting loose ends. My attention was drawn where the text was imperfect, perhaps a sign of gaps in the story. I started thinking and rearranging paragraphs. Eventually, I noticed minor peaks from another assay that were explaining our unknown trace impurity.

The process of writing and revising the document directly led to this important insight. I spent more time where the text was imperfect and unclear, which indicated to me a lack of understanding and deficiency in the data. If the text had been written by an AI, all proper, grammatically correct, and boring, I might have missed it.

Challenges And Future Directions

While the promise of AI and data science in biologics characterization is profound and goes far beyond the few case studies presented here, several obstacles remain:

- Data quality and standardization: The utility of AI depends on robust, high-quality training data, which is often lacking due to proprietary constraints and variability in experimental practices. As with anything, garbage in, garbage out.

- Model interpretability: Regulatory agencies and stakeholders require transparent, interpretable models. The “black box” nature of deep learning can limit adoption. We need more deterministic and fewer probabilistic models.

- Integration with legacy systems: Many laboratories operate with heterogeneous equipment and software, complicating data integration and workflow automation. A lot of effort is needed at the interfaces to ensure the connections are made correctly.

Future advances may include federated learning for cross-institutional model development, use of specialized SMLs, explainable AI for regulatory acceptance, and the integration of AI with real-time analytics for adaptive process control.

Conclusion

Today is a great time to enroll AI to give a productivity boost to your team. As resources are stretched thin across organizations, these tools allow scientists to focus their time on value-added work. AI can impart advanced knowledge to junior scientists so they can perform tasks that only the most senior team members could do only a few years ago. This shift has the potential to lower the cost of developing safe, effective medicines and increase profitability of biotech companies.

References:

- Hillesund, T., Scholarly reading (and writing) and the power of impact factors: a study of distributed cognition and intellectual habits, Frontiers in Psychology, Vol 14, 2023.

- Menary, R., Writing as Thinking, J. Lang. Sci., Vol 29, No. 5, 2007.

About The Author:

Simon Letarte, Ph.D., is an analytical chemist and principal consultant at Letarte Scientific Consulting LLC. He has more than 15 years of experience characterizing proteins and peptides using proteomics and mass spectrometry. Previously, he was a director of structural characterization at Gilead Sciences. Before that, he worked as a principal scientist at Merck and, before that, Pfizer. Other previous roles include senior research scientist roles at Dendreon and the Institute for Systems Biology. Connect with him on LinkedIn.

Simon Letarte, Ph.D., is an analytical chemist and principal consultant at Letarte Scientific Consulting LLC. He has more than 15 years of experience characterizing proteins and peptides using proteomics and mass spectrometry. Previously, he was a director of structural characterization at Gilead Sciences. Before that, he worked as a principal scientist at Merck and, before that, Pfizer. Other previous roles include senior research scientist roles at Dendreon and the Institute for Systems Biology. Connect with him on LinkedIn.

-

Business2 weeks ago

Business2 weeks agoThe Guardian view on Trump and the Fed: independence is no substitute for accountability | Editorial

-

Tools & Platforms1 month ago

Building Trust in Military AI Starts with Opening the Black Box – War on the Rocks

-

Ethics & Policy2 months ago

Ethics & Policy2 months agoSDAIA Supports Saudi Arabia’s Leadership in Shaping Global AI Ethics, Policy, and Research – وكالة الأنباء السعودية

-

Events & Conferences4 months ago

Events & Conferences4 months agoJourney to 1000 models: Scaling Instagram’s recommendation system

-

Jobs & Careers2 months ago

Jobs & Careers2 months agoMumbai-based Perplexity Alternative Has 60k+ Users Without Funding

-

Podcasts & Talks2 months ago

Podcasts & Talks2 months agoHappy 4th of July! 🎆 Made with Veo 3 in Gemini

-

Education2 months ago

Education2 months agoMacron says UK and France have duty to tackle illegal migration ‘with humanity, solidarity and firmness’ – UK politics live | Politics

-

Education2 months ago

Education2 months agoVEX Robotics launches AI-powered classroom robotics system

-

Funding & Business2 months ago

Funding & Business2 months agoKayak and Expedia race to build AI travel agents that turn social posts into itineraries

-

Podcasts & Talks2 months ago

Podcasts & Talks2 months agoOpenAI 🤝 @teamganassi