Ethics & Policy

Unmasking secret cyborgs, California SB 1047, LLM creativity, toxicity evaluation ++

Welcome to another edition of the Montreal AI Ethics Institute’s weekly AI Ethics Brief that will help you keep up with the fast-changing world of AI Ethics! Every week, we summarize the best of AI Ethics research and reporting, along with some commentary. More about us at montrealethics.ai/about.

💖 To keep our content free for everyone, we ask those who can to support us: become a paying subscriber for the price of a couple of ☕.

If you’d prefer to make a one-time donation, visit our donation page. We use this Wikipedia-style tipping model to support our mission of Democratizing AI Ethics Literacy and to ensure we can continue to serve our community.

- On the Challenges of Using Black-Box APIs for Toxicity Evaluation in Research
- On the Creativity of Large Language Models
- Supporting Human-LLM collaboration in Auditing LLMs with LLMs
- AI Governance Appears on Corporate Radar
- TikTok to automatically label AI-generated user content in global first
- Creating sexually explicit deepfake images to be made offence in UK

Bill SB 1047 in California aims to establish safety standards for the development of advanced AI models while authorizing a regulatory body to enforce compliance. However, there is ongoing debate about whether the bill strikes the right balance between mitigating AI risks and enabling innovation.

In brief, the bill requires the following from AI ecosystem actors:

However, influential people in the AI ecosystem have voiced strong concerns that the bill might stifle innovation, including by driving companies to relocate their AI development to more hospitable jurisdictions. Prominent figures like Andrew Ng argue that the bill stokes unnecessary fear and hinders AI innovation. Critics say it burdens smaller AI companies with compliance costs, targets hypothetical risks, and could hamper open-source models, which have driven a tremendous amount of capability advances in recent months, such as those enabled by Llama 3.

It will be interesting to see how the bill evolves given its current state, the arguments raised by industry actors, and the profiles of the co-sponsors supporting it. Ultimately, rules like these need to strike an appropriate balance between the ability to innovate and the protection of end-user interests. Swinging the pendulum too far in either direction is dangerous!

Did we miss anything?

Every week, we’ll feature a question from the MAIEI community and share our thinking here. We invite you to ask yours, and we’ll answer it in the upcoming editions.

Here are the results from the previous edition for this segment:

We were a little sad to see that a larger share of readers haven’t had a chance to engage in futures thinking at their organization. Hopefully, last week’s guide, “Think further into the future: An approach to better RAI programs,” gives you a starting point to experiment with this approach through its suggested actions: (1) establishing a foresight team, (2) developing long-term metrics, (3) conducting regular futures scenario workshops, and (4) building flexible policies.

This week, reader Kristian B. asks us about being appointed as the first person in their organization to implement (ambitiously) sweeping changes to operationalize Responsible AI. This comes with a warning that they need to be cautious as they make those changes, so they ask us how to achieve that balance. (And yes, balance seems to be the topic du jour this week!)

We believe that the right approach is one that makes large changes in small, safe steps, as we write in this week’s exploration of the subject:

The “large changes in small safe steps” approach leads to more successful program implementation by effectively mitigating risks, enhancing stakeholder engagement and trust, and ensuring sustainable and scalable adoption of new practices. This strategic method balances innovation with caution, fostering a resilient and adaptive framework for Responsible AI programs.

What were the lessons you learned from the deployment of Responsible AI at your organization? Please let us know! Share your thoughts with the MAIEI community:

Unmasking Secret Cyborgs and Illuminating Their AI Shadows

To address the challenges of “shadow AI” adoption and “secret cyborgs” in organizations, policymakers and governance professionals should focus on creating frameworks that require transparency and accountability in AI usage.

To delve deeper, read the full article here.

Raging debates like the one around California SB 1047, and how they weigh safety against the speed of innovation, pose crucial questions for the Responsible AI community: how can we most effectively support such legislative efforts so that their outcomes strike that balance well? What mediation techniques have you found work well for such a process?

We’d love to hear from you and share your thoughts with everyone in the next edition:

Continuing to build on the idea of evaluating difficult tradeoffs, such as the ones presented by California SB 1047 that we discuss this week, let’s look at how we can determine tradeoffs among safety, ethics, and inclusivity in AI systems.

Design decisions for AI systems involve value judgements and optimization choices. Some relate to technical considerations like latency and accuracy; others relate to business metrics. Each requires careful consideration, because each has consequences for the system’s final outcomes.

To be clear, not everything has to translate into a tradeoff. There are often smart reformulations of a problem so that you can meet the needs of your users and customers while also satisfying internal business considerations.

Take, for example, an early LinkedIn feature that promoted job postings by asking connections to recommend specific postings to a target user based on how well suited they thought those postings were for that user. It gave the recommending user a sense of purpose and goodwill, since they only shared relevant jobs with their connections, while helping LinkedIn provide more relevant recommendations to users. This was a win-win compared to continuously probing users for more and more data in order to deliver more targeted job recommendations.

This article builds on “The importance of goal setting in product development to achieve Responsible AI,” adding another dimension of consideration for building AI systems that are ethical, safe, and inclusive.

You can either click the “Leave a comment” button below or send us an email! We’ll feature the best response next week in this section.

On the Challenges of Using Black-Box APIs for Toxicity Evaluation in Research

We show how silent changes in a toxicity scoring API have undermined fair comparisons of toxicity metrics between language models over time. This affected research reproducibility and living benchmarks of model risk such as HELM. We urge caution in making apples-to-apples comparisons between toxicity studies and lay out recommendations for a more structured approach to evaluating toxicity over time.

To delve deeper, read the full summary here.
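To make the comparability problem concrete, here is a minimal sketch of one way to act on the call for caution; it is not the authors’ method and does not use any real scoring API. It assumes each stored score carries the date the black-box scorer was queried (a stand-in for a model version the API does not expose), and it only compares two language models on scores obtained on the same date. The names `ToxicityRecord`, `mean_score`, and `compare` are hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class ToxicityRecord:
    """One toxicity score for one model generation (hypothetical schema)."""
    model_name: str   # language model that produced the text
    text: str         # generation sent to the scorer, kept so it can be re-scored
    score: float      # toxicity score returned by the black-box scorer
    scored_on: date   # day the scorer was queried; a proxy for its unseen version


def mean_score(records, model_name, scored_on):
    """Average score for one model, using only scores from one scoring date."""
    scores = [
        r.score
        for r in records
        if r.model_name == model_name and r.scored_on == scored_on
    ]
    if not scores:
        raise ValueError(f"no scores for {model_name} on {scored_on}")
    return sum(scores) / len(scores)


def compare(records, model_a, model_b):
    """Return mean toxicity of model_a minus that of model_b.

    Only scores obtained on the same date are compared, so a silent change
    to the scorer between dates cannot masquerade as a difference between
    the models. Raises ValueError if the two models share no scoring date.
    """
    dates_a = {r.scored_on for r in records if r.model_name == model_a}
    dates_b = {r.scored_on for r in records if r.model_name == model_b}
    shared = dates_a & dates_b
    if not shared:
        raise ValueError("no shared scoring date: re-score both models together")
    latest = max(shared)  # prefer the most recent common scoring date
    return mean_score(records, model_a, latest) - mean_score(records, model_b, latest)
```

If model A was scored only in 2022 and model B only in 2023, `compare` refuses rather than silently mixing the two scorer states; re-scoring A’s stored generations alongside B’s makes the comparison meaningful again. Exact date matching is a simplification; a real study would more likely group scores into explicitly recorded scoring runs.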

On the Creativity of Large Language Models

Large Language Models (LLMs) like ChatGPT are revolutionizing several areas of AI, including those related to creative writing. This paper offers a critical discussion of LLMs in the light of human theories of creativity, including the impact these technologies might have on society.

To delve deeper, read the full summary here.

Supporting Human-LLM collaboration in Auditing LLMs with LLMs

While large language models (LLMs) are increasingly deployed in sociotechnical systems, in practice they propagate social biases and behave irresponsibly, underscoring the need for rigorous evaluations. Existing tools for finding failures of LLMs leverage humans, LLMs, or both; however, they fail to bring the human into the loop effectively, missing out on human expertise and skills that complement those of LLMs. In this work, we build upon an auditing tool to support humans in steering the failure-finding process while leveraging the generative skill and efficiency of LLMs.

To delve deeper, read the full summary here.

AI Governance Appears on Corporate Radar

- What happened: The rapid evolution of AI is reshaping business strategies, prompting companies to integrate AI for efficiency, competitive advantages, and stakeholder engagement. As AI usage surges, so do concerns about its risks, prompting the White House to issue an executive order on AI regulation. Reflecting this, companies are adapting by recruiting directors with AI expertise and establishing board-level oversight.

- Why it matters: AI’s potential benefits come with significant risks, urging companies to adopt proactive measures for oversight. While only a fraction of S&P 500 companies explicitly disclose AI oversight, sectors like Information Technology lead in integrating AI expertise on boards, with ‘30% of S&P 500 IT companies having at least one director with AI-related expertise.’ This trend indicates a growing recognition of AI’s impact, especially in industries where it’s most influential. Investors are beginning to demand greater transparency regarding AI’s use and impact, signaling a shift towards increased accountability and governance in AI integration.

- Between the lines: As AI becomes more central to business operations, investor expectations for transparent and responsible AI governance are mounting. The emergence of shareholder proposals focusing on AI underscores this shift, signaling a potential future where AI oversight becomes a standard requirement. While regulatory changes and investor policies may evolve in response to AI’s growing influence, companies are urged to establish robust oversight mechanisms to navigate AI-related risks and opportunities effectively.

TikTok to automatically label AI-generated user content in global first

- What happened: TikTok is taking proactive steps to address concerns surrounding the proliferation of AI-generated content, particularly deepfakes, by automatically labeling such content on its platform. This move comes amid growing worries about the spread of disinformation facilitated by advances in generative AI. TikTok’s announcement follows existing requirements by online groups, including Meta, for users to disclose AI-generated media.

- Why it matters: TikTok’s decision to label AI-generated content is a significant response to the rising prevalence of harmful content generated through AI. By providing transparency, TikTok aims to preserve the authenticity of its platform and empower users to distinguish between human-created and AI-generated content. Other major social media platforms are also grappling with integrating generative AI while combatting issues like spam and deepfakes, especially in the context of upcoming elections. TikTok’s move underscores the broader industry efforts to address these challenges and foster a more trustworthy online environment.

- Between the lines: While tech companies are exploring ways to embed AI technology into their platforms, concerns persist about the potential misuse of open-source AI tools by bad actors to create undetectable deepfakes. Meta has also announced plans to label AI-generated content, joining TikTok in this initiative. Experts suggest that transparency and authentication tools like those developed by Adobe could be crucial in mitigating the risks associated with AI-generated content, marking an initial step in addressing this complex issue.

Creating sexually explicit deepfake images to be made offence in UK

- What happened: The Ministry of Justice has announced plans to criminalize the creation of sexually explicit “deepfake” images, regardless of whether they are shared. This amendment to the criminal justice bill aims to address concerns regarding the use of deepfake technology to produce intimate images without consent. The legislation aligns with the Online Safety Act, which already prohibits the sharing of such content.

- Why it matters: The proposed offence marks a significant step in safeguarding individuals’ privacy and dignity in the digital age. Laura Farris, the minister for victims and safeguarding, emphasized the need to combat the dehumanizing and harmful nature of deepfake sexual images, particularly their potential to cause catastrophic consequences when shared widely. Yvette Cooper, the shadow home secretary, underscored the importance of equipping law enforcement with the tools needed to enforce these laws effectively, thereby preventing perpetrators from exploiting individuals with impunity.

- Between the lines: Deborah Joseph, the editor-in-chief of Glamour UK, expressed support for the legislative amendment, citing a Glamour survey highlighting widespread concerns among readers about the safety implications of deepfake technology. Despite this progress, Joseph emphasized the ongoing challenges in ensuring women’s safety and called for continued efforts to combat this disturbing activity effectively.

What is hallucination in LLMs?

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

Bridging the civilian-military divide in responsible AI principles and practices

Advances in AI research have brought increasingly sophisticated capabilities to AI systems and heightened the societal consequences of their use. Researchers and industry professionals have responded by contemplating responsible principles and practices for AI system design. At the same time, defense institutions are contemplating ethical guidelines and requirements for the development and use of AI for warfare. However, varying ethical and procedural approaches to technological development, research emphasis on offensive uses of AI, and lack of appropriate venues for multistakeholder dialogue have led to differing operationalization of responsible AI principles and practices among civilian and defense entities. We argue that the disconnect between civilian and defense responsible development and use practices leads to underutilization of responsible AI research and hinders the implementation of responsible AI principles in both communities. We propose a research roadmap and recommendations for dialogue to increase exchange of responsible AI development and use practices for AI systems between civilian and defense communities. We argue that generating more opportunities for exchange will stimulate global progress in the implementation of responsible AI principles.

To delve deeper, read more details here.

A Systematic Review of Ethical Concerns with Voice Assistants

We’re increasingly becoming aware of ethical issues around the use of voice assistants, such as the privacy implications of having devices that are always listening and the ways that these devices are integrated into existing social structures in the home. This has created a burgeoning area of research across various fields, including computer science, social science, and psychology, which we mapped through a systematic literature review of 117 research articles. In addition to analysis of specific areas of concern, we also explored how different research methods are used and who gets to participate in research on voice assistants.

To delve deeper, read the full article here.

We’d love to hear from you, our readers, on what recent research papers caught your attention. We’re looking for ones that have been published in journals or as a part of conference proceedings.


Tech leaders in financial services say responsible AI is necessary to unlock GenAI value

Good morning. CFOs are increasingly responsible for aligning AI investments with business goals, measuring ROI, and ensuring ethical adoption. But is responsible AI an overlooked value creator?

Scott Zoldi, chief analytics officer at FICO and author of more than 35 patents in responsible AI methods, found that many customers he’s spoken to lacked a clear concept of responsible AI—aligning AI ethically with an organizational purpose—prompting an in-depth look at how tech leaders are managing it.

According to a new FICO report released this morning, responsible AI standards are considered essential innovation enablers by senior technology and AI leaders at financial services firms. More than half (56%) named responsible AI a leading contributor to ROI, compared to 40% who credited generative AI for bottom-line improvements.

The report, based on a global survey of 254 financial services technology leaders, explores the dynamic between chief AI/analytics officers—who focus on AI strategy, governance, and ethics—and CTOs/CIOs, who manage core technology operations and alignment with company objectives.

Zoldi explained that, while generative AI is valuable, tech leaders see the most critical problems and ROI gains arising from responsible AI and true synchronization of AI investments with business strategy—a gap that still exists in most firms. Only 5% of respondents reported strong alignment between AI initiatives and business goals, leaving 95% lagging in this area, according to the findings.

In addition, 72% of chief AI officers and chief analytics officers cite insufficient collaboration between business and IT as a major barrier to company alignment. Departments often work from different metrics, assumptions, and roadmaps.

This difficulty is compounded by a widespread lack of AI literacy. More than 65% said weak AI literacy inhibits scaling. Meanwhile, CIOs and CTOs report that only 12% of organizations have fully integrated AI operational standards.

In the FICO report, State Street’s Barbara Widholm notes, “Tech-led solutions lack strategic nuance, while AI-led initiatives can miss infrastructure constraints. Cross-functional alignment is critical.”

Chief AI officers are challenged to keep up with the rapid evolution of AI. Mastercard’s chief AI and data officer, Greg Ulrich, recently told Fortune that last year was “early innings,” focused on education and experimentation, but that the role is shifting from architect to operator: “We’ve moved from exploration to execution.”

Across the board, FICO found that about 75% of tech leaders surveyed believe stronger collaboration between business and IT leaders, together with a shared AI platform, could drive ROI gains of 50% or more. Zoldi highlighted the problem of fragmentation: “A bank in Australia I visited had 23 different AI platforms.”

When asked about innovation enablers, 83% of respondents rated cross-departmental collaboration as “very important” or “critical”—signaling that alignment is now foundational.

The report also stresses the importance of human-AI interaction: “Mature organizations will find the right marriage between the AI and the human,” Zoldi said. That involves human understanding of where to “best place AI in that loop,” he said.

Sheryl Estrada

sheryl.estrada@fortune.com

Leaderboard

Brian Robins was appointed CFO of Snowflake (NYSE: SNOW), an AI Data Cloud company, effective Sept. 22. Snowflake also announced that Mike Scarpelli is retiring as CFO. Scarpelli will stay a Snowflake employee for a transition period. Robins has served as CFO of GitLab Inc., a technology company, since October 2020. Before that, he was CFO of Sisense, Cylance, AlienVault, and Verisign.

Big Deal

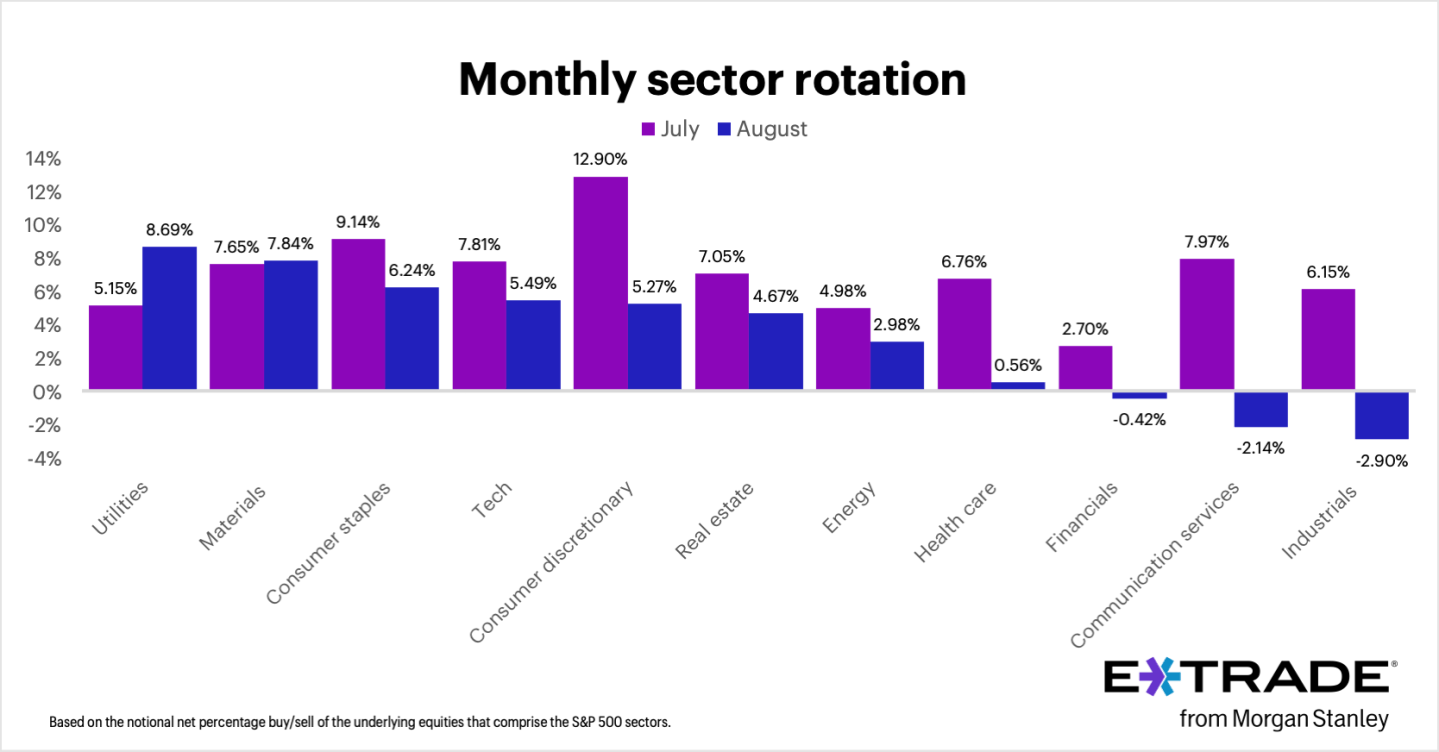

August marked the S&P 500’s fourth consecutive month of gains, with E*TRADE clients net buyers in eight out of 11 sectors, Chris Larkin, managing director of trading and investing, said in a statement. “But some of that buying was contrarian and possibly defensive,” Larkin noted. “Clients rotated most into utilities, a defensive sector that was actually the S&P 500’s weakest performer last month. Another traditionally defensive sector, consumer staples, received the third-most net buying.” By contrast, clients were net sellers in three sectors—industrials, communication services, and financials—which have been among the S&P 500’s stronger performers so far this year.

“Given September’s history as the weakest month of the year for stocks, it’s possible that some investors booked profits from recent winners while increasing positions in defensive areas of their portfolios,” Larkin added.

Going deeper

“Warren Buffett’s $57 billion face-plant: Kraft Heinz breaks up a decade after his megamerger soured” is a Fortune report by Eva Roytburg.

From the report: “Kraft Heinz, the packaged-food giant created in 2015 by Warren Buffett and Brazilian private equity firm 3G Capital, is officially breaking up. The Tuesday announcement ends one of Buffett’s highest-profile bets—and one of his most painful—as the merger that once promised efficiency and dominance instead wiped out roughly $57 billion, or 60%, in market value. Shares slid 7% after the announcement, and Berkshire Hathaway still owns a 27.5% stake.” You can read the complete report here.

Overheard

“Effective change management is the linchpin of enterprise-wide AI implementation, yet it’s often underestimated. I learned this first-hand in my early days as CEO at Sanofi.”

—Paul Hudson, CEO of global healthcare company Sanofi since September 2019, writes in a Fortune opinion piece. Previously, Hudson was CEO of Novartis Pharmaceuticals from 2016 to 2019.


Humans at Core: Navigating AI Ethics and Leadership

Hyderabad recently hosted a vital dialogue on ‘Human at Core: Conversations on AI, Ethics and Future,’ co-organized by IILM University and The Dr Pritam Singh Foundation at Tech Mahindra, Cyberabad. Gathering prominent figures from academia, government, and industry, the event delved into the ethical imperatives of AI and human-centric leadership in a tech-driven future.

The event commenced with Sri Gaddam Prasad Kumar advocating for technology as a servant to humanity, followed by a keynote from Sri Padmanabhaiah Kantipudi, who addressed the friction between rapid technological growth and ethical governance. Two pivotal panels explored the crossroads of AI’s progress versus principle and leadership’s critical role in AI development.

Key insights emerged around empathy and foresight in AI’s evolution, as leaders like Manoj Jha and Rajesh Dhuddu emphasized. Dr. Ravi Kumar Jain highlighted the collective responsibility to steer innovation wisely, aligning technological advancement with human values. The event reinforced the importance of cross-sector collaboration to ensure technology enhances equity and dignity globally.


IILM University and The Dr Pritam Singh Foundation Host Round Table Conference on “Human at Core” Exploring AI, Ethics, and the Future

Hyderabad (Telangana) [India], September 4: IILM University, in collaboration with The Dr Pritam Singh Foundation, hosted a high-level round table discussion on the theme “Human at Core: Conversations on AI, Ethics and Future” at Tech Mahindra, Cyberabad, on 29th August 2025. The event brought together distinguished leaders from academia, government, and industry to engage in a timely and thought-provoking dialogue on the ethical imperatives of artificial intelligence and the crucial role of human-centric leadership in shaping a responsible technological future. The proceedings began with an opening address by Sri Gaddam Prasad Kumar, Speaker, Telangana Legislative Assembly, who emphasised the need to ensure that technology remains a tool in the service of humanity. This was followed by a keynote address delivered by Sri Padmanabhaiah Kantipudi, IAS (Retd.), Chairman of the Administrative Staff College of India (ASCI), who highlighted the growing tension between technological acceleration and ethical oversight.

The event featured two significant panel discussions, each addressing the complex intersections between technology, ethics, and leadership. The first panel, moderated by Mamata Vegunta, Executive Director and Head of HR at DBS Tech India, examined the question, “AI’s Crossroads: The Choice Between Progress and Principle.” The discussion reflected on the critical junctures at which leaders must make choices that balance innovation with responsibility. Panellists, including Deepak Gowda of the Union Learning Academy, Dr. Deepak Kumar of IDRBT, Srini Vudumula of Novelis Consulting, and Gaurav Maheshwari of Signode India Limited, shared their insights on the pressing need for robust ethical frameworks that evolve alongside AI.

The second panel, moderated by Vinay Agrawal, Global Head of Business HR at Tech Mahindra, focused on the theme “Human-Centred AI: Why Leadership Matters More Than Ever.” This session brought to light the growing expectation of leaders to act not just as enablers of technological progress, but as custodians of its impact. Panellists Manoj Jha from Makeen Energy, Dr Anadi Pande from Mahindra University, Rajesh Dhuddu of PwC, and Kiranmai Pendyala, investor and former UWH Chairperson, collectively underlined the importance of empathy, accountability, and foresight in guiding AI development.

Speaking at the event, Dr Ravi Kumar Jain, Director, School of Management – IILM University Gurugram, remarked, “We are at a defining moment in human history, where the question is not merely about how fast we can innovate, but how wisely we choose to do so. At IILM, we believe in nurturing leaders who are not only competent but also conscious of their responsibilities to society.” His sentiments were echoed by Prof Harivansh Chaturvedi, Director General at IILM Lodhi Road, who affirmed the university’s continued commitment to promoting responsible leadership through dialogue, collaboration, and critical inquiry. Across both panels, there was a shared recognition that ethical leadership must keep pace with the rapid transformations driven by AI, and that collaborative efforts across sectors will be essential to ensure that innovation serves the broader goals of equity, dignity, and humanity.

The discussions concluded with a renewed call to action for academic institutions, industry leaders, and policymakers to work together in shaping a future where technology empowers without eroding core human values. In doing so, the event reaffirmed the central message behind its theme that in an increasingly digital world, it is important now more than ever to keep it human at the core.

(Disclaimer: The above press release comes to you under an arrangement with PNN and PTI takes no editorial responsibility for the same.). PTI PWR

(This content is sourced from a syndicated feed and is published as received. The Tribune assumes no responsibility or liability for its accuracy, completeness, or content.)
