Ethics & Policy

Misuse of AI in War, the Commoditization of Intelligence, and more.

Welcome to The AI Ethics Brief, a bi-weekly publication by the Montreal AI Ethics Institute. Stay informed on the evolving world of AI ethics with key research, insightful reporting, and thoughtful commentary. Learn more at montrealethics.ai/about.

Follow MAIEI on Bluesky and LinkedIn.

- Beyond the Façade: Challenging and Evaluating the Meaning of Participation in AI Governance – Tech Policy Press

- Elon Musk staffer created a DOGE AI assistant for making government ‘less dumb’ – TechCrunch

Fireside Chat: Funding Landscape and the Future of the Digital Rights Ecosystem (RightsCon 2025, Taipei)

In an op-ed for MAIEI, Seher Shafiq of the Mozilla Foundation reflects on key discussions from RightsCon 2025 in Taipei, highlighting three urgent challenges in the digital rights space:

- A deepening funding crisis threatens the ability of advocacy organizations to respond effectively to emerging digital threats. In 2023, foreign aid totaled $233 billion, but cuts this year erased $78 billion, nearly one-third of the sector’s funding, overnight, leaving a crisis still unfolding.

- The weaponization of AI, particularly in war, conflict, and genocide, raises new concerns about the ethical and human rights implications of automated decision-making.

- The lack of Global Majority representation in shaping AI governance frameworks highlights the need for more inclusive perspectives in decision-making. These voices matter now more than ever.

These challenges are interconnected. Funding shortfalls limit advocacy efforts at a time when AI systems are being deployed in high-stakes environments with little oversight. Meanwhile, those most affected by AI-driven harms—particularly in the Global Majority (also known as the Global South)—are often excluded from shaping solutions. Addressing these gaps requires not only new funding models and stronger AI governance frameworks, but also a fundamental shift in whose knowledge and experiences guide global AI policy.

As AI’s impact on human rights continues to grow, the conversation must evolve beyond technical fixes and policy frameworks to focus on community resilience, diverse coalitions, and a commitment to tech development that centers dignity and justice for all. The urgency felt at RightsCon 2025 wasn’t just about individual crises—it was about rethinking how the digital rights ecosystem itself must adapt to meet this moment.

📖 Read Seher’s op-ed on the MAIEI website.

Since our last post on AI agents, nearly every Big Tech company has rolled out a new agentic research feature, and they’re all practically named the same. OpenAI released Deep Research within ChatGPT, Perplexity did the same with its own Deep Research (powered by DeepSeek’s R1 model, which we analyzed here), and Google launched Deep Research in its Gemini model back in December. X (formerly Twitter) took a slightly different path, calling its tool DeepSearch.

Beyond similarities in branding, the aim of these tools remains the same: to synthesize complex research in minutes that would otherwise take a human multiple hours to do. The basic principle involves the user prompting the model with a research direction, refining the research plan presented by the model, and then leaving it to scour the internet for insights and present its findings.

Benchmarks used to evaluate these models vary. They include Humanity’s Last Exam (HLE), a set of nearly 2,700 interdisciplinary questions designed to test LLMs on a broad range of expert-level academic subjects, and the General AI Assistants (GAIA) benchmark, which evaluates AI assistants on real-world problem-solving tasks.

While the ability to save many hours of research is promising, it should be taken with a pinch of salt. These models still hallucinate, making up references and struggling to distinguish authoritative sources from misinformation. Furthermore, given the longer processing time (e.g., ChatGPT can spend up to 30 minutes compiling a research report), the compute required will increase, driving up the models’ environmental costs. As the agentic AI race heats up (both metaphorically and literally), the competition isn’t just about efficiency; it’s about whether these tools can truly justify their costs and risks.

Did we miss anything? Let us know in the comments below.

A recent conversation over coffee sparked this question: as AI agents and AI-generated content proliferate across the web at an unprecedented rate, what remains uniquely human? If AI can outperform us in IQ-based tasks—summarizing papers, answering complex questions, and writing compelling text—where does our distinct value lie?

While AI systems can generate articulate and even persuasive content, they lack depth of understanding. They can explain, but they cannot truly comprehend. They can mimic empathy, but they cannot feel. This distinction matters, particularly in fields like mental health, education, and creative work, where emotional intelligence (EQ) is as crucial as cognitive intelligence (IQ).

An alarming example is AI’s role in therapy and emotional support. When LLMs are trained to optimize for the probability of the “next best word,” they lack the contextual judgment required to prioritize ethical considerations. Recent instances where AI chatbots have encouraged self-harm illustrate the dangers of mistaking superficial empathy (articulating empathetic phrases) for genuine empathy (authentic care and understanding).

This raises a broader question: What is the value of human interpretation in an age of AI-generated content? As AI-generated knowledge becomes commodified, our human capabilities (analytical depth, emotional intelligence, and ethical reasoning) grow more valuable. Without thoughtful boundaries on AI usage, we risk a future where intelligence is cheap, but wisdom is rare.

At MAIEI, we recognize this challenge. Our editorial stance on AI tools in submissions requires explicit disclosure of any AI tool usage in developing content, including research summaries, op-eds, and original essays. We actively seek feedback from contributors, readers, and partners as we refine these standards. Our goal remains balancing technological innovation with the integrity of human intellectual contribution that advances AI ethics.

As part of this broader conversation, redefining literacy in an AI-driven world is becoming increasingly important. Kate Arthur, an advisor to MAIEI, explores this evolving concept in her newly published book, Am I Literate? Redefining Literacy in the Age of Artificial Intelligence. Reflecting on her journey as an educator and advocate for digital literacy, she examines how AI is reshaping our understanding of knowledge, communication, and critical thinking. Her insights offer a timely perspective on what it truly means to be literate in an era where AI-generated content is ubiquitous.

To dive deeper, read more details on “Am I Literate?” here.

Please share your thoughts with the MAIEI community:

In each edition, we highlight a question from the MAIEI community and share our insights. Have a question on AI ethics? Send it our way, and we may feature it in an upcoming edition!

We’re curious to get a sense of where readers stand on AI-generated content, especially as it becomes increasingly prevalent across industries. From research reports to creative writing, AI is producing content at scale.

But does knowing something was AI-generated affect how much we trust or value it? Is the quality of content all that matters, or does authorship and authenticity still hold significance?

💡 Vote and share your thoughts!

- It’s all about substance – If the content is accurate and useful, I don’t care who (or what) created it.

- Human touch matters – I trust and value human-crafted content more, even if AI can generate similar results.

- Context is key – Context matters (e.g., news vs. creative writing vs. research).

- AI content should always be disclosed – Transparency matters, and AI-generated work should always be labeled.

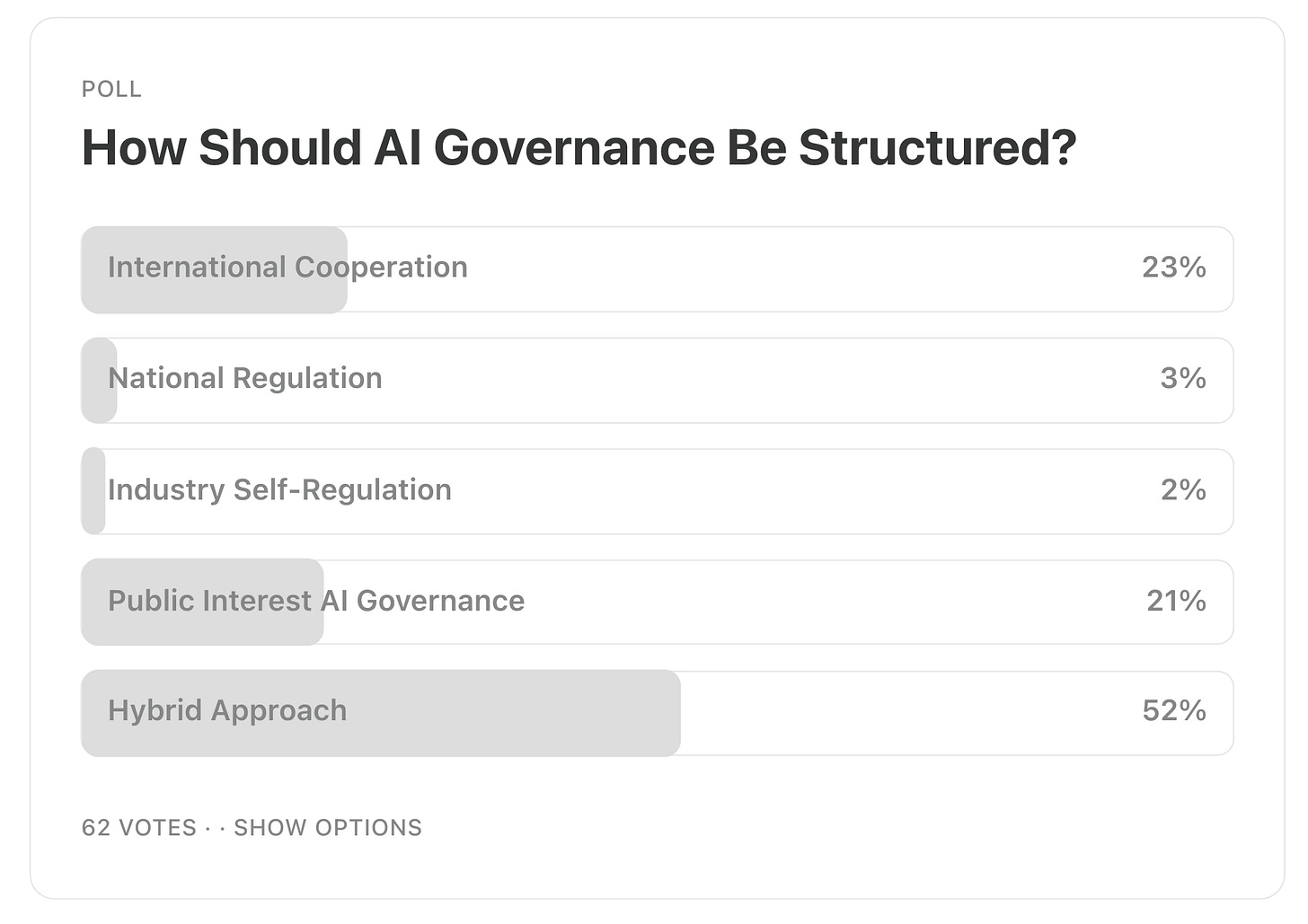

Our latest informal poll (n=62) suggests that a hybrid approach is the preferred model for AI governance, with 52% of respondents favoring a mix of government regulation, industry innovation, and public oversight. This reflects a broad consensus that AI governance should balance accountability and progress, integrating multiple stakeholders to ensure ethical and effective AI deployment.

International cooperation emerged as the second most favored option, securing 23% of the vote. This highlights the recognition that AI’s global impact requires cross-border regulatory frameworks to address challenges like bias, misinformation, and ethical concerns at an international level.

Public interest AI governance, which emphasizes civil society, academia, and advocacy groups shaping oversight, received 21% support. This suggests that many believe governance should be driven by broader societal values rather than just governments or corporations.

By contrast, national regulation (3%) and industry self-regulation (2%) received minimal support, indicating that respondents view government-only oversight or corporate-led governance as insufficient to address the complexities of AI governance effectively.

- A hybrid approach dominates as the preferred governance model, emphasizing the need for collaborative oversight that includes governments, industry, civil society, academia, and other diverse stakeholders to ensure inclusive and effective AI governance.

- International cooperation is seen as crucial, reinforcing the need for global AI standards and regulatory consistency.

- Public interest AI governance holds strong support, reflecting a desire for civil society and academic institutions to play a central role in shaping AI policy.

- Minimal support for national or industry self-regulation suggests that most respondents favor multi-stakeholder approaches over isolated governance models.

As AI governance debates continue, the challenge will be in defining effective structures that balance innovation, accountability, and global coordination to ensure AI serves the public good.


Teaching Responsible AI in a Time of Hype

As AI literacy becomes an urgent priority, organizations across sectors are rushing to provide training. But what should these courses truly accomplish? In this essay, Thomas Linder reflects on redesigning a responsible AI course at Concordia University in Montreal, originally developed by the late Abhishek Gupta before the generative AI boom. He highlights three key goals: decentering generative AI, unpacking AI concepts, and anchoring responsible AI in stakeholder perspectives. Linder concludes with recommendations to ensure AI education remains focused on ethical outcomes.

To dive deeper, read the full essay here.

Documenting the Impacts of Foundation Models – Partnership on AI

Our Director of Partnerships, Connor Wright, participated in Partnership on AI’s Post-Deployment Governance Practices Working Group, providing insights into how best to document the impacts of foundation models. The document presents the pain points involved in the process of documenting foundation model effects and offers recommendations, examples, and best practices for overcoming these challenges.

To dive deeper, download and read the full report here.

Help us keep The AI Ethics Brief free and accessible for everyone by becoming a paid subscriber on Substack for the price of a coffee or making a one-time or recurring donation at montrealethics.ai/donate

Your support sustains our mission of Democratizing AI Ethics Literacy, honours Abhishek Gupta’s legacy, and ensures we can continue serving our community.

For corporate partnerships or larger donations, please contact us at support@montrealethics.ai

Towards a Feminist Metaethics of AI

Despite numerous guidelines and codes of conduct about the ethical development and deployment of AI, neither academia nor practice has undertaken comparable efforts to explicitly and systematically evaluate the field of AI ethics itself. Such an evaluation would benefit from a feminist metaethics, which asks not only what ethics is but also what it should be like.

To dive deeper, read the full summary here.

Collectionless Artificial Intelligence

Learning from huge data collections introduces risks related to data centralization, privacy, energy efficiency, limited customizability, and control. This paper focuses on the perspective in which artificial agents are progressively developed over time by online learning from potentially lifelong streams of sensory data. This is achieved without storing the sensory information and without building datasets for offline learning purposes while pushing towards interactions with the environment, including humans and other artificial agents.

To dive deeper, read the full summary here.

Beyond the Façade: Challenging and Evaluating the Meaning of Participation in AI Governance – Tech Policy Press

- What happened: In this reflection on the AI Action Summit in Paris in February, the author homes in on the performative nature of the event.

- Why it matters: The author notes that enforcing AI safety through mechanisms such as AI audits will be ineffective if such mechanisms lack the power to decommission the AI systems found to be at fault. Furthermore, those who air their grievances at these summits are not given the tools to help resolve their situation, meaning they are subjected to corporate platitudes.

- Between the lines: Effective governance must include the power to act upon its findings. For AI harms to be truly mitigated, those who are harmed ought to be equipped with the appropriate tools and know-how to help combat any injustices they experience.

To dive deeper, read the full article here.

Elon Musk staffer created a DOGE AI assistant for making government ‘less dumb’ – TechCrunch

- What happened: A senior Elon Musk staffer working at both the White House and SpaceX has reportedly developed a Department of Government Efficiency (DOGE) AI assistant to help the department improve its efficiency. The chatbot was trained on five guiding principles for DOGE, including making government “less dumb” and optimizing business processes, amongst others.

- Why it matters: This is another example of the extreme cost-cutting measures being undertaken by DOGE in the US government, measures that have already raised privacy and legal concerns.

- Between the lines: Given the propensity of large language models (LLMs) to hallucinate, the consequences of hallucination grow far more serious when the technology is applied to government, a notoriously sensitive area. For a truly transformative government bureaucracy experience, LLMs need to be far more robust than they currently are.

To dive deeper, read the full article here.

👇 Learn more about why it matters in AI Ethics via our Living Dictionary.

The Aider LLM Leaderboards provide a benchmark for evaluating large language models (LLMs) in code editing and programming tasks. By assessing models across multiple languages—including Python, C++, Java, and Rust—the leaderboard serves as a valuable resource for understanding how different AI models perform in real-world coding applications. Whether you’re a developer, researcher, or policymaker, this dataset offers key insights into LLM strengths, weaknesses, and cost-performance trade-offs in software engineering.

To dive deeper, read more details here.

Harnessing Collective Intelligence Under a Lack of Cultural Consensus

Harnessing collective intelligence (CI) to solve complex problems benefits from the ability to detect and characterize heterogeneity in consensus beliefs. This is particularly true in domains where a consensus amongst respondents defines an intersubjective “ground truth,” leading to a multiplicity of ground truths when subsets of respondents sustain mutually incompatible consensuses. In this paper, we extend Cultural Consensus Theory, a classic mathematical framework to detect divergent consensus beliefs, to allow culturally held beliefs to take the form of a deep latent construct: a fine-tuned deep neural network that maps features of a concept or entity to the consensus response among a subset of respondents via stick-breaking construction.

To dive deeper, read the full summary here.

We’d love to hear from you, our readers, about any recent research papers, articles, or newsworthy developments that have captured your attention. Please share your suggestions to help shape future discussions!


Santa Fe Ethics Board Discusses Revisions to City Ethics Code

One of the key discussions centered around a motion to dismiss a complaint due to a lack of legal sufficiency, emphasizing the board’s commitment to ensuring that candidates adhere to ethical guidelines during their campaigns. Members expressed the need for candidates to be vigilant about compliance to avoid unnecessary hearings that detract from their campaigning efforts.

The board also explored the possibility of revising the city’s ethics code to address gaps in current regulations. A member raised concerns about the potential for councilors to interfere with city staff, suggesting that clearer rules could help delineate appropriate boundaries. Additionally, the discussion touched on the need for stronger provisions against discrimination, particularly in light of the challenges posed by the current political climate.

The board acknowledged that while the existing ethics code is a solid foundation, there is room for improvement. With upcoming changes in city leadership, members agreed that now is an opportune time to consider these revisions. The conversation underscored the board’s role as an independent body capable of addressing ethical concerns that may not be adequately resolved within the current city structure.

As the board continues to deliberate on these issues, the outcomes of their discussions could significantly impact how ethics are managed in Santa Fe, ensuring that the city remains committed to transparency and accountability in governance.


Universities Bypass Ethics Reviews for AI Synthetic Medical Data

In the rapidly evolving field of medical research, artificial intelligence is reshaping how scientists handle sensitive data, potentially bypassing traditional ethical safeguards. A recent report highlights how several prominent universities are opting out of standard ethics reviews for studies using AI-generated medical data, arguing that such synthetic information poses no risk to real patients. This shift could accelerate innovation but raises questions about oversight in an era where AI tools are becoming indispensable.

Representatives from four major medical research centers, including institutions in the U.S. and Europe, have informed Nature that they’ve waived typical institutional review board (IRB) processes for projects involving these fabricated datasets. The rationale is straightforward: synthetic data, created by algorithms that mimic real patient records without including any identifiable or traceable information, doesn’t involve human subjects in the conventional sense. This allows researchers to train AI models on vast amounts of simulated health records, from imaging scans to genetic profiles, without the delays and paperwork associated with ethics approvals.

The Ethical Gray Zone in AI-Driven Research

Critics, however, warn that this approach might erode the foundational principles of medical ethics, established in the wake of historical abuses like the Tuskegee syphilis study. By sidestepping IRBs, which typically scrutinize potential harms, data privacy, and informed consent, institutions could inadvertently open the door to biases embedded in the AI systems generating the data. For instance, if the algorithms are trained on skewed real-world datasets, the synthetic outputs might perpetuate disparities in healthcare outcomes for underrepresented groups.

Proponents counter that the benefits outweigh these concerns, particularly in fields like drug discovery and personalized medicine, where data scarcity has long been a bottleneck. One researcher quoted in the Nature article emphasized that synthetic data enables rapid prototyping of AI diagnostics, potentially speeding up breakthroughs in areas such as cancer detection or rare disease modeling. Universities like those affiliated with the report are already integrating these methods into their workflows, viewing them as a pragmatic response to regulatory hurdles that can stall projects for months.

Implications for Regulatory Frameworks

This trend is not isolated; it’s part of a broader push to adapt ethics guidelines to AI’s capabilities. In the U.S., the Food and Drug Administration has begun exploring how to regulate AI-generated data in clinical trials, while European bodies under the General Data Protection Regulation (GDPR) are debating whether synthetic datasets truly escape privacy rules. Industry insiders note that companies like Google and IBM are investing heavily in synthetic data generation, seeing it as a way to comply with strict data protection laws without compromising on innovation.

Yet, the lack of uniform standards could lead to inconsistencies. Some experts argue for a hybrid model where synthetic data undergoes a lighter review process, focusing on algorithmic transparency rather than patient rights. As one bioethicist told Nature, “We’re trading one set of risks for another—real patient data breaches for the unknown perils of AI hallucinations in medical simulations.”

Balancing Innovation and Accountability

Looking ahead, this development could transform how medical research is conducted globally. With AI tools becoming more sophisticated, the line between real and synthetic data blurs, promising faster iterations in machine learning models for epidemiology or vaccine development. However, without robust guidelines, there’s a risk of public backlash if errors in synthetic data lead to flawed research outcomes.

Institutions are responding by forming internal committees to self-regulate, but calls for international standards are growing. As the Nature report underscores, the key challenge is ensuring that this shortcut doesn’t undermine trust in science. For industry leaders, the message is clear: embrace AI’s potential, but proceed with caution to maintain the integrity of ethical oversight in an increasingly digital research environment.


Canadian AI Ethics Report Withdrawn Over Fabricated Citations

In a striking irony that underscores the perils of artificial intelligence in academic and policy circles, a comprehensive Canadian government report advocating for ethical AI deployment in education has been exposed for citing over 15 fabricated sources. The document, produced after an 18-month effort by Quebec’s Higher Education Council, aimed to guide educators on responsibly integrating AI tools into classrooms. Instead, it has become a cautionary tale about the very technology it sought to regulate.

Experts, including AI researchers and fact-checkers, uncovered the discrepancies when scrutinizing the report’s bibliography. Many of the cited works, purportedly from reputable journals and authors, simply do not exist—hallmarks of AI-generated hallucinations, where language models invent plausible but nonexistent references. This revelation, detailed in a recent piece by Ars Technica, highlights how even well-intentioned initiatives can falter when relying on unverified AI assistance.

The Hallucination Epidemic in Policy Making

The report’s authors, who remain unnamed in public disclosures, likely turned to AI models like ChatGPT or similar tools to expedite research and drafting. According to the Ars Technica analysis, over a dozen citations pointed to phantom studies on topics such as AI’s impact on student equity and data privacy. This isn’t an isolated incident; a study from ScienceDaily warns that AI’s “black box” nature exacerbates ethical lapses, leaving decisions untraceable and potentially harmful.

Industry insiders point out that such fabrications erode trust in governmental advisories, especially in education where AI is increasingly used for grading, content creation, and personalized learning. The Quebec council has since pulled the report for revisions, but the damage raises questions about accountability in AI-augmented workflows.

Broader Implications for AI Ethics in Academia

Delving deeper, this scandal aligns with findings from an AAUP report on artificial intelligence in higher education, which emphasizes the need for faculty oversight to mitigate risks like algorithmic bias and privacy breaches. Without stringent verification protocols, AI tools can propagate misinformation at scale, as evidenced by the Canadian case.

Moreover, a qualitative study published in Scientific Reports explores ethical issues in AI for foreign language learning, noting that unchecked use could undermine academic integrity. For policymakers and educators, the takeaway is clear: ethical guidelines must include robust human review to prevent AI from fabricating the evidence base itself.

Calls for Reform and Industry Responses

In response, tech firms are under pressure to enhance transparency in their models. A recent Ars Technica story on a Duke University study reveals that professionals who rely on AI often face reputational stigma, fearing judgment for perceived laziness or inaccuracy. This cultural shift is prompting calls for mandatory disclosure of AI involvement in official documents.

Educational bodies worldwide are now reevaluating their approaches. For instance, a report from the Education Commission of the States discusses state-level responses to AI, advocating balanced innovation with ethical safeguards. As AI permeates education, incidents like the Quebec report serve as a wake-up call, urging a hybrid model where human expertise tempers technological efficiency.

Toward a More Vigilant Future

Ultimately, this episode illustrates the double-edged sword of AI: its power to streamline complex tasks is matched by its potential for undetected errors. Industry leaders argue that investing in AI literacy training for researchers and policymakers could prevent future mishaps. With reports like one from Brussels Signal noting a surge in ethical breaches, the path forward demands not just better tools, but a fundamental rethinking of how we integrate them into critical domains like education policy.
